-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

IPFS-dropzone

Instead of uploading the dropped files to a URL, this subclass of Dropzone.js publishes them to IPFS with js-ipfs (no running local nodes needed).


-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

Parallel Chains

We want merged-mined blockchains. We want them because they make it possible to do things that aren't doable on the normal Bitcoin blockchain, where they are rightfully too expensive. There are other things besides the world money that could benefit from a "distributed ledger" -- just like people believed in 2013 -- such as issued assets and domain names (just the most obvious examples).

On the other hand we can't have -- like people believed in 2013 -- a copy of Bitcoin for every little idea with its own native token that is mined by proof-of-work and must get off the ground from being completely valueless into having some value by way of a miracle that operated only once with Bitcoin.

It's also not a good idea to have blockchains with a custom merged-mining protocol (like Namecoin and Rootstock) that requires Bitcoin miners to run extra software and be active participants and miners in that other network besides Bitcoin, because it's too cumbersome for everybody.

Luckily Ruben Somsen invented this protocol for blind merged-mining that solves the issue above. Although it doesn't solve the fact that each parallel chain still needs some form of "native" token to pay miners -- or it must use another method that doesn't use a native token, such as trusted payments outside the chain.

How does it work

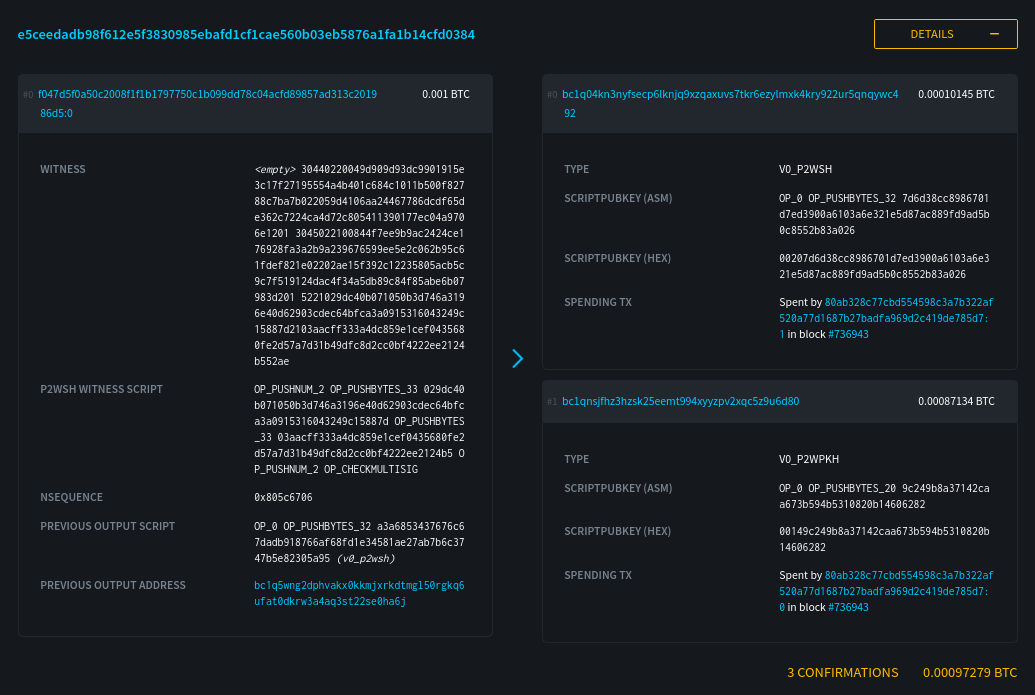

- With the `SIGHASH_NOINPUT`/`SIGHASH_ANYPREVOUT` soft-fork[^eltoo] it becomes possible to create presigned transactions that aren't related to any previous UTXO.

- Then you create a long sequence of transactions (sufficient to last for many many years), each with an `nLockTime` of 1 and each one spent by the next (you create them from the last to the first). Since their `scriptSig` (the unlocking script) will use `SIGHASH_ANYPREVOUT`, you can obtain a transaction id/hash that doesn't include the previous TXO. You can, for example, in a sequence of transactions `A0-->B` (B spends output 0 from A), include the signature for "spending A0 on B" inside the `scriptPubKey` (the locking script) of "A0".

- With the contraption described above it is possible to make a long string of transactions that everybody will know (and know how to generate), in which each transaction can only be spent by the next previously decided transaction, no matter what anyone does, and there must always be at least one block of difference between them.

- Then you combine it with `RBF`, `SIGHASH_SINGLE` and `SIGHASH_ANYONECANPAY` so parallel chain miners can add inputs and outputs to compete on fees, including their own outputs and getting change back while at the same time writing a hash of the parallel block in the change output. With that you get everything working: everybody tries to spend the same output from the long string, each with a different parallel block hash, but only the highest bidder will get the transaction included on the Bitcoin chain, and thus only one parallel block will be mined.
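The bidding step can be pictured with a toy model. This is only a sketch under a strong simplifying assumption: Bitcoin miners pick the competing spend with the highest absolute fee (real fee-rate and replacement rules are ignored). The `Bid` type and its field names are hypothetical, not part of any real protocol or library.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Bid:
    miner: str                # hypothetical label for a parallel-chain miner
    parallel_block_hash: str  # hash written into the change output
    fee_sats: int             # fee offered to Bitcoin miners

def winning_bid(bids):
    """All bids spend the same output, so at most one can confirm.

    Assumption: Bitcoin miners simply include the bid paying the highest fee.
    """
    if not bids:
        return None
    return max(bids, key=lambda b: b.fee_sats)

bids = [
    Bid("m1", "aa11", 1200),
    Bid("m2", "bb22", 3100),
    Bid("m3", "cc33", 2500),
]
winner = winning_bid(bids)
print(winner.miner, winner.parallel_block_hash)
```

Whichever bid confirms fixes the parallel block for that Bitcoin block; the losing bids become invalid because they spend an output that is already spent.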

[^eltoo]: The same thing used in Eltoo.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

litepub

A Go library that abstracts all the burdensome ActivityPub things and provides just the right amount of helpers necessary to integrate an existing website into the "fediverse" (what an odious name). Made for the gravity integration.


-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

Replacing the web with something saner

This is a simplification, but let's say that basically there are just 3 kinds of websites:

- Websites with content: text, images, videos;

- Websites that run full apps that do a ton of interactive stuff;

- Websites with some interactive content that uses JavaScript, or "mini-apps";

In a saner world we would have 3 different ways of serving and using these. 1 would be "the web" (and it was for a while, although I'm not claiming here that the past is always better and wanting to get back to the glorious old days).

1 would stay as "the web", just static sites, styled with CSS, no JavaScript whatsoever, but designers can still thrive and make them look pretty. Or it could also be something like Gemini. Maybe the two protocols could coexist.

2 would be downloadable native apps, much easier to write and maintain for developers (considering that multi-platform and cross-compilation is easy today and getting easier), faster, more polished experience for users, more powerful, integrates better with the computer.

(Remember that since no one would be striving to make the same app run both on browsers and natively no one would have any need for Electron or other inefficient bloated solutions, just pure native UI, like the Telegram app, have you seen that? It's fast.)

But 2 is mostly for apps that people use every day, something like Google Docs, email (although email is also broken technology), Netflix, Twitter, Trello and so on, and all those hundreds of niche SaaS that people pay monthly fees to use, each tailored to a different industry (although most of functions they all implement are the same everywhere). What do we do with dynamic open websites like StackOverflow, for example, where one needs to not only read, but also search and interact in multiple ways? What about that website that asks you a bunch of questions and then discovers the name of the person you're thinking about? What about that mini-app that calculates the hash of your provided content or shrinks your video, or that one that hosts your image without asking any questions?

All these and tons of others would fall into category 3, that of instantly loaded apps that you don't have to install, and yet they run in a sandbox.

The key to making category 3 worth investing time in is coming up with solid foundations, simple enough that anyone can implement them in multiple different ways, but not giving the app too many choices.

Telegram or Discord bots are super powerful platforms that can accommodate most kinds of apps. They can't beat a native app made specifically for one purpose, but they allow anyone to provide instantly usable apps with very low overhead, and since the experience is so simple, intuitive and fast, users tend to like it and sometimes even pay for their services. There could exist a protocol that brings apps like that to the open world of (I won't say "web") domains and the websockets protocol -- with multiple different clients, each making their own decisions on how to display the content sent by the servers that are powering these apps.

Another idea is that of Alan Kay: to design a nice little OS/virtual machine that can load these apps and run them. Kinda like browsers are today, but providing a better thought-out, native-like experience and framework, while still sandboxed. And I add: abstracting away details about design, content disposition and so on.

These 3 kinds of programs could coexist peacefully. 2 are just standalone programs, they can do anything and each will be its own thing. 1 and 3, however, are still similar to browsers of today in the sense that you need clients to interact with servers and show to the user what they are asking. But by simplifying everything and separating the scopes properly these clients would be easy to write, efficient, small, the environment would be open and the internet would be saved.


-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

Soft-fork activation through `bitcoind` competition

Or: how to activate Drivechain.

Imagine a world in which there are 10 different `bitcoind` flavors, as described in `bitcoind` decentralization.

Now how do you enable a soft-fork?

Flavor 1 enables it. Seeing that nothing bad happened, flavor 2 enables it. Then flavor 3 enables it.

And so on.

When miners perceive that a big enough chunk of the network supports the proposal, a miner can try to mine a block that contains the new feature.

No need for a flag day or a centralized decision making process that depends on one or two courageous leaders to enable a timer.

This probably sounds silly, and maybe is.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

LessPass remoteStorage

LessPass is a nice idea: a password manager without any state. Just remember one master password and you can generate a different one for every site using the power of hashes.

But it has a very bad issue: some sites require just numbers, others have minimum or maximum character limits, some require non-letter characters or uppercase characters, others forbid these, and so on.

The solution: to allow you to specify parameters when generating the password so you can fit a generated password on every service.

The problem with the solution: it creates state. Now you must remember what parameters you used when generating a password for each site.
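To make the trade-off concrete, here is a minimal sketch of stateless derivation with per-site parameters. It is not LessPass's actual algorithm: the function name `derive_password`, its parameters, the salt layout and the PBKDF2 settings are all assumptions chosen for illustration, and the byte-to-character mapping has a small modulo bias and does not guarantee one character from each enabled class.

```python
import hashlib
import string

def derive_password(master, site, length=16, use_lower=True, use_upper=True,
                    use_digits=True, use_symbols=True, counter=1):
    """Derive a site password from a master password and per-site parameters.

    Illustrative only: every parameter here is a piece of state the user
    must remember (or store somewhere) to regenerate the same password.
    """
    alphabet = ""
    if use_lower:
        alphabet += string.ascii_lowercase
    if use_upper:
        alphabet += string.ascii_uppercase
    if use_digits:
        alphabet += string.digits
    if use_symbols:
        alphabet += string.punctuation
    if not alphabet:
        raise ValueError("at least one character class must be enabled")
    salt = f"{site}:{counter}".encode()
    raw = hashlib.pbkdf2_hmac("sha256", master.encode(), salt, 100_000, dklen=length)
    # Map each derived byte onto the allowed alphabet.
    return "".join(alphabet[b % len(alphabet)] for b in raw)

# A site that only accepts a 6-digit PIN needs a different profile.
pin = derive_password("correct horse", "bank.example", length=6,
                      use_lower=False, use_upper=False, use_symbols=False)
```

The same master password and site give the same output only if the profile (`length`, the character classes, `counter`) is also the same, which is exactly the state described above.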

This was a way to store these settings on a remoteStorage bucket. Since it isn't confidential information in any way, that wasn't a problem, and I thought it was a good fit for remoteStorage.

Some time later I realized it would maybe be better to have a centralized repository hosting all the weird password requirements each domain forces on its users, and let LessPass use data from that central place when generating a password. Still stateful, not ideal, not very far from a centralized password manager, but still requiring less trust and fewer cryptographic assumptions.

- https://github.com/fiatjaf/lesspass-remotestorage

- https://addons.mozilla.org/firefox/addon/lesspass-remotestorage/

- https://chrome.google.com/webstore/detail/lesspass-remotestorage/aogdpopejodechblppdkpiimchbmdcmc

- https://lesspass.alhur.es/


-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

idea: Rumple

a payments network based on trust channels

This is the description of a Lightning-like network that will work only with credit or trust-based channels and exist alongside the normal Lightning Network. I imagine some people will think this is undesirable and at the same time very easy to do (such that if it doesn't exist yet it must be because no one cares), but in fact it is a very desirable thing -- which I hope I can establish below -- and at the same time a very non-trivial problem to solve, as the history of Ryan Fugger's Ripple project and later copies of it shows.

Read these first to get the full context:

- Ryan Fugger's Ripple

- Ripple and the problem of the decentralized commit

- The Lightning Network solves the problem of the decentralized commit

- Parallel Chains

Explanation about the name

Since we're copying the fundamental Ripple idea from Ryan Fugger and since the name "Ripple" is now associated with a scam coin called XRP, and since Ryan Fugger has changed the name of his old website "Ripplepay" to "Rumplepay", we will follow his lead here. If "Ripplepay" was the name of a centralized prototype to the open peer-to-peer network "Ripple", now that the centralized version is called "Rumplepay" the peer-to-peer version must be called "Rumple".

Now the idea

Basically we copy the Lightning Network, but without HTLCs or channels being opened and closed with funds committed to them on multisig Bitcoin transactions published to the blockchain. Instead we use pure trust relationships like the original Ripple concept.

And we use the blockchain commit method, but instead of spending an absurd amount of money to use the actual Bitcoin blockchain we use a parallel chain.

How exactly -- a protocol proposal attempt

It could work like this:

The parallel chain, or "Rumple Chain"

- We define a parallel chain with a genesis block;

- Following blocks must contain

  a. the ID of the previous block;

  b. a list of up to 32768 entries of arbitrary 32-byte values;

  c. an ID constituted by sha256(the previous block ID + the merkle root of all the entries)

- To be mined, each parallel block must be included in the Bitcoin chain as explained in Parallel Chains.
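The block structure above can be sketched in a few lines. The text only says "the merkle root of all the entries", so the pairing rule used here (hash each entry, then hash pairs, duplicating the last node on odd levels, as Bitcoin does) is an assumption, as are the names `merkle_root`, `block_id` and the all-zero `GENESIS_ID` placeholder.

```python
import hashlib

MAX_ENTRIES = 32768  # limit stated in the text

def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()

def merkle_root(entries):
    """Merkle root over the entries (Bitcoin-style pairing is an assumption)."""
    if not entries:
        return sha256(b"")
    level = [sha256(e) for e in entries]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])  # duplicate the last node on odd levels
        level = [sha256(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]

def block_id(prev_id: bytes, entries) -> bytes:
    """ID = sha256(previous block ID + merkle root of all the entries)."""
    if len(entries) > MAX_ENTRIES:
        raise ValueError("too many entries")
    if any(len(e) != 32 for e in entries):
        raise ValueError("every entry must be exactly 32 bytes")
    return sha256(prev_id + merkle_root(entries))

GENESIS_ID = bytes(32)  # hypothetical placeholder for the genesis block ID
entries = [sha256(b"one"), sha256(b"two"), sha256(b"three")]
first_id = block_id(GENESIS_ID, entries)
print(first_id.hex())
```

Nothing here validates what the entries mean; as the text says, the chain itself is just blocks of opaque 32-byte values.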

Now that we have a structure for a simple "blockchain" that is completely useless, just blocks over blocks of meaningless values, we proceed to the next step of assigning meaning to these values.

The off-chain payments network, or "Rumple Network"

- We create a network of nodes that can talk to each other via TCP messages (all details are the same as the Lightning Network, except where mentioned otherwise);

- These nodes can create trust channels to each other. These channels are backed by nothing except the willingness of one peer to pay the other what is owed.

- When Alice creates a trust channel with Bob (`Alice trusts Bob`), contrary to what happens in the Lightning Network, it's Alice that can immediately receive payments through that channel, and everything Alice receives will be an IOU from Bob to Alice. So Alice should never open a channel to Bob unless Alice trusts Bob. But Alice can also choose the amount of trust she has in Bob: she can, for example, open a very small channel with Bob, which means she will only lose a few satoshis if Bob decides to exit scam her. (In the original Ripple examples these channels were always depicted as friend relationships, and they can continue being that, but it's expected -- given the experience of the Lightning Network -- that the bulk of the channels will exist between users and wallet provider nodes that will act as hubs.)

- As Alice receives a payment through her channel with Bob, she becomes a creditor and Bob a debtor, i.e., the balance of the channel moves a little to her side. Now she can use these funds to make payments over that channel (or make a payment that combines funds from multiple channels using MPP).

- If at any time Alice decides to close her channel with Bob, she can send all the funds she has standing there to somewhere else (for example, another channel she has with someone else, another wallet somewhere else, a shop that is selling some good or service, or a service that will aggregate all funds from all her channels and send a transaction to the Bitcoin chain on her behalf).

- If at any time Bob leaves the network Alice is entitled by Bob's cryptographic signatures to knock on his door and demand payment, or go to a judge and ask him to force Bob to pay, or share the signatures and commitments online and hurt Bob's reputation with the rest of the network (but yes, none of these things is good enough and if Bob is a very dishonest person none of these things is likely to save Alice's funds).
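The bookkeeping of a single trust channel, as described in the list above, could look roughly like this. It is a sketch only: the class `TrustChannel`, its field names and the idea of a hard `limit_sats` cap are assumptions made for illustration, and signatures, commitments and routing are left out entirely.

```python
from dataclasses import dataclass

@dataclass
class TrustChannel:
    truster: str        # e.g. "Alice", who opened the channel
    trusted: str        # e.g. "Bob", who may end up owing the truster
    limit_sats: int     # the most the truster is willing to be owed
    owed_sats: int = 0  # current IOU from the trusted peer to the truster

    def receive(self, amount: int) -> None:
        """The truster receives a payment through the channel: the IOU grows."""
        if amount <= 0:
            raise ValueError("amount must be positive")
        if self.owed_sats + amount > self.limit_sats:
            raise ValueError("payment would exceed the trust limit")
        self.owed_sats += amount

    def send(self, amount: int) -> None:
        """The truster spends funds received earlier: the IOU shrinks."""
        if amount <= 0:
            raise ValueError("amount must be positive")
        if amount > self.owed_sats:
            raise ValueError("not enough funds standing in this channel")
        self.owed_sats -= amount

channel = TrustChannel("Alice", "Bob", limit_sats=1000)
channel.receive(300)  # Bob now owes Alice 300 satoshis
channel.send(100)     # Alice pays someone through Bob
print(channel.owed_sats)
```

At any moment `owed_sats` is what Alice stands to lose if Bob disappears, which is why the size of the channel is the amount of trust she extends.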

The payment flow

- Suppose there exists a route `Alice->Bob->Carol` and Alice wants to send a payment to Carol.

- First Alice reads an invoice she received from Carol. The invoice (which can be pretty similar or maybe even the same as BOLT11) contains a payment hash `h` and information about how to reach Carol's node, optionally an amount. Let's say it's 100 satoshis.

- Using the routing information she gathered, Alice builds an onion and sends it to Bob, and at the same time she offers Bob a "conditional IOU". That stands for a signed commitment that Alice will owe Bob 100 satoshis if in the next 50 blocks of the Rumple Chain there appears a block containing the preimage `p` such that `sha256(p) == h`.

- Bob peels the onion and discovers that he must forward that payment to Carol, so he forwards the peeled onion and offers a conditional IOU to Carol with the same `h`. Bob doesn't know Carol is the final recipient of the payment, it could potentially go on and on.

- When Carol gets the conditional IOU from Bob, she makes a list of all the nodes who have announced themselves as miners (which is not something I have mentioned before, but nodes that are acting as miners must announce themselves somehow) and are online and bidding for the next Rumple block. Each of these miners will have previously published a random 32-byte value `v` they intend to include in their next block.

- Carol sends payments through routes to all (or a big number) of these miners, but this time the conditional IOU contains two conditions (values that must appear in a block for the IOU to be valid): `p` such that `sha256(p) == h` (the same that featured in the invoice) and `v` (which must be unique and constant for each miner, something that is easily verifiable by Carol beforehand). Also, instead of these conditions being valid for the next 50 blocks they are valid only for the single next block.

- Now Carol broadcasts `p` to the mempool and hopes one of the miners to which she sent conditional payments sees it and, allured by the possibility of cashing in Carol's payment, includes `p` in the next block. If that does not happen, Carol can try again in the next block.
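The settlement rule for a conditional IOU in the flow above can be checked mechanically against the chain. The sketch below assumes the preimage `p` is itself published as one of the 32-byte block entries and that the chain is available as a mapping from height to entries; the `ConditionalIOU` type, its field names and `is_due` are hypothetical.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class ConditionalIOU:
    debtor: str
    creditor: str
    amount_sats: int
    payment_hash: bytes                 # h from the invoice
    start_height: int                   # Rumple Chain height when the IOU was offered
    valid_blocks: int                   # 50 for routed hops, 1 for payments to miners
    miner_value: Optional[bytes] = None # v, only set on payments to miners

def is_due(iou, chain):
    """True if a block inside the validity window satisfies every condition.

    `chain` maps block height -> list of 32-byte entries.
    """
    first = iou.start_height + 1
    last = iou.start_height + iou.valid_blocks
    for height in range(first, last + 1):
        entries = chain.get(height, [])
        has_preimage = any(
            hashlib.sha256(e).digest() == iou.payment_hash for e in entries
        )
        has_value = iou.miner_value is None or iou.miner_value in entries
        if has_preimage and has_value:
            return True
    return False

p = hashlib.sha256(b"secret").digest()   # 32-byte preimage known to Carol
h = hashlib.sha256(p).digest()           # payment hash in the invoice
v = hashlib.sha256(b"miner-1").digest()  # value announced by one miner
chain = {101: [hashlib.sha256(b"noise").digest()], 102: [p, v]}

alice_to_bob = ConditionalIOU("Alice", "Bob", 100, h, start_height=100, valid_blocks=50)
carol_to_miner = ConditionalIOU("Carol", "Miner", 1, h, start_height=101,
                                valid_blocks=1, miner_value=v)
print(is_due(alice_to_bob, chain), is_due(carol_to_miner, chain))
```

Once `p` lands in a block, every hop on the route can verify its own IOU independently, which is the "decentralized commit" the Rumple Chain is there to provide.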

Why bother with this at all?

1. The biggest advantage of Lightning is its openness

It has been said multiple times that if trust is involved then we don't need Lightning, we can use Coinbase, or worse, Paypal. This is very wrong. Lightning is good especially because it serves as a bridge between Coinbase, Paypal, other custodial providers and someone running their own node. All these can transact freely across the network and pay each other without worrying about who is in which provider or setup.

Rumple inherits that openness. In a Rumple Network anyone is free to open new trust channels and immediately route payments to anyone else.

Also, since Rumple payments are also based on the reveal of a preimage it can do swaps with Lightning inside a payment route from day one (by which I mean one can pay from Rumple to Lightning and vice-versa).

2. Rumple fixes Lightning's fragility

Lightning is too fragile.

It's known that Lightning is vulnerable to multiple attacks -- like the flood-and-loot attack, for example, which, although not easy to execute, is still dangerous even if it fails. Given the existence of these attacks, it's important to never open channels with random anonymous people. Some degree of trust must exist between peers.

But one does not even have to consider attacks. The creation of HTLCs is a liability that every node has to take on multiple times during its life. Every initiated, received or forwarded payment requires adding one HTLC to the commitment transaction and then removing it.

Another issue that makes trust needed between peers is the fact that channels can be closed unilaterally. Although this is a feature, it is also a bug when considering high-fee environments. Imagine you pay $2 in fees to open a channel, your peer may close that unilaterally in the next second and then you have to pay another $15 to close the channel. The opener pays (this is also a feature that can double as a bug by itself). Even if it's not you opening the channel, a peer can open a channel with you, make a payment, then close the channel, and now you're left with, say, an output of 800 satoshis, which is equal to zero if network fees are high.

So you should only open channels with people you know, who aren't going to actively try to hack you and who are not going to close channels and impose unnecessary costs on you. But even considering a fully trusted Lightning Network, even if -- to be extreme -- you only opened channels with yourself, these channels would still be fragile. If some HTLC gets stuck for any reason (peer offline or some weird small incompatibility between node software) and you're forced to close the channel because of that, there are the extra costs of sweeping these UTXO outputs plus the total costs of closing and reopening a channel that shouldn't have been closed in the first place. Even if HTLCs don't get stuck, a fee renegotiation event during a mempool spike may cause channels to force-close, become valueless or settle for a very high closing fee.

Some of these issues are mitigated by Eltoo, others by only having channels with people you trust. The others referenced above, plus the griefing attack and in general the ability of anyone to spam the network for free with payments that can be pending forever or with a lot of payments that fail repeatedly, make it very fragile.

Rumple solves most of these problems by not having to touch the blockchain at all. Fee negotiation makes no sense. Opening and closing channels is free. Flood-and-loot is a non-issue. The griefing attack can be still attempted as funds in trust channels must be reserved like on Lightning, but since there should be no theoretical limit to the number of prepared payments a channel can have, the griefing must rely on actual amounts being committed, which prevents large attacks from being performed easily.

3. Rumple fixes Lightning's unsolvable reputation issues

At the Lightning Conference 2019, Rusty Russell promised there would be pre-payments on Lightning someday, since everybody was aware of potential spam issues and pre-payments would be the way to solve that. Fast-forward to November 2020 and these pre-payments have become an apparently unsolvable problem[^thread-402]: no one knows how to implement them reliably without destroying privacy completely or introducing worse problems.

Replacing these payments with tables of reputation between peers is also an unsolved problem[^reputation-lightning], for the same reasons explained in the thread above.

4. Rumple solves the hot wallet problem

Since you don't have to use Bitcoin keys or sign transactions with a Rumple node, only your channel trust is at risk at any time.

5. Rumple ends custodianship

Since no one is storing other people's funds, a big hub or wallet provider can be used in multiple payment routes, but it cannot be immediately classified as a "custodian". At best, it will be a big debtor.

6. Rumple is fun

Opening channels with strangers is boring. Opening channels with friends and people you trust even a little makes that relationship grow stronger and the trust be reinforced. (But of course, like it happens in the Lightning Network today, if Rumple is successful the bulk of trust will be from isolated users to big reliable hubs.)

Questions or potential issues

1. So many advantages, yes, but trusted? Custodial? That's easy and stupid!

Well, an enormous part of the current Lightning Network (and also onchain Bitcoin wallets) already rests on trust, mainly trust between users and custodial wallet providers like ZEBEDEE, Alby, Wallet-of-Satoshi and others. Worse: on the current Lightning Network users not only trust, they also expose their entire transaction history to these providers[^hosted-channels].

Besides that, as detailed in point 3 of the previous section, there are many unsolvable issues on the Lightning protocol that make each sovereign node dependent on some level of trust in its peers (and the network in general dependent on trusting that no one else will spam it to death).

So, given the current state of the Lightning Network, trusting peers as Rumple requires is not a giant change -- but it is still a significant change: in Rumple you shouldn't open a large trust channel with someone just because they look trustworthy, you must personally know that person and only put in what you're willing to lose. In known brands that have reputation to lose you can probably deposit more trust, same for long-term friends, and that's all. Still it is probably good enough, given the existence of MPP payments and the fact that the purpose of Rumple is to be a payments network for day-to-day purchases and not a way to buy real estate.

2. Why would anyone run a node in this parallel chain?

I don't know. Ideally every server running a Rumple Network node will be running a Bitcoin node and a Rumple chain node. Besides using it to confirm and publish your own Rumple Network transactions it can be set to do BMM mining automatically and maybe earn some small fees comparable to running a Lightning routing node or a JoinMarket yield generator.

Also it will probably be very lightweight, as pruning is completely free and no verification-since-the-genesis-block will take place.

3. What is the maturity of the debt that exists in the Rumple Network or its legal status?

By default it is to be understood as being payable on demand for payments occurring inside the network (as credit can be used to forward or initiate payments by the creditor using that channel). But details of settlement outside the network or what happens if one of the peers disappears cannot be enforced or specified by the network.

Perhaps some standard optional settlement methods (like a Bitcoin address) can be announced and negotiated upon channel creation inside the protocol, but nothing more than that.

[^thread-402]: Read at least the first 10 messages of the thread to see how naïve proposals like the ones you and I could have thought of are brought up and then dismantled very carefully by the group of people most committed to getting Lightning to work properly.

[^reputation-lightning]: See also the footnote at Ripple and the problem of the decentralized commit.

[^hosted-channels]: Although that second part can be solved by hosted channels.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

Thafne won Soletrando 2008.

The words Thafne had to spell: "ocioso", "hermético", "glossário", "argênteo", "morfossintaxe", "infra-hepático", "hagiológio". Meanwhile Eder got: "intramuscular", "destilação", "inabitável", "subcutâneo", "homogeneidade", "predecessor", "displicência", "subconsciência", "psicroestesia" (this according to the Folha website, which is certainly missing some of Thafne's words). Seriously, "argênteo"? It is not wrong to say that Globo tried to promote the poor boy from the public school in the sertão against the little rich girl from Curitiba.

The most spectacular part is that it backfired and all of Brazil rooted for Thafne, which can be verified with a simple Google search. Here are some examples:

- "O problema de Thafne" carries comments trying to implicate the government of the State of Minas Gerais in Eder's forced victory.

- this video showing the program's mistakes and Thafne's triumphant, although partial, victory carries the brilliant description "'globo de puleira' [sic] tried to complicate the girl's life!!!!!!!!!!!!!!!!!!!!!!"

- this video, with the same content but titled "Thafne versus Luciano Huck, the confrontation of the century", also has several comments from outspoken Thafne supporters:

  - "Wow, this is stupidity, because the doctor said it twice, how did Luciano not pay attention? So Thafne gave Luciano two slaps... Next time, Luciano, pay attention to the pronunciation"

  - "he didn't mispronounce it because he's dumb, that was to manipulate the result"

  - "Gabriel o Bostador kept real quiet. What a damn jerk"

  - "Too bad she lost :("

  - "true... she's the one who won, the other one only got the title :S"

  - "The girl wiped the floor with this guy, who besides being an idiot is DUMB."

  - and many, many others.

- "Globo Erra e Luciano Huck dá Vexame", a brief article describing some of the points at which Eder was favored.

- this Orkut community, just the largest among several that were created.

The movement in support of Thafne is one of the few examples of the whole nation uniting behind a cause.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

Just malinvestment

Traditionally the Austrian Theory of Business Cycles has been explained and reworked in many ways, but the most widely accepted version (or the closest to the views of Mises or Hayek) is that banks (or the central bank) cause the general interest rate to decline by creating new money, and that prompts entrepreneurs to invest in projects of longer duration. This can be confusing because sometimes entrepreneurs embark on very short-term projects during one of these bubbles and still contribute to the overall cycle.

The solution to the "longer term" problem is to think of the entire economy going long-term, not individual entrepreneurs. So if one entrepreneur makes an investment in a thing that looks simple he may actually, knowingly or not, be inserting himself in a bigger machine that is actually involved in producing longer-term things. Incidentally this thinking also solves the biggest criticism of the Austrian Business Cycle Theory: that of the rational expectations people who say: "oh but can't the entrepreneurs know that the interest rate is artificially low and decide to not make long-term investments?" ("and if they don't know they should lose money and be replaced like in a normal economy flow blablabla?"). Well, the answer is that they are not really relying on the interest rate, they are only looking for profit opportunities, and this is the key to another confusion that has always followed my thinking about this topic.

If a guy opens a bar in an area of a town where many new buildings are being built during a "housing bubble" he may not know, but he is inserting himself right into the eye of that business cycle. He expects all these building projects to continue, and all the people involved in that to be getting paid more and be able to spend more at his bar and so on. That is a bet that may or may not end up paying.

Now what does that bar investment have to do with the interest rate? Nothing. It is just a guy who saw a business opportunity in a place where hungry people with money had no bar to buy things in, so he opened a bar. Additionally the guy has made some calculations about all the ending, starting and future building projects in the area, and then about the people that would live or work in that area afterwards (after all, the buildings were being built with the expectation of being used) and so on; there are no interest rate calculations involved. And yet that may be a malinvestment because some building projects will end up being canceled and the expected usage of the finished ones will turn out to be smaller than predicted.

This bubble may have been caused by a decline in interest rates that prompted some people to start buying houses that they wouldn't otherwise, but this is just a small detail. The bubble can only be kept going by a constant influx of new money into the economy, but the focus on the interest rate is wrong. If new money is printed and used by the government to buy ships then there will be a boom and a bubble in the ship market, and that involves all the parts of production process of ships and also bars that will be opened near areas of the town where ships are built and new people are being hired with higher salaries to do things that will eventually contribute to the production of ships that will then be sold to the government.

It's not interest rates or the length of the production process that matters, it's just printed money and malinvestment.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

Profits, not wages, as the originary factor

Adam Smith says that there were first workers earning wages, but then came the capitalists and extracted profits from those wages.

But in fact if that primitive state ever existed there were no workers, but entrepreneurs earning profit. And since they were not capitalists ("capitalist" defined as someone that buys with the intent of selling) they were earning an infinite rate of profit.

When capitalists came they were responsible for introducing costs (investment), thus reducing the rate of profit -- and the more capitalistic the society, the smaller the rate of profit.

-- George Reisman in https://www.bobmurphyshow.com/139

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

nostr - Notes and Other Stuff Transmitted by Relays

The simplest open protocol that is able to create a censorship-resistant global "social" network once and for all.

It doesn't rely on any trusted central server, hence it is resilient; it is based on cryptographic keys and signatures, so it is tamperproof; it does not rely on P2P techniques, therefore it works.

Very short summary of how it works, if you don't plan to read anything else:

Everybody runs a client. It can be a native client, a web client, etc. To publish something, you write a post, sign it with your key and send it to multiple relays (servers hosted by someone else, or yourself). To get updates from other people, you ask multiple relays if they know anything about these other people. Anyone can run a relay. A relay is very simple and dumb. It does nothing besides accepting posts from some people and forwarding to others. Relays don't have to be trusted. Signatures are verified on the client side.

This is needed because other solutions are broken:

The problem with Twitter

- Twitter has ads;

- Twitter uses bizarre techniques to keep you addicted;

- Twitter doesn't show an actual historical feed from people you follow;

- Twitter bans people;

- Twitter shadowbans people;

- Twitter has a lot of spam.

The problem with Mastodon and similar programs

- User identities are attached to domain names controlled by third-parties;

- Server owners can ban you, just like Twitter; Server owners can also block other servers;

- Migration between servers is an afterthought and can only be accomplished if servers cooperate. It doesn't work in an adversarial environment (all followers are lost);

- There are no clear incentives to run servers, therefore they tend to be run by enthusiasts and people who want to have their name attached to a cool domain. Then, users are subject to the despotism of a single person, which is often worse than that of a big company like Twitter, and they can't migrate out;

- Since servers tend to be run amateurishly, they are often abandoned after a while — which is effectively the same as banning everybody;

- It doesn't make sense to have a ton of servers if updates from every server will have to be painfully pushed (and saved!) to a ton of other servers. This point is exacerbated by the fact that servers tend to exist in huge numbers, therefore more data has to be passed to more places more often;

- For the specific example of video sharing, ActivityPub enthusiasts realized it would be completely impossible to transmit video from server to server the way text notes are, so they decided to keep the video hosted only from the single instance where it was posted to, which is similar to the Nostr approach.

The problem with SSB (Secure Scuttlebutt)

- It doesn't have many problems. I think it's great. In fact, I was going to use it as a basis for this, but

- its protocol is too complicated because it wasn't designed to be an open protocol at all. It was just written in JavaScript, probably quickly, to solve a specific problem and grew from that, therefore it has weird and unnecessary quirks like signing a JSON string which must strictly follow the rules of ECMA-262 6th Edition;

- It insists on having a chain of updates from a single user, which feels unnecessary to me and something that adds bloat and rigidity to the thing — each server/user needs to store all the chain of posts to be sure the new one is valid. Why? (Maybe they have a good reason);

- It is not as simple as Nostr, as it was primarily made for P2P syncing, with "pubs" being an afterthought;

- Still, it may be worth considering using SSB instead of this custom protocol and just adapting it to the client-relay server model, because reusing a standard is always better than trying to get people onto a new one.

The problem with other solutions that require everybody to run their own server

- They require everybody to run their own server;

- Sometimes people can still be censored in these because domain names can be censored.

How does Nostr work?

- There are two components: clients and relays. Each user runs a client. Anyone can run a relay.

- Every user is identified by a public key. Every post is signed. Every client validates these signatures.

- Clients fetch data from relays of their choice and publish data to other relays of their choice. A relay doesn't talk to another relay, only directly to users.

- For example, to "follow" someone a user just instructs their client to query the relays it knows for posts from that public key.

- On startup, a client queries data from all relays it knows for all users it follows (for example, all updates from the last day), then displays that data to the user chronologically.

- A "post" can contain any kind of structured data, but the most used ones are going to find their way into the standard so all clients and relays can handle them seamlessly.
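The client/relay split described above can be sketched as a toy in-memory model. This is only an illustration under stated assumptions: the `Relay`, `make_event` and `fetch_feed` names are made up here, the id serialization approximates the layout described in NIP-01, and signatures are left out entirely (a real client would also verify a Schnorr signature over the id).

```python
import hashlib
import json
from dataclasses import dataclass, field


def event_id(pubkey: str, created_at: int, kind: int, tags: list, content: str) -> str:
    """SHA-256 over a compact JSON array (an approximation of the NIP-01 layout)."""
    payload = json.dumps([0, pubkey, created_at, kind, tags, content],
                         separators=(",", ":"), ensure_ascii=False)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def make_event(pubkey: str, created_at: int, content: str) -> dict:
    """Build an unsigned text note (hypothetical helper; signing is omitted)."""
    return {"id": event_id(pubkey, created_at, 1, [], content),
            "pubkey": pubkey, "created_at": created_at,
            "kind": 1, "tags": [], "content": content}


@dataclass
class Relay:
    """A dumb store-and-forward server: accepts events and answers queries."""
    banned: set = field(default_factory=set)
    events: dict = field(default_factory=dict)

    def publish(self, event: dict) -> bool:
        if event["pubkey"] in self.banned:
            return False  # this relay refuses the author; others may not
        self.events[event["id"]] = event
        return True

    def query(self, authors: set) -> list:
        return [e for e in self.events.values() if e["pubkey"] in authors]


def fetch_feed(relays: list, following: set) -> list:
    """Ask every known relay, drop tampered events, dedupe, sort by time."""
    seen = {}
    for relay in relays:
        for e in relay.query(following):
            expected = event_id(e["pubkey"], e["created_at"], e["kind"],
                                e["tags"], e["content"])
            if expected == e["id"]:  # relays are not trusted, so re-check
                seen[e["id"]] = e
    return sorted(seen.values(), key=lambda e: e["created_at"])
```

Publishing the same event to several `Relay` objects and calling `fetch_feed` over all of them returns each post once, even if one relay has banned the author or serves a modified copy.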

How does it solve the problems the networks above can't?

- Users getting banned and servers being closed

- A relay can block a user from publishing anything there, but that has no effect on them as they can still publish to other relays. Since users are identified by a public key, they don't lose their identities and their follower base when they get banned.

- Instead of requiring users to manually type new relay addresses (although this should also be supported), whenever someone you're following posts a server recommendation, the client should automatically add that to the list of relays it will query.

- If someone is using a relay to publish their data but wants to migrate to another one, they can publish a server recommendation to that previous relay and go;

- If someone gets banned from many relays such that they can't get their server recommendations broadcasted, they may still let some close friends know through other means with which relay they are publishing now. Then, these close friends can publish server recommendations to that new server, and slowly, the old follower base of the banned user will begin finding their posts again from the new relay.

- All of the above is valid too for when a relay ceases its operations.

- Censorship-resistance

- Each user can publish their updates to any number of relays.

- A relay can charge a fee (the negotiation of that fee is outside of the protocol for now) from users to publish there, which ensures censorship-resistance (there will always be some Russian server willing to take your money in exchange for serving your posts).

- Spam

- If spam is a concern for a relay, it can require payment for publication or some other form of authentication, such as an email address or phone, and associate these internally with a pubkey that then gets to publish to that relay -- or other anti-spam techniques, like hashcash or captchas. If a relay is being used as a spam vector, it can easily be unlisted by clients, which can continue to fetch updates from other relays.

- Data storage

- For the network to stay healthy, there is no need for hundreds of active relays. In fact, it can work just fine with just a handful, given the fact that new relays can be created and spread through the network easily in case the existing relays start misbehaving. Therefore, the amount of data storage required, in general, is relatively less than Mastodon or similar software.

- Or considering a different outcome: one in which there exist hundreds of niche relays run by amateurs, each relaying updates from a small group of users. The architecture scales just as well: data is sent from users to a single server, and from that server directly to the users who will consume that. It doesn't have to be stored by anyone else. In this situation, it is not a big burden for any single server to process updates from others, and having amateur servers is not a problem.

- Video and other heavy content

- It's easy for a relay to reject large content, or to charge for accepting and hosting large content. When information and incentives are clear, it's easy for the market forces to solve the problem.

- Techniques to trick the user

- Each client can decide how to best show posts to users, so there is always the option of just consuming what you want in the manner you want — from using an AI to decide the order of the updates you'll see to just reading them in chronological order.
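As an illustration of the hashcash idea mentioned under "Spam" above, here is a minimal proof-of-work sketch. The string format being hashed and the function names are assumptions made for this example, not something the protocol text above defines: the publisher searches for a nonce whose hash has enough leading zero bits, and the relay checks it with a single hash.

```python
import hashlib
from itertools import count


def leading_zero_bits(digest: bytes) -> int:
    """Count how many zero bits the digest starts with."""
    bits = 0
    for byte in digest:
        if byte == 0:
            bits += 8
            continue
        bits += 8 - byte.bit_length()
        break
    return bits


def work(pubkey: str, content: str, nonce: int) -> int:
    """Difficulty achieved by a given nonce (hypothetical 'pubkey:content:nonce' format)."""
    data = f"{pubkey}:{content}:{nonce}".encode("utf-8")
    return leading_zero_bits(hashlib.sha256(data).digest())


def mine(pubkey: str, content: str, difficulty: int) -> int:
    """Publisher side: brute-force a nonce; cost doubles with each extra bit."""
    for nonce in count():
        if work(pubkey, content, nonce) >= difficulty:
            return nonce


def relay_accepts(pubkey: str, content: str, nonce: int, difficulty: int) -> bool:
    """Relay side: verification costs a single hash."""
    return work(pubkey, content, nonce) >= difficulty
```

The asymmetry is the point: a relay could raise `difficulty` when it is being flooded, which makes bulk posting expensive while an occasional post stays cheap.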

FAQ

- This is very simple. Why hasn't anyone done it before?

I don't know, but I imagine it has to do with the fact that people making social networks are either companies wanting to make money or P2P activists who want to make a thing completely without servers. They both fail to see the specific mix of both worlds that Nostr uses.

- How do I find people to follow?

First, you must know them and get their public key somehow, either by asking or by seeing it referenced somewhere. Once you're inside a Nostr social network you'll be able to see them interacting with other people and then you can also start following and interacting with these others.

- How do I find relays? What happens if I'm not connected to the same relays someone else is?

You won't be able to communicate with that person. But there are hints on events that can be used so that your client software (or you, manually) knows how to connect to the other person's relay and interact with them. There are other ideas on how to solve this too in the future but we can't ever promise perfect reachability, no protocol can.

- Can I know how many people are following me?

No, but you can get some estimates if relays cooperate in an extra-protocol way.

- What incentive is there for people to run relays?

The question is misleading. It assumes that relays are free dumb pipes that exist such that people can move data around through them. In this case yes, the incentives would not exist. This in fact could be said of DHT nodes in all other p2p network stacks: what incentive is there for people to run DHT nodes?

- Nostr enables you to move between server relays or use multiple relays but if these relays are just on AWS or Azure what’s the difference?

There are literally thousands of VPS providers scattered all around the globe today, there is not only AWS or Azure. AWS or Azure are exactly the providers used by single centralized service providers that need a lot of scale, and even then not just these two. For smaller relay servers any VPS will do the job very well.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

Alternatives to Drivechain

If Drivechain doesn't get soft-forked into Bitcoin, the alternatives people are left with are:

- Altcoins. People who want super-powers (privacy, smart contracts, cheap transactions) move their stake to shitcoins. This doesn't make much sense because even if altcoins had the necessary technology they wouldn't have the base money with which to use the technology, but still this remains an option.

- Fully-custodial and trusted systems. Instead of moving their money to a sidechain secured by Drivechain people can use a centralized service with much less safety and subject to all kinds of regulations, hacks and government takedowns.

- Federated sidechains, which are the same as custodial systems, but with distributed trust and maybe less, maybe more government involvement.

- Less secure sidechain-like constructions, like sidechains secured by a multisig of a fixed set of entities with names, or BTC tokens in other blockchains guaranteed by a collateral denominated in shitcoins which tends to zero.

- Corporate takeover. Big banks and giant corporations start buying all the coins and exposing part of them through their closed systems to normal people. Instead of an open network and free market as everybody expected, all meaningful activity now happens inside these legacy evil entities that are already sold to governments from the start.

Every time someone goes against Drivechain without proposing something better, they're condemning bitcoiners to one or many of the above forever.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

On Bitcoin Bounties

The HRF awarded two bounties yesterday. The episode exposes some of the problems of the bounties and grants culture that exists on Bitcoin.

First, when the bounties were announced, almost a year ago, I felt they were very hard to achieve (and also very useless, but let's set that aside).

The first, "a wallet that integrates bolt12 so it can receive tips noncustodially", could be understood as a bounty for mobile wallets only, in which case the implementation would be hacky, hard and take a lot of time; or it could be understood as being valid for any wallet, in which case it was already implemented in CLN (at the time called "c-lightning"), so the bounty didn't make sense.

The second, a wallet with a noncustodial US dollar balance, is arguably impossible, since there is no way to achieve it without trusted oracles, therefore it is probably invalid. If one assumed that trust was fine, then it was already implemented by StandardSats at the time. It felt like it was designed to use some weird construct like DLCs -- and Chris Stewart did publish a guide on how to implement a wallet that would be eligible for the bounty using DLCs, therefore the path seemed to be set there, but this would be a very hard and time-intensive thing.

The third, a noncustodial wallet with optional custodial ecash functionality, seemed to be targeting Fedimint directly, which already existed at the time and was about to release exactly these features.

Time passed and apparently no one tried to claim any of these bounties. My explanation is that, at least for 1 and 2, it was so hard to get it done that no one would risk trying and getting rejected. It is better for a programmer to work on something that interests them directly if they're working for free.

For 3 I believe no one even tried anything because the bounty was already set to be given to Fedimint.

Fast-forward to today and bounties 1 and 3 were awarded to two projects that were created out of the sole interest of their developers, with no attempt to actually claim these bounties -- and indeed, the two winners strictly do not qualify according to the descriptions from last year.

What if someone had been working for months on trying to actually fulfill the criteria? That person would be in very bad shape now, having thrown away all the work. Considering this, it was a very good choice for everyone involved not to try to claim any of the bounties.

The winners have merit only in having pursued their own interests and in creating useful programs as the result. I'm sure the bounties do not feel to them like a deserved payment for the specific work they did, but more like a token of recognition for having worked on Bitcoin-related stuff at all, and an incentive to continue to work.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

Splitpages

The simplest possible service: it split PDF pages in half.

Created specifically to solve the problem of those scanned books that come with two pages side-by-side as if they were a single page and are much harder to read on Kindle because of that.

It required me to learn about Heroku Buildpacks though, and fork or contribute to a Heroku Buildpack that embedded a mupdf binary.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

Gerador de tabelas de todos contra todos (round-robin table generator)

I don't remember exactly when I did this, but I think a friend wanted to do software that would give him money over the internet without having to work. He didn't know how to program. He mentioned this idea he had which was some kind of football championship manager solution, but I heard it like this: a website that generated a round-robin championship table for people to print.

It is actually not obvious to anyone how to do it, it requires an algorithm that people will not reach casually while thinking, and there was no website doing it in Portuguese at the time, so I made this and it worked and it had a couple hundred daily visitors, and it even generated money from Google Ads (not much)!
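For reference, one standard way to build such a table is the "circle method": keep one team fixed and rotate all the others by one position each round. The sketch below is not necessarily the code the site used; the `round_robin` name is an assumption, and an odd number of teams is handled by adding a placeholder that means "rests this round".

```python
def round_robin(teams: list) -> list:
    """Return a list of rounds, each a list of (home, away) pairs."""
    players = list(teams)
    if len(players) % 2:
        players.append(None)  # placeholder: whoever meets it rests this round
    n = len(players)
    rounds = []
    for _ in range(n - 1):
        # pair the first with the last, the second with the second-to-last, ...
        pairs = [(players[i], players[n - 1 - i]) for i in range(n // 2)]
        rounds.append([p for p in pairs if None not in p])
        # keep the first team fixed and rotate everyone else by one position
        players = [players[0], players[-1]] + players[1:-1]
    return rounds


if __name__ == "__main__":
    for number, games in enumerate(round_robin(["A", "B", "C", "D"]), start=1):
        print(number, games)
```

With an even number of teams every team plays in every round; with an odd number each team rests exactly once.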

First it was a Python web app running on Heroku, then Heroku started charging or limiting the amount of free time I could have on their platform, so I migrated it to a static site that ran everything on the client. Since I didn't want to waste my Python code that actually generated the tables I used Brython to run Python on JavaScript, which was an interesting experience.

In hindsight I could have just taken one of the many round-robin JavaScript libraries that exist on NPM, so eventually, after a couple more years, I did that.

I also removed Google Ads when Google decided it had so many requirements to send me the money that it was impossible, and then the money started to vanish.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

How being "flexible" can bloat a protocol

(A somewhat absurd example, but you'll get the idea)

Imagine some client decides to add support for a variant of nip05 that checks for values at /.well-known/nostr.yaml besides /.well-known/nostr.json. "Why not?", they think, "I like YAML more than JSON, this can't hurt anyone".

Then some user makes a nip05 file in YAML and it will work on that client, they will think their file is good since it works on that client. When the user sees that other clients are not recognizing their YAML file, they will complain to the other client developers: "Hey, your client is broken, it is not supporting my YAML file!".

The developer of the other client, astonished, replies: "Oh, I am sorry, I didn't know that was part of the nip05 spec!"

The user, thinking they are doing a good thing, replies: "I don't know, but it works on this other client here, see?"

Now the other client adds support. The cycle repeats now with more users making YAML files, more and more clients adding YAML support, for fear of providing a client that is incomplete or provides bad user experience.

The end result of this is that now nip05 unofficially requires support for both JSON and YAML files. Every client must now check for /.well-known/nostr.yaml too besides just /.well-known/nostr.json, because a user's key could be in either of these. A lot of work was wasted for nothing. And now, going forward, any new client will require twice as much work as before to implement.
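To make the cost concrete, here is a small sketch of the lookups a client would have to perform. The `well_known_urls` helper is hypothetical, and the `nostr.yaml` path is only the absurd variant from the story above; the `?name=` query follows what NIP-05 describes for the JSON file.

```python
from urllib.parse import quote


def well_known_urls(identifier: str, formats=("json",)) -> list:
    """URLs a client must try to resolve 'name@domain' (hypothetical helper)."""
    name, _, domain = identifier.partition("@")
    return [f"https://{domain}/.well-known/nostr.{fmt}?name={quote(name)}"
            for fmt in formats]


# A client following the spec makes one request per identifier...
strict = well_known_urls("bob@example.com")
# ...while a client in the "flexible" world has to make one per tolerated format.
flexible = well_known_urls("bob@example.com", formats=("json", "yaml"))
```

Every extra tolerated format multiplies the requests, the parsers and the failure modes that each new client has to carry.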

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

tempreites

My first library to get stars on GitHub was a very stupid templating library that used just HTML and HTML attributes ("DSL-free"). I was inspired by http://microjs.com/ at the time and ended up not using the library. Probably no one ever did.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

OP_CHECKTEMPLATEVERIFY and the "covenants" drama

There are many ideas for "covenants" (I don't think this concept helps in the specific case of examining proposals, but fine). Some people think "we" (it's not obvious who is included in this group) should somehow examine them and come up with the perfect synthesis.

It is not clear what form this magic gathering of ideas will take and who (or which ideas) will be allowed to speak, but suppose it happens and there is intense research and conversations and people (ideas) really enjoy themselves in the process.

What are we left with at the end? Someone has to actually commit the time, put in the effort and come up with a concrete proposal to be implemented on Bitcoin, and whatever the result is it will have trade-offs. Some great features will not make it into this proposal, others will make it in a worsened form, and some will be contemplated very nicely; there will also be some extra costs related to maintenance or code complexity that will have to be taken on. Someone, a concrete person, will decide upon these things using their own personal preferences and biases, and many people will not be pleased with their choices.

That has already happened. Jeremy Rubin has already conjured all the covenant ideas in a magic gathering that lasted more than 3 years and came up with a synthesis that has the best trade-offs he could find. CTV is the result of that operation.

The fate of CTV in the popular opinion illustrated by the thoughtless responses it has evoked such as "can we do better?" and "we need more review and research and more consideration of other ideas for covenants" is a preview of what would probably happen if these suggestions were followed again and someone spent the next 3 years again considering ideas, talking to other researchers and came up with a new synthesis. Again, that person would be faced with "can we do better?" responses from people that were not happy enough with the choices.

And only if some famous Bitcoin Core or retired Bitcoin Core developers were personally attracted by this synthesis would they take the time to review it and give it their blessing.

To summarize the argument of this article, the actual issue in the current CTV drama is that there exist hidden criteria for proposals to be accepted by the general community into Bitcoin, and no one has these criteria clear in their minds. It is neither as simple and straightforward as "do research" nor as humanly impossible as "get consensus"; it has a much bigger social element to it, but I also do not know the exact form of these hidden criteria.

This is said not to blame anyone -- except the ignorant people who are not aware of the existence of these things and just keep repeating completely false and unhelpful advice for Jeremy Rubin and are not self-conscious enough to ever realize what they're doing.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

idea: Custom multi-use database app

Since 2015 I have had this idea of making one app that could be repurposed into a full-fledged app for all kinds of uses, like powering small businesses' accounts and so on. As hackable and open as an Excel file, but more efficient, without the hassle of making tables, and using ids and indexes under the hood so different kinds of things can be related together in various ways.

It is not a concrete thing, just a generic idea that has taken multiple forms along the years and may take others in the future. I've made quite a few attempts at implementing it, but never finished any.

I used to refer to it as a "multidimensional spreadsheet".

Can also be related to DabbleDB.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

idea: a website for feedback exchange

I thought a community of people sharing feedback on mutual interests would be a good thing, so as always I broadened and generalized the idea, mixed it with my old criticue-inspired idea-feedback project and turned it into a "token". You give feedback on other people's things, they give you a "point". You can then use that point to request feedback from others.

This could be made as an Etleneum contract so these points were exchanged for satoshis using the shitswap contract (yet to be written).

In this case all the Bitcoin/Lightning side of the website must be hidden until the user has properly gone through the usage flow and earned points.

If it was to be built on Etleneum then it needs to emphasize the login/password login method instead of the lnurl-auth method. And then maybe it could be used to push lnurl-auth to normal people, but with a different name.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

bolt12 problems

- clients can't programmatically build new offers by changing a path or query params (services like zbd.gg or lnurl-pay.me won't work)

- impossible to use in a load-balanced custodian way -- since offers would have to be pregenerated and tied to a specific lightning node.

- the existence of fiat currency fields makes it so wallets have to fetch exchange rates from somewhere on the internet (or offer a bad user experience), using HTTP which hurts user privacy.

- the vendor field is misleading, can be phished very easily, not as safe as a domain name.

- onion messages are an improvement over fake HTLC-based payments as a way of transmitting data, for sure. but we must decide if they are (i) suitable for transmitting all kinds of data over the internet, a replacement for tor; or (ii) not something that will scale well or on which we can count on for the future. if there was proper incentivization for data transmission it could end up being (i), the holy grail of p2p communication over the internet, but that is a very hard problem to solve and not guaranteed to yield the desired scalability results. since not even hints of attempting to solve that are being made, it's safer to conclude it is (ii).
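For contrast with the first bullet above, this is roughly what "building a payment target by changing a path or query params" looks like on the lnurl-pay side. It is a sketch under assumptions: the helper names are made up, the `/.well-known/lnurlp/<user>` path and the `amount` parameter in millisatoshis follow my reading of the LNURL documents (LUD-16 and LUD-06), and no network request is made.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def lnurlp_endpoint(address: str) -> str:
    """Derive the pay endpoint from a 'user@domain' address (path per LUD-16)."""
    user, _, domain = address.partition("@")
    return f"https://{domain}/.well-known/lnurlp/{user}"


def callback_with_amount(callback: str, amount_msat: int) -> str:
    """Append the amount to a service-provided callback URL, keeping its own params."""
    parts = urlsplit(callback)
    query = parse_qsl(parts.query, keep_blank_values=True)
    query.append(("amount", str(amount_msat)))
    return urlunsplit(parts._replace(query=urlencode(query)))


endpoint = lnurlp_endpoint("alice@example.com")
invoice_url = callback_with_amount("https://example.com/cb?id=7", 21000)
```

Because the target is just a URL, any client or third-party service can derive it from a name without talking to a specific Lightning node first, which is what the bullet says a pregenerated offer cannot do.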

bolt12 limitations

- not flexible enough. there are some interesting fields defined in the spec, but who gets to add more fields later if necessary? very unclear.

- services can't return any actionable data to the users who paid for something. it's unclear how business can be conducted without an extra communication channel.

bolt12 illusions

- recurring payments is not really solved, it is just a spec that defines intervals. the actual implementation must still be done by each wallet and service. the recurring payment cannot be enforced, the wallet must still initiate the payment. even if the wallet is evil and is willing to initiate a payment without the user knowing it still needs to have funds, channels, be online, connected etc., so it's not as if the services could rely on the payments being delivered in time.

- people seem to think it will enable pushing payments to mobile wallets, which it does not and cannot.

- there is a confusion of contexts: it looks like offers are superior to lnurl-pay, for example, because they don't require domain names. domain names, though, are common and well-established among internet services and stores, because these services have websites, so this is not really an issue. it is an issue, though, for people that want to receive payments in their homes. for these, indeed, bolt12 offers a superior solution -- but at the same time bolt12 seems to be selling itself as a tool for merchants and service providers when it includes and highlights features such as recurring payments and refunds.

- the privacy gains for the receiver that are promoted as being part of bolt12 in fact come from a separate proposal, blinded paths, which should work for all normal lightning payments and indeed are a very nice solution. they are (or at least were, and should be) independent from the bolt12 proposal. a separate proposal, which can be (and already is being) used right now, also improves privacy for the receiver very much anyway, it's called trampoline routing.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

Thoughts on Nostr key management

In "Why I don't like NIP-26 as a solution for key management" I talked about multiple techniques that could be used to tackle the problem of key management on Nostr.

Here are some ideas that work in tandem:

- NIP-41 (stateless key invalidation)

- NIP-46 (Nostr Connect)

- NIP-07 (signer browser extension)

- Connected hardware signing devices

- other things like musig or frostr keys used in conjunction with a semi-trusted server; or other kinds of trusted software, like a dedicated signer on a mobile device that can sign on behalf of other apps; or even a separate protocol that some people decide to use as the source of truth for their keys, and some clients might decide to use that automatically

- there are probably many other ideas

Some premises I have in my mind (that may be flawed) that base my thoughts on these matters (and cause me to not worry too much) are that

- For the vast majority of people, Nostr keys aren't a target as valuable as Bitcoin keys, so they will probably be ok even without any solution;

- Even when you lose everything, identity can be recovered -- slowly and painfully, but still --, unlike money;

- Nostr is not trying to replace all other forms of online communication (even though when I think about this I can't imagine one thing that wouldn't be nice to replace with Nostr) or of offline communication, so there will always be ways.

- For the vast majority of people, losing keys and starting fresh isn't a big deal. It is a big deal when you have followers and an online persona and your life depends on that, but how many people are like that? In the real world I see people deleting social media accounts all the time and creating new ones, people losing their phone numbers or other accounts associated with their phone numbers, and not caring very much -- they just find a way to notify friends and family and move on.

We can probably come up with some specs to ease the "manual" recovery process, like social attestation and explicit signaling -- i.e., Alice, Bob and Carol are friends; Alice loses her key; Bob sends a new Nostr event kind to the network saying what Alice's new key is; depending on how much Carol trusts Bob, she can automatically start following the new key and remove the old one -- or something like that.

One nice thing about some of these proposals, like NIP-41, or the social-recovery method, or the external-source-of-truth-method, is that they don't have to be implemented in any client, they can live in standalone single-purpose microapps that users open or visit only every now and then, and these can then automatically update their follow lists with the latest news from keys that have changed according to multiple methods.
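The social-recovery flow with Alice, Bob and Carol could be sketched like this. Everything here is hypothetical: no such event kind is specified, the trust weights and the threshold are made-up knobs, and competing attestations for the same old key are not resolved.

```python
def resolve_key_rotations(following: set, trust: dict, attestations: list,
                          threshold: float = 1.0) -> set:
    """Return an updated follow list.

    attestations: (attester, old_key, new_key) tuples, as they might be
    extracted from a hypothetical "key changed" event kind.
    trust: how much the user trusts each attester (made-up weights).
    """
    supporters = {}
    for attester, old_key, new_key in attestations:
        if old_key in following:
            # a set, so the same friend attesting twice only counts once
            supporters.setdefault((old_key, new_key), set()).add(attester)

    updated = set(following)
    for (old_key, new_key), who in supporters.items():
        weight = sum(trust.get(attester, 0.0) for attester in who)
        if weight >= threshold:
            updated.discard(old_key)
            updated.add(new_key)
    return updated


# Carol follows Alice's old key and Bob; she fully trusts Bob's attestations.
carol = resolve_key_rotations(
    following={"alice_old", "bob"},
    trust={"bob": 1.0},
    attestations=[("bob", "alice_old", "alice_new")],
)
```

A function like this could live in one of those standalone microapps: it only needs the current follow list and the attestations found on relays, and it outputs the follow list to publish next.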

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

A response to Achim Warner's "Drivechain brings politics to miners" article

I mean this article: https://achimwarner.medium.com/thoughts-on-drivechain-i-miners-can-do-things-about-which-we-will-argue-whether-it-is-actually-a5c3c022dbd2

There are basically two claims here:

1. Some corporate interests might want to secure sidechains for themselves and thus they will bribe miners to have these activated

First, it's hard to imagine why they would want such a thing. Are they going to make a proprietary KYC chain only for their users? They could do that in a corporate way, or with a federation, like Facebook tried to do, and that would provide more value to their users than a cumbersome pseudo-decentralized system in which they don't even have powers to issue currency. Also, if Facebook couldn't get away with their federated shitcoin because the government was mad, what says the government won't be mad with a sidechain? And finally, why would Facebook want to give custody of their proprietary closed-garden Bitcoin-backed ecosystem coins to a random, open and always-changing set of miners?

But suppose they do succeed in making their sidechain, and it is so popular that it pays miners fees and people love it. Well, then why not? Let them have it. It's not going to hurt anyone more than a proprietary shitcoin would anyway. If Facebook really wants a closed ecosystem backed by Bitcoin that probably means we are winning big.

2. Miners will be required to vote on the validity of debatable things

He cites the examples of a PoS sidechain, an assassination market, a sidechain full of Nazis, a sidechain deemed illegal by the US government and so on.

There is a simple solution to all of this: just kill these sidechains. Either miners can take the money from these to themselves, or they can just refuse to engage and freeze the coins there forever, or they can even give the coins to governments, if they want. It is an entirely good thing that evil sidechains or sidechains that use horrible technology that doesn't even let us know who owns each coin get annihilated. And it was the responsibility of people who put money in there to evaluate beforehand and know that PoS is not deterministic, for example.

About governments censoring and wanting to steal money, or criminals using sidechains, I think the argument is very weak because these same things can happen today and may even be happening already: i.e., governments ordering mining pools to not mine such and such transactions from such and such people, or forcing them to reorg to steal money from criminals and whatnot. All this is expected to happen in normal Bitcoin. But both in normal Bitcoin and in Drivechain, decentralization fixes that problem by making it so governments cannot catch all the miners required to control the chain like that -- and in fact fixing that problem is the only reason we need decentralization.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

Things a coalition of evil miners can do on Bitcoin

If a miner coalition has 75% of the hashrate for 6 months they can steal coins from a Drivechain without any risk. That's what the Drivechain haters say.

What other evil things can a coalition of 75% hashrate do in 6 months?

- steal money from all open Lightning nodes on the network by opening channels to them, mining 3 blocks, spending the funds out on Lightning instantly, then rolling back the 3 blocks and canceling the channel.

- the above would eventually -- but not instantly and only after many steals have happened (even if they're not all perfectly coordinated and instant) -- cause Lightning to shrink in size a lot and become more of a closed friends network than truly open.

- only mine empty blocks and cause the mempool to be enormously clogged.

- refuse to use the mempool, force a proprietary API for transaction propagation with zero transparency and force other miners to use that same infrastructure too and extract a fee from them.

- censor anything and force other miners to censor too, at the threat of orphaning their blocks.

- easily cause so much confusion in the mining process that Bitcoin is deemed unusable and the price falls drastically; miners can then buy at a low price.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

Sun and Earth

The Earth does not revolve around the Sun. Everything depends on the point of reference, and there is no absolute point of reference. It is only better to say that the Earth revolves around the Sun because there are other planets making analogous movements, and so it becomes easier for everyone to understand those movements by taking the Sun as the point of reference.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

Using Spacechains and Fedimint to solve scaling

What if, instead of trying to create complicated "layer 2" setups involving novel cryptographic techniques, we just did the following:

- we take that Fedimint source code and remove the "mint" stuff, and just use their federation stuff to secure coins with multisig;

- then we make a spacechain;

- and we make the federations issue multisig-btc tokens on it;

- and then we put some uniswap-like thing in there to allow these tokens to be exchanged freely.
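To make the "uniswap-like thing" a bit more concrete, here is a minimal sketch of a constant-product swap between two federation-issued tokens. It assumes the pricing rule popularized by Uniswap v2 (x * y = k, with a 0.3% fee); the `Pool` class, the reserve amounts and the idea of one pool per pair of issuers are hypothetical illustrations, not part of any actual spacechain design.

```python
from dataclasses import dataclass


@dataclass
class Pool:
    """Hypothetical pool holding btc-tokens from two different issuers."""
    reserve_a: int  # tokens issued by federation A, in sats
    reserve_b: int  # tokens issued by federation B, in sats

    def amount_out(self, amount_in: int, a_to_b: bool = True) -> int:
        """Quote a swap under the constant-product rule with a 0.3% fee."""
        if a_to_b:
            r_in, r_out = self.reserve_a, self.reserve_b
        else:
            r_in, r_out = self.reserve_b, self.reserve_a
        in_with_fee = amount_in * 997  # 0.3% of the input stays in the pool
        return (in_with_fee * r_out) // (r_in * 1000 + in_with_fee)

    def swap(self, amount_in: int, a_to_b: bool = True) -> int:
        """Execute the swap and update the reserves."""
        out = self.amount_out(amount_in, a_to_b)
        if a_to_b:
            self.reserve_a += amount_in
            self.reserve_b -= out
        else:
            self.reserve_b += amount_in
            self.reserve_a -= out
        return out


pool = Pool(reserve_a=1_000_000, reserve_b=1_000_000)
received = pool.swap(1_000)  # trade 1000 sats of A-tokens for B-tokens
```

If people trust one issuer less than another, the pool reserves drift and the less trusted token trades at a discount, so the market prices issuer risk without anyone having to hold tokens they don't want.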

Why?

The recent spike in fees caused by Ordinals and BRC-20 shitcoinery has shown that Lightning isn't a silver bullet. Channels are too fragile, it costs a lot to open a channel under a high fee environment, to run a routing node and so on.

People who want to keep using Lightning are instead flocking to the big Lightning custodial providers: WalletofSatoshi, ZEBEDEE, OpenNode and so on. We could leverage the trust people have in these companies (and individuals) operating shadow Lightning providers and turn each of them into a btc-token issuer. Each issues its own token, and transactions flow freely. Each person can hold assets only from the issuers they trust most.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

On HTLCs and arbiters

This is another attempt at conveying the same information that should be in Lightning and its fake HTLCs. It assumes you know everything about Lightning and will just highlight a point. This is also valid for PTLCs.

The protocol says HTLCs are trimmed (i.e., not actually added to the commitment transaction) when the cost of redeeming them in fees would be greater than their actual value.

This is often dismissed as an unimportant fact (often people will say "it's trusted for small payments, no big deal"), but I think it is indeed very important, for 3 reasons:

- Lightning absolutely relies on HTLCs actually existing because the payment proof requires them. The entire security of each payment comes from the fact that the payer has a preimage that comes from the payee. Without that, the state of the payment becomes an unsolvable mystery. The inexistence of an HTLC breaks the atomicity between the payment going through and the payer receiving a proof.

- Bitcoin fees are expected to grow with time (arguably the reason Lightning exists in the first place).

- MPP makes payment sizes shrink, therefore more and more Lightning payments will be trimmed. As I write this, the mempool is clear and still payments smaller than about 5000sat are being trimmed. Two weeks ago the limit was at 18000sat, which is already above the minimum most MPP splitting algorithms will allow.

Therefore I think it is important that we come up with a different way of ensuring payment proofs are being passed around in the case HTLCs are trimmed.
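The trimming rule described above can be sketched in a few lines. This is a simplified illustration and not any implementation's actual code: the two weights are the second-stage HTLC transaction weights from BOLT 3 without `option_anchors`, as far as I recall them, the 546sat dust limit is just a common default, and amounts are in whole satoshis rather than millisatoshis.

```python
# Weights of the HTLC-timeout and HTLC-success transactions
# (assumed BOLT 3 values for channels without option_anchors).
HTLC_TIMEOUT_WEIGHT = 663  # used to redeem an HTLC we offered
HTLC_SUCCESS_WEIGHT = 703  # used to redeem an HTLC we received


def trim_threshold_sat(feerate_per_kw: int, dust_limit_sat: int, offered: bool) -> int:
    """Smallest HTLC amount that still gets its own commitment output."""
    weight = HTLC_TIMEOUT_WEIGHT if offered else HTLC_SUCCESS_WEIGHT
    fee_sat = feerate_per_kw * weight // 1000  # fee to redeem the HTLC onchain
    return dust_limit_sat + fee_sat


def is_trimmed(amount_sat: int, feerate_per_kw: int,
               dust_limit_sat: int = 546, offered: bool = True) -> bool:
    """True if the HTLC would not be added to the commitment transaction."""
    return amount_sat < trim_threshold_sat(feerate_per_kw, dust_limit_sat, offered)


# At 2500 sat per 1000 weight units, a 1000sat payment is trimmed
# while a 3000sat payment is not.
small_is_trimmed = is_trimmed(1_000, feerate_per_kw=2_500)
large_is_trimmed = is_trimmed(3_000, feerate_per_kw=2_500)
```

Since the threshold grows linearly with the channel feerate, any sustained rise in fees pushes a larger share of payments, and of MPP parts in particular, below it.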

Channel closures

Worse than not having HTLCs that can be redeemed is the fact that in the current Lightning implementations channels will be closed by the peer once an HTLC timeout is reached, either to fulfill an HTLC for which that peer has a preimage or to redeem back those expired HTLCs the other party hasn't fulfilled.

To everybody's surprise, nodes will do this even when the HTLCs in question were trimmed and therefore cannot be redeemed at all. It's very important that nodes stop doing that, because it makes no economic sense at all.

However, that is not so simple, because once you decide you're not going to close the channel, what is the next step? Do you wait until the other peer tries to fulfill an expired HTLC and tell them you won't agree and that you must cancel that instead? That could work sometimes if they're honest (and they have no incentive to not be, in this case). What if they say they tried to fulfill it before but you were offline? Now you're confused, you don't know if you were offline or they were offline, or if they are trying to trick you. Then unsolvable issues start to emerge.

Arbiters

One simple idea is to use trusted arbiters for all trimmed HTLC issues.

This idea solves both the protocol issue of getting the preimage to the payer once it is released by the payee -- and what to do with the channels once a trimmed HTLC expires.

A simple design would be to have each node hardcode a set of trusted other nodes that can serve as arbiters. Once a channel is opened between two nodes they choose one node from both lists to serve as their mutual arbiter for that channel.

Then whenever one node tries to fulfill an HTLC but the other peer is unresponsive, they can send the preimage to the arbiter instead. The arbiter will then try to contact the unresponsive peer. If it succeeds, then done, the HTLC was fulfilled offchain. If it fails then it can keep trying until the HTLC timeout. And then if the other node comes back later they can eat the loss. The arbiter will ensure they know they are the ones who must eat the loss in this case. If they don't agree to eat the loss, the first peer may then close the channel and blacklist the other peer. If the other peer believes that both the first peer and the arbiter are dishonest they can remove that arbiter from their list of trusted arbiters.

The same happens in the opposite case: if a peer doesn't get a preimage they can notify the arbiter that they haven't received anything. The arbiter may try to ask the other peer for the preimage and, if that fails, settle the dispute in favor of that first peer, which can proceed to fail the HTLC it has with someone else on that route.
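A rough sketch of the arbiter logic described above, assuming that "one node from both lists" means a node present in both peers' lists. The function names and outcome strings are hypothetical, and real nodes would exchange signed messages over the network instead of passing booleans around.

```python
import hashlib
from typing import Optional, Sequence


def choose_arbiter(ours: Sequence[str], theirs: Sequence[str]) -> Optional[str]:
    """Pick an arbiter both peers trust (assumption: first common id, sorted)."""
    common = sorted(set(ours) & set(theirs))
    return common[0] if common else None


def arbitrate_fulfill(payment_hash: bytes, preimage: bytes,
                      peer_reached_before_timeout: bool) -> str:
    """A peer hands the preimage to the arbiter because the other is unresponsive."""
    if hashlib.sha256(preimage).digest() != payment_hash:
        return "rejected: preimage does not match"
    if peer_reached_before_timeout:
        return "fulfilled offchain"
    return "unresponsive peer eats the loss"


def arbitrate_missing_preimage(preimage_obtained: bool) -> str:
    """A peer reports that it never received a preimage for a trimmed HTLC."""
    if preimage_obtained:
        return "fulfilled offchain"
    return "fail the HTLC"


arbiter = choose_arbiter(["carol", "dave"], ["dave", "erin"])
preimage = b"\x01" * 32
payment_hash = hashlib.sha256(preimage).digest()
outcome = arbitrate_fulfill(payment_hash, preimage, peer_reached_before_timeout=False)
```

Checking the preimage against the payment hash costs the arbiter nothing and means it never has to take a peer's word that a payment was actually fulfilled, only on questions of timing and availability.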

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

On the state of programs and browsers

There are basically (not exhaustively) two kinds of programs one can run on a computer nowadays:

1.1. A program that is installed, permanent, has direct access to the Operating System, can draw whatever it wants, modify files, interact with other programs and so on;

1.2. A program that is transient, fetched from someone else's server at run time, interpreted, rendered and executed by another program that bridges the access of that transient program to the OS and other things.

Meanwhile, web browsers have basically (not exhaustively) two use cases:

2.1. Display text, pictures, videos hosted on someone else's computer;

2.2. Execute incredibly complex programs that are fetched at run time, executed and so on -- you get it, it's the same as 1.2.

These two use cases for browsers are at big odds with one another. While stretching themselves to become more and more a platform for programs that can do basically anything (in the 1.1 sense), browsers are still restricted to being a 1.2 platform. At the same time, websites that were supposed to be on 2.1 sometimes get confused and start acting as if they were 2.2 -- and other confusing mixed up stuff.

I could go on for hours in philosophical inquiries on the nature of browsers, how rewriting everything in JavaScript is not healthy or where everything went wrong, but I think other people have done this already.

One thing that bothers me a lot, though, is that computers can do a lot of things, and with the internet and the current state of technology it's fairly easy to implement tools that would help in many aspects of human existence and provide high-quality, useful programs. With the help of a server to coordinate access, store data, authenticate users and so on, many things are possible. However, due to the nature of UI in the browser, it's very hard to get any useful tool to users.

Writing a UI, even the most basic UI imaginable (some text input boxes and some buttons, or a table) can take a long time, always more than the time necessary to code the actual core features of whatever program is being developed -- and that is assuming that the person capable of writing interesting programs that do the functionality in the backend is also capable of dealing with JavaScript and the giant amount of frameworks, transpilers, styling stuff, CSS, the fact that all this is built on top of HTML and so on.

This is not good.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

IPFS problems: Community

I was an avid IPFS user until yesterday. Many, many times I asked simple questions, for which I couldn't find an answer on the internet, in the #ipfs IRC channel on Freenode. Most of the time I didn't get an answer, and even when I did it was rarely from someone who knew IPFS deeply. I've had issues go unanswered on js-ipfs repositories for years – one of these was raising awareness of a problem that then got fixed some months later by a complete rewrite. I closed my own issue after realizing that by myself a couple of months later; I don't think the people responsible for the rewrite ever acknowledged that they had fixed my issue.

Some days ago I asked some questions about how the IPFS protocol worked internally, sincerely trying to understand the inefficiencies in finding and fetching content over IPFS. I pointed out that it would be a good idea to have a drawing showing that, so people would understand the difficulties (which I didn't) and wouldn't be pissed off by the slowness. I was told to read the whitepaper. I had already read the whitepaper, but I read the relevant parts again. The whitepaper doesn't explain anything about the DHT and how IPFS finds content. I said that in the room and was told to read it again.

Before anyone misreads this section, I want to say I understand it's a pain to keep answering people on IRC if you're busy developing stuff of interplanetary importance, and that I'm not paying anyone, nor do I have the right to be answered. On the other hand, if you're developing a super-important protocol financed by many millions of dollars, a lot of people are hitting their heads against your software and there's no one to help them, and you're always busy but never deliver anything that brings joy to your users, something is very wrong. I sincerely don't know what IPFS developers are working on, and I wouldn't doubt they're working on important things if they said so, but what I see – and what many other users see (take a look at the IPFS Discourse forum) – is bugs, bugs all over the place, confusing UX, and almost no help.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

A list of things artificial intelligence is not doing

If AI is so good why can't it:

- write good glue code that wraps a documented HTTP API?

- make good translations using available books and respective published translations?

- extract meaningful and relevant numbers from news articles?

- write mathematical models that fit perfectly to available data better than any human?

- play videogames without cheating (i.e. simulating human vision, attention and click speed)?

- turn pure HTML pages into pretty designs by generating CSS?

- predict the weather?

- calculate building foundations?