-

@ 6871d8df:4a9396c1

2024-02-24 22:42:16

@ 6871d8df:4a9396c1

2024-02-24 22:42:16In an era where data seems to be as valuable as currency, the prevailing trend in AI starkly contrasts with the concept of personal data ownership. The explosion of AI and the ensuing race have made it easy to overlook where the data is coming from. The current model, dominated by big tech players, involves collecting vast amounts of user data and selling it to AI companies for training LLMs. Reddit recently penned a 60 million dollar deal, Google guards and mines Youtube, and more are going this direction. But is that their data to sell? Yes, it's on their platforms, but without the users to generate it, what would they monetize? To me, this practice raises significant ethical questions, as it assumes that user data is a commodity that companies can exploit at will.

The heart of the issue lies in the ownership of data. Why, in today's digital age, do we not retain ownership of our data? Why can't our data follow us, under our control, to wherever we want to go? These questions echo the broader sentiment that while some in the tech industry — such as the blockchain-first crypto bros — recognize the importance of data ownership, their "blockchain for everything solutions," to me, fall significantly short in execution.

Reddit further complicates this with its current move to IPO, which, on the heels of the large data deal, might reinforce the mistaken belief that user-generated data is a corporate asset. Others, no doubt, will follow suit. This underscores the urgent need for a paradigm shift towards recognizing and respecting user data as personal property.

In my perfect world, the digital landscape would undergo a revolutionary transformation centered around the empowerment and sovereignty of individual data ownership. Platforms like Twitter, Reddit, Yelp, YouTube, and Stack Overflow, integral to our digital lives, would operate on a fundamentally different premise: user-owned data.

In this envisioned future, data ownership would not just be a concept but a practice, with public and private keys ensuring the authenticity and privacy of individual identities. This model would eliminate the private data silos that currently dominate, where companies profit from selling user data without consent. Instead, data would traverse a decentralized protocol akin to the internet, prioritizing user control and transparency.

The cornerstone of this world would be a meritocratic digital ecosystem. Success for companies would hinge on their ability to leverage user-owned data to deliver unparalleled value rather than their capacity to gatekeep and monetize information. If a company breaks my trust, I can move to a competitor, and my data, connections, and followers will come with me. This shift would herald an era where consent, privacy, and utility define the digital experience, ensuring that the benefits of technology are equitably distributed and aligned with the users' interests and rights.

The conversation needs to shift fundamentally. We must challenge this trajectory and advocate for a future where data ownership and privacy are not just ideals but realities. If we continue on our current path without prioritizing individual data rights, the future of digital privacy and autonomy is bleak. Big tech's dominance allows them to treat user data as a commodity, potentially selling and exploiting it without consent. This imbalance has already led to users being cut off from their digital identities and connections when platforms terminate accounts, underscoring the need for a digital ecosystem that empowers user control over data. Without changing direction, we risk a future where our content — and our freedoms by consequence — are controlled by a few powerful entities, threatening our rights and the democratic essence of the digital realm. We must advocate for a shift towards data ownership by individuals to preserve our digital freedoms and democracy.

-

@ f977c464:32fcbe00

2024-01-30 20:06:18

@ f977c464:32fcbe00

2024-01-30 20:06:18Güneşin kaybolmasının üçüncü günü, saat öğlen on ikiyi yirmi geçiyordu. Trenin kalkmasına yaklaşık iki saat vardı. Hepimiz perondaydık. Valizlerimiz, kolilerimiz, renk renk ve biçimsiz çantalarımızla yan yana dizilmiş, kısa aralıklarla tepemizdeki devasa saati kontrol ediyorduk.

Ama ne kadar dik bakarsak bakalım zaman bir türlü istediğimiz hızla ilerlemiyordu. Herkes birkaç dakika sürmesi gereken alelade bir doğa olayına sıkışıp kalmış, karanlıktan sürünerek çıkmayı deniyordu.

Bekleme salonuna doğru döndüm. Nefesimden çıkan buharın arkasında, kalın taş duvarları ve camlarıyla morg kadar güvenli ve soğuk duruyordu. Cesetleri o yüzden bunun gibi yerlere taşımaya başlamışlardı. Demek insanların bütün iyiliği başkaları onları gördüğü içindi ki gündüzleri gecelerden daha karanlık olduğunda hemen birbirlerinin gırtlağına çökmüş, böğürlerinde delikler açmış, gözlerini oyup kafataslarını parçalamışlardı.

İstasyonun ışığı titrediğinde karanlığın enseme saplandığını hissettim. Eğer şimdi, böyle kalabalık bir yerde elektrik kesilse başımıza ne gelirdi?

İçerideki askerlerden biri bakışlarımı yakalayınca yeniden saate odaklanmış gibi yaptım. Sadece birkaç dakika geçmişti.

“Tarlalarım gitti. Böyle boyum kadar ayçiçeği doluydu. Ah, hepsi ölüp gidiyor. Afitap’ın çiçekleri de gi-”

“Dayı, Allah’ını seversen sus. Hepimizi yakacaksın şimdi.”

Karanlıkta durduğunda, görünmez olmayı istemeye başlıyordun. Kimse seni görmemeli, nefesini bile duymamalıydı. Kimsenin de ayağının altında dolaşmamalıydın; gelip kazayla sana çarpmamalılar, takılıp sendelememeliydiler. Yoksa aslında hedefi sen olmadığın bir öfke gürlemeye başlar, yaşadığın ilk şoku ve acıyı silerek üstünden geçerdi.

İlk konuşan, yaşlıca bir adam, kafasında kasketi, nasırlı ellerine hohluyordu. Gözleri ve burnu kızarmıştı. Güneşin kaybolması onun için kendi başına bir felaket değildi. Hayatına olan pratik yansımalarından korkuyordu olsa olsa. Bir anının kaybolması, bu yüzden çoktan kaybettiği birinin biraz daha eksilmesi. Hayatta kalmasını gerektiren sebepler azalırken, hayatta kalmasını sağlayacak kaynaklarını da kaybediyordu.

Onu susturan delikanlıysa atkısını bütün kafasına sarmış, sakalı ve yüzünün derinliklerine kaçmış gözleri dışında bedeninin bütün parçalarını gizlemeye çalışıyordu. İşte o, güneşin kaybolmasının tam olarak ne anlama geldiğini anlamamış olsa bile, dehşetini olduğu gibi hissedebilenlerdendi.

Güneşin onlardan alındıktan sonra kime verileceğini sormuyorlardı. En başta onlara verildiğinde de hiçbir soru sormamışlardı zaten.

İki saat ne zaman geçer?

Midemin üstünde, sağ tarafıma doğru keskin bir acı hissettim. Karaciğerim. Gözlerimi yumdum. Yanımda biri metal bir nesneyi yere bıraktı. Bir kafesti. İçerisindeki kartalın ıslak kokusu burnuma ulaşmadan önce bile biliyordum bunu.

“Yeniden mi?” diye sordu bana kartal. Kanatları kanlı. Zamanın her bir parçası tüylerinin üstüne çöreklenmişti. Gagası bir şey, tahminen et parçası geveliyor gibi hareket ediyordu. Eski anılar kolay unutulmazmış. Şu anda kafesinin kalın parmaklıklarının ardında olsa da bunun bir aldatmaca olduğunu bir tek ben biliyordum. Her an kanatlarını iki yana uzatıverebilir, hava bu hareketiyle dalgalanarak kafesi esneterek hepimizi içine alacak kadar genişleyebilir, parmaklıklar önce ayaklarımızın altına serilir gibi gözükebilir ama aslında hepimizin üstünde yükselerek tepemize çökebilirdi.

Aşağıya baktım. Tahtalarla zapt edilmiş, hiçbir yere gidemeyen ama her yere uzanan tren rayları. Atlayıp koşsam… Çantam çok ağırdı. Daha birkaç adım atamadan, kartal, suratını bedenime gömerdi.

“Bu sefer farklı,” diye yanıtladım onu. “Yeniden diyemezsin. Tekrarladığım bir şey değil bu. Hatta bir hata yapıyormuşum gibi tonlayamazsın da. Bu sefer, insanların hak etmediğini biliyorum.”

“O zaman daha vahim. Süzme salaksın demektir.”

“İnsanların hak etmemesi, insanlığın hak etmediği anlamına gelmez ki.”

Az önce göz göze geldiğim genççe ama çökük asker hâlâ bana bakıyordu. Bir kartalla konuştuğumu anlamamıştı şüphesiz. Yanımdakilerden biriyle konuştuğumu sanmış olmalıydı. Ama konuştuğum kişiye bakmıyordum ona göre. Çekingence kafamı eğmiştim. Bir kez daha göz göze geldiğimizde içerideki diğer iki askere bir şeyler söyledi, onlar dönüp beni süzerken dışarı çıktı.

Yanımızdaki, az önce konuşan iki adam da şaşkınlıkla bir bana bir kartala bakıyordu.

“Yalnız bu sefer kalbin de kırılacak, Prometheus,” dedi kartal, bana. “Belki son olur. Biliyorsun, bir sürü soruna neden oluyor bu yaptıkların.”

Beni koruyordu sözde. En çok kanıma dokunan buydu. Kasıklarımın üstüne oturmuş, kanlı suratının ardında gözleri parlarken attığı çığlık kulaklarımda titremeye devam ediyordu. Bu tabloda kimsenin kimseyi düşündüğü yoktu. Kartalın, yanımızdaki adamların, artık arkama kadar gelmiş olması gereken askerin, tren raylarının, geçmeyen saatlerin…

Arkamı döndüğümde, asker sahiden oradaydı. Zaten öyle olması gerekiyordu; görmüştüm bunu, biliyordum. Kehanetler… Bir şeyler söylüyordu ama ağzı oynarken sesi çıkmıyordu. Yavaşlamış, kendisini saatin akışına uydurmuştu. Havada donan tükürüğünden anlaşılıyordu, sinirliydi. Korktuğu için olduğunu biliyordum. Her seferinde korkmuşlardı. Beni unutmuş olmaları işlerini kolaylaştırmıyordu. Sadece yeni bir isim vermelerine neden oluyordu. Bu seferkiyle beni lanetleyecekleri kesinleşmişti.

Olması gerekenle olanların farklı olması ne kadar acınasıydı. Olması gerekenlerin doğasının kötücül olmasıysa bir yerde buna dayanıyordu.

“Salaksın,” dedi kartal bana. Zamanı aşan bir çığlık. Hepimizin önüne geçmişti ama kimseyi durduramıyordu.

Sonsuzluğa kaç tane iki saat sıkıştırabilirsiniz?

Ben bir tane bile sıkıştıramadım.

Çantama uzanıyordum. Asker de sırtındaki tüfeğini indiriyordu. Benim acelem yoktu, onunsa eli ayağı birbirine dolaşıyordu. Oysaki her şey tam olması gerektiği anda olacaktı. Kehanet başkasının parmaklarının ucundaydı.

Güneş, bir tüfeğin patlamasıyla yeryüzüne doğdu.

Rayların üzerine serilmiş göğsümün ortasından, bir çantanın içinden.

Not: Bu öykü ilk olarak 2021 yılında Esrarengiz Hikâyeler'de yayımlanmıştır.

-

@ 8ce092d8:950c24ad

2024-02-04 23:35:07

@ 8ce092d8:950c24ad

2024-02-04 23:35:07Overview

- Introduction

- Model Types

- Training (Data Collection and Config Settings)

- Probability Viewing: AI Inspector

- Match

- Cheat Sheet

I. Introduction

AI Arena is the first game that combines human and artificial intelligence collaboration.

AI learns your skills through "imitation learning."

Official Resources

- Official Documentation (Must Read): Everything You Need to Know About AI Arena

Watch the 2-minute video in the documentation to quickly understand the basic flow of the game. 2. Official Play-2-Airdrop competition FAQ Site https://aiarena.notion.site/aiarena/Gateway-to-the-Arena-52145e990925499d95f2fadb18a24ab0 3. Official Discord (Must Join): https://discord.gg/aiarenaplaytest for the latest announcements or seeking help. The team will also have a exclusive channel there. 4. Official YouTube: https://www.youtube.com/@aiarena because the game has built-in tutorials, you can choose to watch videos.

What is this game about?

- Although categorized as a platform fighting game, the core is a probability-based strategy game.

- Warriors take actions based on probabilities on the AI Inspector dashboard, competing against opponents.

- The game does not allow direct manual input of probabilities for each area but inputs information through data collection and establishes models by adjusting parameters.

- Data collection emulates fighting games, but training can be completed using a Dummy As long as you can complete the in-game tutorial, you can master the game controls.

II. Model Types

Before training, there are three model types to choose from: Simple Model Type, Original Model Type, and Advanced Model Type.

It is recommended to try the Advanced Model Type after completing at least one complete training with the Simple Model Type and gaining some understanding of the game.

Simple Model Type

The Simple Model is akin to completing a form, and the training session is comparable to filling various sections of that form.

This model has 30 buckets. Each bucket can be seen as telling the warrior what action to take in a specific situation. There are 30 buckets, meaning 30 different scenarios. Within the same bucket, the probabilities for direction or action are the same.

For example: What should I do when I'm off-stage — refer to the "Recovery (you off-stage)" bucket.

For all buckets, refer to this official documentation:

https://docs.aiarena.io/arenadex/game-mechanics/tabular-model-v2

Video (no sound): The entire training process for all buckets

https://youtu.be/1rfRa3WjWEA

Game version 2024.1.10. The method of saving is outdated. Please refer to the game updates.

Advanced Model Type

The "Original Model Type" and "Advanced Model Type" are based on Machine Learning, which is commonly referred to as combining with AI.

The Original Model Type consists of only one bucket, representing the entire map. If you want the AI to learn different scenarios, you need to choose a "Focus Area" to let the warrior know where to focus. A single bucket means that a slight modification can have a widespread impact on the entire model. This is where the "Advanced Model Type" comes in.

The "Advanced Model Type" can be seen as a combination of the "Original Model Type" and the "Simple Model Type". The Advanced Model Type divides the map into 8 buckets. Each bucket can use many "Focus Area." For a detailed explanation of the 8 buckets and different Focus Areas, please refer to the tutorial page (accessible in the Advanced Model Type, after completing a training session, at the top left of the Advanced Config, click on "Tutorial").

III. Training (Data Collection and Config Settings)

Training Process:

- Collect Data

- Set Parameters, Train, and Save

- Repeat Step 1 until the Model is Complete

Training the Simple Model Type is the easiest to start with; refer to the video above for a detailed process.

Training the Advanced Model Type offers more possibilities through the combination of "Focus Area" parameters, providing a higher upper limit. While the Original Model Type has great potential, it's harder to control. Therefore, this section focuses on the "Advanced Model Type."

1. What Kind of Data to Collect

- High-Quality Data: Collect purposeful data. Garbage in, garbage out. Only collect the necessary data; don't collect randomly. It's recommended to use Dummy to collect data. However, don't pursue perfection; through parameter adjustments, AI has a certain level of fault tolerance.

- Balanced Data: Balance your dataset. In simple terms, if you complete actions on the left side a certain number of times, also complete a similar number on the right side. While data imbalance can be addressed through parameter adjustments (see below), it's advised not to have this issue during data collection.

- Moderate Amount: A single training will include many individual actions. Collect data for each action 1-10 times. Personally, it's recommended to collect data 2-3 times for a single action. If the effect of a single training is not clear, conduct a second (or even third) training with the same content, but with different parameter settings.

2. What to Collect (and Focus Area Selection)

Game actions mimic fighting games, consisting of 4 directions + 6 states (Idle, Jump, Attack, Grab, Special, Shield). Directions can be combined into ↗, ↘, etc. These directions and states can then be combined into different actions.

To make "Focus Area" effective, you need to collect data in training that matches these parameters. For example, for "Distance to Opponent", you need to collect data when close to the opponent and also when far away. * Note: While you can split into multiple training sessions, it's most effective to cover different situations within a single training.

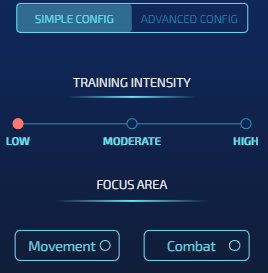

Refer to the Simple Config, categorize the actions you want to collect, and based on the game scenario, classify them into two categories: "Movement" and "Combat."

Movement-Based Actions

Action Collection

When the warrior is offstage, regardless of where the opponent is, we require the warrior to return to the stage to prevent self-destruction.

This involves 3 aerial buckets: 5 (Near Blast Zone), 7 (Under Stage), and 8 (Side Of Stage).

* Note: The background comes from the Tutorial mentioned earlier. The arrows in the image indicate the direction of the action and are for reference only. * Note: Action collection should be clean; do not collect actions that involve leaving the stage.

Config Settings

In the Simple Config, you can directly choose "Movement" in it. However, for better customization, it's recommended to use the Advanced Config directly. - Intensity: The method for setting Intensity will be introduced separately later. - Buckets: As shown in the image, choose the bucket you are training. - Focus Area: Position-based parameters: - Your position (must) - Raycast Platform Distance, Raycast Platform Type (optional, generally choose these in Bucket 7)

Combat-Based Actions

The goal is to direct attacks quickly and effectively towards the opponent, which is the core of game strategy.

This involves 5 buckets: - 2 regular situations - In the air: 6 (Safe Zone) - On the ground: 4 (Opponent Active) - 3 special situations on the ground: - 1 Projectile Active - 2 Opponent Knockback - 3 Opponent Stunned

2 Regular Situations

In the in-game tutorial, we learned how to perform horizontal attacks. However, in the actual game, directions expand to 8 dimensions. Imagine having 8 relative positions available for launching hits against the opponent. Our task is to design what action to use for attack or defense at each relative position.

Focus Area - Basic (generally select all) - Angle to opponent

- Distance to opponent - Discrete Distance: Choosing this option helps better differentiate between closer and farther distances from the opponent. As shown in the image, red indicates a relatively close distance, and green indicates a relatively distant distance.- Advanced: Other commonly used parameters

- Direction: different facings to opponent

- Your Elemental Gauge and Discrete Elementals: Considering the special's charge

- Opponent action: The warrior will react based on the opponent's different actions.

- Your action: Your previous action. Choose this if teaching combos.

3 Special Situations on the Ground

Projectile Active, Opponent Stunned, Opponent Knockback These three buckets can be referenced in the Simple Model Type video. The parameter settings approach is the same as Opponent Active/Safe Zone.

For Projectile Active, in addition to the parameters based on combat, to track the projectile, you also need to select "Raycast Projectile Distance" and "Raycast Projectile On Target."

3. Setting "Intensity"

Resources

- The "Tutorial" mentioned earlier explains these parameters.

- Official Config Document (2022.12.24): https://docs.google.com/document/d/1adXwvDHEnrVZ5bUClWQoBQ8ETrSSKgG5q48YrogaFJs/edit

TL;DR:

Epochs: - Adjust to fewer epochs if learning is insufficient, increase for more learning.

Batch Size: - Set to the minimum (16) if data is precise but unbalanced, or just want it to learn fast - Increase (e.g., 64) if data is slightly imprecise but balanced. - If both imprecise and unbalanced, consider retraining.

Learning Rate: - Maximize (0.01) for more learning but a risk of forgetting past knowledge. - Minimize for more accurate learning with less impact on previous knowledge.

Lambda: - Reduce for prioritizing learning new things.

Data Cleaning: - Enable "Remove Sparsity" unless you want AI to learn idleness. - For special cases, like teaching the warrior to use special moves when idle, refer to this tutorial video: https://discord.com/channels/1140682688651612291/1140683283626201098/1195467295913431111

Personal Experience: - Initial training with settings: 125 epochs, batch size 16, learning rate 0.01, lambda 0, data cleaning enabled. - Prioritize Multistream, sometimes use Oversampling. - Fine-tune subsequent training based on the mentioned theories.

IV. Probability Viewing: AI Inspector

The dashboard consists of "Direction + Action." Above the dashboard, you can see the "Next Action" – the action the warrior will take in its current state. The higher the probability, the more likely the warrior is to perform that action, indicating a quicker reaction. It's essential to note that when checking the Direction, the one with the highest visual representation may not have the highest numerical value. To determine the actual value, hover the mouse over the graphical representation, as shown below, where the highest direction is "Idle."

In the map, you can drag the warrior to view the probabilities of the warrior in different positions. Right-click on the warrior with the mouse to change the warrior's facing. The status bar below can change the warrior's state on the map.

When training the "Opponent Stunned, Opponent Knockback" bucket, you need to select the status below the opponent's status bar. If you are focusing on "Opponent action" in the Focus Zone, choose the action in the opponent's status bar. If you are focusing on "Your action" in the Focus Zone, choose the action in your own status bar. When training the "Projectile Active" Bucket, drag the projectile on the right side of the dashboard to check the status.

Next

The higher the probability, the faster the reaction. However, be cautious when the action probability reaches 100%. This may cause the warrior to be in a special case of "State Transition," resulting in unnecessary "Idle" states.

Explanation: In each state a fighter is in, there are different "possible transitions". For example, from falling state you cannot do low sweep because low sweep requires you to be on the ground. For the shield state, we do not allow you to directly transition to headbutt. So to do headbutt you have to first exit to another state and then do it from there (assuming that state allows you to do headbutt). This is the reason the fighter runs because "run" action is a valid state transition from shield. Source

V. Learn from Matches

After completing all the training, your model is preliminarily finished—congratulations! The warrior will step onto the arena alone and embark on its debut!

Next, we will learn about the strengths and weaknesses of the warrior from battles to continue refining the warrior's model.

In matches, besides appreciating the performance, pay attention to the following:

-

Movement, i.e., Off the Stage: Observe how the warrior gets eliminated. Is it due to issues in the action settings at a certain position, or is it a normal death caused by a high percentage? The former is what we need to avoid and optimize.

-

Combat: Analyze both sides' actions carefully. Observe which actions you and the opponent used in different states. Check which of your hits are less effective, and how does the opponent handle different actions, etc.

The approach to battle analysis is similar to the thought process in the "Training", helping to have a more comprehensive understanding of the warrior's performance and making targeted improvements.

VI. Cheat Sheet

Training 1. Click "Collect" to collect actions. 2. "Map - Data Limit" is more user-friendly. Most players perform initial training on the "Arena" map. 3. Switch between the warrior and the dummy: Tab key (keyboard) / Home key (controller). 4. Use "Collect" to make the opponent loop a set of actions. 5. Instantly move the warrior to a specific location: Click "Settings" - SPAWN - Choose the desired location on the map - On. Press the Enter key (keyboard) / Start key (controller) during training.

Inspector 1. Right-click on the fighter to change their direction. Drag the fighter and observe the changes in different positions and directions. 2. When satisfied with the training, click "Save." 3. In "Sparring" and "Simulation," use "Current Working Model." 4. If satisfied with a model, then click "compete." The model used in the rankings is the one marked as "competing."

Sparring / Ranked 1. Use the Throneroom map only for the top 2 or top 10 rankings. 2. There is a 30-second cooldown between matches. The replays are played for any match. Once the battle begins, you can see the winner on the leaderboard or by right-clicking the page - Inspect - Console. Also, if you encounter any errors or bugs, please send screenshots of the console to the Discord server.

Good luck! See you on the arena!

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28O Planetinha

Fumaça verde me entrando pelas narinas e um coro desafinado fazia uma base melódica.

nos confins da galáxia havia um planetinha isolado. Era um planeta feliz.

O homem vestido de mago começava a aparecer por detrás da fumaça verde.

O planetinha recebeu três presentes, mas o seu habitante, o homem, estava num estado de confusão tão grande que ameaçava estragá-los. Os homens já havia escravizado o primeiro presente, a vida; lutavam contra o segundo presente, a morte; e havia alguns que achavam que deviam destruir totalmente o terceiro, o amor, e com isto levar a desordem total ao pobre planetinha perdido, que se chamava Terra.

O coro desafinado entrou antes do "Terra" cantando várias vezes, como se imitasse um eco, "terra-terra-terraaa". Depois de uma pausa dramática, o homem vestido de mago voltou a falar.

Terra, nossa nave mãe.

Neste momento eu me afastei. À frente do palco onde o mago e seu coral faziam apelos à multidão havia vários estandes cobertos com a tradicional armação de quatro pernas e lona branca. Em todos os cantos da praça havia gente, gente dos mais variados tipos. Visitantes curiosos que se aproximavam atraídos pela fumaça verde e as barraquinhas, gente que aproveitava o movimento para vender doces sem pagar imposto, casais que se abraçavam de pé para espantar o frio, os tradicionais corredores que faziam seu cooper, gente cheia de barba e vestida para imitar os hippies dos anos 60 e vender colares estendidos no chão, transeuntes novos e velhos, vestidos como baladeiros ou como ativistas do ônibus grátis, grupos de ciclistas entusiastas.

O mago fazia agora apelos para que nós, os homens, habitantes do isolado planetinha, passássemos a ver o planetinha, nossa nave mãe, como um todo, e adquiríssemos a consciência de que ele estava entrando em maus lençóis. A idéia, reforçada pela logomarca do evento, era que parássemos de olhar só para a nossa vida e pensássemos no planeta.

A logomarca do evento, um desenho estilizado do planeta Terra, nada tinha a ver com seu nome: "Festival Andando de Bem com a Vida", mas havia sido ali colocada estrategicamente pelos organizadores, de quem parecia justamente sair a mensagem dita pelo mago.

Aquela multidão de pessoas que, assim como eu, tinham suas próprias preocupações, não podiam ver o quadro caótico que formavam, cada uma com seus atos isolados, ali naquela praça isolada, naquele planeta isolado. Quando o hippie barbudo, quase um Osho, assustava um casal para tentar vender-lhes um colar, a quantidade de caos que isto acrescentava à cena era gigantesca. Por um segundo, pude ver, como se estivesse de longe e acima, com toda a pretensão que este estado imaginativo carrega, a cena completa do caos.

Uma nave-mãe, dessas de ficção científica, habitada por milhões de pessoas, seguia no espaço sem rumo, e sem saber que logo à frente um longo precipício espacial a esperava, para a desgraça completa sua e de seus habitantes.

Acostumados àquela nave tanto quanto outrora estiveram acostumados à sua terra natal, os homens viviam as próprias vidas sem nem se lembrar que estavam vagando pelo espaço. Ninguém sabia quem estava conduzindo a nave, e ninguém se importava.

No final do filme descobre-se que era a soma completa do caos que cada habitante produzia, com seus gestos egoístas e incapazes de levar em conta a totalidade, é que determinava a direção da nave-mãe. O efeito, no entanto, não era imediato, como nunca é. Havia gente de verdade encarregada de conduzir a nave, mas era uma gente bêbada, mau-caráter, que vivia brigando pelo controle da nave e o poder que isto lhes dava. Poder, status, dinheiro!

Essa gente bêbada era atraída até ali pela corrupção das instituições e da moral comum que, no fundo no fundo, era causada pelo egoísmo da população, através de um complexo -- mas que no filme aparece simplificado pela ação individual de um magnata do divertimento público -- processo social.

O homem vestido de mago era mais um agente causador de caos, com sua cena cheia de fumaça e sua roupa estroboscópica, ele achava que estava fazendo o bem ao alertar sua platéia, todos as sextas-feiras, de que havia algo que precisava ser feito, que cada um que estava ali ouvindo era responsável pelo planeta. A sua incapacidade, porém, de explicar o que precisava ser feito só aumentava a angústia geral; a culpa que ele jogava sobre seu público, e que era prontamente aceita e passada em frente, aos familiares e amigos de cada um, atormentava-os diariamente e os impedia de ter uma vida decente no trabalho e em casa. As famílias, estressadas, estavam constantemente brigando e os motivos mais insignificantes eram responsáveis pelas mais horrendas conseqüências.

O mago, que após o show tirava o chapéu entortado e ia tomar cerveja num boteco, era responsável por uma parcela considerável do caos que levava a nave na direção do seu desgraçado fim. No filme, porém, um dos transeuntes que de passagem ouviu um pedaço do discurso do mago despertou em si mesmo uma consiência transformadora e, com poderes sobre-humanos que lhe foram então concedidos por uma ordem iniciática do bem ou não, usando só os seus poderes humanos mesmo, o transeunte -- na primeira versão do filme um homem, na segunda uma mulher -- consegue consertar as instituições e retirar os bêbados da condução da máquina. A questão da moral pública é ignorada para abreviar a trama, já com duas horas e quarenta de duração, mas subentende-se que ela também fora resolvida.

No planeta Terra real, que não está indo em direção alguma, preso pela gravidade ao Sol, e onde as pessoas vivem a própria vida porque lhes é impossível viver a dos outros, não têm uma consciência global de nada porque só é possível mesmo ter a consciência delas mesmas, e onde a maioria, de uma maneira ou de outra, está tentando como pode, fazer as coisas direito, o filme é exibido.

Para a maioria dos espectadores, é um filme que evoca reflexões, um filme forte. Por um segundo elas têm o mesmo vislumbre do caos generalizado que eu tive ali naquela praça. Para uma pequena parcela dos espectadores -- entre eles alguns dos que estavam na platéia do mago, o próprio mago, o seguidor do Osho, o casal de duas mulheres e o vendedor de brigadeiros, mas aos quais se somam também críticos de televisão e jornal e gente que fala pelos cotovelos na internet -- o filme é um horror, o filme é uma vulgarização de um problema real e sério, o filme apela para a figura do herói salvador e passa uma mensagem totalmente errada, de que a maioria da população pode continuar vivendo as suas própria vidinhas miseráveis enquanto espera por um herói que vem do Olimpo e os salva da mixórdia que eles mesmos causaram, é um filme que presta um enorme desserviço à causa.

No dia seguinte ao lançamento, num bar meio caro ali perto da praça, numa mesa com oito pessoas, entre elas seis do primeiro grupo e oito do segundo, discute-se se o filme levará ou não o Oscar. Eu estou em casa dormindo e não escuto nada.

-

@ 6ad3e2a3:c90b7740

2024-02-10 10:37:19

@ 6ad3e2a3:c90b7740

2024-02-10 10:37:19I tend to post what I think, and sometimes people don’t appreciate some of what I’m saying. That’s okay — they are free to unfollow, unsubscribe, mute or even block! But occasionally, they will go farther and try actually to deter me from posting, telling others in the public square what I’m saying is “dangerous” or “harmful”. I’ve even been accused of “killing people” and having a “body count!”

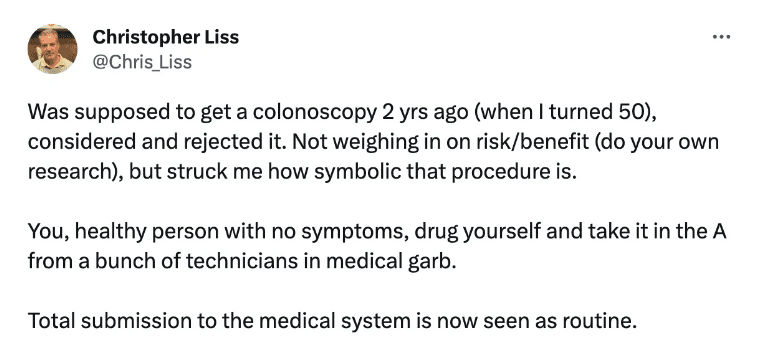

A recent example of this happened a couple months ago when I posted the following observation about the colonoscopy procedure:

Somepeopletook exception to this post, despite the risk/benefit disclaimer, arguing it was dangerous because it could deter people from getting this potentially life-saving procedure. In other words, let’s say colonoscopies save lives, and if 100 people who read my post were convinced not to get one, maybe one of them would get a preventable form of cancer.

I understand the argument, but it’s a terrible one. For starters, each adult human being reading that post has agency. Whether he does or does not do something is never solely because he heard me make an observation. Anyone who reads a tweet from someone neither claiming special expertise in the subject matter nor even purporting to give advice as to risk/benefit and decides not to do something was almost certainly not going to do it anyway for a variety of reasons. I am not a puppet-master of other people with special powers to command them to do things.

To conclude someone is responsible for another adult’s behavior simply because of an observation is to invite all kinds of absurdities. If I post about how crunchy, salty and tangy a particular Doritos flavor is (I do not eat Doritos!), and someone struggling with a junk food addiction reads it and falls off the wagon, am I therefore responsible for the deleterious health effects of his binge?

If posting negative (even though accurate) observations about a health-promoting procedure makes us responsible for someone who subsequently forgoes that procedure, surely posting positive observations about unhealthy behaviors should be viewed similarly. Posting a photo of yourself with a glass of scotch and a cigar in a swanky Vegas lounge with the caption “My happy place” might just be the image that sends an alcoholic reaching for the bottle! Only virtuous, healthful and wholesome messages are permitted!

But could even a picture of yourself in tip-top shape at the beach, surrounded by your healthy and beautiful family cause single people to feel lonely and depressed? Better not post on social media at all. Better not even go out in public if you’re well-dressed and have a healthy and beautiful family. Virtually anything you do could trigger a negative emotion in someone, and who knows where it could lead?

And what if Donald Trump were to say something positive about colonoscopies? Surely, there’s a cohort of people who would reflexively start to question them because everything he says is bad. So even someone posting ostensibly healthful information could be killing people by virtue of their reaction to the poster himself.

But let’s set the absurd implications aside and address the argument on its merits. Even if we concede getting the colonoscopy would have led to early detection and a successful removal of colon cancer (which is not always the case), not getting the colonoscopy is certainly not the proximate cause of the cancer. It might be a but-for cause, but the proximate cause would be some combination of diet, environmental toxins and genetics. Surely, my tweet, no matter how much it stuck with him, cannot be credited with the yeoman’s work of industrial pollution, a pesticide, hormone and antibiotic laden corporate food supply and any risk-augmenting personal behaviors (smoking, eating a poor diet, not exercising) from the person himself.

Further, anyone declining an often free preventative medical procedure probably lacks trust in the medical system generally, and my tweet surely did not cause the medical establishment to offer such consistently wrongheaded advice during the covid era and squander so much of the good will and esteem in which people once held it.

Put differently, my posting about Doritos’ crunch can’t be isolated from the hundreds of millions in marketing from Pepsi-co subsidiary Frito Lay that preceded it.

But even this is not the most pertinent objection to the notion it’s wrong to express earnest observations about medical procedures or lifestyle choices. The primary reason people should feel free to share what they believe or observe is that the science is never all in. Just as eggs were once considered harmful due to their cholesterol content and margarine healthful for its lack thereof, we might one day discover that colonoscopies (and the removal of polyps during the procedure) are themselves sometimes the trigger for colon cancer.

Set aside whether that particular hypothetical mechanism during that particular procedure sounds farfetched, it isn’t hard to imagine more generally that many of the treatments deemed “dangerous” to question might not in fact be net beneficial.

The implications of this are twofold: (1) if someone were on the hook for observations that might deter ostensibly net beneficial procedures, should those procedures later turn out to have been net harmful, those who recommended them would similarly have to be on the hook for those harms. (And I am not talking about the medical establishment that should very much on the hook were that the case, but the ordinary person encouraging his friends and social media followers to follow establishment medical advice.)

And (2) if it were verboten to share earnest observations that contradict the establishment dictum, we would disable the very error-correction mechanism that allows us to update our body of knowledge. Put differently, the examples of expert consensus being wrong and in need of correction span the millennia, from Galileo challenging the geocentric paradigm of the church to Einstein updating Newton’s theories on gravity. Without the ability to question openly the knowledge of the day, whether scientific, medical or political, our myriad errors in attending to human affairs would not merely cause acute harm — they would be permanent.

In short, anyone who discourages you from expressing earnest observations is himself engaging in the most harmful speech of which we can conceive, for though his perceived end might be prevention of local harm, it actually precludes the possibility of progress itself.

-

@ 3bf0c63f:aefa459d

2024-01-15 11:15:06

@ 3bf0c63f:aefa459d

2024-01-15 11:15:06Pequenos problemas que o Estado cria para a sociedade e que não são sempre lembrados

- **vale-transporte**: transferir o custo com o transporte do funcionário para um terceiro o estimula a morar longe de onde trabalha, já que morar perto é normalmente mais caro e a economia com transporte é inexistente. - **atestado médico**: o direito a faltar o trabalho com atestado médico cria a exigência desse atestado para todas as situações, substituindo o livre acordo entre patrão e empregado e sobrecarregando os médicos e postos de saúde com visitas desnecessárias de assalariados resfriados. - **prisões**: com dinheiro mal-administrado, burocracia e péssima alocação de recursos -- problemas que empresas privadas em competição (ou mesmo sem qualquer competição) saberiam resolver muito melhor -- o Estado fica sem presídios, com os poucos existentes entupidos, muito acima de sua alocação máxima, e com isto, segundo a bizarra corrente de responsabilidades que culpa o juiz que condenou o criminoso por sua morte na cadeia, juízes deixam de condenar à prisão os bandidos, soltando-os na rua. - **justiça**: entrar com processos é grátis e isto faz proliferar a atividade dos advogados que se dedicam a criar problemas judiciais onde não seria necessário e a entupir os tribunais, impedindo-os de fazer o que mais deveriam fazer. - **justiça**: como a justiça só obedece às leis e ignora acordos pessoais, escritos ou não, as pessoas não fazem acordos, recorrem sempre à justiça estatal, e entopem-na de assuntos que seriam muito melhor resolvidos entre vizinhos. - **leis civis**: as leis criadas pelos parlamentares ignoram os costumes da sociedade e são um incentivo a que as pessoas não respeitem nem criem normas sociais -- que seriam maneiras mais rápidas, baratas e satisfatórias de resolver problemas. - **leis de trãnsito**: quanto mais leis de trânsito, mais serviço de fiscalização são delegados aos policiais, que deixam de combater crimes por isto (afinal de contas, eles não querem de fato arriscar suas vidas combatendo o crime, a fiscalização é uma excelente desculpa para se esquivarem a esta responsabilidade). - **financiamento educacional**: é uma espécie de subsídio às faculdades privadas que faz com que se criem cursos e mais cursos que são cada vez menos recheados de algum conhecimento ou técnica útil e cada vez mais inúteis. - **leis de tombamento**: são um incentivo a que o dono de qualquer área ou construção "histórica" destrua todo e qualquer vestígio de história que houver nele antes que as autoridades descubram, o que poderia não acontecer se ele pudesse, por exemplo, usar, mostrar e se beneficiar da história daquele local sem correr o risco de perder, de fato, a sua propriedade. - **zoneamento urbano**: torna as cidades mais espalhadas, criando uma necessidade gigantesca de carros, ônibus e outros meios de transporte para as pessoas se locomoverem das zonas de moradia para as zonas de trabalho. - **zoneamento urbano**: faz com que as pessoas percam horas no trânsito todos os dias, o que é, além de um desperdício, um atentado contra a sua saúde, que estaria muito melhor servida numa caminhada diária entre a casa e o trabalho. - **zoneamento urbano**: torna ruas e as casas menos seguras criando zonas enormes, tanto de residências quanto de indústrias, onde não há movimento de gente alguma. - **escola obrigatória + currículo escolar nacional**: emburrece todas as crianças. - **leis contra trabalho infantil**: tira das crianças a oportunidade de aprender ofícios úteis e levar um dinheiro para ajudar a família. - **licitações**: como não existem os critérios do mercado para decidir qual é o melhor prestador de serviço, criam-se comissões de pessoas que vão decidir coisas. isto incentiva os prestadores de serviço que estão concorrendo na licitação a tentar comprar os membros dessas comissões. isto, fora a corrupção, gera problemas reais: __(i)__ a escolha dos serviços acaba sendo a pior possível, já que a empresa prestadora que vence está claramente mais dedicada a comprar comissões do que a fazer um bom trabalho (este problema afeta tantas áreas, desde a construção de estradas até a qualidade da merenda escolar, que é impossível listar aqui); __(ii)__ o processo corruptor acaba, no longo prazo, eliminando as empresas que prestavam e deixando para competir apenas as corruptas, e a qualidade tende a piorar progressivamente. - **cartéis**: o Estado em geral cria e depois fica refém de vários grupos de interesse. o caso dos taxistas contra o Uber é o que está na moda hoje (e o que mostra como os Estados se comportam da mesma forma no mundo todo). - **multas**: quando algum indivíduo ou empresa comete uma fraude financeira, ou causa algum dano material involuntário, as vítimas do caso são as pessoas que sofreram o dano ou perderam dinheiro, mas o Estado tem sempre leis que prevêem multas para os responsáveis. A justiça estatal é sempre muito rígida e rápida na aplicação dessas multas, mas relapsa e vaga no que diz respeito à indenização das vítimas. O que em geral acontece é que o Estado aplica uma enorme multa ao responsável pelo mal, retirando deste os recursos que dispunha para indenizar as vítimas, e se retira do caso, deixando estas desamparadas. - **desapropriação**: o Estado pode pegar qualquer propriedade de qualquer pessoa mediante uma indenização que é necessariamente inferior ao valor da propriedade para o seu presente dono (caso contrário ele a teria vendido voluntariamente). - **seguro-desemprego**: se há, por exemplo, um prazo mínimo de 1 ano para o sujeito ter direito a receber seguro-desemprego, isto o incentiva a planejar ficar apenas 1 ano em cada emprego (ano este que será sucedido por um período de desemprego remunerado), matando todas as possibilidades de aprendizado ou aquisição de experiência naquela empresa específica ou ascensão hierárquica. - **previdência**: a previdência social tem todos os defeitos de cálculo do mundo, e não importa muito ela ser uma forma horrível de poupar dinheiro, porque ela tem garantias bizarras de longevidade fornecidas pelo Estado, além de ser compulsória. Isso serve para criar no imaginário geral a idéia da __aposentadoria__, uma época mágica em que todos os dias serão finais de semana. A idéia da aposentadoria influencia o sujeito a não se preocupar em ter um emprego que faça sentido, mas sim em ter um trabalho qualquer, que o permita se aposentar. - **regulamentação impossível**: milhares de coisas são proibidas, há regulamentações sobre os aspectos mais mínimos de cada empreendimento ou construção ou espaço. se todas essas regulamentações fossem exigidas não haveria condições de produção e todos morreriam. portanto, elas não são exigidas. porém, o Estado, ou um agente individual imbuído do poder estatal pode, se desejar, exigi-las todas de um cidadão inimigo seu. qualquer pessoa pode viver a vida inteira sem cumprir nem 10% das regulamentações estatais, mas viverá também todo esse tempo com medo de se tornar um alvo de sua exigência, num estado de terror psicológico. - **perversão de critérios**: para muitas coisas sobre as quais a sociedade normalmente chegaria a um valor ou comportamento "razoável" espontaneamente, o Estado dita regras. estas regras muitas vezes não são obrigatórias, são mais "sugestões" ou limites, como o salário mínimo, ou as 44 horas semanais de trabalho. a sociedade, porém, passa a usar esses valores como se fossem o normal. são raras, por exemplo, as ofertas de emprego que fogem à regra das 44h semanais. - **inflação**: subir os preços é difícil e constrangedor para as empresas, pedir aumento de salário é difícil e constrangedor para o funcionário. a inflação força as pessoas a fazer isso, mas o aumento não é automático, como alguns economistas podem pensar (enquanto alguns outros ficam muito satisfeitos de que esse processo seja demorado e difícil). - **inflação**: a inflação destrói a capacidade das pessoas de julgar preços entre concorrentes usando a própria memória. - **inflação**: a inflação destrói os cálculos de lucro/prejuízo das empresas e prejudica enormemente as decisões empresariais que seriam baseadas neles. - **inflação**: a inflação redistribui a riqueza dos mais pobres e mais afastados do sistema financeiro para os mais ricos, os bancos e as megaempresas. - **inflação**: a inflação estimula o endividamento e o consumismo. - **lixo:** ao prover coleta e armazenamento de lixo "grátis para todos" o Estado incentiva a criação de lixo. se tivessem que pagar para que recolhessem o seu lixo, as pessoas (e conseqüentemente as empresas) se empenhariam mais em produzir coisas usando menos plástico, menos embalagens, menos sacolas. - **leis contra crimes financeiros:** ao criar legislação para dificultar acesso ao sistema financeiro por parte de criminosos a dificuldade e os custos para acesso a esse mesmo sistema pelas pessoas de bem cresce absurdamente, levando a um percentual enorme de gente incapaz de usá-lo, para detrimento de todos -- e no final das contas os grandes criminosos ainda conseguem burlar tudo. -

@ f977c464:32fcbe00

2024-01-11 18:47:47

@ f977c464:32fcbe00

2024-01-11 18:47:47Kendisini aynada ilk defa gördüğü o gün, diğerleri gibi olduğunu anlamıştı. Oysaki her insan biricik olmalıydı. Sözgelimi sinirlendiğinde bir kaşı diğerinden birkaç milimetre daha az çatılabilirdi veya sevindiğinde dudağı ona has bir açıyla dalgalanabilirdi. Hatta bunların hiçbiri mümkün değilse, en azından, gözlerinin içinde sadece onun sahip olabileceği bir ışık parlayabilirdi. Çok sıradan, öyle sıradan ki kimsenin fark etmediği o milyonlarca minik şeyden herhangi biri. Ne olursa.

Ama yansımasına bakarken bunların hiçbirini bulamadı ve diğer günlerden hiç de farklı başlamamış o gün, işe gitmek için vagonunun gelmesini beklediği alelade bir metro istasyonunda, içinde kaybolduğu illüzyon dağılmaya başladı.

İlk önce derisi döküldü. Tam olarak dökülmedi aslında, daha çok kıvılcımlara dönüşüp bedeninden fırlamış ve bir an sonra sönerek külleşmiş, havada dağılmıştı. Ardında da, kaybolmadan hemen önce, kısa süre için hayal meyal görülebilen, bir ruhun yok oluşuna ağıt yakan rengârenk peri cesetleri bırakmıştı. Beklenenin aksine, havaya toz kokusu yayıldı.

Dehşete düştü elbette. Dehşete düştüler. Panikle üstlerini yırtan 50 işçi. Her şeyin sebebiyse o vagon.

Saçları da döküldü. Her tel, yere varmadan önce, her santimde ikiye ayrıla ayrıla yok oldu.

Bütün yüzeylerin mat olduğu, hiçbir şeyin yansımadığı, suyun siyah aktığı ve kendine ancak kameralarla bakabildiğin bir dünyada, vagonun içine yerleştirilmiş bir aynadan ilk defa kendini görmek.

Gözlerinin akları buharlaşıp havada dağıldı, mercekleri boşalan yeri doldurmak için eriyip yayıldı. Gerçeği görmemek için yaratılmış, bu yüzden görmeye hazır olmayan ve hiç olmayacak gözler.

Her şeyin o anda sona erdiğini sanabilirdi insan. Derin bir karanlık ve ölüm. Görmenin görmek olduğu o anın bitişi.

Ben geldiğimde ölmüşlerdi.

Yani bozulmuşlardı demek istiyorum.

Belleklerini yeni taşıyıcılara takmam mümkün olmadı. Fiziksel olarak kusursuz durumdaydılar, olmayanları da tamir edebilirdim ama tüm o hengamede kendilerini baştan programlamış ve girdilerini modifiye etmişlerdi.

Belleklerden birini masanın üzerinden ileriye savurdu. Hınca hınç dolu bir barda oturuyorlardı. O ve arkadaşı.

Sırf şu kendisini insan sanan androidler travma geçirip delirmesin diye neler yapıyoruz, insanın aklı almıyor.

Eliyle arkasını işaret etti.

Polislerin söylediğine göre biri vagonun içerisine ayna yerleştirmiş. Bu zavallılar da kapı açılıp bir anda yansımalarını görünce kafayı kırmışlar.

Arkadaşı bunların ona ne hissettirdiğini sordu. Yani o kadar bozuk, insan olduğunu sanan androidi kendilerini parçalamış olarak yerde görmek onu sarsmamış mıydı?

Hayır, sonuçta belirli bir amaç için yaratılmış şeyler onlar. Kaliteli bir bilgisayarım bozulduğunda üzülürüm çünkü parasını ben vermişimdir. Bunlarsa devletin. Bana ne ki?

Arkadaşı anlayışla kafasını sallayıp suyundan bir yudum aldı. Kravatını biraz gevşetti.

Bira istemediğinden emin misin?

İstemediğini söyledi. Sahi, neden deliriyordu bu androidler?

Basit. Onların yapay zekâlarını kodlarken bir şeyler yazıyorlar. Yazılımcılar. Biliyorsun, ben donanımdayım. Bunlar da kendilerini insan sanıyorlar. Tiplerine bak.

Sesini alçalttı.

Arabalarda kaza testi yapılan mankenlere benziyor hepsi. Ağızları burunları bile yok ama şu geldiğimizden beri sakalını düzeltip duruyor mesela. Hayır, hepsi de diğerleri onun sakalı varmış sanıyor, o manyak bir şey.

Arkadaşı bunun delirmeleriyle bağlantısını çözemediğini söyledi. O da normal sesiyle konuşmaya devam etti.

Anlasana, aynayı falan ayırt edemiyor mercekleri. Lönk diye kendilerini görüyorlar. Böyle, olduğu gibi...

Nedenmiş peki? Ne gerek varmış?

Ne bileyim be abicim! Ahiret soruları gibi.

Birasına bakarak dalıp gitti. Sonra masaya abanarak arkadaşına iyice yaklaştı. Bulanık, bir tünelin ucundaki biri gibi, şekli şemalı belirsiz bir adam.

Ben seni nereden tanıyorum ki ulan? Kimsin sen?

Belleği makineden çıkardılar. İki kişiydiler. Soruşturmadan sorumlu memurlar.

─ Baştan mı başlıyoruz, diye sordu belleği elinde tutan ilk memur.

─ Bir kere daha deneyelim ama bu sefer direkt aynayı sorarak başla, diye cevapladı ikinci memur.

─ Bence de. Yeterince düzgün çalışıyor.

Simülasyon yüklenirken, ayakta, biraz arkada duran ve alnını kaşıyan ikinci memur sormaktan kendisini alamadı:

─ Bu androidleri niye böyle bir olay yerine göndermişler ki? Belli tost olacakları. İsraf. Gidip biz baksak aynayı kırıp delilleri mahvetmek zorunda da kalmazlar.

Diğer memur sandalyesinde hafifçe dönecek oldu, o sırada soruyu bilgisayarın hoparlöründen teknisyen cevapladı.

Hangi işimizde bir yamukluk yok ki be abi.

Ama bir son değildi. Üstlerindeki tüm illüzyon dağıldığında ve çıplak, cinsiyetsiz, birbirinin aynı bedenleriyle kaldıklarında sıra dünyaya gelmişti.

Yere düştüler. Elleri -bütün bedeni gibi siyah turmalinden, boğumları çelikten- yere değdiği anda, metronun zemini dağıldı.

Yerdeki karolar öncesinde beyazdı ve çok parlaktı. Tepelerindeki floresan, ışığını olduğu gibi yansıtıyor, tek bir lekenin olmadığı ve tek bir tozun uçmadığı istasyonu aydınlatıyorlardı.

Duvarlara duyurular asılmıştı. Örneğin, yarın akşam kültür merkezinde 20.00’da başlayacak bir tekno blues festivalinin cıvıl cıvıl afişi vardı. Onun yanında daha geniş, sarı puntolu harflerle yazılmış, yatay siyah kesiklerle çerçevesi çizilmiş, bir platformdan düşen çöp adamın bulunduğu “Dikkat! Sarı bandı geçmeyin!” uyarısı. Biraz ilerisinde günlük resmi gazete, onun ilerisinde bir aksiyon filminin ve başka bir romantik komedi filminin afişleri, yapılacakların ve yapılmayacakların söylendiği küçük puntolu çeşitli duyurular... Duvar uzayıp giden bir panoydu. On, on beş metrede bir tekrarlanıyordu.

Tüm istasyonun eni yüz metre kadar. Genişliği on metre civarı.

Önlerinde, açık kapısından o mendebur aynanın gözüktüğü vagon duruyordu. Metro, istasyona sığmayacak kadar uzundu. Bir kılıcın keskinliğiyle uzanıyor ama yer yer vagonların ek yerleriyle bölünüyordu.

Hiçbir vagonda pencere olmadığı için metronun içi, içlerindekiler meçhuldü.

Sonrasında karolar zerrelerine ayrılarak yükseldi. Floresanın ışığında her yeri toza boğdular ve ortalığı gri bir sisin altına gömdüler. Çok kısa bir an. Afişleri dalgalandırmadılar. Dalgalandırmaya vakitleri olmadı. Yerlerinden söküp aldılar en fazla. Işık birkaç kere sönüp yanarak direndi. Son kez söndüğünde bir daha geri gelmedi.

Yine de etraf aydınlıktı. Kırmızı, her yere eşit dağılan soluk bir ışıkla.

Yer tamamen tele dönüşmüştü. Altında çapraz hatlarla desteklenmiş demir bir iskelet. Işık birkaç metreden daha fazla aşağıya uzanamıyordu. Sonsuzluğa giden bir uçurum.

Duvarın yerini aynı teller ve demir iskelet almıştı. Arkasında, birbirine vidalarla tutturulmuş demir plakalardan oluşan, üstünden geçen boruların ek yerlerinden bazen ince buharların çıktığı ve bir süre asılı kaldıktan sonra ağır, yağlı bir havayla sürüklendiği bir koridor.

Diğer tarafta paslanmış, pencerelerindeki camlar kırıldığı için demir plakalarla kapatılmış külüstür bir metro. Kapının karşısındaki aynadan her şey olduğu gibi yansıyordu.

Bir konteynırın içini andıran bir evde, gerçi gayet de birbirine eklenmiş konteynırlardan oluşan bir şehirde “andıran” demek doğru olmayacağı için düpedüz bir konteynırın içinde, masaya mum görüntüsü vermek için koyulmuş, yarı katı yağ atıklarından şekillendirilmiş kütleleri yakmayı deniyordu. Kafasında hayvan kıllarından yapılmış grili siyahlı bir peruk. Aynı kıllardan kendisine gür bir bıyık da yapmıştı.

Üstünde mavi çöp poşetlerinden yapılmış, kravatlı, şık bir takım.

Masanın ayakları yerine oradan buradan çıkmış parçalar konulmuştu: bir arabanın şaft mili, üst üste konulmuş ve üstünde yazı okunamayan tenekeler, boş kitaplar, boş gazete balyaları... Hiçbir şeye yazı yazılmıyordu, gerek yoktu da zaten çünkü merkez veri bankası onları fark ettirmeden, merceklerden giren veriyi sentezleyerek insanlar için dolduruyordu. Yani, androidler için. Farklı şekilde isimlendirmek bir fark yaratacaksa.

Onların mercekleri için değil. Bağlantıları çok önceden kopmuştu.

─ Hayatım, sofra hazır, diye bağırdı yatak odasındaki karısına.

Sofrada tabak yerine düz, bardak yerine bükülmüş, çatal ve bıçak yerine sivriltilmiş plakalar.

Karısı salonun kapısında durakladı ve ancak kulaklarına kadar uzanan, kocasınınkine benzeyen, cansız, ölü hayvanların kıllarından ibaret peruğunu eliyle düzeltti. Dudağını, daha doğrusu dudağının olması gereken yeri koyu kırmızı bir yağ tabakasıyla renklendirmeyi denemişti. Biraz da yanaklarına sürmüştü.

─ Nasıl olmuş, diye sordu.

Sesi tek düzeydi ama hafif bir neşe olduğunu hissettiğinize yemin edebilirdiniz.

Üzerinde, çöp poşetlerinin içini yazısız gazete kağıtlarıyla doldurarak yaptığı iki parça giysi.

─ Çok güzelsin, diyerek kravatını düzeltti kocası.

─ Sen de öylesin, sevgilim.

Yaklaşıp kocasını öptü. Kocası da onu. Sonra nazikçe elinden tutarak, sandalyesini geriye çekerek oturmasına yardım etti.

Sofrada yemek niyetine hiçbir şey yoktu. Gerek de yoktu zaten.

Konteynırın kapısı gürültüyle tekmelenip içeri iki memur girene kadar birbirlerine öyküler anlattılar. O gün neler yaptıklarını. İşten erken çıkıp yemyeşil çimenlerde gezdiklerini, uçurtma uçurduklarını, kadının nasıl o elbiseyi bulmak için saatlerce gezip yorulduğunu, kocasının kısa süreliğine işe dönüp nasıl başarılı bir hamleyle yaşanan krizi çözdüğünü ve kadının yanına döndükten sonra, alışveriş merkezinde oturdukları yeni dondurmacının dondurmalarının ne kadar lezzetli olduğunu, boğazlarının ağrımasından korktuklarını...

Akşam film izleyebilirlerdi, televizyonda -boş ve mat bir plaka- güzel bir film oynayacaktı.

İki memur. Çıplak bedenleriyle birbirinin aynı. Ellerindeki silahları onlara doğrultmuşlardı. Mum ışığında, tertemiz bir örtünün serili olduğu masada, bardaklarında şaraplarla oturan ve henüz sofranın ortasındaki hindiye dokunmamış çifti gördüklerinde bocaladılar.

Hiç de androidlere bilinçli olarak zarar verebilecek gibi gözükmüyorlardı.

─ Sessiz kalma hakkına sahipsiniz, diye bağırdı içeri giren ikinci memur. Söylediğiniz her şey...

Cümlesini bitiremedi. Yatak odasındaki, masanın üzerinden gördüğü o şey, onunla aynı hareketleri yapan android, yoksa, bir aynadaki yansıması mıydı?

Bütün illüzyon o anda dağılmaya başladı.

Not: Bu öykü ilk olarak 2020 yılında Esrarengiz Hikâyeler'de yayımlanmıştır.

-

@ 8fb140b4:f948000c

2023-11-21 21:37:48

@ 8fb140b4:f948000c

2023-11-21 21:37:48Embarking on the journey of operating your own Lightning node on the Bitcoin Layer 2 network is more than just a tech-savvy endeavor; it's a step into a realm of financial autonomy and cutting-edge innovation. By running a node, you become a vital part of a revolutionary movement that's reshaping how we think about money and digital transactions. This role not only offers a unique perspective on blockchain technology but also places you at the heart of a community dedicated to decentralization and network resilience. Beyond the technicalities, it's about embracing a new era of digital finance, where you contribute directly to the network's security, efficiency, and growth, all while gaining personal satisfaction and potentially lucrative rewards.

In essence, running your own Lightning node is a powerful way to engage with the forefront of blockchain technology, assert financial independence, and contribute to a more decentralized and efficient Bitcoin network. It's an adventure that offers both personal and communal benefits, from gaining in-depth tech knowledge to earning a place in the evolving landscape of cryptocurrency.

Running your own Lightning node for the Bitcoin Layer 2 network can be an empowering and beneficial endeavor. Here are 10 reasons why you might consider taking on this task:

-

Direct Contribution to Decentralization: Operating a node is a direct action towards decentralizing the Bitcoin network, crucial for its security and resistance to control or censorship by any single entity.

-

Financial Autonomy: Owning a node gives you complete control over your financial transactions on the network, free from reliance on third-party services, which can be subject to fees, restrictions, or outages.

-

Advanced Network Participation: As a node operator, you're not just a passive participant but an active player in shaping the network, influencing its efficiency and scalability through direct involvement.

-

Potential for Higher Revenue: With strategic management and optimal channel funding, your node can become a preferred route for transactions, potentially increasing the routing fees you can earn.

-

Cutting-Edge Technological Engagement: Running a node puts you at the forefront of blockchain and bitcoin technology, offering insights into future developments and innovations.

-

Strengthened Network Security: Each new node adds to the robustness of the Bitcoin network, making it more resilient against attacks and failures, thus contributing to the overall security of the ecosystem.

-

Personalized Fee Structures: You have the flexibility to set your own fee policies, which can balance earning potential with the service you provide to the network.

-

Empowerment Through Knowledge: The process of setting up and managing a node provides deep learning opportunities, empowering you with knowledge that can be applied in various areas of blockchain and fintech.

-

Boosting Transaction Capacity: By running a node, you help to increase the overall capacity of the Lightning Network, enabling more transactions to be processed quickly and at lower costs.

-

Community Leadership and Reputation: As an active node operator, you gain recognition within the Bitcoin community, which can lead to collaborative opportunities and a position of thought leadership in the space.

These reasons demonstrate the impactful and transformative nature of running a Lightning node, appealing to those who are deeply invested in the principles of bitcoin and wish to actively shape its future. Jump aboard, and embrace the journey toward full independence. 🐶🐾🫡🚀🚀🚀

-

-

@ 3bf0c63f:aefa459d

2024-01-29 02:19:25

@ 3bf0c63f:aefa459d

2024-01-29 02:19:25Nostr: a quick introduction, attempt #1

Nostr doesn't have a material existence, it is not a website or an app. Nostr is just a description what kind of messages each computer can send to the others and vice-versa. It's a very simple thing, but the fact that such description exists allows different apps to connect to different servers automatically, without people having to talk behind the scenes or sign contracts or anything like that.

When you use a Nostr client that is what happens, your client will connect to a bunch of servers, called relays, and all these relays will speak the same "language" so your client will be able to publish notes to them all and also download notes from other people.

That's basically what Nostr is: this communication layer between the client you run on your phone or desktop computer and the relay that someone else is running on some server somewhere. There is no central authority dictating who can connect to whom or even anyone who knows for sure where each note is stored.

If you think about it, Nostr is very much like the internet itself: there are millions of websites out there, and basically anyone can run a new one, and there are websites that allow you to store and publish your stuff on them.

The added benefit of Nostr is that this unified "language" that all Nostr clients speak allow them to switch very easily and cleanly between relays. So if one relay decides to ban someone that person can switch to publishing to others relays and their audience will quickly follow them there. Likewise, it becomes much easier for relays to impose any restrictions they want on their users: no relay has to uphold a moral ground of "absolute free speech": each relay can decide to delete notes or ban users for no reason, or even only store notes from a preselected set of people and no one will be entitled to complain about that.

There are some bad things about this design: on Nostr there are no guarantees that relays will have the notes you want to read or that they will store the notes you're sending to them. We can't just assume all relays will have everything — much to the contrary, as Nostr grows more relays will exist and people will tend to publishing to a small set of all the relays, so depending on the decisions each client takes when publishing and when fetching notes, users may see a different set of replies to a note, for example, and be confused.

Another problem with the idea of publishing to multiple servers is that they may be run by all sorts of malicious people that may edit your notes. Since no one wants to see garbage published under their name, Nostr fixes that by requiring notes to have a cryptographic signature. This signature is attached to the note and verified by everybody at all times, which ensures the notes weren't tampered (if any part of the note is changed even by a single character that would cause the signature to become invalid and then the note would be dropped). The fix is perfect, except for the fact that it introduces the requirement that each user must now hold this 63-character code that starts with "nsec1", which they must not reveal to anyone. Although annoying, this requirement brings another benefit: that users can automatically have the same identity in many different contexts and even use their Nostr identity to login to non-Nostr websites easily without having to rely on any third-party.

To conclude: Nostr is like the internet (or the internet of some decades ago): a little chaotic, but very open. It is better than the internet because it is structured and actions can be automated, but, like in the internet itself, nothing is guaranteed to work at all times and users many have to do some manual work from time to time to fix things. Plus, there is the cryptographic key stuff, which is painful, but cool.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28Parallel Chains

We want merged-mined blockchains. We want them because it is possible to do things in them that aren't doable in the normal Bitcoin blockchain because it is rightfully too expensive, but there are other things beside the world money that could benefit from a "distributed ledger" -- just like people believed in 2013 --, like issued assets and domain names (just the most obvious examples).

On the other hand we can't have -- like people believed in 2013 -- a copy of Bitcoin for every little idea with its own native token that is mined by proof-of-work and must get off the ground from being completely valueless into having some value by way of a miracle that operated only once with Bitcoin.

It's also not a good idea to have blockchains with custom merged-mining protocol (like Namecoin and Rootstock) that require Bitcoin miners to run their software and be an active participant and miner for that other network besides Bitcoin, because it's too cumbersome for everybody.

Luckily Ruben Somsen invented this protocol for blind merged-mining that solves the issue above. Although it doesn't solve the fact that each parallel chain still needs some form of "native" token to pay miners -- or it must use another method that doesn't use a native token, such as trusted payments outside the chain.

How does it work

With the

SIGHASH_NOINPUT/SIGHASH_ANYPREVOUTsoft-fork[^eltoo] it becomes possible to create presigned transactions that aren't related to any previous UTXO.Then you create a long sequence of transactions (sufficient to last for many many years), each with an

nLockTimeof 1 and each spending the next (you create them from the last to the first). Since theirscriptSig(the unlocking script) will useSIGHASH_ANYPREVOUTyou can obtain a transaction id/hash that doesn't include the previous TXO, you can, for example, in a sequence of transactionsA0-->B(B spends output 0 from A), include the signature for "spending A0 on B" inside thescriptPubKey(the locking script) of "A0".With the contraption described above it is possible to make that long string of transactions everybody will know (and know how to generate) but each transaction can only be spent by the next previously decided transaction, no matter what anyone does, and there always must be at least one block of difference between them.

Then you combine it with

RBF,SIGHASH_SINGLEandSIGHASH_ANYONECANPAYso parallel chain miners can add inputs and outputs to be able to compete on fees by including their own outputs and getting change back while at the same time writing a hash of the parallel block in the change output and you get everything working perfectly: everybody trying to spend the same output from the long string, each with a different parallel block hash, only the highest bidder will get the transaction included on the Bitcoin chain and thus only one parallel block will be mined.See also

[^eltoo]: The same thing used in Eltoo.

-

@ 8fb140b4:f948000c

2023-11-18 23:28:31

@ 8fb140b4:f948000c

2023-11-18 23:28:31Chef's notes

Serving these two dishes together will create a delightful centerpiece for your Thanksgiving meal, offering a perfect blend of traditional flavors with a homemade touch.

Details

- ⏲️ Prep time: 30 min

- 🍳 Cook time: 1 - 2 hours

- 🍽️ Servings: 4-6

Ingredients

- 1 whole turkey (about 12-14 lbs), thawed and ready to cook

- 1 cup unsalted butter, softened

- 2 tablespoons fresh thyme, chopped

- 2 tablespoons fresh rosemary, chopped

- 2 tablespoons fresh sage, chopped

- Salt and freshly ground black pepper

- 1 onion, quartered

- 1 lemon, halved

- 2-3 cloves of garlic

- Apple and Sage Stuffing

- 1 loaf of crusty bread, cut into cubes

- 2 apples, cored and chopped

- 1 onion, diced

- 2 stalks celery, diced

- 3 cloves garlic, minced

- 1/4 cup fresh sage, chopped

- 1/2 cup unsalted butter

- 2 cups chicken broth

- Salt and pepper, to taste

Directions

- Preheat the Oven: Set your oven to 325°F (165°C).

- Prepare the Herb Butter: Mix the softened butter with the chopped thyme, rosemary, and sage. Season with salt and pepper.

- Prepare the Turkey: Remove any giblets from the turkey and pat it dry. Loosen the skin and spread a generous amount of herb butter under and over the skin.

- Add Aromatics: Inside the turkey cavity, place the quartered onion, lemon halves, and garlic cloves.

- Roast: Place the turkey in a roasting pan. Tent with aluminum foil and roast. A general guideline is about 15 minutes per pound, or until the internal temperature reaches 165°F (74°C) at the thickest part of the thigh.

- Rest and Serve: Let the turkey rest for at least 20 minutes before carving.

- Next: Apple and Sage Stuffing

- Dry the Bread: Spread the bread cubes on a baking sheet and let them dry overnight, or toast them in the oven.

- Cook the Vegetables: In a large skillet, melt the butter and cook the onion, celery, and garlic until soft.

- Combine Ingredients: Add the apples, sage, and bread cubes to the skillet. Stir in the chicken broth until the mixture is moist. Season with salt and pepper.

- Bake: Transfer the stuffing to a baking dish and bake at 350°F (175°C) for about 30-40 minutes, until golden brown on top.

-

@ 8fb140b4:f948000c

2023-11-02 01:13:01

@ 8fb140b4:f948000c

2023-11-02 01:13:01Testing a brand new YakiHonne native client for iOS. Smooth as butter (not penis butter 🤣🍆🧈) with great visual experience and intuitive navigation. Amazing work by the team behind it! * lists * work

Bold text work!

Images could have used nostr.build instead of raw S3 from us-east-1 region.

Very impressive! You can even save the draft and continue later, before posting the long-form note!

🐶🐾🤯🤯🤯🫂💜

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28Blockchains are not decentralized data storage

People are used to saying and thinking that blockchains provide immutable data storage. Then many times they add a caveat that says blockchains are very expensive, so we can't really store too much data on them, but we can still store some data if we really want and are ok with paying for it.

But the fact is that blockchains cannot ever be used to store anything. The purpose of blockchains is to keep track of some state that everybody must agree upon at all times, and arbitrary data that anyone may have wanted to backup there is not relevant to anyone else[^relevant] and thus there are no incentives for anyone else to keep track of that. In other words: if you backup your personal pictures as

OP_RETURNoutputs on Bitcoin, people may delete that and your backup will be void[^op-return-invalid-outputs].Another thing blockchains supposedly do is to "broadcast" something. For example, nodes may delete the

OP_RETURNoutputs, but at least they have to verify these first, and spread they over the network, so you can broadcast your data and be sure everybody will get it. About this we can say two things: 1, if this happens, it's not a property of blockchains, but of the Bitcoin transaction sharing network that operates outside of the blockchain. 2, if you try to use that network for purposes that are irrelevant for the functioning of the Bitcoin protocol there is no incentive for other nodes to cooperate and they may ignore you.The above points may sound weird and you may be prompted to answer: but you can do all that today and there is no actual mechanism to stop anyone from broadcasting irrelevant crap!, and that is true. My point here is only that if you're thinking about blockchains as being this data-broadcast-storage mechanism you're thinking about them wrong, that is not an essential part of any blockchain. In other words: the incentives are not aligned for blockchains to be used like that (unless you come up with a scheme that makes data from everyone else to be relevant to everybody), in the long term such things are not expected to work and insisting on doing them will result in either your application or protocol that stores data on the blockchain to crash or in the death of the given blockchain (I hope Bitcoin haters don't read this).

(This is a counterpoint to myself on idea: Rumple, which was a protocol idea that relied on a blockchain storing irrelevant data.)

[^relevant]: For example, all Bitcoin transactions are relevant to all Bitcoin users because as a user the total supply and the ausence of double-spends are relevant, and also the fact that any of these transactions may end up being ancestors of transactions that you might receive in the future. [^op-return-invalid-outputs]: Of course you can still backup your pictures as invalid

P2PKHoutputs or something like that, then it will be harder for people to spot your data as irrelevant, but this is not a feature, it's a bug of Bitcoin that enables someone to spam other nodes in a way they can't detect it. If people started doing this a lot it would break Bitcoin. -

@ fa0165a0:03397073

2023-10-06 19:25:08

@ fa0165a0:03397073

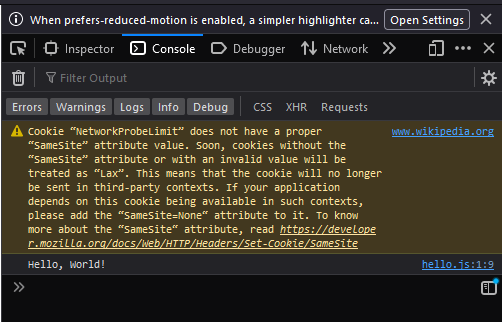

2023-10-06 19:25:08I just tested building a browser plugin, it was easier than I thought. Here I'll walk you through the steps of creating a minimal working example of a browser plugin, a.k.a. the "Hello World" of browser plugins.

First of all there are two main browser platforms out there, Chromium and Mozilla. They do some things a little differently, but similar enough that we can build a plugin that works on both. This plugin will work in both, I'll describe the firefox version, but the chromium version is very similar.

What is a browser plugin?

Simply put, a browser plugin is a program that runs in the browser. It can do things like modify the content of a webpage, or add new functionality to the browser. It's a way to extend the browser with custom functionality. Common examples are ad blockers, password managers, and video downloaders.

In technical terms, they are plugins that can insert html-css-js into your browser experience.

How to build a browser plugin

Step 0: Basics

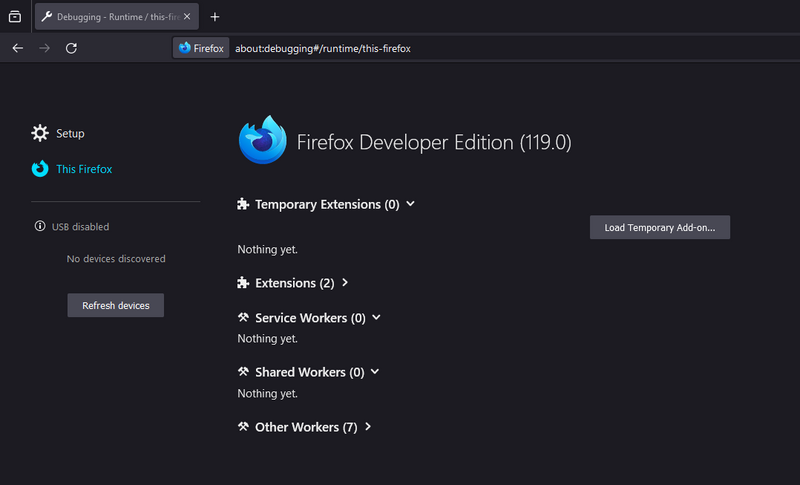

You'll need a computer, a text editor and a browser. For testing and development I personally think that the firefox developer edition is the easiest to work with. But any Chrome based browser will also do.

Create a working directory on your computer, name it anything you like. I'll call mine

hello-world-browser-plugin. Open the directory and create a file calledmanifest.json. This is the most important file of your plugin, and it must be named exactly right.Step 1: manifest.json

After creation open your file

manifest.jsonin your text editor and paste the following code:json { "manifest_version": 3, "name": "Hello World", "version": "1.0", "description": "A simple 'Hello World' browser extension", "content_scripts": [ { "matches": ["<all_urls>"], "js": ["hello.js"] //The name of your script file. // "css": ["hello.css"] //The name of your css file. } ] }If you wonder what the