-

@ 42342239:1d80db24

2024-04-05 08:21:50

@ 42342239:1d80db24

2024-04-05 08:21:50Trust is a topic increasingly being discussed. Whether it is trust in each other, in the media, or in our authorities, trust is generally seen as a cornerstone of a strong and well-functioning society. The topic was also the theme of the World Economic Forum at its annual meeting in Davos earlier this year. Even among central bank economists, the subject is becoming more prevalent. Last year, Agustín Carstens, head of the BIS ("the central bank of central banks"), said that "[w]ith trust, the public will be more willing to accept actions that involve short-term costs in exchange for long-term benefits" and that "trust is vital for policy effectiveness".

It is therefore interesting when central banks or others pretend as if nothing has happened even when trust has been shattered.

Just as in Sweden and in hundreds of other countries, Canada is planning to introduce a central bank digital currency (CBDC), a new form of money where the central bank or its intermediaries (the banks) will have complete insight into citizens' transactions. Payments or money could also be made programmable. Everything from transferring ownership of a car automatically after a successful payment to the seller, to payments being denied if you have traveled too far from home.

"If Canadians decide a digital dollar is necessary, our obligation is to be ready" says Carolyn Rogers, Deputy Head of Bank of Canada, in a statement shared in an article.

So, what do the citizens want? According to a report from the Bank of Canada, a whopping 88% of those surveyed believe that the central bank should refrain from developing such a currency. About the same number (87%) believe that authorities should guarantee the opportunity to pay with cash instead. And nearly four out of five people (78%) do not believe that the central bank will care about people's opinions. What about trust again?

Canadians' likely remember the Trudeau government's actions against the "Freedom Convoy". The Freedom Convoy consisted of, among others, truck drivers protesting the country's strict pandemic policies, blocking roads in the capital Ottawa at the beginning of 2022. The government invoked never-before-used emergency measures to, among other things, "freeze" people's bank accounts. Suddenly, truck drivers and those with a "connection" to the protests were unable to pay their electricity bills or insurances, for instance. Superficially, this may not sound so serious, but ultimately, it could mean that their families end up in cold houses (due to electricity being cut off) and that they lose the ability to work (driving uninsured vehicles is not taken lightly). And this applied not only to the truck drivers but also to those with a "connection" to the protests. No court rulings were required.

Without the freedom to pay for goods and services, i.e. the freedom to transact, one has no real freedom at all, as several participants in the protests experienced.

In January of this year, a federal judge concluded that the government's actions two years ago were unlawful when it invoked the emergency measures. The use did not display "features of rationality - motivation, transparency, and intelligibility - and was not justified in relation to the relevant factual and legal limitations that had to be considered". He also argued that the use was not in line with the constitution. There are also reports alleging that the government fabricated evidence to go after the demonstrators. The case is set to continue to the highest court. Prime Minister Justin Trudeau and Finance Minister Chrystia Freeland have also recently been sued for the government's actions.

The Trudeau government's use of emergency measures two years ago sadly only provides a glimpse of what the future may hold if CBDCs or similar systems replace the current monetary system with commercial bank money and cash. In Canada, citizens do not want the central bank to proceed with the development of a CBDC. In canada, citizens in Canada want to strengthen the role of cash. In Canada, citizens suspect that the central bank will not listen to them. All while the central bank feverishly continues working on the new system...

"Trust is vital", said Agustín Carstens. But if policy-makers do not pause for a thoughtful reflection even when trust has been utterly shattered as is the case in Canada, are we then not merely dealing with lip service?

And how much trust do these policy-makers then deserve?

-

@ 42342239:1d80db24

2024-03-31 11:23:36

@ 42342239:1d80db24

2024-03-31 11:23:36Biologist Stuart Kauffman introduced the concept of the "adjacent possible" in evolutionary biology in 1996. A bacterium cannot suddenly transform into a flamingo; rather, it must rely on small exploratory changes (of the "adjacent possible") if it is ever to become a beautiful pink flying creature. The same principle applies to human societies, all of which exemplify complex systems. It is indeed challenging to transform shivering cave-dwellers into a space travelers without numerous intermediate steps.

Imagine a water wheel – in itself, perhaps not such a remarkable invention. Yet the water wheel transformed the hard-to-use energy of water into easily exploitable rotational energy. A little of the "adjacent possible" had now been explored: water mills, hammer forges, sawmills, and textile factories soon emerged. People who had previously ground by hand or threshed with the help of oxen could now spend their time on other things. The principles of the water wheel also formed the basis for wind power. Yes, a multitude of possibilities arose – reminiscent of the rapid development during the Cambrian explosion. When the inventors of bygone times constructed humanity's first water wheel, they thus expanded the "adjacent possible". Surely, the experts of old likely sought swift prohibitions. Not long ago, our expert class claimed that the internet was going to be a passing fad, or that it would only have the same modest impact on the economy as the fax machine. For what it's worth, there were even attempts to ban the number zero back in the days.

The pseudonymous creator of Bitcoin, Satoshi Nakamoto, wrote in Bitcoin's whitepaper that "[w]e have proposed a system for electronic transactions without relying on trust." The Bitcoin system enables participants to agree on what is true without needing to trust each other, something that has never been possible before. In light of this, it is worth noting that trust in the federal government in the USA is among the lowest levels measured in almost 70 years. Trust in media is at record lows. Moreover, in countries like the USA, the proportion of people who believe that one can trust "most people" has decreased significantly. "Rebuilding trust" was even the theme of the World Economic Forum at its annual meeting. It is evident, even in the international context, that trust between countries is not at its peak.

Over a fifteen-year period, Bitcoin has enabled electronic transactions without its participants needing to rely on a central authority, or even on each other. This may not sound like a particularly remarkable invention in itself. But like the water wheel, one must acknowledge that new potential seems to have been put in place, potential that is just beginning to be explored. Kauffman's "adjacent possible" has expanded. And despite dogmatic statements to the contrary, no one can know for sure where this might lead.

The discussion of Bitcoin or crypto currencies would benefit from greater humility and openness, not only from employees or CEOs of money laundering banks but also from forecast-failing central bank officials. When for instance Chinese Premier Zhou Enlai in the 1970s was asked about the effects of the French Revolution, he responded that it was "too early to say" - a far wiser answer than the categorical response of the bureaucratic class. Isn't exploring systems not based on trust is exactly what we need at this juncture?

-

@ b12b632c:d9e1ff79

2024-03-23 16:42:49

@ b12b632c:d9e1ff79

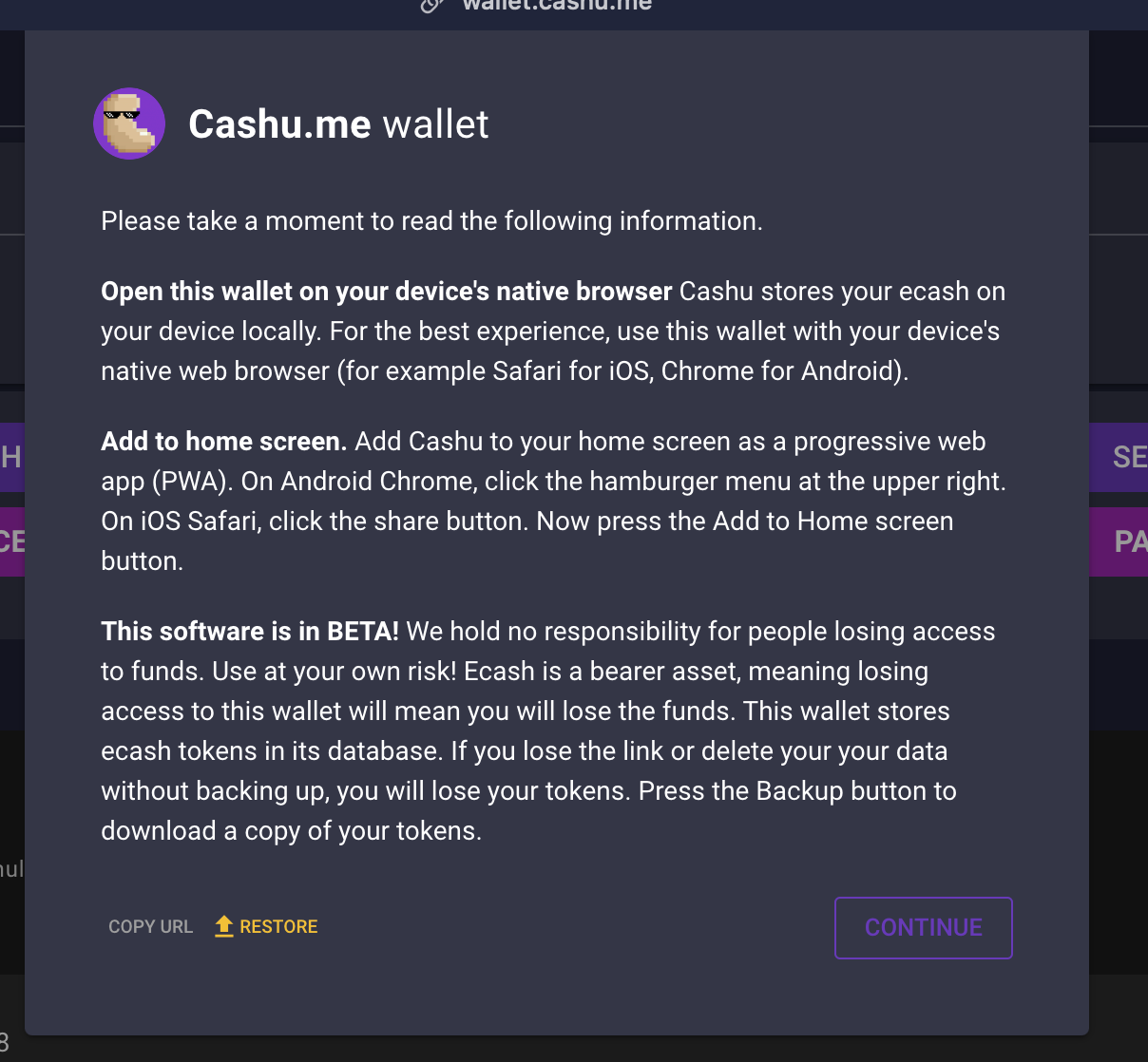

2024-03-23 16:42:49CASHU AND ECASH ARE EXPERIMENTAL PROJECTS. BY THE OWN NATURE OF CASHU ECASH, IT'S REALLY EASY TO LOSE YOUR SATS BY LACKING OF KNOWLEDGE OF THE SYSTEM MECHANICS. PLEASE, FOR YOUR OWN GOOD, ALWAYS USE FEW SATS AMOUNT IN THE BEGINNING TO FULLY UNDERSTAND HOW WORKS THE SYSTEM. ECASH IS BASED ON A TRUST RELATIONSHIP BETWEEN YOU AND THE MINT OWNER, PLEASE DONT TRUST ECASH MINT YOU DONT KNOW. IT IS POSSIBLE TO GENERATE UNLIMITED ECASH TOKENS FROM A MINT, THE ONLY WAY TO VALIDATE THE REAL EXISTENCE OF THE ECASH TOKENS IS TO DO A MULTIMINT SWAP (BETWEEN MINTS). PLEASE, ALWAYS DO A MULTISWAP MINT IF YOU RECEIVE SOME ECASH FROM SOMEONE YOU DON'T KNOW/TRUST. NEVER TRUST A MINT YOU DONT KNOW!

IF YOU WANT TO RUN AN ECASH MINT WITH A BTC LIGHTNING NODE IN BACK-END, PLEASE DEDICATE THIS LN NODE TO YOUR ECASH MINT. A BAD MANAGEMENT OF YOUR LN NODE COULD LET PEOPLE TO LOOSE THEIR SATS BECAUSE THEY HAD ONCE TRUSTED YOUR MINT AND YOU DID NOT MANAGE THE THINGS RIGHT.

What's ecash/Cashu ?

I recently listened a passionnating interview from calle 👁️⚡👁 invited by the podcast channel What Bitcoin Did about the new (not so much now) Cashu protocol.

Cashu is a a free and open-source Chaumian ecash project built for Bitcoin protocol, recently created in order to let users send/receive Ecash over BTC Lightning network. The main Cashu ecash goal is to finally give you a "by-design" privacy mechanism to allow us to do anonymous Bitcoin transactions.

Ecash for your privacy.\ A Cashu mint does not know who you are, what your balance is, or who you're transacting with. Users of a mint can exchange ecash privately without anyone being able to know who the involved parties are. Bitcoin payments are executed without anyone able to censor specific users.

Here are some useful links to begin with Cashu ecash :

Github repo: https://github.com/cashubtc

Documentation: https://docs.cashu.space

To support the project: https://docs.cashu.space/contribute

A Proof of Liabilities Scheme for Ecash Mints: https://gist.github.com/callebtc/ed5228d1d8cbaade0104db5d1cf63939

Like NOSTR and its own NIPS, here is the list of the Cashu ecash NUTs (Notation, Usage, and Terminology): https://github.com/cashubtc/nuts?tab=readme-ov-file

I won't explain you at lot more on what's Casu ecash, you need to figured out by yourself. It's really important in order to avoid any mistakes you could do with your sats (that you'll probably regret).

If you don't have so much time, you can check their FAQ right here: https://docs.cashu.space/faq

I strongly advise you to listen Calle's interviews @whatbbitcoindid to "fully" understand the concept and the Cashu ecash mechanism before using it:

Scaling Bitcoin Privacy with Calle

In the meantime I'm writing this article, Calle did another really interesting interview with ODELL from CitadelDispatch:

CD120: BITCOIN POWERED CHAUMIAN ECASH WITH CALLE

Which ecash apps?

There are several ways to send/receive some Ecash tokens, you can do it by using mobile applications like eNuts, Minibits or by using Web applications like Cashu.me, Nustrache or even npub.cash. On these topics, BTC Session Youtube channel offers high quality contents and very easy to understand key knowledge on how to use these applications :

Minibits BTC Wallet: Near Perfect Privacy and Low Fees - FULL TUTORIAL

Cashu Tutorial - Chaumian Ecash On Bitcoin

Unlock Perfect Privacy with eNuts: Instant, Free Bitcoin Transactions Tutorial

Cashu ecash is a very large and complex topic for beginners. I'm still learning everyday how it works and the project moves really fast due to its commited developpers community. Don't forget to follow their updates on Nostr to know more about the project but also to have a better undertanding of the Cashu ecash technical and political implications.

There is also a Matrix chat available if you want to participate to the project:

https://matrix.to/#/#cashu:matrix.org

How to self-host your ecash mint with Nutshell

Cashu Nutshell is a Chaumian Ecash wallet and mint for Bitcoin Lightning. Cashu Nutshell is the reference implementation in Python.

Github repo:

https://github.com/cashubtc/nutshell

Today, Nutshell is the most advanced mint in town to self-host your ecash mint. The installation is relatively straightforward with Docker because a docker-compose file is available from the github repo.

Nutshell is not the only cashu ecash mint server available, you can check other server mint here :

https://docs.cashu.space/mints

The only "external" requirement is to have a funding source. One back-end funding source where ecash will mint your ecash from your Sats and initiate BTC Lightning Netwok transactions between ecash mints and BTC Ligtning nodes during a multimint swap. Current backend sources supported are: FakeWallet*, LndRestWallet, CoreLightningRestWallet, BlinkWallet, LNbitsWallet, StrikeUSDWallet.

*FakeWallet is able to generate unlimited ecash tokens. Please use it carefully, ecash tokens issued by the FakeWallet can be sent and accepted as legit ecash tokens to other people ecash wallets if they trust your mint. In the other way, if someone send you 2,3M ecash tokens, please don't trust the mint in the first place. You need to force a multimint swap with a BTC LN transaction. If that fails, someone has maybe tried to fool you.

I used a Voltage.cloud BTC LN node instance to back-end my Nutshell ecash mint:

SPOILER: my nutshell mint is working but I have an error message "insufficient balance" when I ask a multiswap mint from wallet.cashu.me or the eNuts application. In order to make it work, I need to add some Sats liquidity (I can't right now) to the node and open few channels with good balance capacity. If you don't have an ecash mint capable of doig multiswap mint, you'll only be able to mint ecash into your ecash mint and send ecash tokens to people trusting your mint. It's working, yes, but you need to be able to do some mutiminit swap if you/everyone want to fully profit of the ecash system.

Once you created your account and you got your node, you need to git clone the Nutshell github repo:

git clone https://github.com/cashubtc/nutshell.gitYou next need to update the docker compose file with your own settings. You can comment the wallet container if you don't need it.

To generate a private key for your node, you can use this openssl command

openssl rand -hex 32 054de2a00a1d8e3038b30e96d26979761315cf48395aa45d866aeef358c91dd1The CLI Cashu wallet is not needed right now but I'll show you how to use it in the end of this article. Feel free to comment it or not.

``` version: "3" services: mint: build: context: . dockerfile: Dockerfile container_name: mint

ports:

- "3338:3338"

environment:- DEBUG=TRUE

- LOG_LEVEL=DEBUG

- MINT_URL=https://YourMintURL - MINT_HOST=YourMintDomain.tld - MINT_LISTEN_HOST=0.0.0.0 - MINT_LISTEN_PORT=3338 - MINT_PRIVATE_KEY=YourPrivateKeyFromOpenSSL - MINT_INFO_NAME=YourMintInfoName - MINT_INFO_DESCRIPTION=YourShortInfoDesc - MINT_INFO_DESCRIPTION_LONG=YourLongInfoDesc - MINT_LIGHTNING_BACKEND=LndRestWallet #- MINT_LIGHTNING_BACKEND=FakeWallet - MINT_INFO_CONTACT=[["email","YourConctact@email"], ["twitter","@YourTwitter"], ["nostr", "YourNPUB"]] - MINT_INFO_MOTD=Thanks for using my mint! - MINT_LND_REST_ENDPOINT=https://YourVoltageNodeDomain:8080 - MINT_LND_REST_MACAROON=YourDefaultAdminMacaroonBase64 - MINT_MAX_PEG_IN=100000 - MINT_MAX_PEG_OUT=100000 - MINT_PEG_OUT_ONLY=FALSE command: ["poetry", "run", "mint"]wallet-voltage: build: context: . dockerfile: Dockerfile container_name: wallet-voltage

ports:

- "4448:4448"

depends_on: - nutshell-voltage environment:- DEBUG=TRUE

- MINT_URL=http://nutshell-voltage:3338

- API_HOST=0.0.0.0 command: ["poetry", "run", "cashu", "-d"]```

To build, run and see the container logs:

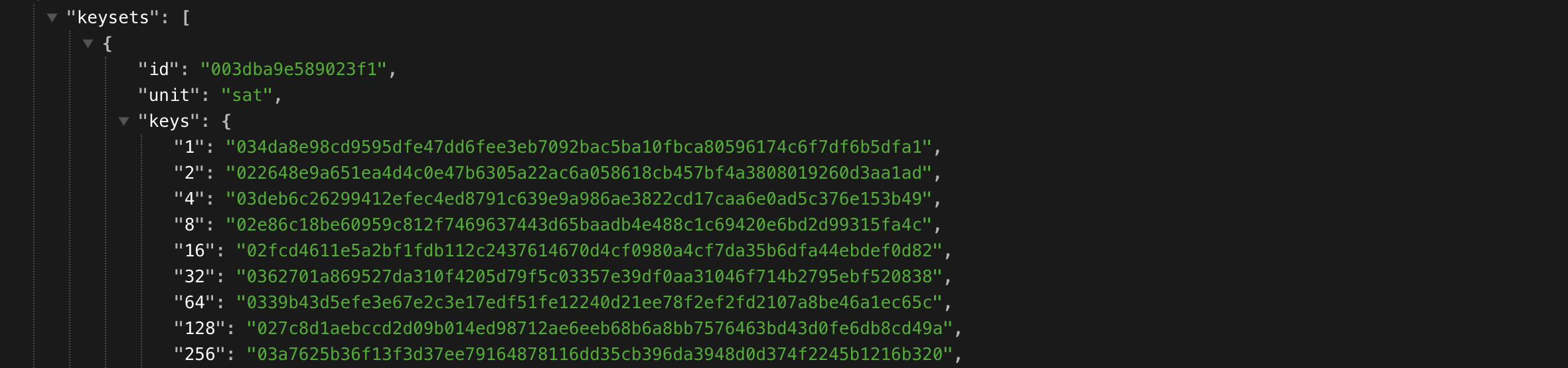

docker compose up -d && docker logs -f mint0.15.1 2024-03-22 14:45:45.490 | WARNING | cashu.lightning.lndrest:__init__:49 - no certificate for lndrest provided, this only works if you have a publicly issued certificate 2024-03-22 14:45:45.557 | INFO | cashu.core.db:__init__:135 - Creating database directory: data/mint 2024-03-22 14:45:45.68 | INFO | Started server process [1] 2024-03-22 14:45:45.69 | INFO | Waiting for application startup. 2024-03-22 14:45:46.12 | INFO | Loaded 0 keysets from database. 2024-03-22 14:45:46.37 | INFO | Current keyset: 003dba9e589023f1 2024-03-22 14:45:46.37 | INFO | Using LndRestWallet backend for method: 'bolt11' and unit: 'sat' 2024-03-22 14:45:46.97 | INFO | Backend balance: 1825000 sat 2024-03-22 14:45:46.97 | INFO | Data dir: /root/.cashu 2024-03-22 14:45:46.97 | INFO | Mint started. 2024-03-22 14:45:46.97 | INFO | Application startup complete. 2024-03-22 14:45:46.98 | INFO | Uvicorn running on http://0.0.0.0:3338 (Press CTRL+C to quit) 2024-03-22 14:45:47.27 | INFO | 172.19.0.22:48528 - "GET /v1/keys HTTP/1.1" 200 2024-03-22 14:45:47.34 | INFO | 172.19.0.22:48544 - "GET /v1/keysets HTTP/1.1" 200 2024-03-22 14:45:47.38 | INFO | 172.19.0.22:48552 - "GET /v1/info HTTP/1.1" 200If you see the line :

Uvicorn running on http://0.0.0.0:3338 (Press CTRL+C to quit)Nutshell is well started.

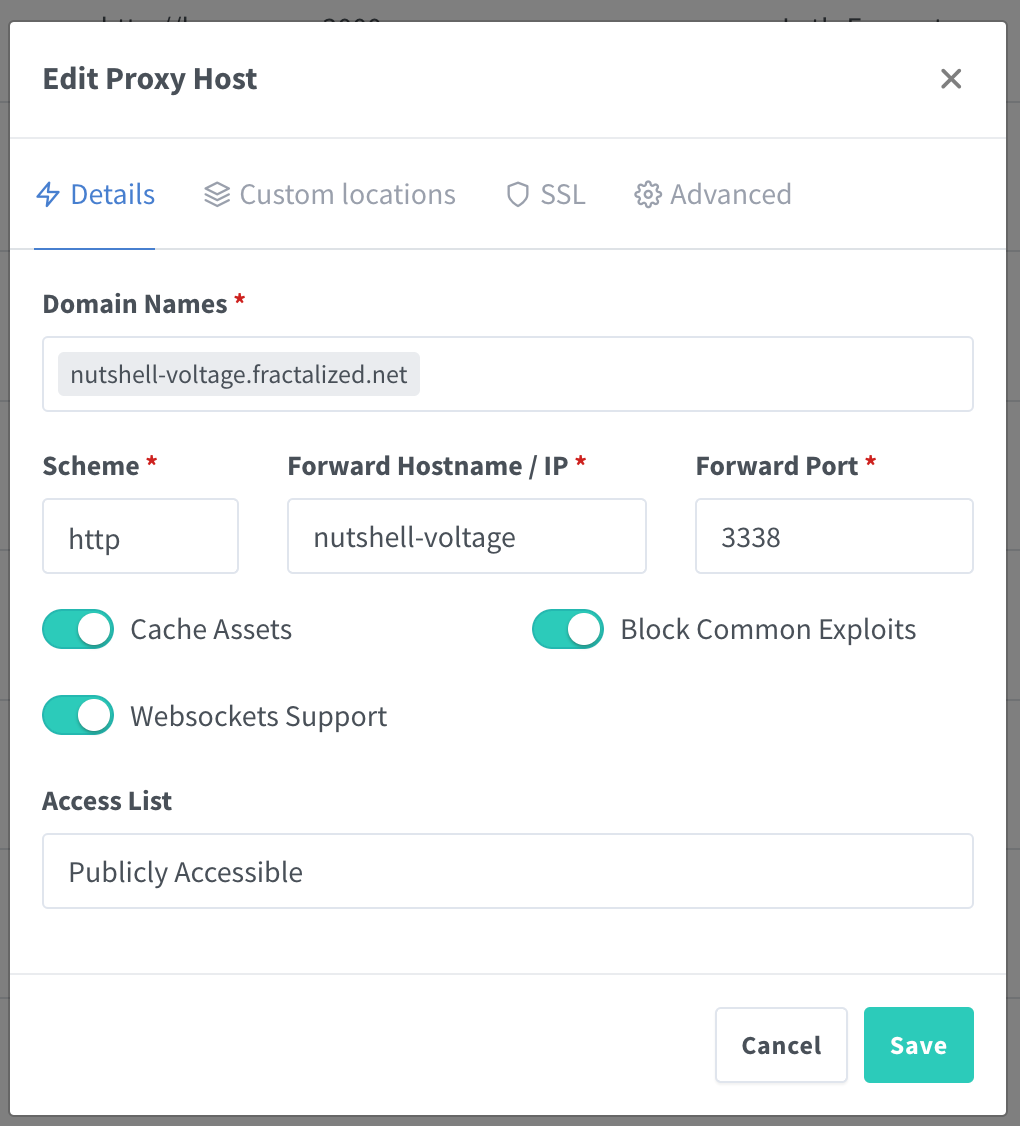

I won't explain here how to create a reverse proxy to Nutshell, you can find how to do it into my previous article. Here is the reverse proxy config into Nginx Proxy Manager:

If everything is well configured and if you go on your mint url (https://yourminturl) you shoud see this:

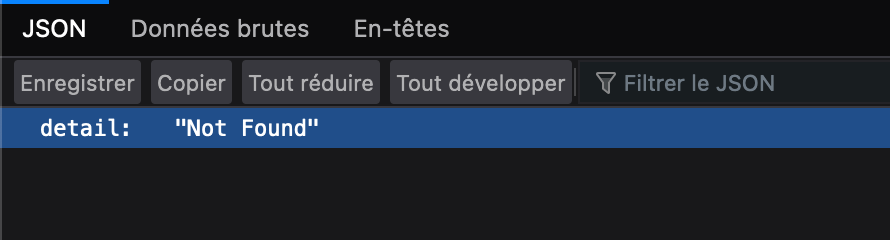

It's not helping a lot because at first glance it seems to be not working but it is. You can also check these URL path to confirm :

- https://yourminturl/keys and https://yourminturl/keysets

or

- https://yourminturl/v1/keys and https://yourminturl/v1/keysets

Depending of the moment when you read this article, the first URLs path might have been migrated to V1. Here is why:

https://github.com/cashubtc/nuts/pull/55

The final test is to add your mint to your prefered ecash wallets.

SPOILER: AT THIS POINT, YOU SHOUD KNOW THAT IF YOU RESET YOUR LOCAL BROWSER INTERNET CACHE FILE, YOU'LL LOSE YOUR MINTED ECASH TOKENS. IF NOT, PLEASE READ THE DOCUMENTATION AGAIN.

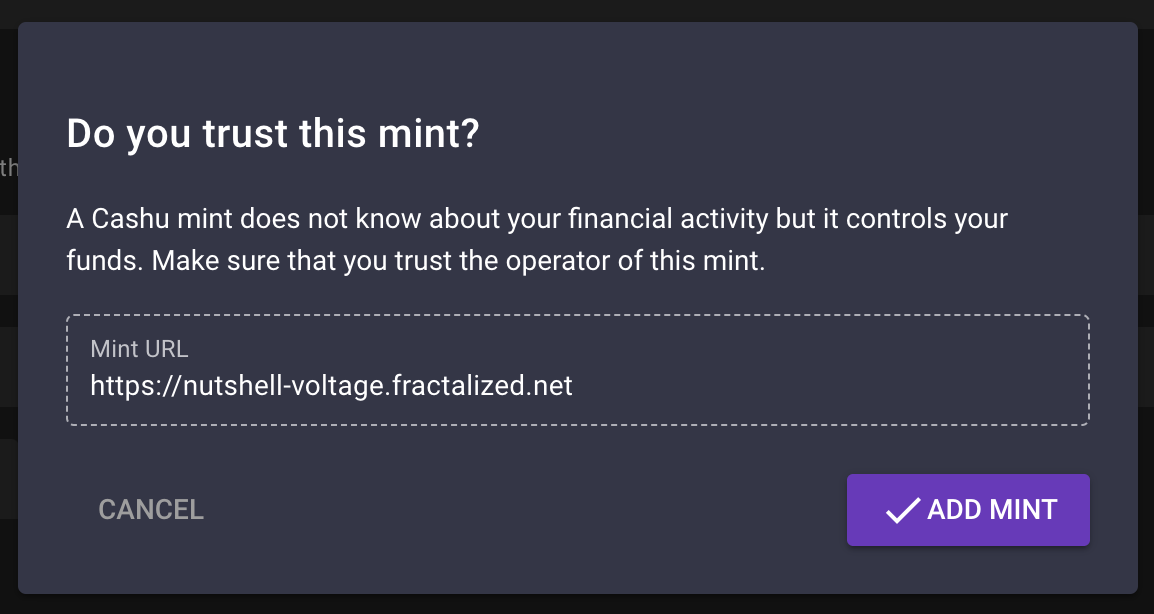

For instace, if we use wallet.cashu.me:

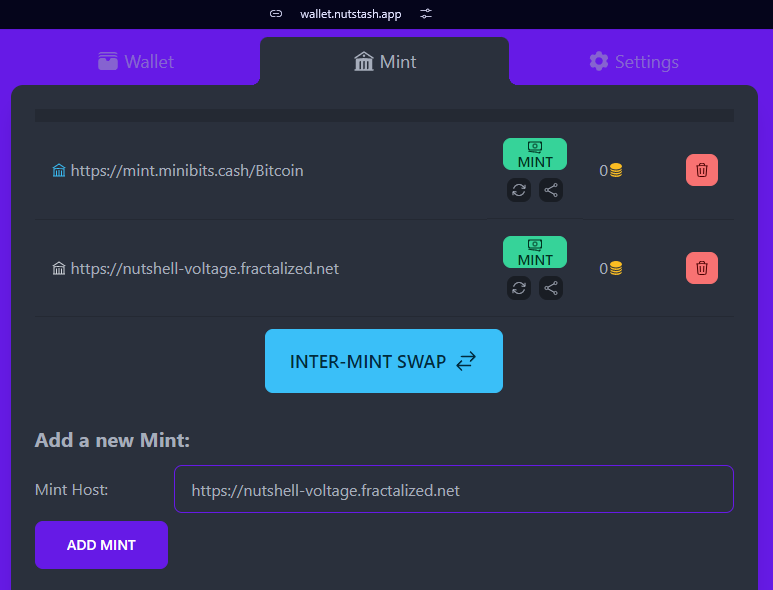

You can go into the "Settings" tab and add your mint :

If everything went find, you shoud see this :

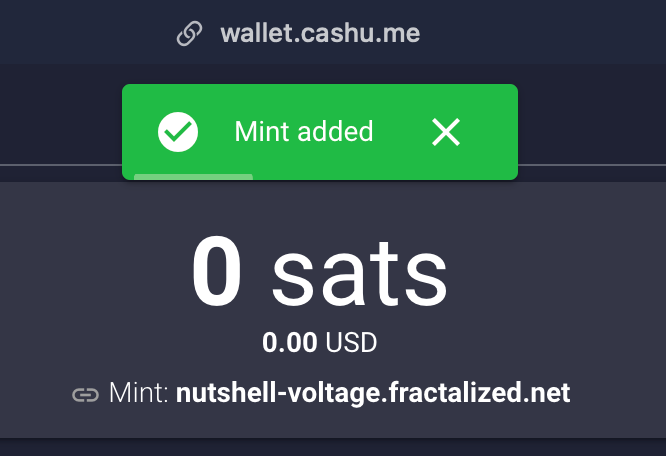

You can now mint some ecash from your mint creating a sats invoice :

You can now scan the QR diplayed with your prefered BTC LN wallet. If everything is OK, you should receive the funds:

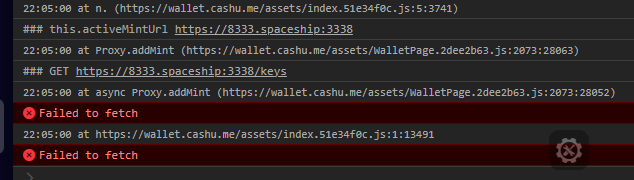

It may happen that some error popup sometimes. If you are curious and you want to know what happened, Cashu wallet has a debug console you can activate by clicking on the "Settings" page and "OPEN DEBUG TERMINAL". A little gear icon will be displayed in the bottom of the screen. You can click on it, go to settings and enable "Auto Display If Error Occurs" and "Display Extra Information". After enabling this setting, you can close the popup windows and let the gear icon enabled. If any error comes, this windows will open again and show you thé error:

Now that you have some sats in your balance, you can try to send some ecash. Open in a new windows another ecash wallet like Nutstach for instance.

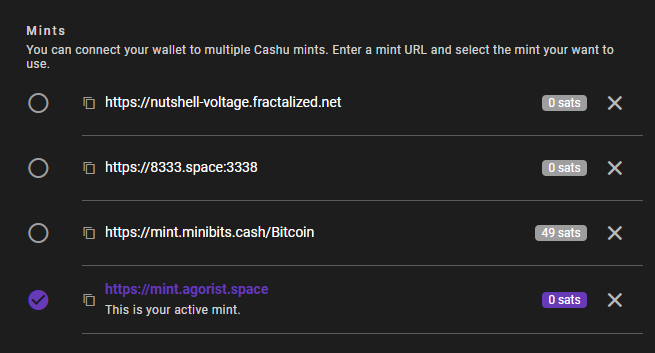

Add your mint again :

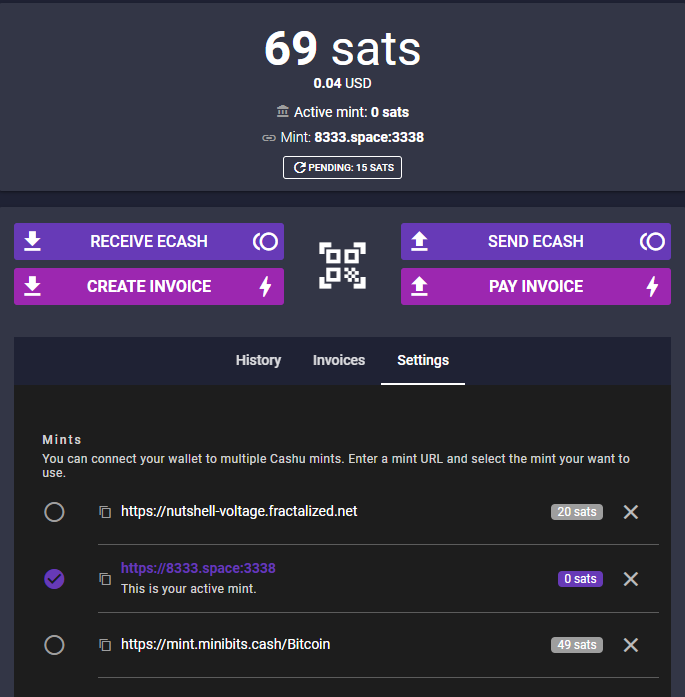

Return on Cashu wallet. The ecash token amount you see on the Cashu wallet home page is a total of all the ecash tokens you have on all mint connected.

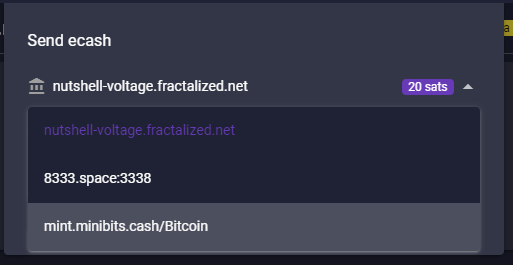

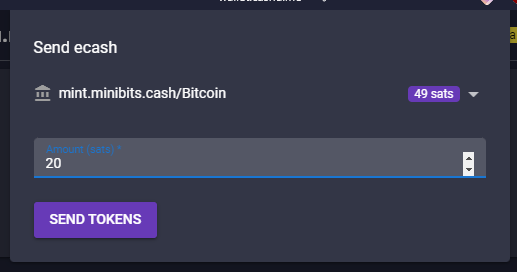

Next, click on "Send ecach". Insert the amout of ecash you want to transfer to your other wallet. You can select the wallet where you want to extract the funds by click on the little arrow near the sats funds you currenly selected :

Click now on "SEND TOKENS". That will open you a popup with a QR code and a code CONTAINING YOUR ECASH TOKENS (really).

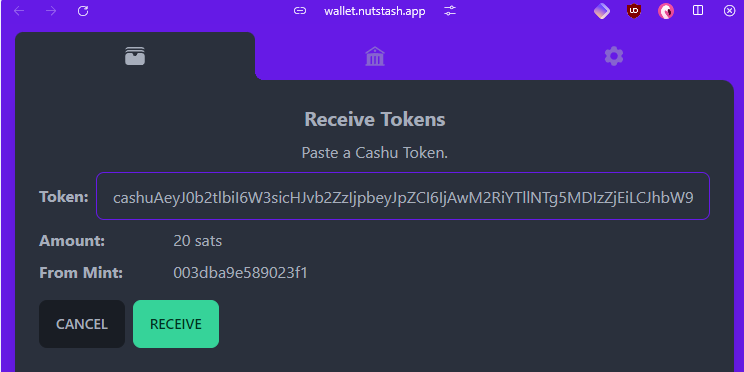

You can now return on nutstach, click on the "Receive" button and paste the code you get from Cashu wallet:

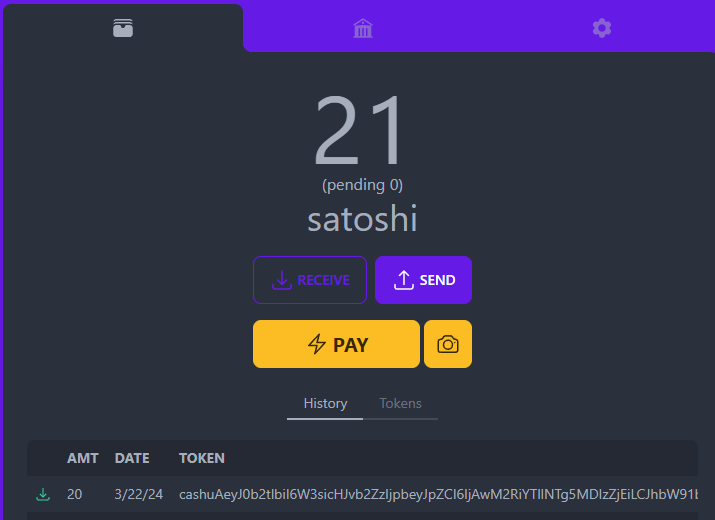

Click on "RECEIVE" again:

Congrats, you transfered your first ecash tokens to yourself ! 🥜⚡

You may need some time to transfer your ecash tokens between your wallets and your mint, there is a functionality existing for that called "Multimint swaps".

Before that, if you need new mints, you can check the very new website Bitcoinmints.com that let you see the existing ecash mints and rating :

Don't forget, choose your mint carefuly because you don't know who's behind.

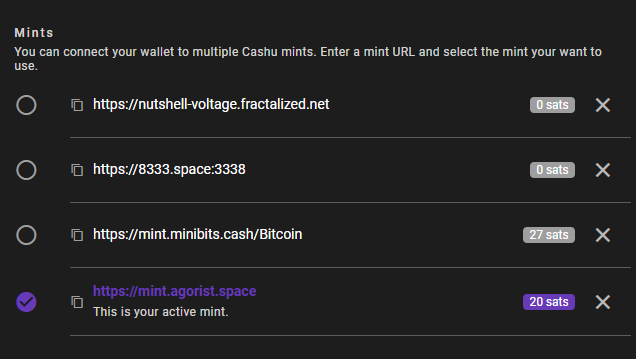

Let's take a mint and add it to our Cashu wallet:

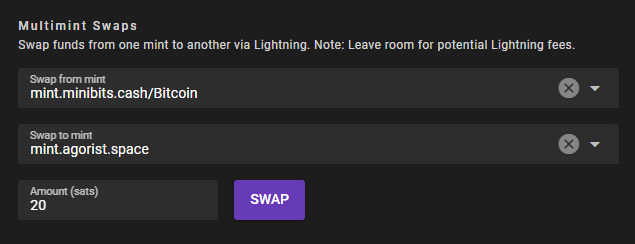

If you want to transfer let's say 20 sats from minibits mint to bitcointxoko mint, go just bottom into the "Multimint swap" section. Select the mint into "Swap from mint", the mint into "Swap to mint" and click on "SWAP" :

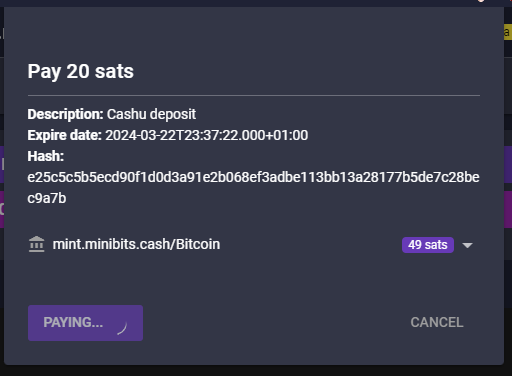

A popup window will appear and will request the ecash tokens from the source mint. It will automatically request the ecash amount via a Lightning node transaction and add the fund to your other wallet in the target mint. As it's a Lightning Network transaction, you can expect some little fees.

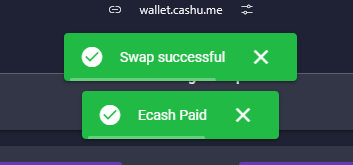

If everything is OK with the mints, the swap will be successful and the ecash received.

You can now see that the previous sats has been transfered (minus 2 fee sats).

Well done, you did your first multimint swap ! 🥜⚡

One last thing interresting is you can also use CLI ecash wallet. If you created the wallet contained in the docker compose, the container should be running.

Here are some commands you can do.

To verify which mint is currently connected :

``` docker exec -it wallet-voltage poetry run cashu info

2024-03-22 21:57:24.91 | DEBUG | cashu.wallet.wallet:init:738 | Wallet initialized 2024-03-22 21:57:24.91 | DEBUG | cashu.wallet.wallet:init:739 | Mint URL: https://nutshell-voltage.fractalized.net 2024-03-22 21:57:24.91 | DEBUG | cashu.wallet.wallet:init:740 | Database: /root/.cashu/wallet 2024-03-22 21:57:24.91 | DEBUG | cashu.wallet.wallet:init:741 | Unit: sat 2024-03-22 21:57:24.92 | DEBUG | cashu.wallet.wallet:init:738 | Wallet initialized 2024-03-22 21:57:24.92 | DEBUG | cashu.wallet.wallet:init:739 | Mint URL: https://nutshell-voltage.fractalized.net 2024-03-22 21:57:24.92 | DEBUG | cashu.wallet.wallet:init:740 | Database: /root/.cashu/wallet 2024-03-22 21:57:24.92 | DEBUG | cashu.wallet.wallet:init:741 | Unit: sat Version: 0.15.1 Wallet: wallet Debug: True Cashu dir: /root/.cashu Mints: - https://nutshell-voltage.fractalized.net ```

To verify your balance :

``` docker exec -it wallet-voltage poetry run cashu balance

2024-03-22 21:59:26.67 | DEBUG | cashu.wallet.wallet:init:738 | Wallet initialized 2024-03-22 21:59:26.67 | DEBUG | cashu.wallet.wallet:init:739 | Mint URL: https://nutshell-voltage.fractalized.net 2024-03-22 21:59:26.67 | DEBUG | cashu.wallet.wallet:init:740 | Database: /root/.cashu/wallet 2024-03-22 21:59:26.67 | DEBUG | cashu.wallet.wallet:init:741 | Unit: sat 2024-03-22 21:59:26.68 | DEBUG | cashu.wallet.wallet:init:738 | Wallet initialized 2024-03-22 21:59:26.68 | DEBUG | cashu.wallet.wallet:init:739 | Mint URL: https://nutshell-voltage.fractalized.net 2024-03-22 21:59:26.68 | DEBUG | cashu.wallet.wallet:init:740 | Database: /root/.cashu/wallet 2024-03-22 21:59:26.68 | DEBUG | cashu.wallet.wallet:init:741 | Unit: sat Balance: 0 sat ```

To create an sats invoice to have ecash :

``` docker exec -it wallet-voltage poetry run cashu invoice 20

2024-03-22 22:00:59.12 | DEBUG | cashu.wallet.wallet:_load_mint_info:275 | Mint info: name='nutshell.fractalized.net' pubkey='02008469922e985cbc5368ce16adb6ed1aaea0f9ecb21639db4ded2e2ae014a326' version='Nutshell/0.15.1' description='Official Fractalized Mint' description_long='TRUST THE MINT' contact=[['email', 'pastagringo@fractalized.net'], ['twitter', '@pastagringo'], ['nostr', 'npub1ky4kxtyg0uxgw8g5p5mmedh8c8s6sqny6zmaaqj44gv4rk0plaus3m4fd2']] motd='Thanks for using official ecash fractalized mint!' nuts={4: {'methods': [['bolt11', 'sat']], 'disabled': False}, 5: {'methods': [['bolt11', 'sat']], 'disabled': False}, 7: {'supported': True}, 8: {'supported': True}, 9: {'supported': True}, 10: {'supported': True}, 11: {'supported': True}, 12: {'supported': True}} Balance: 0 sat

Pay invoice to mint 20 sat:

Invoice: lnbc200n1pjlmlumpp5qh68cqlr2afukv9z2zpna3cwa3a0nvla7yuakq7jjqyu7g6y69uqdqqcqzzsxqyz5vqsp5zymmllsqwd40xhmpu76v4r9qq3wcdth93xthrrvt4z5ct3cf69vs9qyyssqcqppurrt5uqap4nggu5tvmrlmqs5guzpy7jgzz8szckx9tug4kr58t4avv4a6437g7542084c6vkvul0ln4uus7yj87rr79qztqldggq0cdfpy

You can use this command to check the invoice: cashu invoice 20 --id 2uVWELhnpFcNeFZj6fWzHjZuIipqyj5R8kM7ZJ9_

Checking invoice .................2024-03-22 22:03:25.27 | DEBUG | cashu.wallet.wallet:verify_proofs_dleq:1103 | Verified incoming DLEQ proofs. Invoice paid.

Balance: 20 sat ```

To pay an invoice by pasting the invoice you received by your or other people :

``` docker exec -it wallet-voltage poetry run cashu pay lnbc150n1pjluqzhpp5rjezkdtt8rjth4vqsvm50xwxtelxjvkq90lf9tu2thsv2kcqe6vqdq2f38xy6t5wvcqzzsxqrpcgsp58q9sqkpu0c6s8hq5pey8ls863xmjykkumxnd8hff3q4fvxzyh0ys9qyyssq26ytxay6up54useezjgqm3cxxljvqw5vq2e94ru7ytqc0al74hr4nt5cwpuysgyq8u25xx5la43mx4ralf3mq2425xmvhjzvwzqp54gp0e3t8e

2024-03-22 22:04:37.23 | DEBUG | cashu.wallet.wallet:_load_mint_info:275 | Mint info: name='nutshell.fractalized.net' pubkey='02008469922e985cbc5368ce16adb6ed1aaea0f9ecb21639db4ded2e2ae014a326' version='Nutshell/0.15.1' description='Official Fractalized Mint' description_long='TRUST THE MINT' contact=[['email', 'pastagringo@fractalized.net'], ['twitter', '@pastagringo'], ['nostr', 'npub1ky4kxtyg0uxgw8g5p5mmedh8c8s6sqny6zmaaqj44gv4rk0plaus3m4fd2']] motd='Thanks for using official ecash fractalized mint!' nuts={4: {'methods': [['bolt11', 'sat']], 'disabled': False}, 5: {'methods': [['bolt11', 'sat']], 'disabled': False}, 7: {'supported': True}, 8: {'supported': True}, 9: {'supported': True}, 10: {'supported': True}, 11: {'supported': True}, 12: {'supported': True}} Balance: 20 sat 2024-03-22 22:04:37.45 | DEBUG | cashu.wallet.wallet:get_pay_amount_with_fees:1529 | Mint wants 0 sat as fee reserve. 2024-03-22 22:04:37.45 | DEBUG | cashu.wallet.cli.cli:pay:189 | Quote: quote='YpNkb5f6WVT_5ivfQN1OnPDwdHwa_VhfbeKKbBAB' amount=15 fee_reserve=0 paid=False expiry=1711146847 Pay 15 sat? [Y/n]: y Paying Lightning invoice ...2024-03-22 22:04:41.13 | DEBUG | cashu.wallet.wallet:split:613 | Calling split. POST /v1/swap 2024-03-22 22:04:41.21 | DEBUG | cashu.wallet.wallet:verify_proofs_dleq:1103 | Verified incoming DLEQ proofs. Error paying invoice: Mint Error: Lightning payment unsuccessful. insufficient_balance (Code: 20000) ```

It didn't work, yes. That's the thing I told you earlier but it would work with a well configured and balanced Lightning Node.

That's all ! You should now be able to use ecash as you want! 🥜⚡

See you on NOSTR! 🤖⚡\ PastaGringo

-

@ ee11a5df:b76c4e49

2024-03-22 23:49:09

@ ee11a5df:b76c4e49

2024-03-22 23:49:09Implementing The Gossip Model

version 2 (2024-03-23)

Introduction

History

The gossip model is a general concept that allows clients to dynamically follow the content of people, without specifying which relay. The clients have to figure out where each person puts their content.

Before NIP-65, the gossip client did this in multiple ways:

- Checking kind-3 contents, which had relay lists for configuring some clients (originally Astral and Damus), and recognizing that wherever they were writing our client could read from.

- NIP-05 specifying a list of relays in the

nostr.jsonfile. I added this to NIP-35 which got merged down into NIP-05. - Recommended relay URLs that are found in 'p' tags

- Users manually making the association

- History of where events happen to have been found. Whenever an event came in, we associated the author with the relay.

Each of these associations were given a score (recommended relay urls are 3rd party info so they got a low score).

Later, NIP-65 made a new kind of relay list where someone could advertise to others which relays they use. The flag "write" is now called an OUTBOX, and the flag "read" is now called an INBOX.

The idea of inboxes came about during the development of NIP-65. They are a way to send an event to a person to make sure they get it... because putting it on your own OUTBOX doesn't guarantee they will read it -- they may not follow you.

The outbox model is the use of NIP-65. It is a subset of the gossip model which uses every other resource at it's disposal.

Rationale

The gossip model keeps nostr decentralized. If all the (major) clients were using it, people could spin up small relays for both INBOX and OUTBOX and still be fully connected, have their posts read, and get replies and DMs. This is not to say that many people should spin up small relays. But the task of being decentralized necessitates that people must be able to spin up their own relay in case everybody else is censoring them. We must make it possible. In reality, congregating around 30 or so popular relays as we do today is not a problem. Not until somebody becomes very unpopular with bitcoiners (it will probably be a shitcoiner), and then that person is going to need to leave those popular relays and that person shouldn't lose their followers or connectivity in any way when they do.

A lot more rationale has been discussed elsewhere and right now I want to move on to implementation advice.

Implementation Advice

Read NIP-65

NIP-65 will contain great advice on which relays to consult for which purposes. This post does not supersede NIP-65. NIP-65 may be getting some smallish changes, mostly the addition of a private inbox for DMs, but also changes to whether you should read or write to just some or all of a set of relays.

How often to fetch kind-10002 relay lists for someone

This is up to you. Refreshing them every hour seems reasonable to me. Keeping track of when you last checked so you can check again every hour is a good idea.

Where to fetch events from

If your user follows another user (call them jack), then you should fetch jack's events from jack's OUTBOX relays. I think it's a good idea to use 2 of those relays. If one of those choices fails (errors), then keep trying until you get 2 of them that worked. This gives some redundancy in case one of them is censoring. You can bump that number up to 3 or 4, but more than that is probably just wasting bandwidth.

To find events tagging your user, look in your user's INBOX relays for those. In this case, look into all of them because some clients will only write to some of them (even though that is no longer advised).

Picking relays dynamically

Since your user follows many other users, it is very useful to find a small subset of all of their OUTBOX relays that cover everybody followed. I wrote some code to do this as (it is used by gossip) that you can look at for an example.

Where to post events to

Post all events (except DMs) to all of your users OUTBOX relays. Also post the events to all the INBOX relays of anybody that was tagged or mentioned in the contents in a nostr bech32 link (if desired). That way all these mentioned people are aware of the reply (or quote or repost).

DMs should be posted only to INBOX relays (in the future, to PRIVATE INBOX relays). You should post it to your own INBOX relays also, because you'll want a record of the conversation. In this way, you can see all your DMs inbound and outbound at your INBOX relay.

Where to publish your user's kind-10002 event to

This event was designed to be small and not require moderation, plus it is replaceable so there is only one per user. For this reason, at the moment, just spread it around to lots of relays especially the most popular relays.

For example, the gossip client automatically determines which relays to publish to based on whether they seem to be working (several hundred) and does so in batches of 10.

How to find replies

If all clients used the gossip model, you could find all the replies to any post in the author's INBOX relays for any event with an 'e' tag tagging the event you want replies to... because gossip model clients will publish them there.

But given the non-gossip-model clients, you should also look where the event was seen and look on those relays too.

Clobbering issues

Please read your users kind 10002 event before clobbering it. You should look many places to make sure you didn't miss the newest one.

If the old relay list had tags you don't understand (e.g. neither "read" nor "write"), then preserve them.

How users should pick relays

Today, nostr relays are not uniform. They have all kinds of different rule-sets and purposes. We severely lack a way to advice non-technical users as to which relays make good OUTBOX relays and which ones make good INBOX relays. But you are a dev, you can figure that out pretty well. For example, INBOX relays must accept notes from anyone meaning they can't be paid-subscription relays.

Bandwidth isn't a big issue

The outbox model doesn't require excessive bandwidth when done right. You shouldn't be downloading the same note many times... only 2-4 times depending on the level of redundancy your user wants.

Downloading 1000 events from 100 relays is in theory the same amount of data as downloading 1000 events from 1 relay.

But in practice, due to redundancy concerns, you will end up downloading 2000-3000 events from those 100 relays instead of just the 1000 you would in a single relay situation. Remember, per person followed, you will only ask for their events from 2-4 relays, not from all 100 relays!!!

Also in practice, the cost of opening and maintaining 100 network connections is more than the cost of opening and maintaining just 1. But this isn't usually a big deal unless...

Crypto overhead on Low-Power Clients

Verifying Schnorr signatures in the secp256k1 cryptosystem is not cheap. Setting up SSL key exchange is not cheap either. But most clients will do a lot more event signature validations than they will SSL setups.

For this reason, connecting to 50-100 relays is NOT hugely expensive for clients that are already verifying event signatures, as the number of events far surpasses the number of relay connections.

But for low-power clients that can't do event signature verification, there is a case for them not doing a lot of SSL setups either. Those clients would benefit from a different architecture, where half of the client was on a more powerful machine acting as a proxy for the low-power half of the client. These halves need to trust each other, so perhaps this isn't a good architecture for a business relationship, but I don't know what else to say about the low-power client situation.

Unsafe relays

Some people complain that the outbox model directs their client to relays that their user has not approved. I don't think it is a big deal, as such users can use VPNs or Tor if they need privacy. But for such users that still have concerns, they may wish to use clients that give them control over this. As a client developer you can choose whether to offer this feature or not.

The gossip client allows users to require whitelisting for connecting to new relays and for AUTHing to relays.

See Also

-

@ 42342239:1d80db24

2024-03-21 09:49:01

@ 42342239:1d80db24

2024-03-21 09:49:01It has become increasingly evident that our financial system has started undermine our constitutionally guaranteed freedoms and rights. Payment giants like PayPal, Mastercard, and Visa sometimes block the ability to donate money. Individuals, companies, and associations lose bank accounts — or struggle to open new ones. In bank offices, people nowadays risk undergoing something resembling being cross-examined. The regulations are becoming so cumbersome that their mere presence risks tarnishing the banks' reputation.

The rules are so complex that even within the same bank, different compliance officers can provide different answers to the same question! There are even departments where some of the compliance officers are reluctant to provide written responses and prefer to answer questions over an unrecorded phone call. Last year's corporate lawyer in Sweden recently complained about troublesome bureaucracy, and that's from a the perspective of a very large corporation. We may not even fathom how smaller businesses — the keys to a nation's prosperity — experience it.

Where do all these rules come?

Where do all these rules come from, and how well do they work? Today's regulations on money laundering (AML) and customer due diligence (KYC - know your customer) primarily originate from a G7 meeting in the summer of 1989. (The G7 comprises the seven advanced economies: the USA, Canada, the UK, Germany, France, Italy, and Japan, along with the EU.) During that meeting, the intergovernmental organization FATF (Financial Action Task Force) was established with the aim of combating organized crime, especially drug trafficking. Since then, its mandate has expanded to include fighting money laundering, terrorist financing, and the financing of the proliferation of weapons of mass destruction(!). One might envisage the rules soon being aimed against proliferation of GPUs (Graphics Processing Units used for AI/ML). FATF, dominated by the USA, provides frameworks and recommendations for countries to follow. Despite its influence, the organization often goes unnoticed. Had you heard of it?

FATF offered countries "a deal they couldn't refuse"

On the advice of the USA and G7 countries, the organization decided to begin grading countries in "blacklists" and "grey lists" in 2000, naming countries that did not comply with its recommendations. The purpose was to apply "pressure" to these countries if they wanted to "retain their position in the global economy." The countries were offered a deal they couldn't refuse, and the number of member countries rapidly increased. Threatening with financial sanctions in this manner has even been referred to as "extraterritorial bullying." Some at the time even argued that the process violated international law.

If your local Financial Supervisory Authority (FSA) were to fail in enforcing compliance with FATF's many checklists among financial institutions, the risk of your country and its banks being barred from the US-dominated financial markets would loom large. This could have disastrous consequences.

A cost-benefit analysis of AML and KYC regulations

Economists use cost-benefit analysis to determine whether an action or a policy is successful. Let's see what such an analysis reveals.

What are the benefits (or revenues) after almost 35 years of more and more rules and regulations? The United Nations Office on Drugs and Crime estimated that only 0.2% of criminal proceeds are confiscated. Other estimates suggest a success rate from such anti-money laundering rules of 0.07% — a rounding error for organized crime. Europol expects to recover 1.2 billion euros annually, equivalent to about 1% of the revenue generated in the European drug market (110 billion euros). However, the percentage may be considerably lower, as the size of the drug market is likely underestimated. Moreover, there are many more "criminal industries" than just the drug trade; human trafficking is one example - there are many more. In other words, criminal organizations retain at least 99%, perhaps even 99.93%, of their profits, despite all cumbersome rules regarding money laundering and customer due diligence.

What constitutes the total cost of this bureaurcratic activity, costs that eventually burden taxpayers and households via higher fees? Within Europe, private financial firms are estimated to spend approximately 144 billion euros on compliance. According to some estimates, the global cost is twice as high, perhaps even eight times as much.

For Europe, the cost may thus be about 120 times (144/1.2) higher than the revenues from these measures. These "compliance costs" bizarrely exceed the total profits from the drug market, as one researcher put it. Even though the calculations are uncertain, it is challenging — perhaps impossible — to legitimize these regulations from a cost-benefit perspective.

But it doesn't end there, unfortunately. The cost of maintaining this compliance circus, with around 80 international organizations, thousands of authorities, far more employees, and all this across hundreds of countries, remains a mystery. But it's unlikely to be cheap.

The purpose of a system is what it does

In Economic Possibilities for our Grandchildren (1930), John Maynard Keynes foresaw that thanks to technological development, we could have had a 15-hour workweek by now. This has clearly not happened. Perhaps jobs have been created that are entirely meaningless? Anthropologist David Graeber argued precisely this in Bullshit Jobs in 2018. In that case, a significant number of people spend their entire working lives performing tasks they suspect deep down don't need to be done.

"The purpose of a system is what it does" is a heuristic coined by Stafford Beer. He observed there is "no point in claiming that the purpose of a system is to do what it constantly fails to do. What the current regulatory regime fails to do is combat criminal organizations. Nor does it seem to prevent banks from laundering money as never before, or from providing banking services to sex-offending traffickers

What the current regulatory regime does do, is: i) create armies of meaningless jobs, ii) thereby undermining mental health as well as economic prosperity, while iii) undermining our freedom and rights.

What does this say about the purpose of the system?

-

@ ee11a5df:b76c4e49

2024-03-21 00:28:47

@ ee11a5df:b76c4e49

2024-03-21 00:28:47I'm glad to see more activity and discussion about the gossip model. Glad to see fiatjaf and Jack posting about it, as well as many developers pitching in in the replies. There are difficult problems we need to overcome, and finding notes while remaining decentralized without huge note copying overhead was just the first. While the gossip model (including the outbox model which is just the NIP-65 part) completely eliminates the need to copy notes around to lots of relays, and keeps us decentralized, it brings about it's own set of new problems. No community is ever of the same mind on any issue, and this issue is no different. We have a lot of divergent opinions. This note will be my updated thoughts on these topics.

COPYING TO CENTRAL RELAYS IS A NON-STARTER: The idea that you can configure your client to use a few popular "centralized" relays and everybody will copy notes into those central relays is a non-starter. It destroys the entire raison d'être of nostr. I've heard people say that more decentralization isn't our biggest issue. But decentralization is THE reason nostr exists at all, so we need to make sure we live up to the hype. Otherwise we may as well just all join Bluesky. It has other problems too: the central relays get overloaded, and the notes get copied to too many relays, which is both space-inefficient and network bandwith inefficient.

ISSUE 1: Which notes should I fetch from which relays? This is described pretty well now in NIP-65. But that is only the "outbox" model part. The "gossip model" part is to also work out what relays work for people who don't publish a relay list.

ISSUE 2: Automatic failover. Apparently Peter Todd's definition of decentralized includes a concept of automatic failover, where new resources are brought up and users don't need to do anything. Besides this not being part of any definition of decentralized I have never heard of, we kind of have this. If a user has 5 outboxes, and 3 fail, everything still works. Redundancy is built in. No user intervention needed in most cases, at least in the short term. But we also don't have any notion of administrators who can fix this behind the scenes for the users. Users are sovereign and that means they have total control, but also take on some responsibility. This is obvious when it comes to keypair management, but it goes further. Users have to manage where they post and where they accept incoming notes, and when those relays fail to serve them they have to change providers. Putting the users in charge, and not having administrators, is kinda necessary to be truly decentralized.

ISSUE 3: Connecting to unvetted relays feels unsafe. It might even be the NSA tracking you! First off, this happens with your web browser all the time: you go visit a web page and it instructs your browser to fetch a font from google. If you don't like it, you can use uBlock origin and manage it manually. In the nostr world, if you don't like it, you can use a client that puts you more in control of this. The gossip client for example has options for whether you want to manually approve relay connections and AUTHs, just once or always, and always lets you change your mind later. If you turn those options on, initially it is a giant wall of approval requests... but that situation resolves rather quickly. I've been running with these options on for a long time now, and only about once a week do I have to make a decision for a new relay.

But these features aren't really necessary for the vast majority of users who don't care if a relay knows their IP address. Those users use VPNs or Tor when they want to be anonymous, and don't bother when they don't care (me included).

ISSUE 4: Mobile phone clients may find the gossip model too costly in terms of battery life. Bandwidth is actually not a problem: under the gossip model (if done correctly) events for user P are only downloaded from N relays (default for gossip client is N=2), which in general is FEWER events retrieved than other models which download the same event maybe 8 or more times. Rather, the problem here is the large number of network connections and in particular, the large number of SSL setups and teardowns. If it weren't for SSL, this wouldn't be much of a problem. But setting up and tearing down SSL on 50 simultaneous connections that drop and pop up somewhat frequently is a battery drain.

The solution to this that makes the most sense to me is to have a client proxy. What I mean by that is a piece of software on a server in a data centre. The client proxy would be a headless nostr client that uses the gossip model and acts on behalf of the phone client. The phone client doesn't even have to be a nostr client, but it might as well be a nostr client that just connects to this fixed proxy to read and write all of its events. Now the SSL connection issue is solved. These proxies can serve many clients and have local storage, whereas the phones might not even need local storage. Since very few users will set up such things for themselves, this is a business opportunity for people, and a better business opportunity IMHO than running a paid-for relay. This doesn't decentralize nostr as there can be many of these proxies. It does however require a trust relationship between the phone client and the proxy.

ISSUE 5: Personal relays still need moderation. I wrongly thought for a very long time that personal relays could act as personal OUTBOXes and personal INBOXes without needing moderation. Recently it became clear to me that clients should probably read from other people's INBOXes to find replies to events written by the user of that INBOX (which outbox model clients should be putting into that INBOX). If that is happening, then personal relays will need to serve to the public events that were just put there by the public, thus exposing them to abuse. I'm greatly disappointed to come to this realization and not quite settled about it yet, but I thought I had better make this known.

-

@ b12b632c:d9e1ff79

2024-02-19 19:18:46

@ b12b632c:d9e1ff79

2024-02-19 19:18:46Nostr decentralized network is growing exponentially day by day and new stuff comes out everyday. We can now use a NIP46 server to proxify our nsec key to avoid to use it to log on Nostr websites and possibly leak it, by mistake or by malicious persons. That's the point of this tutorial, setup a NIP46 server Nsec.app with its own Nostr relay. You'll be able to use it for you and let people use it, every data is stored locally in your internet browser. It's an non-custodial application, like wallets !

It's nearly a perfect solution (because nothing is perfect as we know) and that makes the daily use of Nostr keys much more secure and you'll see, much more sexy ! Look:

Nsec.app is not the only NIP46 server, in fact, @PABLOF7z was the first to create a NIP46 server called nsecBunker. You can also self-hosted nsecBunkerd, you can find a detailed explanation here : nsecbunkerd. I may write a how to self-host nsecBunkderd soon.

If you want more information about its bunker and what's behind this tutorial, you can check these links :

Few stuffs before beginning

Spoiler : I didn't automatized everything. The goal here is not to give you a full 1 click installation process, it's more to let you see and understand all the little things to configure and understand how works Nsec.app and the NIP46. There is a little bit of work, yes, but you'll be happy when it will work! Believe me.

Before entering into the battlefield, you must have few things : A working VPS with direct access to internet or a computer at home but NAT will certain make your life a hell. Use a VPS instead, on DigitalOcean, Linode, Scaleway, as you wish. A web domain that your own because we need to use at least 3 DNS A records (you can choose the subdomain you like) : domain.tld, noauth.domain.tld, noauth.domain.tld. You need to have some programs already installed : git, docker, docker-compose, nano/vi. if you fill in all the boxes, we can move forward !

Let's install everything !

I build a repo with a docker-compose file with all the required stuff to make the Bunker works :

Nsec.app front-end : noauth Nsec.app back-end : noauthd Nostr relay : strfry Nostr NIP05 : easy-nip5

First thing to do is to clone the repo "nsec-app-docker" from my repo:

$ git clone git clone https://github.com/PastaGringo/nsec-app-docker.git $ cd nsec-app-dockerWhen it's done, you'll have to do several things to make it work. 1) You need to generate some keys for the web-push library (keep them for later) :

``` $ docker run pastagringo/web-push-generate-keys

Generating your web-push keys...

Your private key : rQeqFIYKkInRqBSR3c5iTE3IqBRsfvbq_R4hbFHvywE Your public key : BFW4TA-lUvCq_az5fuQQAjCi-276wyeGUSnUx4UbGaPPJwEemUqp3Rr3oTnxbf0d4IYJi5mxUJOY4KR3ZTi3hVc ```

2) Generate a new keys pair (nsec/npub) for the NIP46 server by clicking on "Generate new key" from NostrTool website: nostrtool.com.

You should have something like this :

console Nostr private key (nsec): keep this -> nsec1zcyanx8zptarrmfmefr627zccrug3q2vhpfnzucq78357hshs72qecvxk6 Nostr private key (hex): 1609d998e20afa31ed3bca47a57858c0f888814cb853317300f1e34f5e178794 Nostr public key (npub): npub1ywzwtnzeh64l560a9j9q5h64pf4wvencv2nn0x4h0zw2x76g8vrq68cmyz Nostr public key (hex): keep this -> 2384e5cc59beabfa69fd2c8a0a5f550a6ae6667862a7379ab7789ca37b483b06You need to keep Nostr private key (nsec) & Nostr public key (npub). 3) Open (nano/vi) the .env file located in the current folder and fill all the required info :

```console

traefik

EMAIL=pastagringo@fractalized.net <-- replace with your own domain NSEC_ROOT_DOMAIN=plebes.ovh <-- replace with your own domain <-- replace with your own relay domain RELAY_DOMAIN=relay.plebes.ovh <-- replace with your own noauth domainay.plebes.ovh <-- replace with your own relay domain <-- replace with your own noauth domain NOAUTH_DOMAIN=noauth.plebes.ovh <-- replace with your own noauth domain NOAUTHD_DOMAIN=noauthd.plebes.ovh <-- replace with your own noauth domain

noauth

APP_WEB_PUSH_PUBKEY=BGVa7TMQus_KVn7tAwPkpwnU_bpr1i6B7D_3TT-AwkPlPd5fNcZsoCkJkJylVOn7kZ-9JZLpyOmt7U9rAtC-zeg <-- replace with your own web push public key APP_NOAUTHD_URL=https://$NOAUTHD_DOMAIN APP_DOMAIN=$NSEC_ROOT_DOMAIN APP_RELAY=wss://$RELAY_DOMAIN

noauthd

PUSH_PUBKEY=$APP_WEB_PUSH_PUBKEY PUSH_SECRET=_Sz8wgp56KERD5R4Zj5rX_owrWQGyHDyY4Pbf5vnFU0 <-- replace with your own web push private key ORIGIN=https://$NOAUTHD_DOMAIN DATABASE_URL=file:./prod.db BUNKER_NSEC=nsec1f43635rzv6lsazzsl3hfsrum9u8chn3pyjez5qx0ypxl28lcar2suy6hgn <-- replace with your the bunker nsec key BUNKER_RELAY=wss://$RELAY_DOMAIN BUNKER_DOMAIN=$NSEC_ROOT_DOMAIN BUNKER_ORIGIN=https://$NOAUTH_DOMAIN ```

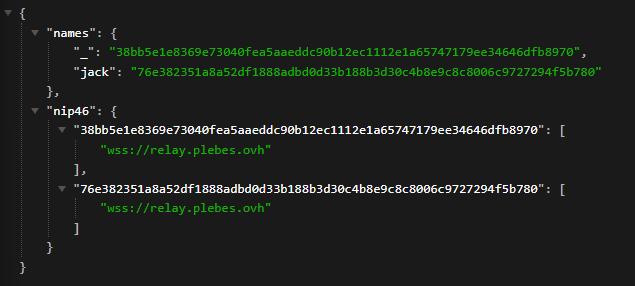

Be aware of noauth and noauthd (the d letter). Next, save and quit. 4) You now need to modify the nostr.json file used for the NIP05 to indicate which relay your bunker will use. You need to set the bunker HEX PUBLIC KEY (I replaced the info with the one I get from NostrTool before) :

console nano easy-nip5/nostr.jsonconsole { "names": { "_": "ServerHexPubKey" }, "nip46": { "ServerHexPubKey": [ "wss://ReplaceWithYourRelayDomain" ] } }5) You can now run the docker compose file by running the command (first run can take a bit of time because the noauth container needs to build the npm project):

console $ docker compose up -d6) Before creating our first user into the Nostr Bunker, we need to test if all the required services are up. You should have :

noauth :

noauthd :

console CANNOT GET /https://noauthd.yourdomain.tld/name :

console { "error": "Specify npub" }https://yourdomain.tld/.well-known/nostr.json :

console { "names": { "_": "ServerHexPubKey" }, "nip46": { "ServerHexPubKey": [ "wss://ReplaceWithYourRelayDomain" ] } }If you have everything working, we can try to create a new user!

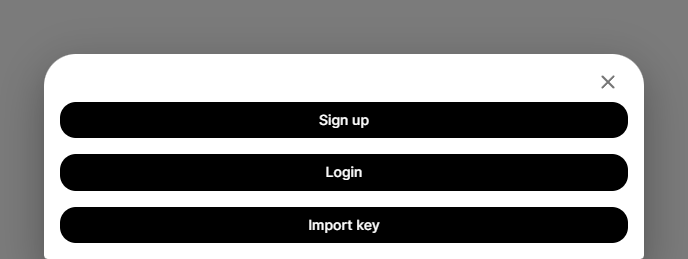

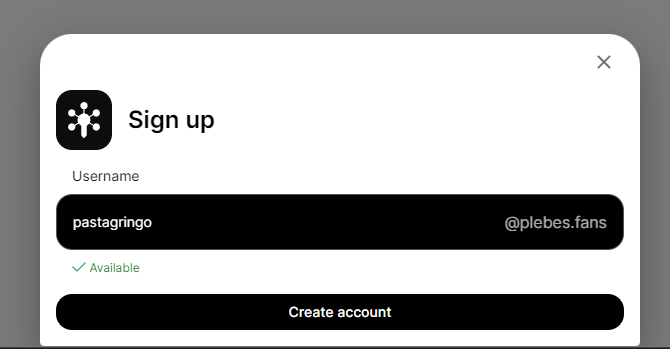

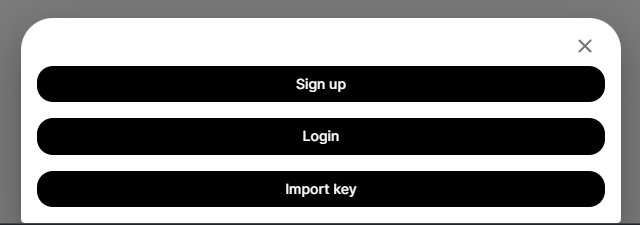

7) Connect to noauth and click on "Get Started" :

At the bottom the screen, click on "Sign up" :

Fill a username and click on "Create account" :

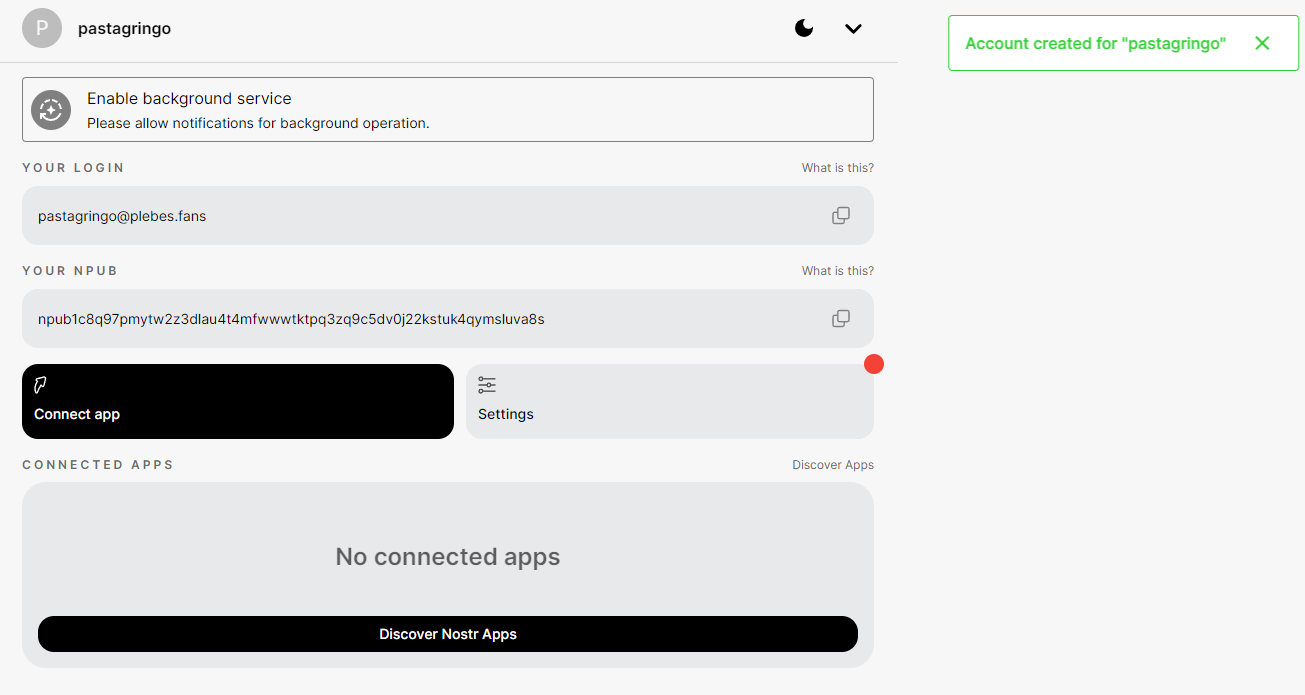

If everything has been correctly configured, you should see a pop message with "Account created for "XXXX" :

PS : to know if noauthd is well serving the nostr.json file, you can check this URL : https://yourdomain.tld/.well-known/nostr.json?name=YourUser You should see that the user has now NIP05/NIP46 entries :

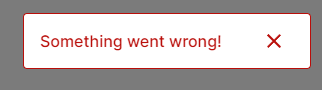

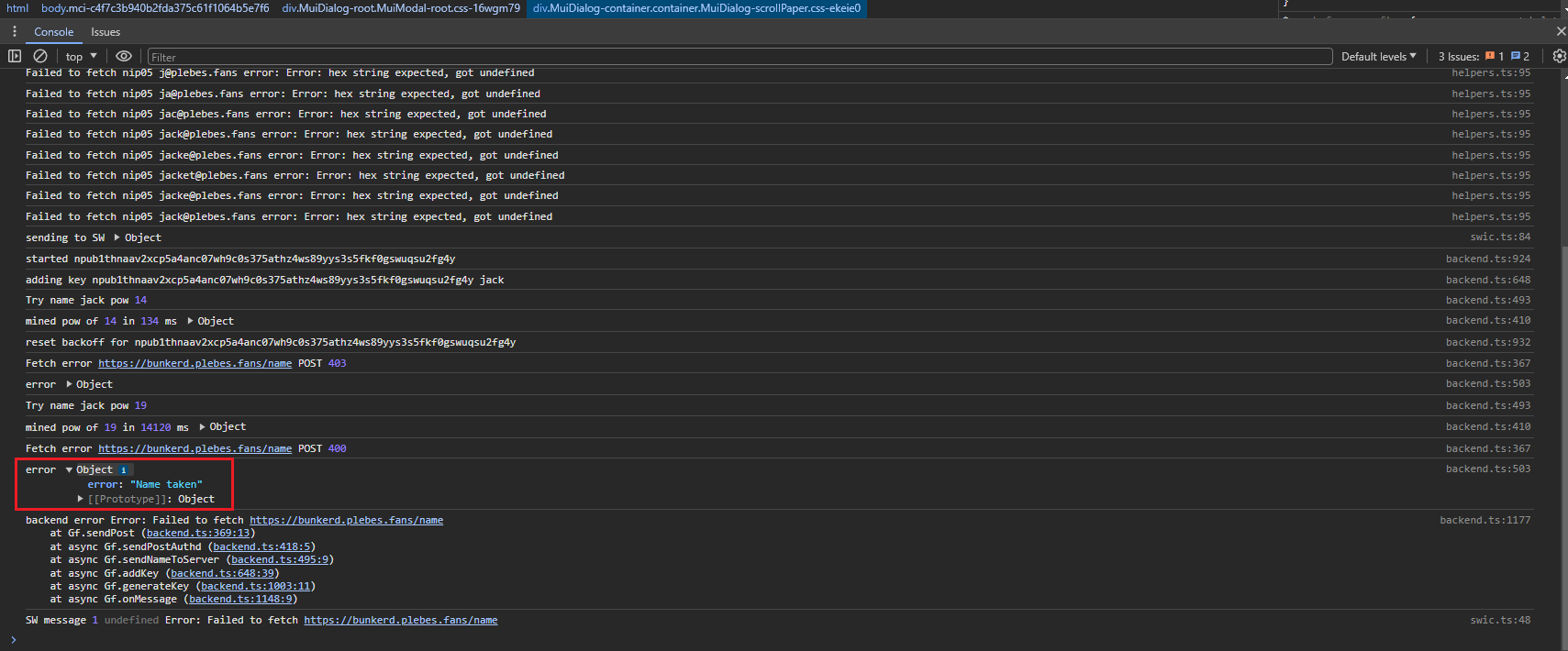

If the user creation failed, you'll see a red pop-up saying "Something went wrong!" :

To understand what happened, you need to inspect the web page to find the error :

For the example, I tried to recreate a user "jack" which has already been created. You may find a lot of different errors depending of the configuration you made. You can find that the relay is not reachable on w s s : / /, you can find that the noauthd is not accessible too, etc. Every answers should be in this place.

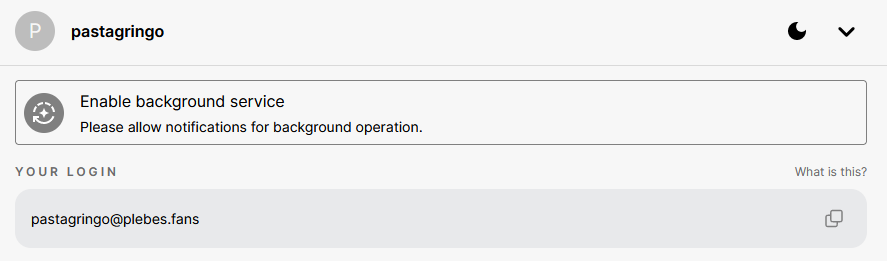

To completely finish the tests, you need to enable the browser notifications, otherwise you won't see the pop-up when you'll logon on Nostr web client, by clicking on "Enable background service" :

You need to click on allow notifications :

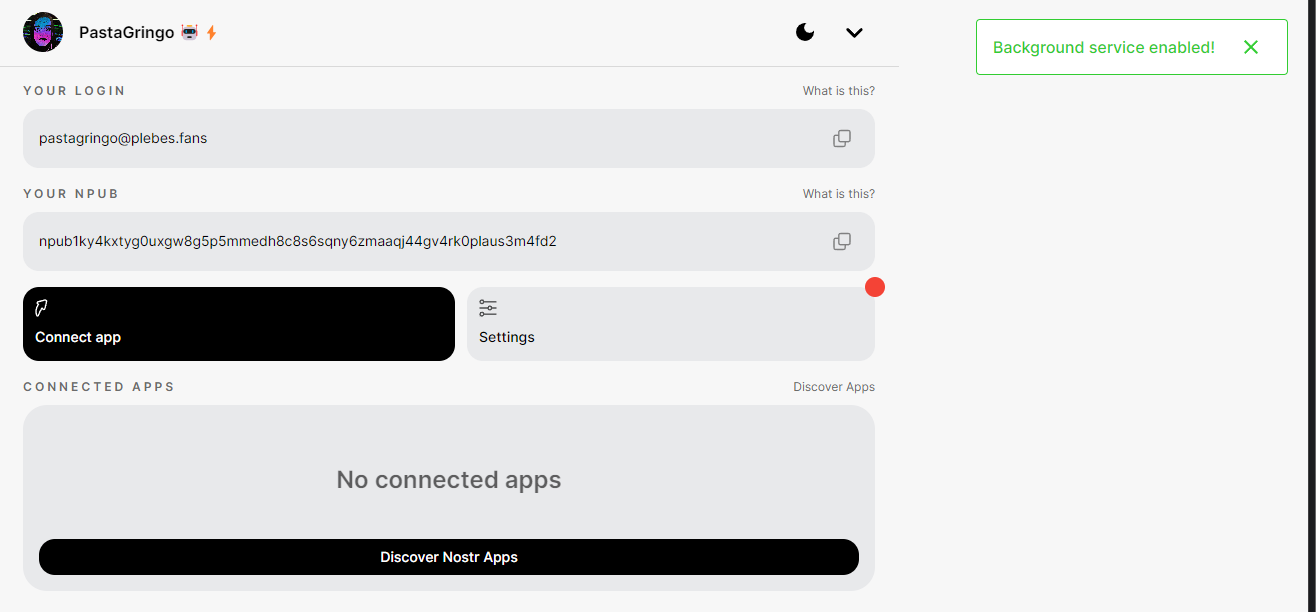

Should see this green confirmation popup on top right of your screen:

Well... Everything works now !

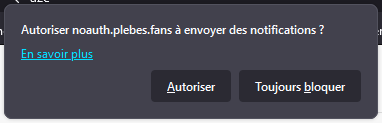

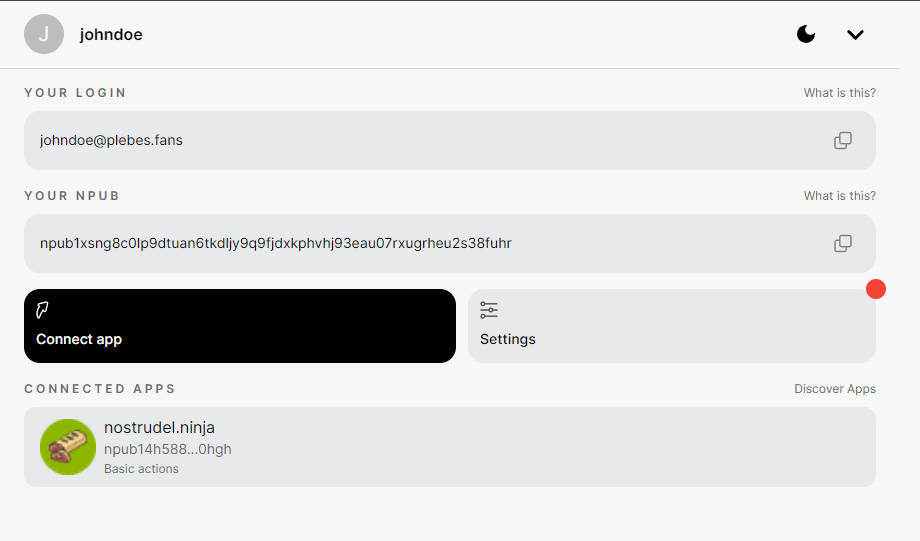

8) You try to use your brand new proxyfied npub by clicking on "Connect App" and buy copying your bunker URL :

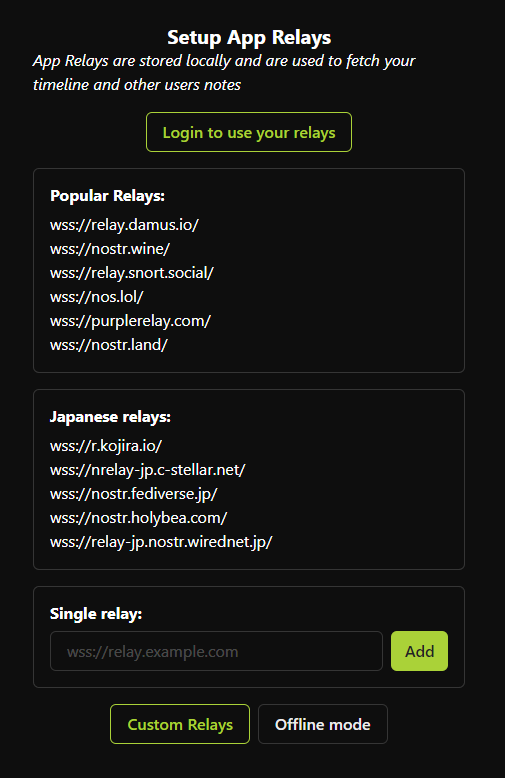

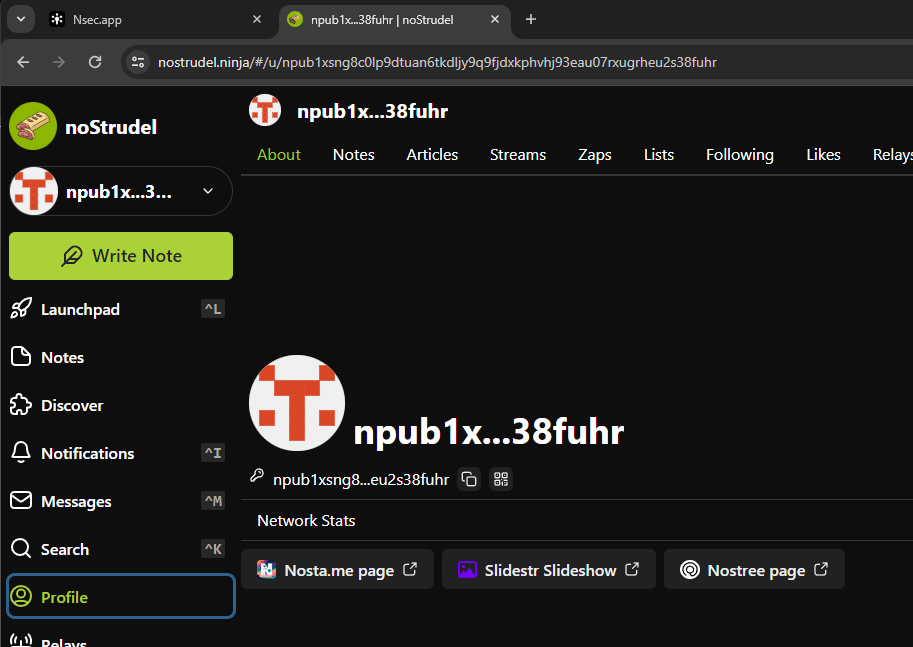

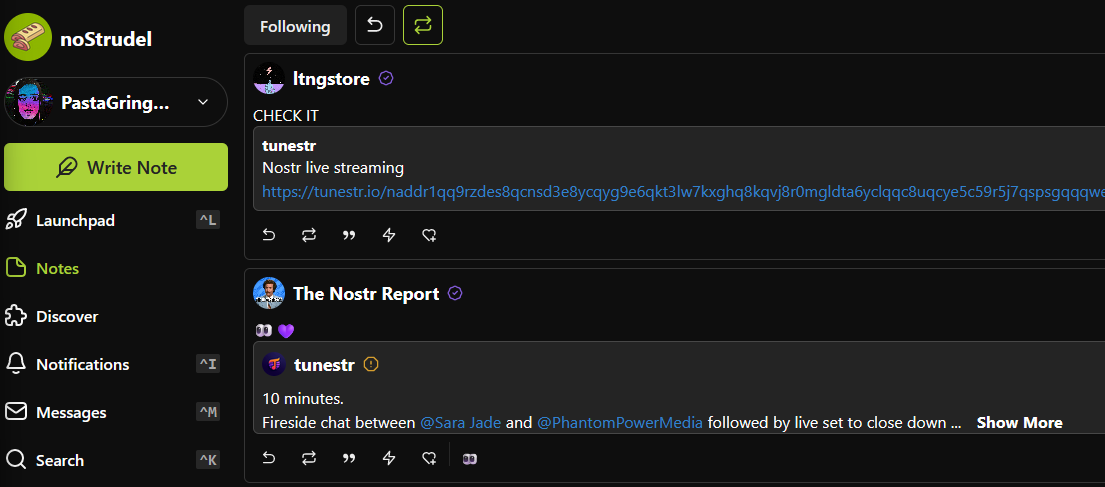

You can now to for instance on Nostrudel Nostr web client to login with it. Select the relays you want (Popular is better ; if you don't have multiple relay configured on your Nostr profile, avoid "Login to use your relay") :

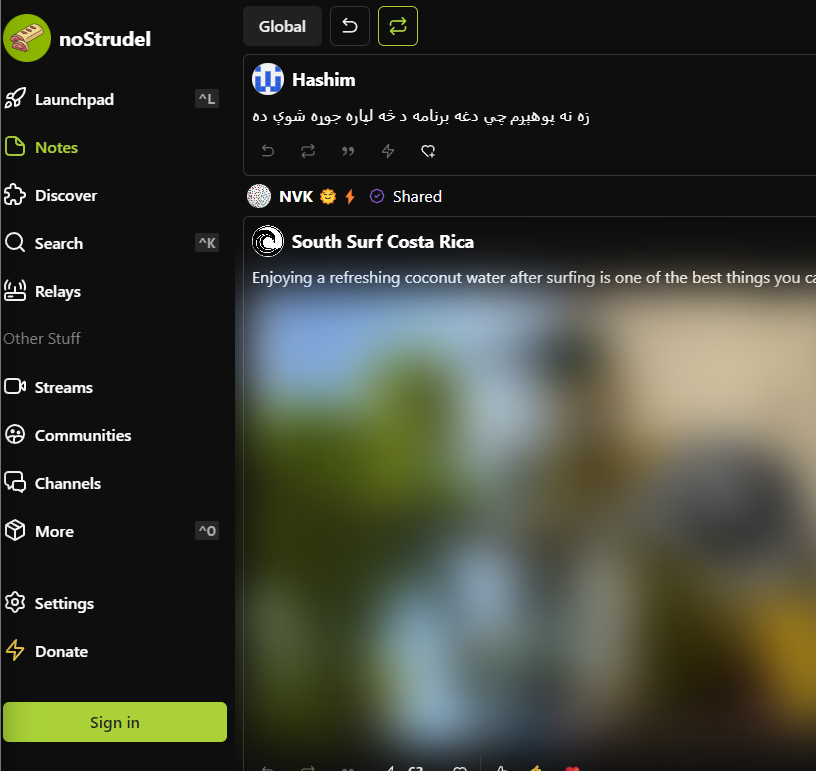

Click on "Sign in" :

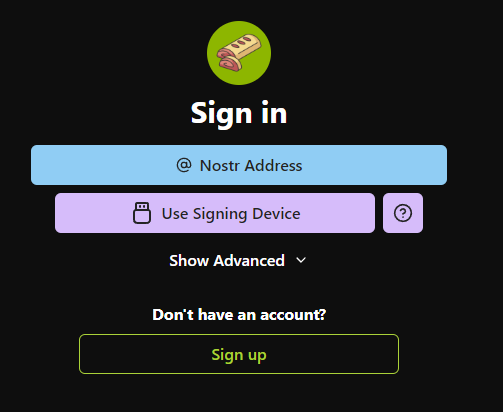

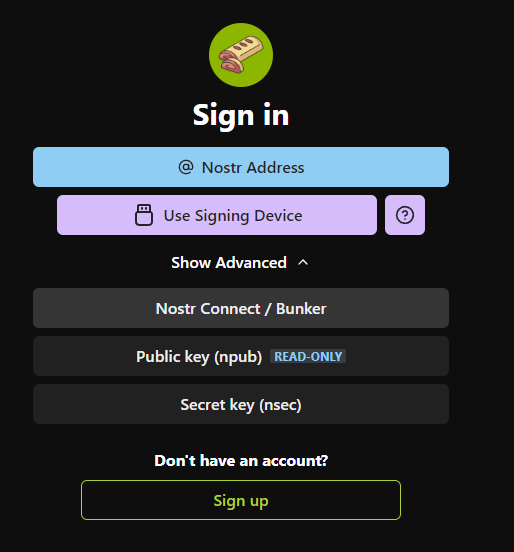

Click on "Show Advanced" :

Click on "Nostr connect / Bunker" :

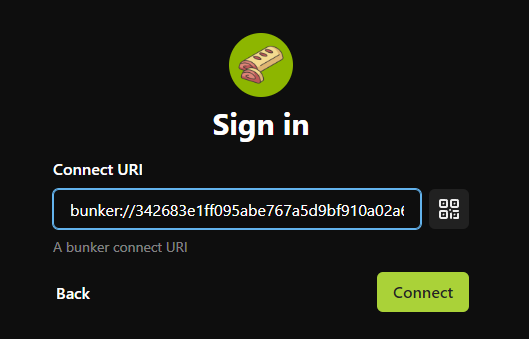

Paste your bunker URL and click on "Connect" :

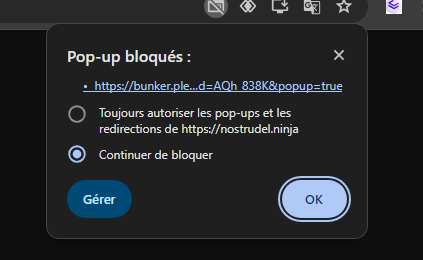

The first time, tour browser (Chrome here) may blocks the popup, you need to allow it :

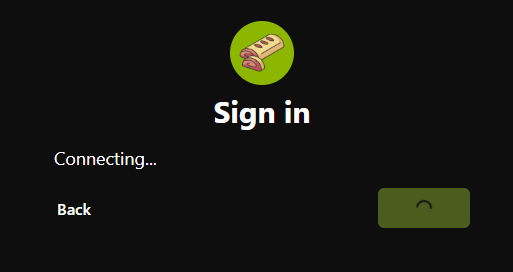

If the browser blocked the popup, NoStrudel will wait your confirmation to login :

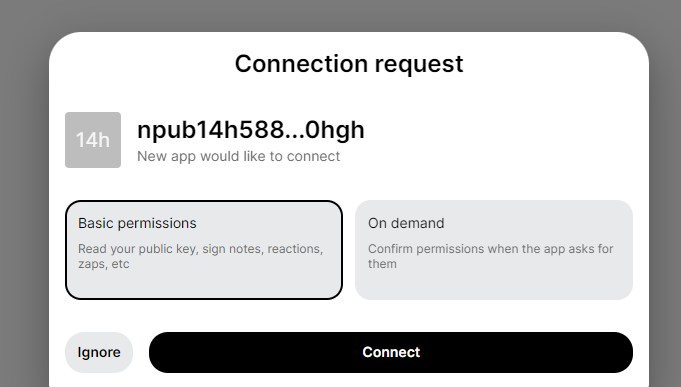

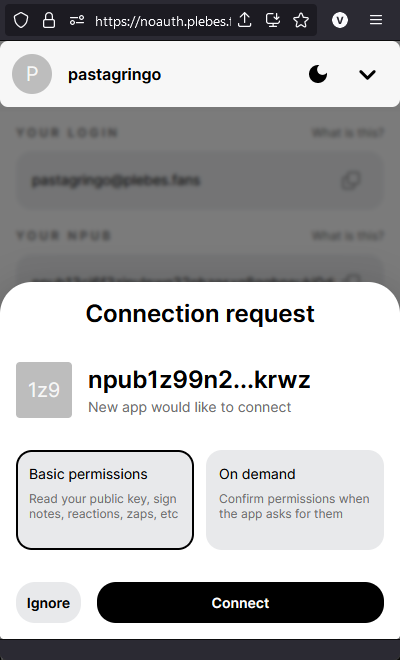

You have to go back on your bunker URL to allow the NoStrudel connection request by clicking on on "Connect":

The first time connections may be a bit annoying with all the popup authorizations but once it's done, you can forget them it will connect without any issue. Congrats ! You are connected on NoStrudel with an npub proxyfied key !⚡

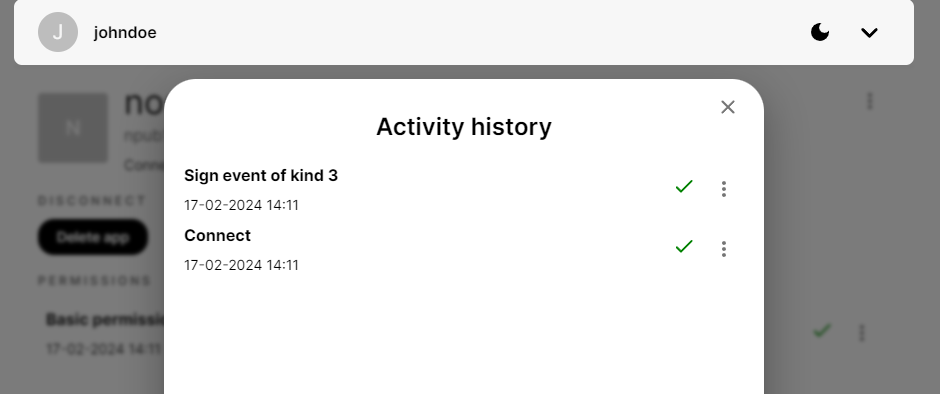

You can check to which applications you gave permissions and activity history in noauth by selecting your user. :

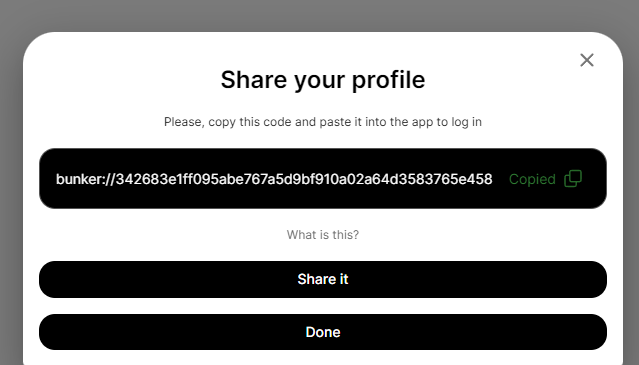

If you want to import your real Nostr profile, the one that everyone knows, you can import your nsec key by adding a new account and select "Import key" and adding your precious nsec key (reminder: your nsec key stays in your browser! The noauth provider won't have access to it!) :

You can see can that my profile picture has been retrieved and updated into noauth :

I can now use this new pubkey attached my nsec.app server to login in NoStrudel again :

Accounts/keys management in noauthd You can list created keys in your bunkerd by doing these command (CTRL+C to exit) :

console $ docker exec -it noauthd node src/index.js list_names [ '/usr/local/bin/node', '/noauthd/src/index.js', 'list_names' ] 1 jack npub1hjdw2y0t44q4znzal2nxy7vwmpv3qwrreu48uy5afqhxkw6d2nhsxt7x6u 1708173927920n 2 peter npub1yp752u5tr5v5u74kadrzgfjz2lsmyz8dyaxkdp4e0ptmaul4cyxsvpzzjz 1708174748972n 3 john npub1xw45yuvh5c73sc5fmmc3vf2zvmtrzdmz4g2u3p2j8zcgc0ktr8msdz6evs 1708174778968n 4 johndoe npub1xsng8c0lp9dtuan6tkdljy9q9fjdxkphvhj93eau07rxugrheu2s38fuhr 1708174831905nIf you want to delete someone key, you have to do :

```console $ docker exec -it noauthd node src/index.js delete_name johndoe [ '/usr/local/bin/node', '/noauthd/src/index.js', 'delete_name', 'johndoe' ] deleted johndoe { id: 4, name: 'johndoe', npub: 'npub1xsng8c0lp9dtuan6tkdljy9q9fjdxkphvhj93eau07rxugrheu2s38fuhr', timestamp: 1708174831905n

$ docker exec -it noauthd node src/index.js list_names [ '/usr/local/bin/node', '/noauthd/src/index.js', 'list_names' ] 1 jack npub1hjdw2y0t44q4znzal2nxy7vwmpv3qwrreu48uy5afqhxkw6d2nhsxt7x6u 1708173927920n 2 peter npub1yp752u5tr5v5u74kadrzgfjz2lsmyz8dyaxkdp4e0ptmaul4cyxsvpzzjz 1708174748972n 3 john npub1xw45yuvh5c73sc5fmmc3vf2zvmtrzdmz4g2u3p2j8zcgc0ktr8msdz6evs 1708174778968n ```

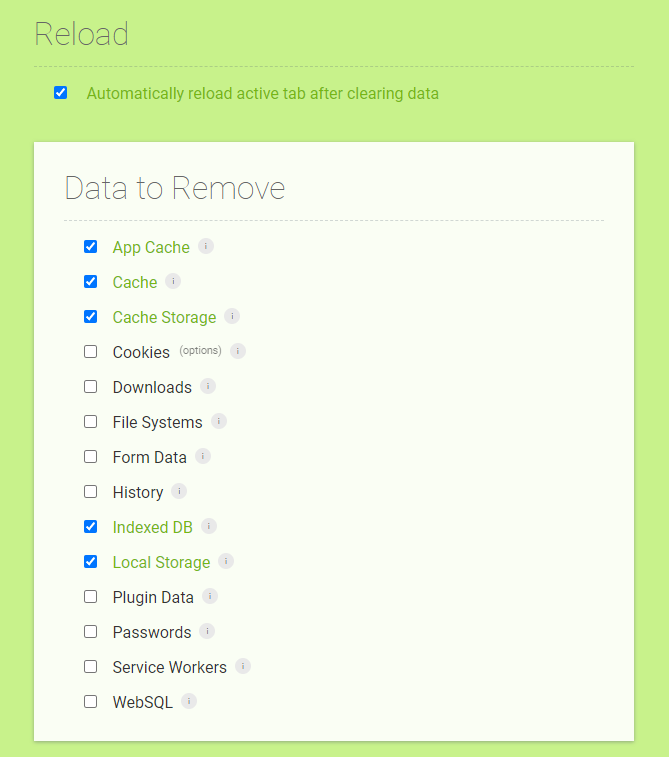

It could be pretty easy to create a script to handle the management of keys but I think @Brugeman may create a web interface for that. Noauth is still very young, changes are committed everyday to fix/enhance the application! As """everything""" is stored locally on your browser, you have to clear the cache of you bunker noauth URL to clean everything. This Chome extension is very useful for that. Check these settings in the extension option :

You can now enjoy even more Nostr ⚡ See you soon in another Fractalized story!

-

@ ee11a5df:b76c4e49

2023-11-09 05:20:37

@ ee11a5df:b76c4e49

2023-11-09 05:20:37A lot of terms have been bandied about regarding relay models: Gossip relay model, outbox relay model, and inbox relay model. But this term "relay model" bothers me. It sounds stuffy and formal and doesn't actually describe what we are talking about very well. Also, people have suggested maybe there are other relay models. So I thought maybe we should rethink this all from first principles. That is what this blog post attempts to do.

Nostr is notes and other stuff transmitted by relays. A client puts an event onto a relay, and subsequently another client reads that event. OK, strictly speaking it could be the same client. Strictly speaking it could even be that no other client reads the event, that the event was intended for the relay (think about nostr connect). But in general, the reason we put events on relays is for other clients to read them.

Given that fact, I see two ways this can occur:

1) The reader reads the event from the same relay that the writer wrote the event to (this I will call relay rendezvous), 2) The event was copied between relays by something.

This second solution is perfectly viable, but it less scalable and less immediate as it requires copies which means that resources will be consumed more rapidly than if we can come up with workable relay rendezvous solutions. That doesn't mean there aren't other considerations which could weigh heavily in favor of copying events. But I am not aware of them, so I will be discussing relay rendezvous.

We can then divide the relay rendezvous situation into several cases: one-to-one, one-to-many, and one-to-all, where the many are a known set, and the all are an unbounded unknown set. I cannot conceive of many-to-anything for nostr so we will speak no further of it.

For a rendezvous to take place, not only do the parties need to agree on a relay (or many relays), but there needs to be some way that readers can become aware that the writer has written something.

So the one-to-one situation works out well by the writer putting the message onto a relay that they know the reader checks for messages on. This we call the INBOX model. It is akin to sending them an email into their inbox where the reader checks for messages addressed to them.

The one-to-(known)-many model is very similar, except the writer has to write to many people's inboxes. Still we are dealing with the INBOX model.

The final case, one-to-(unknown)-all, there is no way the writer can place the message into every person's inbox because they are unknown. So in this case, the writer can write to their own OUTBOX, and anybody interested in these kinds of messages can subscribe to the writer's OUTBOX.

Notice that I have covered every case already, and that I have not even specified what particular types of scenarios call for one-to-one or one-to-many or one-to-all, but that every scenario must fit into one of those models.

So that is basically it. People need INBOX and OUTBOX relays and nothing else for relay rendezvous to cover all the possible scenarios.

That is not to say that other kinds of concerns might not modulate this. There is a suggestion for a DM relay (which is really an INBOX but with a special associated understanding), which is perfectly fine by me. But I don't think there are any other relay models. There is also the case of a live event where two parties are interacting over the same relay, but in terms of rendezvous this isn't a new case, it is just that the shared relay is serving as both parties' INBOX (in the case of a closed chat) and/or both parties' OUTBOX (in the case of an open one) at the same time.

So anyhow that's my thinking on the topic. It has become a fairly concise and complete set of concepts, and this makes me happy. Most things aren't this easy.

-

@ b12b632c:d9e1ff79

2023-08-08 00:02:31

@ b12b632c:d9e1ff79

2023-08-08 00:02:31"Welcome to the Bitcoin Lightning Bolt Card, the world's first Bitcoin debit card. This revolutionary card allows you to easily and securely spend your Bitcoin at lightning compatible merchants around the world." Bolt Card

I discovered few days ago the Bolt Card and I need to say that's pretty amazing. Thinking that we can pay daily with Bitcoin Sats in the same way that we pay with our Visa/Mastecard debit cards is really something huge⚡(based on the fact that sellers are accepting Bitcoins obviously!)

To use Bolt Card you have three choices :

- Use their (Bolt Card) own Bolt Card HUB and their own BTC Lightning node

- Use your own self hosted Bolt Card Hub and an external BTC Lightning node

- Use your own self hosted Bolt Card Hub and your BTC Lightning node (where you shoud have active Lightning channels)

⚡ The first choice is the quickiest and simpliest way to have an NFC Bolt Card. It will take you few seconds (for real). You'll have to wait much longer to receive your NFC card from a website where you bought it than configure it with Bolt Card services.

⚡⚡ The second choice is pretty nice too because you won't have a VPS + to deal with all the BTC Lightnode stuff but you'll use an external one. From the Bolt Card tutorial about Bolt Card Hub, they use a Lightning from voltage.cloud and I have to say that their services are impressive. In few seconds you'll have your own Lightning node and you'll be able to configure it into the Bolt Card Hub settings. PS : voltage.cloud offers 7 trial days / 20$ so don't hesitate to try it!

⚡⚡⚡ The third one is obvisouly a bit (way) more complex because you'll have to provide a VPS + Bitcoin node and a Bitcoin Lightning Node to be able to send and receive Lightning payments with your Bolt NFC Card. So you shoud already have configured everything by yourself to follow this tutorial. I will show what I did for my own installation and all my nodes (BTC & Lightning) are provided by my home Umbrel node (as I don't want to publish my nodes directly on the clearnet). We'll see how to connect to the Umbrel Lighting node later (spoiler: Tailscale).

To resume in this tutorial, I have :

- 1 Umbrel node (rpi4b) with BTC and Lightning with Tailscale installed.

- 1 VPS (Virtual Personal Server) to publish publicly the Bolt Card LNDHub and Bolt Card containers configured the same way as my other containers (with Nginx Proxy Manager)

Ready? Let's do it ! ⚡

Configuring Bolt Card & Bolt Card LNDHub

Always good to begin by reading the bolt card-lndhub-docker github repo. To a better understading of all the components, you can check this schema :

We'll not use it as it is because we'll skip the Caddy part because we already use Nginx Proxy Manager.

To begin we'll clone all the requested folders :

git clone https://github.com/boltcard/boltcard-lndhub-docker bolthub cd bolthub git clone https://github.com/boltcard/boltcard-lndhub BoltCardHub git clone https://github.com/boltcard/boltcard.git git clone https://github.com/boltcard/boltcard-groundcontrol.git GroundControlPS : we won't see how to configure GroundControl yet. This article may be updated later.

We now need to modify the settings file with our own settings :

mv .env.example .env nano .envYou need to replace "your-lnd-node-rpc-address" by your Umbrel TAILSCALE ip address (you can find your Umbrel node IP from your Tailscale admin console):

``` LND_IP=your-lnd-node-rpc-address # <- UMBREL TAILSCALE IP ADDRESS LND_GRPC_PORT=10009 LND_CERT_FILE=tls.cert LND_ADMIN_MACAROON_FILE=admin.macaroon REDIS_PASSWORD=random-string LND_PASSWORD=your-lnd-node-unlock-password

docker-compose.yml only

GROUNDCONTROL=ground-control-url

docker-compose-groundcontrol.yml only

FCM_SERVER_KEY=hex-encoded APNS_P8=hex-encoded APNS_P8_KID=issuer-key-which-is-key-ID-of-your-p8-file APPLE_TEAM_ID=team-id-of-your-developer-account BITCOIN_RPC=bitcoin-rpc-url APNS_TOPIC=app-package-name ```

We now need to generate an AES key and insert it into the "settings.sql" file :

```

hexdump -vn 16 -e '4/4 "%08x" 1 "\n"' /dev/random 19efdc45acec06ad8ebf4d6fe50412d0 nano settings.sql ```

- Insert the AES between ' ' right from 'AES_DECRYPT_KEY'

- Insert your domain or subdomain (subdomain in my case) host between ' ' from 'HOST_DOMAIN'

- Insert your Umbrel tailscale IP between ' ' from 'LN_HOST'

Be aware that this subdomain won't be the LNDHub container (boltcard_hub:9002) but the Boltcard container (boltcard_main:9000)

``` \c card_db;

DELETE FROM settings;

-- at a minimum, the settings marked 'set this' must be set for your system -- an explanation for each of the bolt card server settings can be found here -- https://github.com/boltcard/boltcard/blob/main/docs/SETTINGS.md

INSERT INTO settings (name, value) VALUES ('LOG_LEVEL', 'DEBUG'); INSERT INTO settings (name, value) VALUES ('AES_DECRYPT_KEY', '19efdc45acec06ad8ebf4d6fe50412d0'); -- set this INSERT INTO settings (name, value) VALUES ('HOST_DOMAIN', 'sub.domain.tld'); -- set this INSERT INTO settings (name, value) VALUES ('MIN_WITHDRAW_SATS', '1'); INSERT INTO settings (name, value) VALUES ('MAX_WITHDRAW_SATS', '1000000'); INSERT INTO settings (name, value) VALUES ('LN_HOST', ''); -- set this INSERT INTO settings (name, value) VALUES ('LN_PORT', '10009'); INSERT INTO settings (name, value) VALUES ('LN_TLS_FILE', '/boltcard/tls.cert'); INSERT INTO settings (name, value) VALUES ('LN_MACAROON_FILE', '/boltcard/admin.macaroon'); INSERT INTO settings (name, value) VALUES ('FEE_LIMIT_SAT', '10'); INSERT INTO settings (name, value) VALUES ('FEE_LIMIT_PERCENT', '0.5'); INSERT INTO settings (name, value) VALUES ('LN_TESTNODE', ''); INSERT INTO settings (name, value) VALUES ('FUNCTION_LNURLW', 'ENABLE'); INSERT INTO settings (name, value) VALUES ('FUNCTION_LNURLP', 'ENABLE'); INSERT INTO settings (name, value) VALUES ('FUNCTION_EMAIL', 'DISABLE'); INSERT INTO settings (name, value) VALUES ('AWS_SES_ID', ''); INSERT INTO settings (name, value) VALUES ('AWS_SES_SECRET', ''); INSERT INTO settings (name, value) VALUES ('AWS_SES_EMAIL_FROM', ''); INSERT INTO settings (name, value) VALUES ('EMAIL_MAX_TXS', ''); INSERT INTO settings (name, value) VALUES ('FUNCTION_LNDHUB', 'ENABLE'); INSERT INTO settings (name, value) VALUES ('LNDHUB_URL', 'http://boltcard_hub:9002'); INSERT INTO settings (name, value) VALUES ('FUNCTION_INTERNAL_API', 'ENABLE'); ```

You now need to get two files used by Bolt Card LND Hub, the admin.macaroon and tls.cert files from your Umbrel BTC Ligtning node. You can get these files on your Umbrel node at these locations :

/home/umbrel/umbrel/app-data/lightning/data/lnd/tls.cert /home/umbrel/umbrel/app-data/lightning/data/lnd/data/chain/bitcoin/mainnet/admin.macaroonYou can use either WinSCP, scp or ssh to copy these files to your local workstation and copy them again to your VPS to the root folder "bolthub".

You shoud have all these files into the bolthub directory :

johndoe@yourvps:~/bolthub$ ls -al total 68 drwxrwxr-x 6 johndoe johndoe 4096 Jul 30 00:06 . drwxrwxr-x 3 johndoe johndoe 4096 Jul 22 00:52 .. -rw-rw-r-- 1 johndoe johndoe 482 Jul 29 23:48 .env drwxrwxr-x 8 johndoe johndoe 4096 Jul 22 00:52 .git -rw-rw-r-- 1 johndoe johndoe 66 Jul 22 00:52 .gitignore drwxrwxr-x 11 johndoe johndoe 4096 Jul 22 00:52 BoltCardHub -rw-rw-r-- 1 johndoe johndoe 113 Jul 22 00:52 Caddyfile -rw-rw-r-- 1 johndoe johndoe 173 Jul 22 00:52 CaddyfileGroundControl drwxrwxr-x 6 johndoe johndoe 4096 Jul 22 00:52 GroundControl -rw-rw-r-- 1 johndoe johndoe 431 Jul 22 00:52 GroundControlDockerfile -rw-rw-r-- 1 johndoe johndoe 1913 Jul 22 00:52 README.md -rw-rw-r-- 1 johndoe johndoe 293 May 6 22:24 admin.macaroon drwxrwxr-x 16 johndoe johndoe 4096 Jul 22 00:52 boltcard -rw-rw-r-- 1 johndoe johndoe 3866 Jul 22 00:52 docker-compose-groundcontrol.yml -rw-rw-r-- 1 johndoe johndoe 2985 Jul 22 00:57 docker-compose.yml -rw-rw-r-- 1 johndoe johndoe 1909 Jul 29 23:56 settings.sql -rw-rw-r-- 1 johndoe johndoe 802 May 6 22:21 tls.certWe need to do few last tasks to ensure that Bolt Card LNDHub will work perfectly.

It's maybe already the case on your VPS but your user should be member of the docker group. If not, you can add your user by doing :

sudo groupadd docker sudo usermod -aG docker ${USER}If you did these commands, you need to logout and login again.

We also need to create all the docker named volumes by doing :

docker volume create boltcard_hub_lnd docker volume create boltcard_redisConfiguring Nginx Proxy Manager to proxify Bolt Card LNDHub & Boltcard

You need to have followed my previous blog post to fit with the instructions above.

As we use have the Bolt Card LNDHub docker stack in another directory than we other services and it has its own docker-compose.yml file, we'll have to configure the docker network into the NPM (Nginx Proxy Manager) docker-compose.yml to allow NPM to communicate with the Bolt Card LNDHub & Boltcard containers.

To do this we need to add these lines into our NPM external docker-compose (not the same one that is located into the bolthub directory, the one used for all your other containers) :

nano docker-compose.ymlnetworks: bolthub_boltnet: name: bolthub_boltnet external: trueBe careful, "bolthub" from "bolthub_boltnet" is based on the directory where Bolt Card LNDHub Docker docker-compose.yml file is located.

We also need to attach this network to the NPM container :

nginxproxymanager: container_name: nginxproxymanager image: 'jc21/nginx-proxy-manager:latest' restart: unless-stopped ports: - '80:80' # Public HTTP Port - '443:443' # Public HTTPS Port - '81:81' # Admin Web Port volumes: - ./nginxproxymanager/data:/data - ./nginxproxymanager/letsencrypt:/etc/letsencrypt networks: - fractalized - bolthub_boltnetYou can now recreate the NPM container to attach the network:

docker compose up -dNow, you'll have to create 2 new Proxy Hosts into NPM admin UI. First one for your domain / subdomain to the Bolt Card LNDHub GUI (boltcard_hub:9002) :

And the second one for the Boltcard container (boltcard_main:9000).

In both Proxy Host I set all the SSL options and I use my wildcard certificate but you can generate one certificate for each Proxy Host with Force SSL, HSTS enabled, HTTP/2 Suppot and HSTS Subdomains enabled.

Starting Bolt Card LNDHub & BoltCard containers

Well done! Everything is setup, we can now start the Bolt Card LNDHub & Boltcard containers !

You need to go again to the root folder of the Bolt Card LNDHub projet "bolthub" and start the docker compose stack. We'll begin wihtout a "-d" to see if we have some issues during the containers creation :

docker compose upI won't share my containers logs to avoid any senstive information disclosure about my Bolt Card LNDHub node, but you can see them from the Bolt Card LNDHub Youtube video (link with exact timestamp where it's shown) :

If you have some issues about files mounting of admin.macaroon or tls.cert because you started the docker compose stack the first time without the files located in the bolthub folder do :

docker compose down && docker compose upAfter waiting few seconds/minutes you should go to your Bolt Card LNDHub Web UI domain/sudomain (created earlier into NPM) and you should see the Bolt Card LNDHub Web UI :

if everything is OK, you now run the containers in detached mode :

docker compose up -dVoilààààà ⚡

If you need to all the Bolt Card LNDHub logs you can use :

docker compose logs -f --tail 30You can now follow the video from Bolt Card to configure your Bolt Card NFC card and using your own Bolt Card LNDHub :

~~PS : there is currently a bug when you'll click on "Connect Bolt Card" from the Bold Card Walle app, you might have this error message "API error: updateboltcard: enable_pin is not a valid boolean (code 6)". It's a know issue and the Bolt Card team is currently working on it. You can find more information on their Telegram~~

Thanks to the Bolt Card, the issue has been corrected : changelog

See you soon in another Fractalized story!

-

@ 3bf0c63f:aefa459d

2024-03-23 08:57:08

@ 3bf0c63f:aefa459d

2024-03-23 08:57:08Nostr is not decentralized nor censorship-resistant

Peter Todd has been saying this for a long time and all the time I've been thinking he is misunderstanding everything, but I guess a more charitable interpretation is that he is right.

Nostr today is indeed centralized.

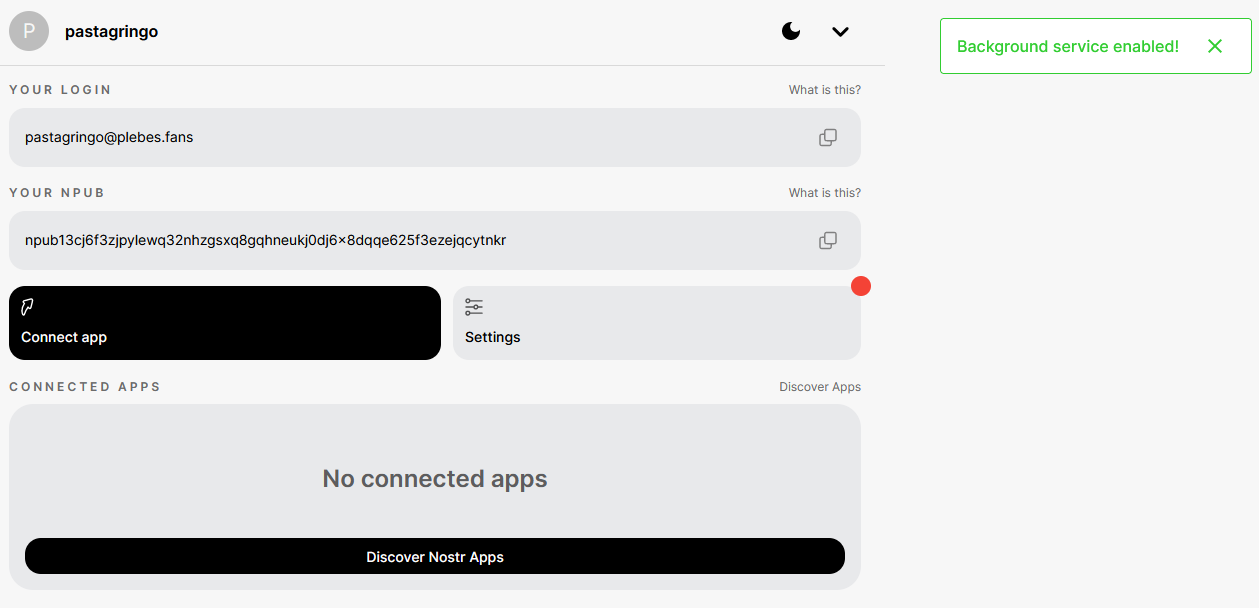

Yesterday I published two harmless notes with the exact same content at the same time. In two minutes the notes had a noticeable difference in responses:

The top one was published to

wss://nostr.wine,wss://nos.lol,wss://pyramid.fiatjaf.com. The second was published to the relay where I generally publish all my notes to,wss://pyramid.fiatjaf.com, and that is announced on my NIP-05 file and on my NIP-65 relay list.A few minutes later I published that screenshot again in two identical notes to the same sets of relays, asking if people understood the implications. The difference in quantity of responses can still be seen today:

These results are skewed now by the fact that the two notes got rebroadcasted to multiple relays after some time, but the fundamental point remains.

What happened was that a huge lot more of people saw the first note compared to the second, and if Nostr was really censorship-resistant that shouldn't have happened at all.

Some people implied in the comments, with an air of obviousness, that publishing the note to "more relays" should have predictably resulted in more replies, which, again, shouldn't be the case if Nostr is really censorship-resistant.

What happens is that most people who engaged with the note are following me, in the sense that they have instructed their clients to fetch my notes on their behalf and present them in the UI, and clients are failing to do that despite me making it clear in multiple ways that my notes are to be found on

wss://pyramid.fiatjaf.com.If we were talking not about me, but about some public figure that was being censored by the State and got banned (or shadowbanned) by the 3 biggest public relays, the sad reality would be that the person would immediately get his reach reduced to ~10% of what they had before. This is not at all unlike what happened to dozens of personalities that were banned from the corporate social media platforms and then moved to other platforms -- how many of their original followers switched to these other platforms? Probably some small percentage close to 10%. In that sense Nostr today is similar to what we had before.

Peter Todd is right that if the way Nostr works is that you just subscribe to a small set of relays and expect to get everything from them then it tends to get very centralized very fast, and this is the reality today.

Peter Todd is wrong that Nostr is inherently centralized or that it needs a protocol change to become what it has always purported to be. He is in fact wrong today, because what is written above is not valid for all clients of today, and if we drive in the right direction we can successfully make Peter Todd be more and more wrong as time passes, instead of the contrary.

See also:

-

@ 3bf0c63f:aefa459d

2024-03-19 14:32:01

@ 3bf0c63f:aefa459d

2024-03-19 14:32:01Censorship-resistant relay discovery in Nostr

In Nostr is not decentralized nor censorship-resistant I said Nostr is centralized. Peter Todd thinks it is centralized by design, but I disagree.

Nostr wasn't designed to be centralized. The idea was always that clients would follow people in the relays they decided to publish to, even if it was a single-user relay hosted in an island in the middle of the Pacific ocean.

But the Nostr explanations never had any guidance about how to do this, and the protocol itself never had any enforcement mechanisms for any of this (because it would be impossible).