-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

The Kelsen tedium

I have witnessed this same phenomenon several times: a group of friends or proto-friends is chatting happily about conservatism, traditionalism, anti-communism, economic liberalism, the free market, Olavist philosophy. It is an incredible moment, because for everyone there it is always so hard to find someone to talk to about these subjects.

Then it turns out that one of them went to law school. Having gone to law school because he believed it would bring him some knowledge (since all the philosophers of old went to law school!), this fellow, unlike the others, fails to realize that his degree is a nullity, a disgrace, a period of his life thrown away -- and he believes that the content passed on to him by the professors, who are there to help students prepare for the OAB exam, is valuable.

He starts talking about Kelsen. The pure theory of law, hermeneutics, philosophy of law. The conversation goes off the rails. Nobody knows what to say. The pure philosophy of law is not wrong, because it is just pure logic, and as such cannot be refuted; and since it bears no relation to the world, there is no way to pull another subject out of it and leave that territory. The young philosophers lose the next two hours there talking about Kelsen, Kelsen. A presence that offends them, that seems wrong, that has everything it takes to be wrong, but is right. Right and useless, it devours their ideas, which are digested by the pure theory of law.

It is imperative to establish this rule: talking about Kelsen is only allowed if his ideas are not addressed or taken into account. Only praise or insults will be tolerated: Kelsen was a good man; Kelsen was a dummy. That's it.

Here is a recorded example of the phenomenon described above, https://www.youtube.com/watch?v=CKb8Ij5ThvA: Flavio Morgenstern, all friendly, praising the other guy, saying interesting things about the world; and the other guy, who must have been his friend before going to law school, starts talking about Kelsen, quite confident that it is relevant, and on it goes with Kelsen, philosophy of law, all that tremendous tedium.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

Songs you already know

It is good to listen to the same songs you already know a little. Each new listen makes you more familiar with the song and makes it part of your inner repertoire of melodies.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

The Lightning Network solves the problem of the decentralized commit

Before reading this, see Ripple and the problem of the decentralized commit.

The Bitcoin Lightning Network can be thought of as a system similar to Ripple: there are conditional IOUs (HTLCs) that are sent in "prepare"-like messages across a route, and a secret `p` that must travel from the final receiver backwards through the route until it reaches the initial sender. Possession of that secret serves to prove the payment as well as to make the IOU hold true.

The difference is that if one of the parties doesn't send the "acknowledge" in time, the other has a trusted third party with its own clock (the clock that is valid for everybody involved) to complain to immediately at the timeout: the Bitcoin blockchain. If C has `p` and B isn't acknowledging it, C tells the Bitcoin blockchain and it will force the transfer of the amount from B to C.

Differences (or 1 upside and 3 downsides)

- The Lightning Network differs from a "pure" Ripple network in that when we send a "prepare" message on the Lightning Network, unlike on a pure Ripple network, we're not just promising we will owe something -- instead we are already putting the money on the table for the other to take if we are not responsive.

- The feature above removes the trust element from the equation. We can now have relationships with people we don't trust, as the Bitcoin blockchain will serve as an automated escrow for our conditional payments and no one will be harmed. It is therefore much easier to build networks and route payments, since trust relationships are not always required.

- However, it introduces the cost of capital. A ton of capital must be made available in channels and locked in HTLCs so payments can be routed. This leads to potential issues like the ones described in https://twitter.com/joostjgr/status/1308414364911841281.

- Another issue that comes with the necessity of using the Bitcoin blockchain as an arbiter is that it may cost a lot in fees -- much more than the value of the payment that is being disputed -- to enforce it on the blockchain.[^closing-channels-for-nothing]
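The hash-locked conditional IOUs and the backwards-travelling secret `p` described in this post can be sketched in Python. This is only an illustration under simplifying assumptions: the names `ConditionalIOU`, `prepare` and `acknowledge` are made up for this example, and timeouts and on-chain enforcement are not modelled at all.

```python
import hashlib
import secrets
from dataclasses import dataclass


@dataclass
class ConditionalIOU:
    """A hash-locked promise: `payer` owes `payee` once the preimage is shown."""
    payer: str
    payee: str
    amount: int
    payment_hash: bytes
    settled: bool = False

    def settle(self, preimage: bytes) -> bool:
        # The IOU only becomes unconditional if sha256(preimage) matches the lock.
        if hashlib.sha256(preimage).digest() == self.payment_hash:
            self.settled = True
        return self.settled


def prepare(route: list[str], amount: int, payment_hash: bytes) -> list[ConditionalIOU]:
    # "prepare" phase: one conditional IOU per hop, from the sender towards the receiver.
    return [ConditionalIOU(a, b, amount, payment_hash) for a, b in zip(route, route[1:])]


def acknowledge(ious: list[ConditionalIOU], preimage: bytes) -> bool:
    # "acknowledge" phase: the secret travels backwards, settling hop by hop.
    return all(iou.settle(preimage) for iou in reversed(ious))


# The final receiver picks the secret p and hands out only its hash.
p = secrets.token_bytes(32)
payment_hash = hashlib.sha256(p).digest()

ious = prepare(["A", "B", "C", "D"], 1000, payment_hash)
paid = acknowledge(ious, p)
```

In this toy version nothing forces a hop to settle; on Lightning that role is played by the blockchain and the HTLC timeout.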

Solutions

Because the downsides listed above are so real and problematic -- and much more so when attacks from malicious peers are taken into account -- some have argued that the Lightning Network must rely on at least some trust between peers, which partly negates the benefit.

The introduction of purely trust-backed channels is the next step in the reasoning: if we are trusting already, why not make channels that don't touch the blockchain and don't require peers to commit large amounts of capital?

The reason is, again, the ambiguity that comes from the problem of the decentralized commit. Therefore hosted channels can be good when trust is required only from one side, like in the final hops of payments, but they cannot work in the middle of routes without eroding trust relationships between peers (however, they can be useful if employed as channels between two nodes run by the same person).

The next solution is a revamped pure Ripple network, one that solves the problem of the decentralized commit in a different way.

[^closing-channels-for-nothing]: That is true even when the payment is so small that it doesn't deserve an actual HTLC that can be enforced on the chain (as per the protocol): even then the channel between the two nodes will be closed, only to make it very clear that there was a disagreement. Leaving it open would be harmful, as one of the peers could repeat the attack again and again. This is proof that ambiguity, in the case of the pure Ripple network, is a very important issue.

-

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

idea: Link sharing incentivized by satoshis

See https://2key.io/ and https://www.youtube.com/watch?v=CEwRv7qw4fY&t=192s.

I think the general idea is to make a self-serving automatic referral program for individual links, but I wasn't patient enough to deeply understand either of the above ideas.

Solving fraud is an issue. People can fake clicks.

One possible solution is to track conversions instead of clicks, but then it's too complex as the receiving side must do stuff and be trusted to do it correctly.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

SummaDB

This was a hierarchical database server similar to the original Firebase. Records were stored on a LevelDB on different paths, like:

- `/fruits/banana/color`: `yellow`
- `/fruits/banana/flavor`: `sweet`

And could be queried by path too, using HTTP. For example, a call to `http://hostname:port/fruits/banana` would return a JSON document like

```json
{
  "color": "yellow",
  "flavor": "sweet"
}
```

While a call to `/fruits` would return

```json
{
  "banana": {
    "color": "yellow",
    "flavor": "sweet"
  }
}
```

`POST`, `PUT` and `PATCH` requests also worked.

In some cases the values would be under a special `"_val"` property to disambiguate them from paths. (I may be missing some other details that I forgot.)

GraphQL was also supported as a query language, so a query like

```graphql
query {
  fruits {
    banana {
      color
    }
  }
}
```

would return `{"fruits": {"banana": {"color": "yellow"}}}`.

SummulaDB

SummulaDB was a browser/JavaScript build of SummaDB. It ran on the same Go code compiled with GopherJS, and using PouchDB as the storage backend, if I remember correctly.

It had replication between browser and server built-in, and one could replicate just subtrees of the main tree, so you could have stuff like this in the server:

```json
{
  "users": {
    "bob": {},
    "alice": {}
  }
}
```

And then only allow Bob to replicate `/users/bob` and Alice to replicate `/users/alice`. I am sure the required auth stuff was also built in.

There was also a PouchDB plugin to make this process smoother and data access more intuitive (it would hide the `_val` stuff and allow properties to be accessed directly; today I wouldn't waste time working on these hidden magic things).

The computed properties complexity

The next step, which I never managed to get fully working and caused me to give it up because of the complexity, was the ability to automatically and dynamically compute materialized properties based on data in the tree.

The idea was partly inspired by CouchDB computed views and how limited they were. I wanted a thing that would be super powerful, like, given

```json
{
  "matches": {
    "1": {
      "team1": "A",
      "team2": "B",
      "score": "2x1",
      "date": "2020-01-02"
    },
    "2": {
      "team1": "D",
      "team2": "C",
      "score": "3x2",
      "date": "2020-01-07"
    }
  }
}
```

One should be able to add a computed property at `/matches/standings` that computed the scores of all teams after all matches, for example.

I tried to complete this in multiple ways, but they were all adding much more complexity than I could handle. Maybe it would have worked better in a more flexible, powerful and functional language, or if I had more time and patience, or more people.
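To make the idea concrete, here is a rough Python sketch of what a `/matches/standings` computed property might calculate from the `matches` subtree. It is not how SummaDB worked (that part was never finished); the `standings` function and the scoring rule (3 points for a win, 1 for a draw) are assumptions made only for this example.

```python
from collections import defaultdict


def standings(matches: dict) -> dict:
    """Compute points per team from a `matches` subtree.

    Assumed rules (not from the original design): 3 points for a win,
    1 for a draw, scores written as "<team1 goals>x<team2 goals>".
    """
    points = defaultdict(int)
    for match in matches.values():
        goals1, goals2 = (int(g) for g in match["score"].split("x"))
        team1, team2 = match["team1"], match["team2"]
        if goals1 > goals2:
            points[team1] += 3
            points[team2] += 0  # make sure the losing team is listed too
        elif goals2 > goals1:
            points[team2] += 3
            points[team1] += 0
        else:
            points[team1] += 1
            points[team2] += 1
    return dict(points)


tree = {
    "matches": {
        "1": {"team1": "A", "team2": "B", "score": "2x1", "date": "2020-01-02"},
        "2": {"team1": "D", "team2": "C", "score": "3x2", "date": "2020-01-07"},
    }
}
result = standings(tree["matches"])
```

The hard part, which this sketch ignores, is recomputing such values automatically and incrementally whenever any record under `/matches` changes.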

Screenshots

This is just one very simple unfinished admin frontend client view of the hierarchical dataset.

- https://github.com/fiatjaf/summadb

- https://github.com/fiatjaf/summuladb

- https://github.com/fiatjaf/pouch-summa

-

@ 3ffac3a6:2d656657

2025-02-06 03:58:47

Motivations

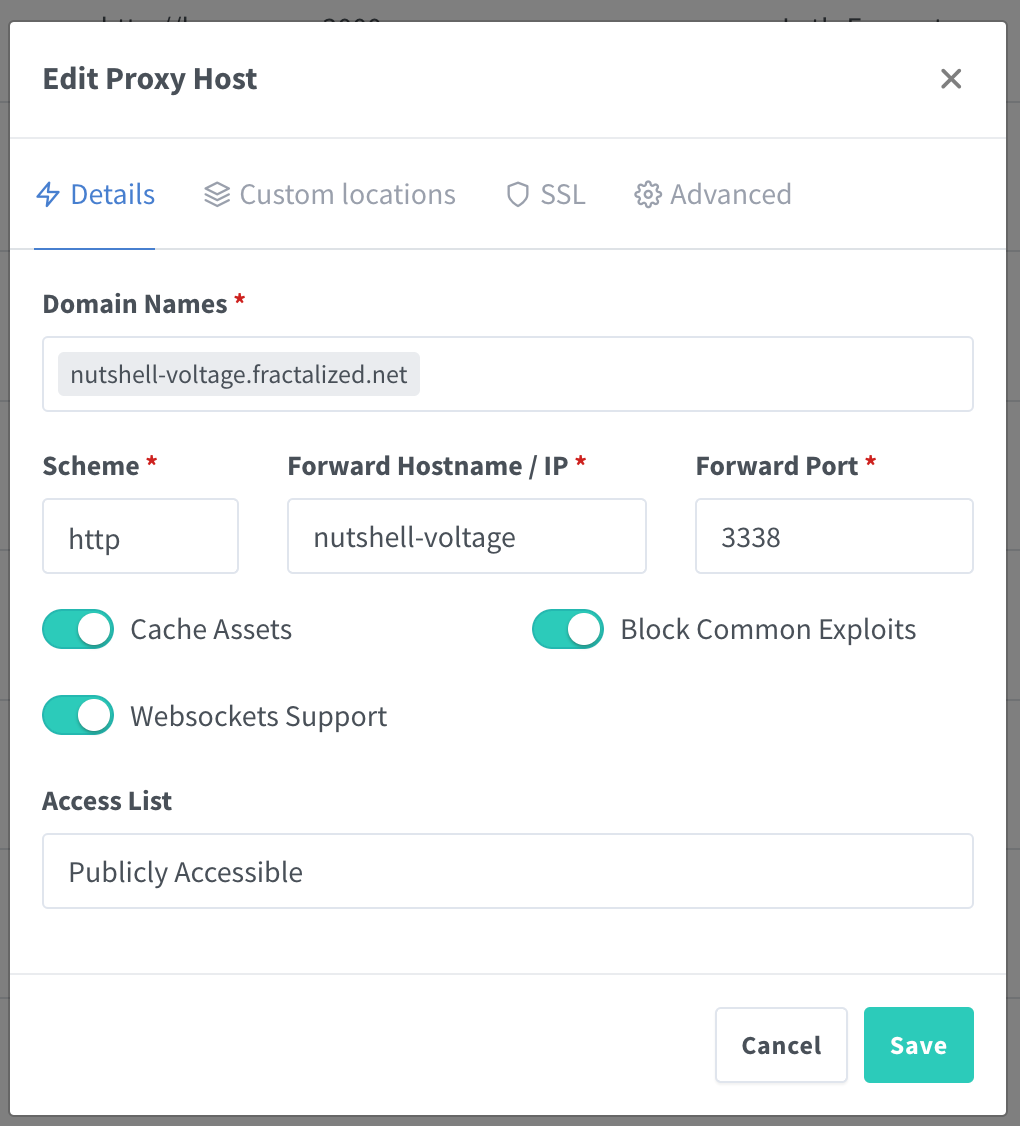

Recently, my sites hosted behind Cloudflare tunnels mysteriously stopped working—not once, but twice. The first outage occurred about a week ago. Interestingly, when I switched to using the 1.1.1.1 WARP VPN on my cellphone or PC, the sites became accessible again. Clearly, the issue wasn't with the sites themselves but something about the routing. This led me to the brilliant (or desperate) idea of routing all Cloudflare-bound traffic through a WARP tunnel in my local network.

Prerequisites

- A "server" with an amd64 processor (the WARP client only works on amd64 architecture). I'm using an old mac mini, but really, anything with an amd64 processor will do.

- Basic knowledge of Linux commands.

- Access to your Wi-Fi router's settings (if you plan to configure routes there).

Step 1: Installing the WARP CLI

1. Update your system packages:

```bash
sudo apt update && sudo apt upgrade -y
```

2. Download and install the WARP CLI:

```bash
curl https://pkg.cloudflareclient.com/pubkey.gpg | sudo gpg --yes --dearmor --output /usr/share/keyrings/cloudflare-warp-archive-keyring.gpg

echo "deb [arch=amd64 signed-by=/usr/share/keyrings/cloudflare-warp-archive-keyring.gpg] https://pkg.cloudflareclient.com/ $(lsb_release -cs) main" | sudo tee /etc/apt/sources.list.d/cloudflare-client.list

sudo apt-get update && sudo apt-get install cloudflare-warp
```

3. Register and connect to WARP:

```bash
sudo warp-cli register
sudo warp-cli connect
```

Confirm the connection with:

```bash
warp-cli status
```

Step 2: Routing Traffic on the Server Machine

Now that WARP is connected, let's route the local network's Cloudflare-bound traffic through this tunnel.

1. Enable IP forwarding:

```bash
sudo sysctl -w net.ipv4.ip_forward=1
```

Make it persistent after reboot:

```bash
echo 'net.ipv4.ip_forward=1' | sudo tee -a /etc/sysctl.conf
sudo sysctl -p
```

2. Set up firewall rules to forward traffic:

```bash
# LAN -> WARP tunnel
sudo nft add rule ip filter FORWARD iif "eth0" oif "CloudflareWARP" ip saddr 192.168.31.0/24 ip daddr 104.0.0.0/8 accept
# WARP tunnel -> LAN (replies only)
sudo nft add rule ip filter FORWARD iif "CloudflareWARP" oif "eth0" ip saddr 104.0.0.0/8 ip daddr 192.168.31.0/24 ct state established,related accept
```

Replace `eth0` with your actual network interface if different.

3. Make rules persistent:

```bash
sudo apt install nftables
# The redirect must run as root too, so pipe through `sudo tee` instead of using `>`
sudo nft list ruleset | sudo tee /etc/nftables.conf
```

Step 3: Configuring the Route on a Local PC (Linux)

On your local Linux machine:

1. Add a static route (the same `104.0.0.0/8` range used in the firewall rules above):

```bash
sudo ip route add 104.0.0.0/8 via <SERVER_IP>
```

Replace `<SERVER_IP>` with the internal IP of your WARP-enabled server. This should be a temporary solution, since it only affects the local machine. For a solution that covers the whole local network, see the next step.
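Before adding a route it can help to check which destination addresses a given prefix actually covers. The Python sketch below uses only the standard `ipaddress` module; the helper names `route_covers` and `netmask_of` and the sample address `104.16.0.1` are chosen purely for illustration.

```python
import ipaddress


def route_covers(prefix: str, address: str) -> bool:
    """Return True if `address` falls inside the routed `prefix`."""
    return ipaddress.ip_address(address) in ipaddress.ip_network(prefix)


def netmask_of(prefix: str) -> str:
    """Dotted-quad subnet mask for a CIDR prefix, as routers usually ask for it."""
    return str(ipaddress.ip_network(prefix).netmask)


# Illustrative destination address (an assumption, pick one your sites resolve to).
sample = "104.16.0.1"

covered_by_slash8 = route_covers("104.0.0.0/8", sample)
covered_by_slash24 = route_covers("104.0.0.0/24", sample)
mask = netmask_of("104.0.0.0/8")
```

A narrow prefix such as a `/24` only covers 256 addresses, so the prefix length in the route has to be at least as wide as the range you want to send through the tunnel.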

Step 4: Configuring the Route on Your Wi-Fi Router (Recommended)

If your router allows adding static routes:

1. Log in to your router's admin interface.

2. Navigate to the Static Routing section. (This may vary depending on the router model.)

3. Add a new static route:

   - Destination Network: `104.0.0.0`
   - Subnet Mask: `255.0.0.0`
   - Gateway: `<SERVER_IP>`
   - Metric: `1` (or leave it default)

4. Save and apply the settings.

One of the key advantages of this method is how easy it is to disable once your ISP's routing issues are resolved. Since the changes affect the entire network at once, you can quickly restore normal network behavior by simply removing the static routes or disabling the forwarding rules, all without the need for complex reconfigurations.

Final Thoughts

Congratulations! You've now routed all your Cloudflare-bound traffic through a secure WARP tunnel, effectively bypassing mysterious connectivity issues. If the sites ever go down again, at least you’ll have one less thing to blame—and one more thing to debug.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

Precautionary Principle

The precautionary principle that people, including Nassim Nicholas Taleb, love and treat as some form of wisdom, is actually just a justification for arbitrary acts.

In a given situation for which there's no sufficient knowledge, either A or B can be seen as the risky or the precautionary measure; there's no way to know which except if you have sufficient knowledge.

Someone could reply saying, for example, that the known risk of A is preferable to the unknown, probably orders of magnitude bigger, risk of B. But that only works if you know better, or at least have a logical explanation for the risks of B (a thing "scientists" don't have because they notoriously dislike making logical claims), in which case you do know something and are not invoking the precautionary principle anymore, just relying on your logical reasoning -- and that can be discussed and questioned by others, undermining your intended usage of the label "precautionary principle" as a magic cover for your actions.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

my personal approach on using let, const and var in javascript

Since these names can be used interchangeably almost everywhere and there are a lot of people asking and searching on the internet on how to use them (myself included until some weeks ago), I developed a personal approach that uses the declarations mostly as readability and code-sense sugar, for helping my mind, instead of expecting them to add physical value to the programs.

`let` is only for short-lived variables, defined on a single line and not changed after. Generally those variables which are there only to decrease the amount of typing. For example:

```javascript
for (let key in something) {
  /* we could use `something[key]` for this entire block,
     but it would be too many letters and not good for the
     fingers or the eyes, so we use a radically temporary variable
  */
  let value = something[key]
  ...
}
```

`const` is for all names known to be constant across the entire module, not including locally constant values. The `value` in the example above, for example, is constant in its scope and could be declared with `const`, but since there are many iterations and for each one there's a value with the same name, "value", that could trick the reader into thinking `value` is always the same. Modules and functions are the best example of `const` variables:

```javascript
const PouchDB = require('pouchdb')
const instantiateDB = function () {}
const codes = {
  23: 'atc',
  43: 'qwx',
  77: 'oxi'
}
```

`var` is for everything that may or may not be variable. Names that may confuse people reading the code, even if they are constant locally, and are not suitable for `let` (i.e., they are not completed in a simple direct declaration) qualify for being declared with `var`. For example:

```javascript
var output = '\n'
lines.forEach(line => {
  output += '  '
  output += line.trim()
  output += '\n'
})
output += '\n---'

for (let parent in parents) {
  var definitions = {}
  definitions.name = getName(parent)
  definitions.config = {}
  definitions.parent = parent
}
```

-

@ 599f67f7:21fb3ea9

2025-01-26 11:01:05


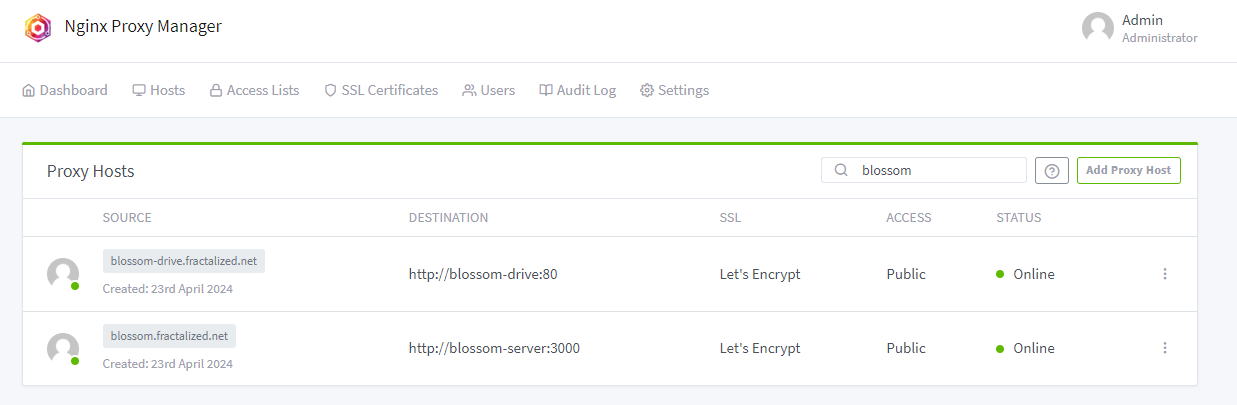

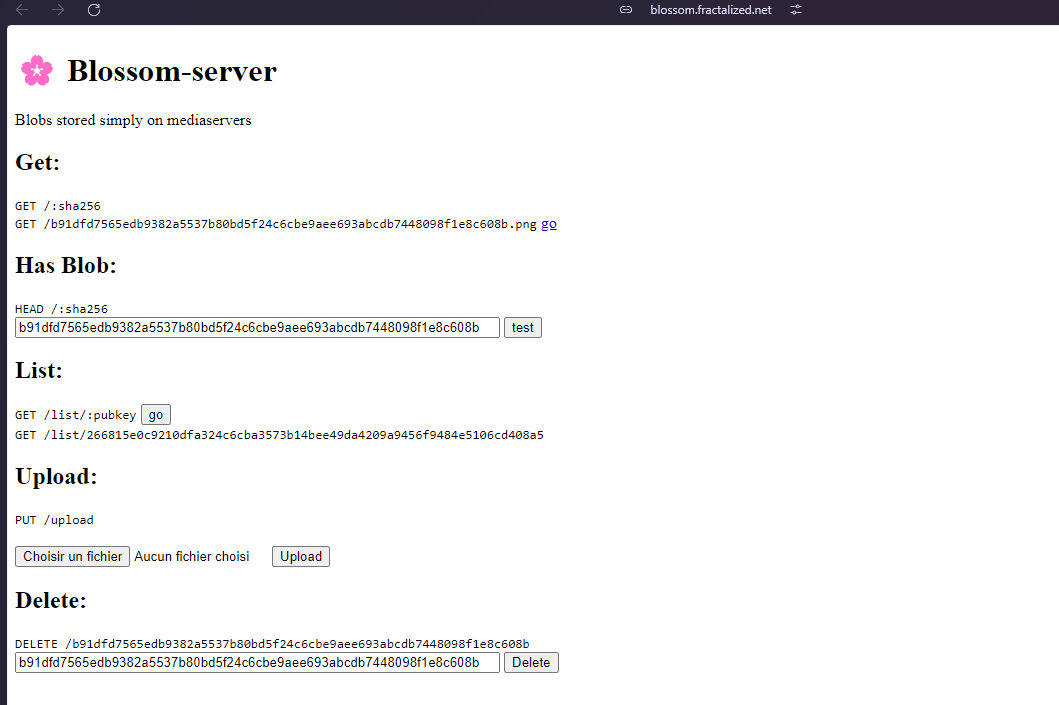

What is Blossom?

nostr:nevent1qqspttj39n6ld4plhn4e2mq3utxpju93u4k7w33l3ehxyf0g9lh3f0qpzpmhxue69uhkummnw3ezuamfdejsygzenanl0hmkjnrq8fksvdhpt67xzrdh0h8agltwt5znsmvzr7e74ywgmr72

Blossom stands for Blobs Simply Stored on Media Servers. Blobs are chunks of binary data, like files but without names. Instead of names, they are identified by their sha256 hash. The advantage of using sha256 hashes instead of names is that hashes are universal IDs that can be computed from the file itself using the sha256 hashing algorithm.

💡 file -> sha256 -> hash

Blossom is therefore a set of HTTP endpoints that let users store and retrieve blobs on servers using their nostr identity.

Why Blossom?

As we just mentioned, by using nostr keys as the identity, Blossom lets data be "owned" by the user. This greatly simplifies the question of "what is spam" for server hosting. For example, on our Blossom we only allow uploads by verified community members who have a NIP-05 with us.

Users can upload to multiple Blossom servers, for example one hosted by their community, a paid one, and a free public one, to establish redundancy for their data. Blobs can be mirrored between Blossom servers, much like nostr relays can relay events to one another. This improves Blossom's censorship resistance.

Below is a brief comparison table between torrents, Blossom and centralized CDN servers. (Assuming there are many seeders for the torrents and that multiple servers are used with Blossom.)

| | Torrents | Blossom | Centralized CDN |
| --- | --- | --- | --- |
| Decentralized | ✅ | ✅ | ❌ |
| Censorship resistance | ✅ | ✅ | ❌ |
| Can I use it to post kitten pictures on social media? | ❌ | ✅ | ✅ |

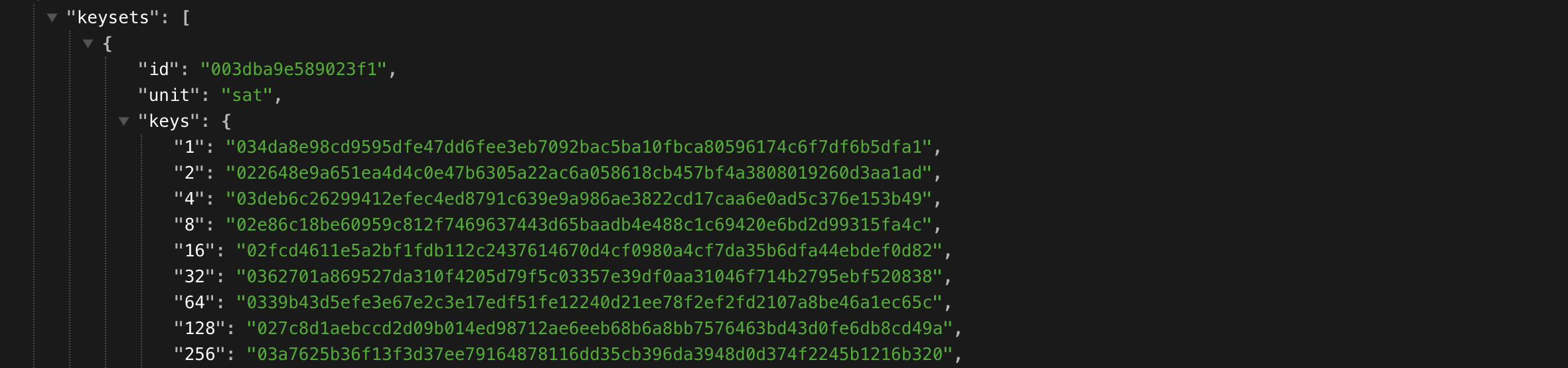

How does it work?

Blossom uses several kinds of nostr events to communicate with the media server.

| kind | description | BUD |
| --- | --- | --- |
| 24242 | Authorization event | BUD01 |
| 10063 | User Server List | BUD03 |

kind:24242 - Authorization

This is essentially what we already described when talking about using nostr keys as user IDs. In the event, the user tells the server that they want to upload or delete a file and signs it with their nostr keys. The server runs some checks on this event and then carries out the user's command if everything looks fine.

kind:10063 - User Server List

This is used by the user to announce which media servers they upload to. That way, when a client sees this list, it knows where to upload the user's files. It can also upload to several of the servers in the list to ensure redundancy. On the retrieval side, if for some reason one of the servers in the user's list is down, or the file can no longer be found there, the client can use this list to try to fetch the file from the other servers in it. Since blobs are identified by their hashes, the same blob has the same hash on any media server. All the client needs to do is swap the URL for that of a different server.
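The hash-as-identifier and server-fallback ideas above can be sketched in a few lines of Python. This is a minimal illustration, not a Blossom client: the server URLs are hypothetical placeholders, the helper names are made up for this example, and it assumes blobs are fetched at `<server>/<sha256>` without doing any network requests.

```python
import hashlib


def blob_id(data: bytes) -> str:
    """file -> sha256 -> hash: the blob's ID is the hex sha256 of its bytes."""
    return hashlib.sha256(data).hexdigest()


def candidate_urls(sha256_hex: str, servers: list[str]) -> list[str]:
    """The same blob has the same hash everywhere, so only the host changes."""
    return [f"{server.rstrip('/')}/{sha256_hex}" for server in servers]


def is_expected_blob(data: bytes, sha256_hex: str) -> bool:
    """A client can verify whatever a server returned against the hash it asked for."""
    return blob_id(data) == sha256_hex


# Hypothetical server list, standing in for a user's kind:10063 list.
servers = ["https://blossom.example.com/", "https://media.example.org"]

photo = b"pretend these are the bytes of a kitten picture"
sha = blob_id(photo)
urls = candidate_urls(sha, servers)
```

A client would try `urls` in order and accept the first response for which `is_expected_blob` returns true.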

Beyond the basics of how Blossom works, there are also other event kinds that make Blossom even more interesting.

| kind | description |
| --- | --- |
| 30563 | Blossom Drives |
| 36363 | Server Listing |
| 31963 | Server Review |

kind:30563 - Blossom Drives

This event kind makes it easy to organize blobs into folders, as we are used to with drives (think Google Drive, iCloud, Proton Drive, etc.). The event contains information about the folder structure and the drive's metadata.

kind:36363 and kind:31963 - Listing and Review

These event kinds let users discover and review media servers through nostr. kind:36363 is a server listing that contains the server's URL. kind:31963 is a review, where users can rate servers.

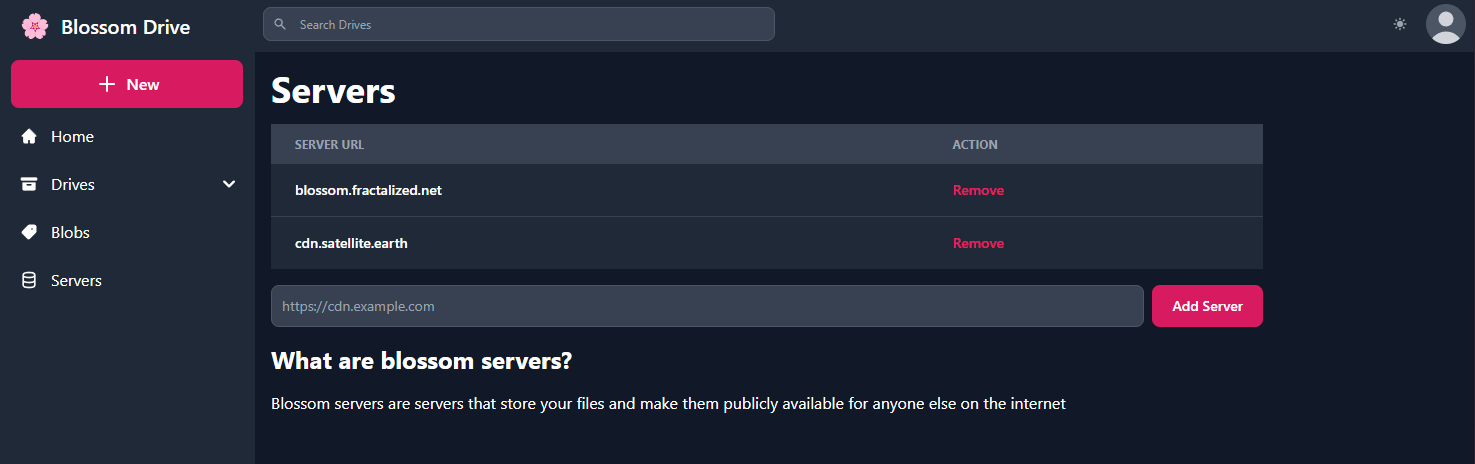

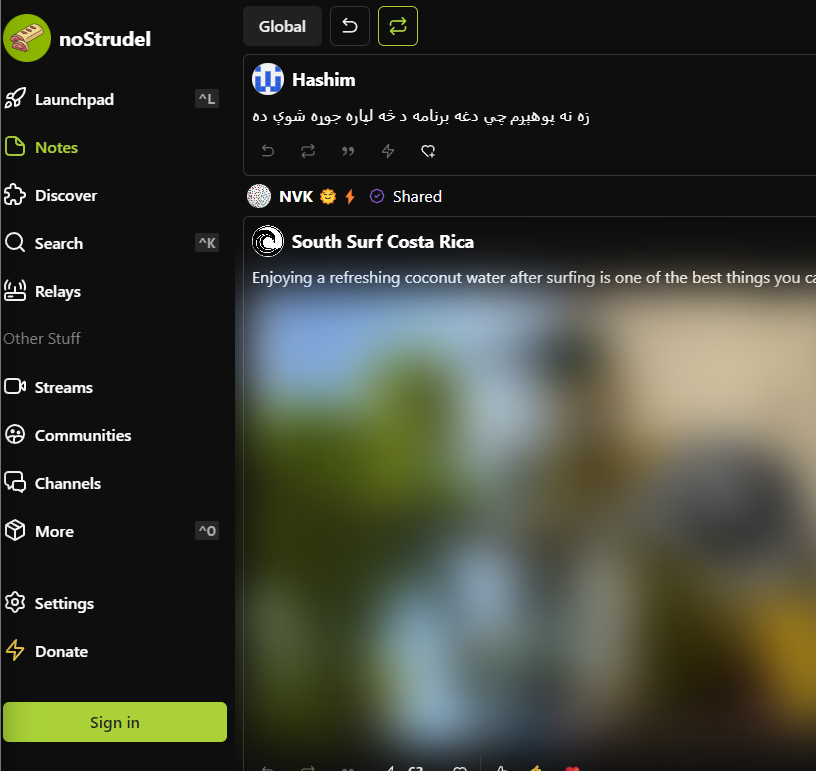

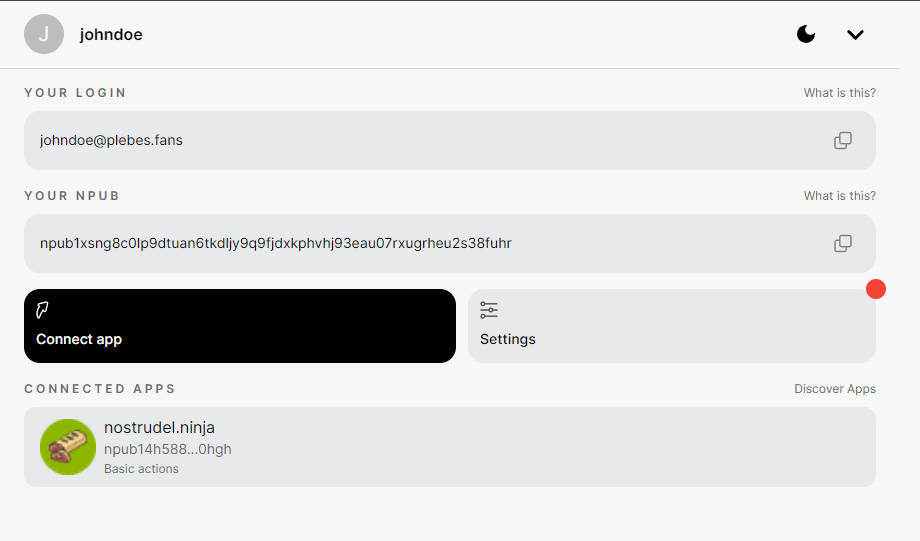

How do I use it?

Find a server

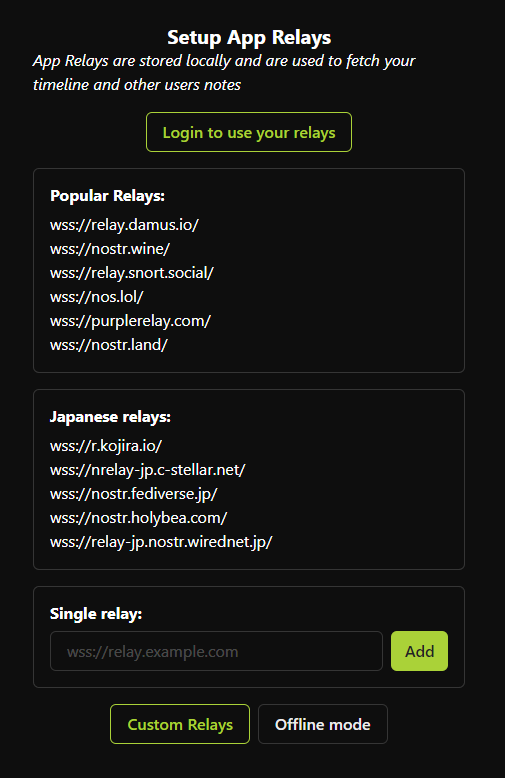

First you will need to choose a Blossom server to upload your files to. You can browse the public ones at blossomservers.com. Some of them are paid, others may require your nostr keys to be on an allowlist.

Then you can go to the server's URL and try uploading a small file, such as a photo. If you are happy with the server (it is fast and hasn't failed you yet), you can add it to your User Server List. We will briefly cover how to do this in noStrudel and Amethyst (you only need to do this once; once your updated list is published, clients can simply fetch it from nostr).

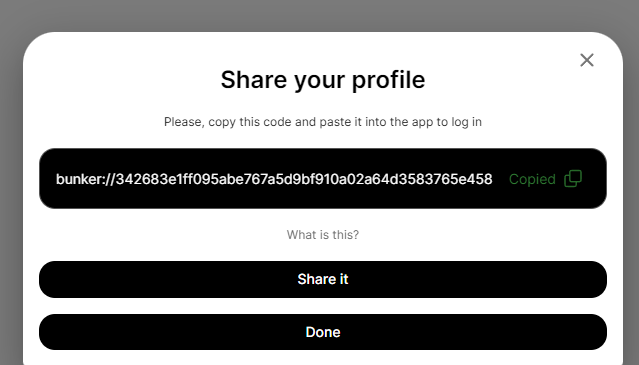

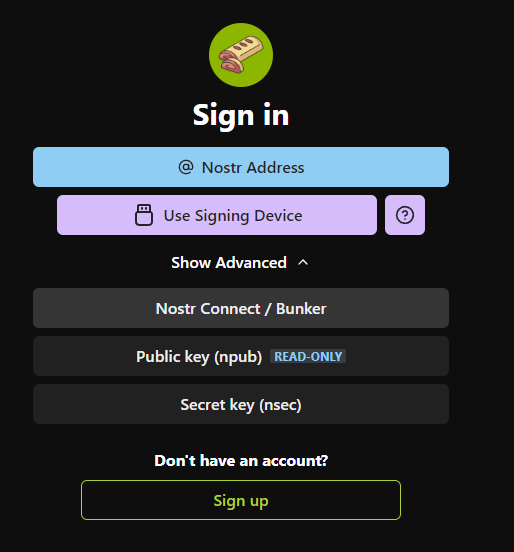

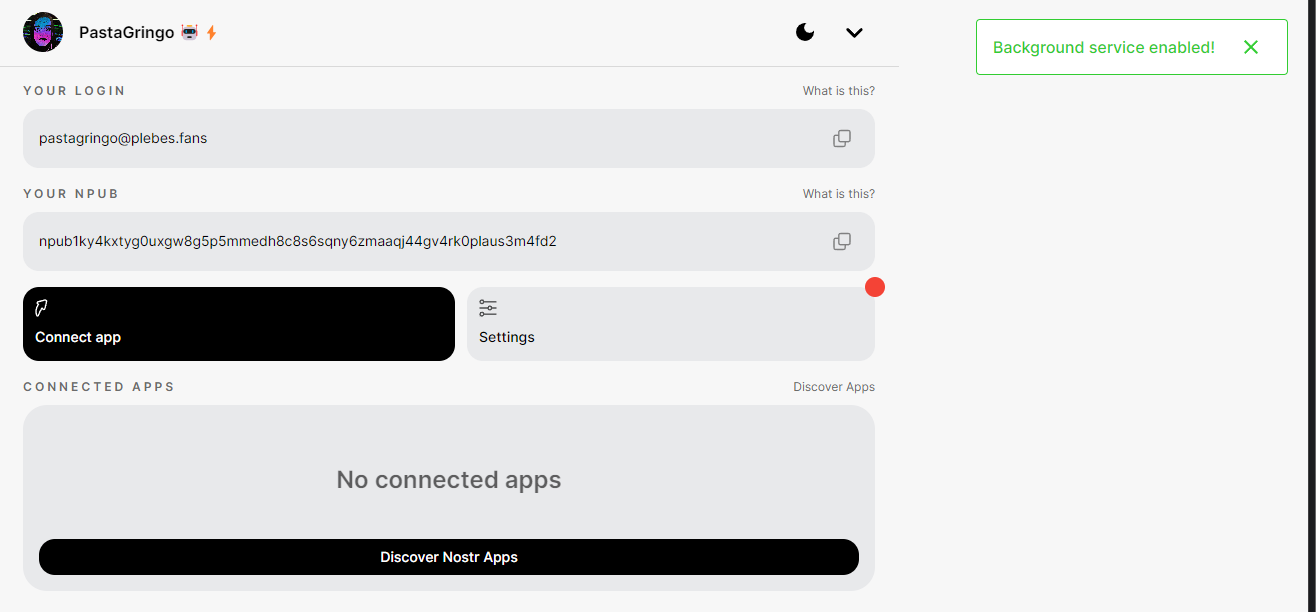

noStrudel

- Find Relays in the sidebar, then choose Media Servers.

- Add a media server, or better yet, several.

- Publish your server list. ✅

Amethyst

- In the sidebar, find Media servers.

- Under Blossom Servers, add your media servers.

- Sign and publish. ✅

Now, when you go to make a post and attach a photo, for example, it will be uploaded to your Blossom server.

⚠️ Keep in mind that you should assume the files you upload will be public. Although you can password-protect a file, this has not been audited.

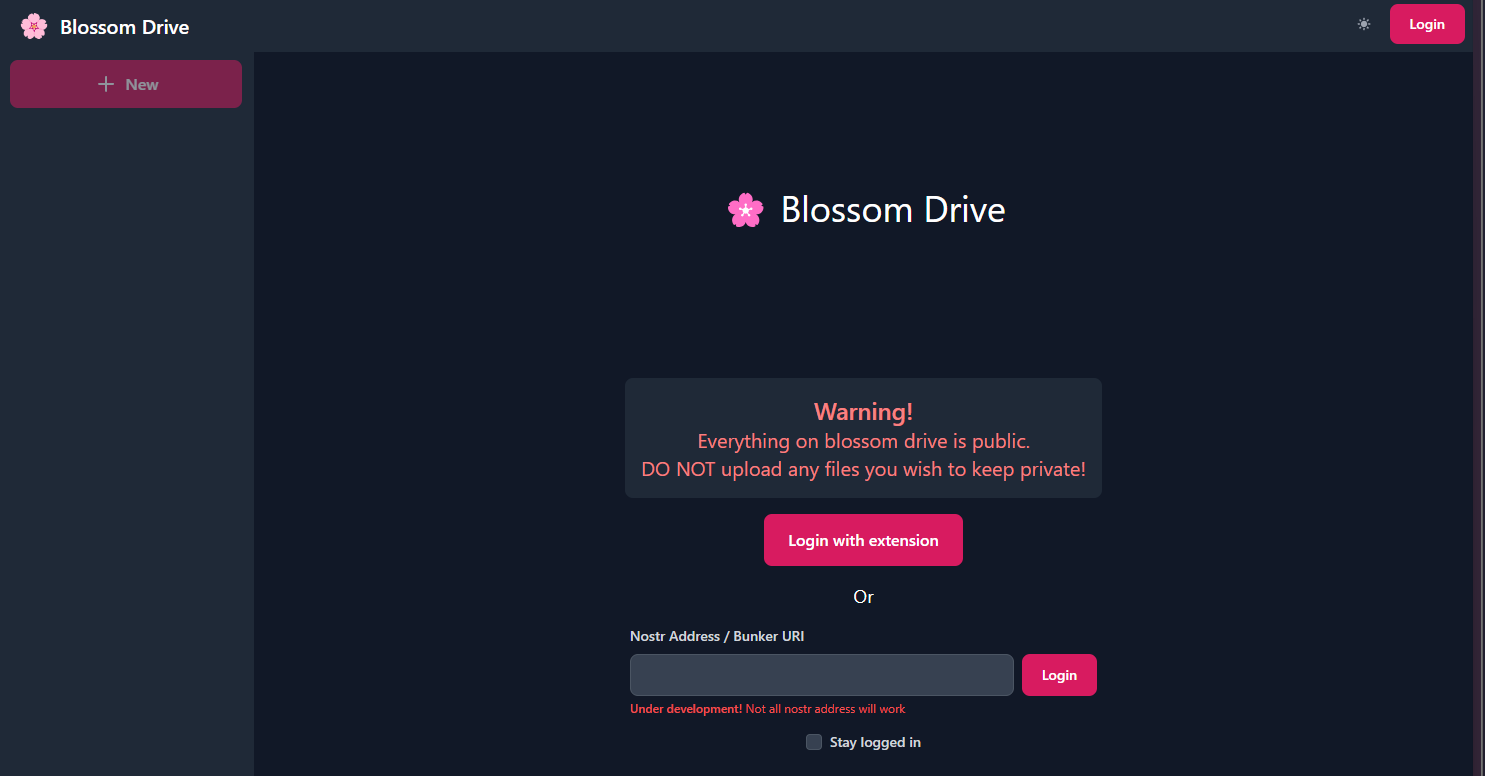

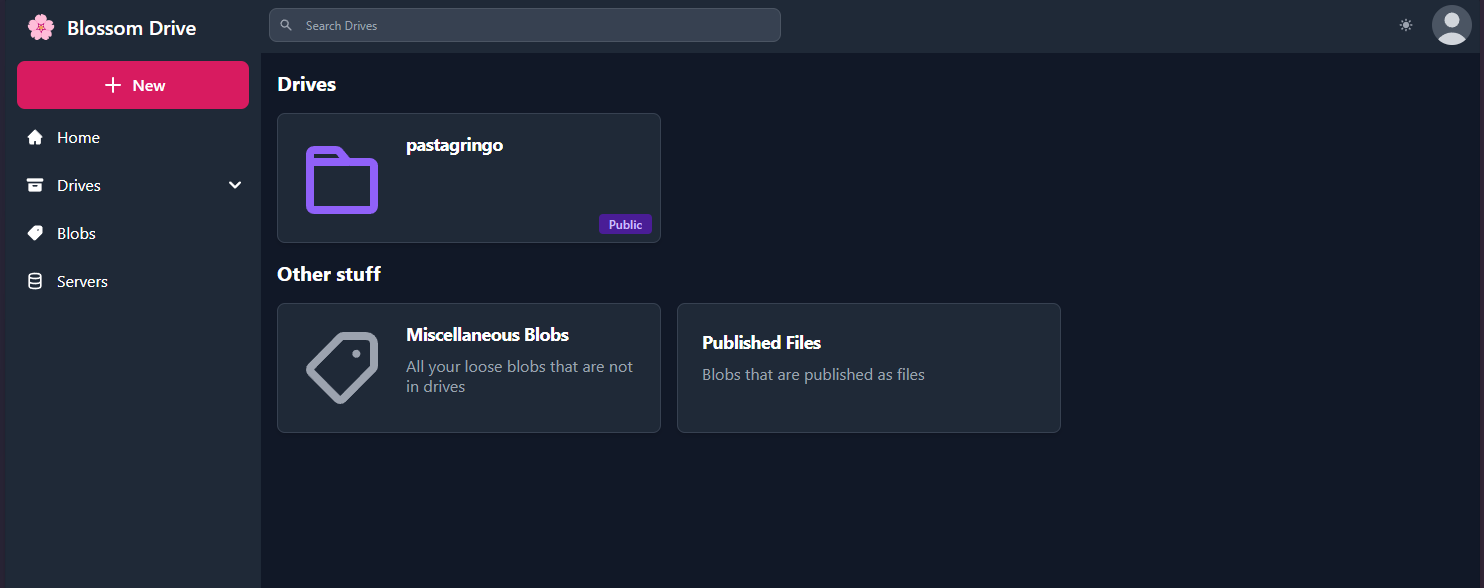

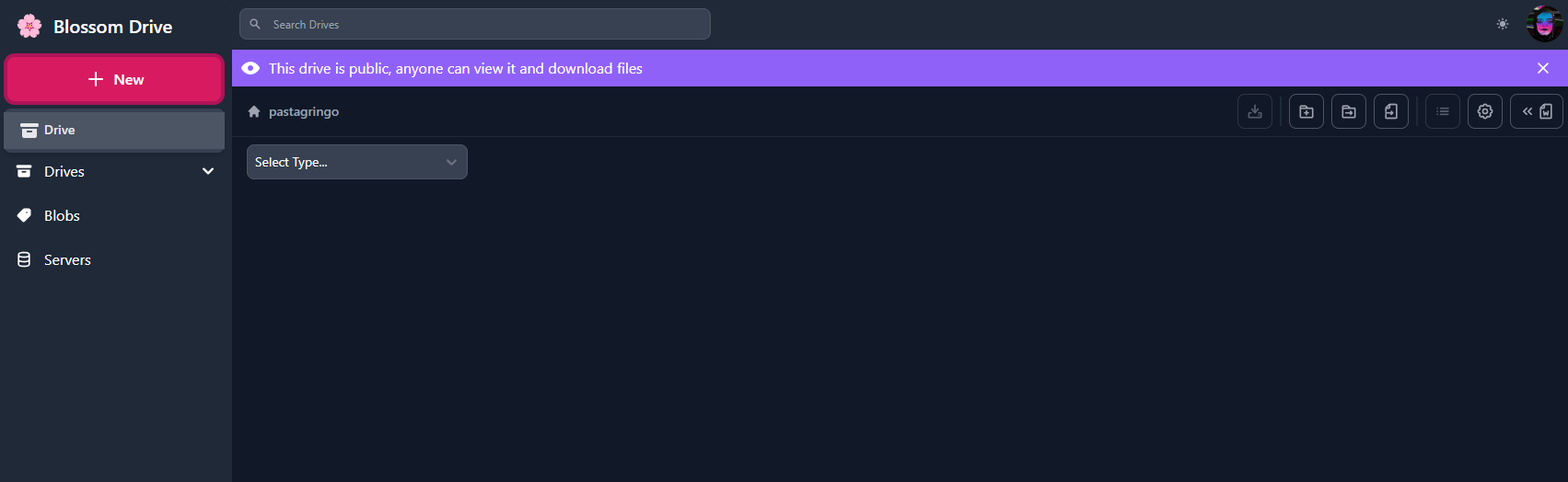

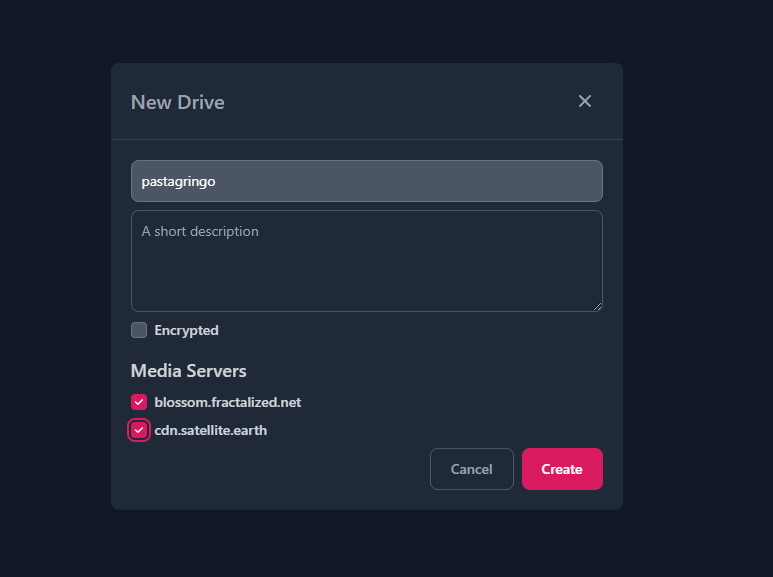

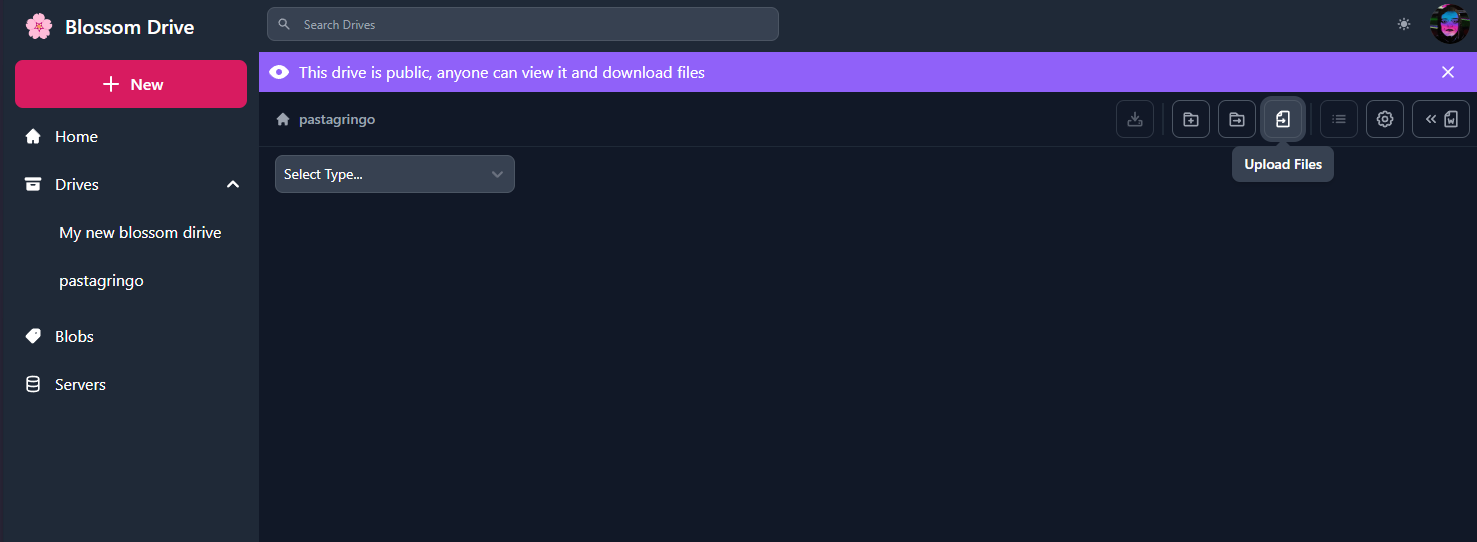

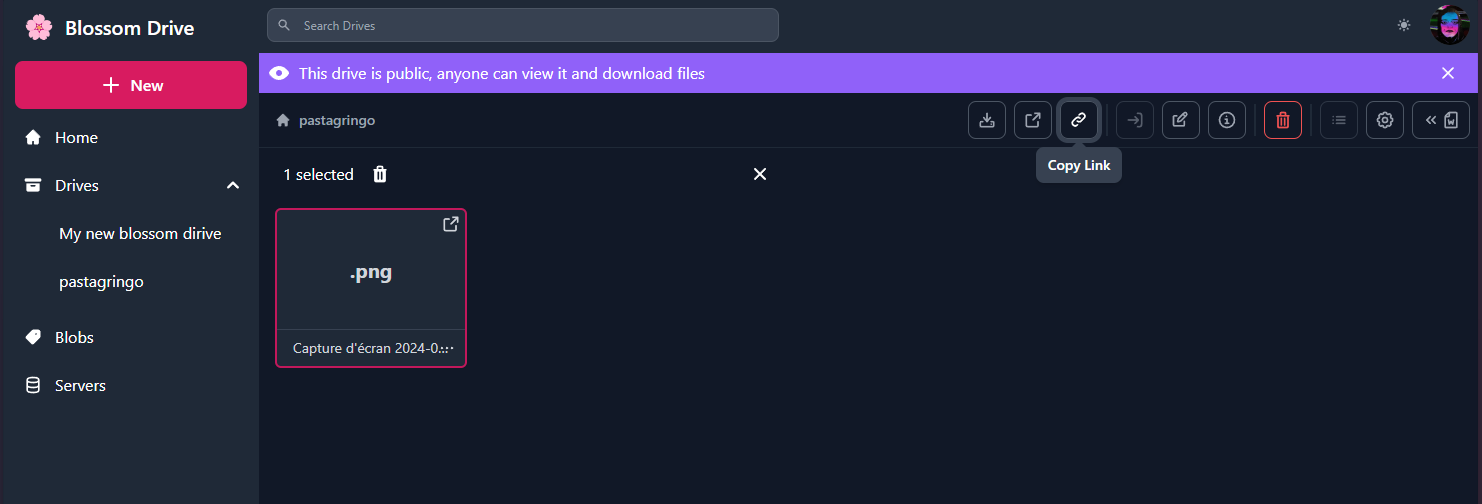

Blossom Drive

As we mentioned earlier, we can publish events to organize our blobs into folders. This can be great for sharing files with your team, or simply for keeping things organized.

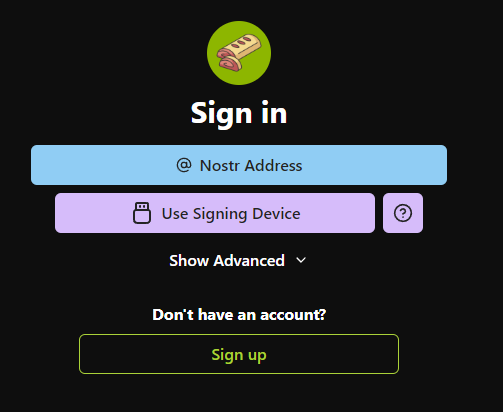

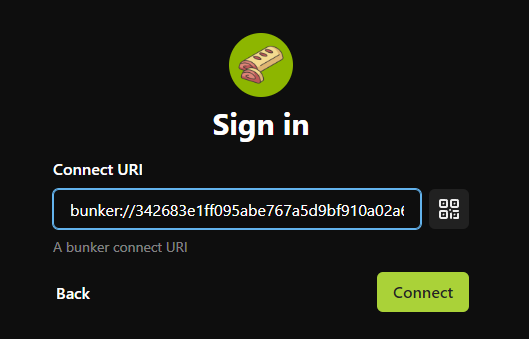

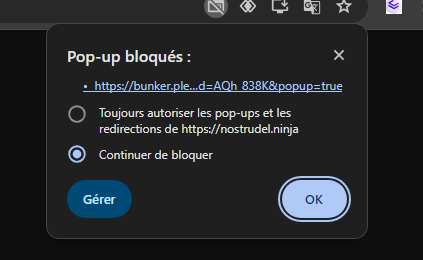

To try it out, go to blossom.hzrd149.com (or our community instance at blossom.bitcointxoko.com) and log in with your preferred method.

You can create a new drive and add blobs from there.

Bouquet

If you use multiple servers for redundancy, Bouquet is a good way to get an overview of all your files. Use it to upload and browse your media across different servers and to sync blobs between them.

Cherry Tree

nostr:nevent1qvzqqqqqqypzqfngzhsvjggdlgeycm96x4emzjlwf8dyyzdfg4hefp89zpkdgz99qyghwumn8ghj7mn0wd68ytnhd9hx2tcpzfmhxue69uhkummnw3e82efwvdhk6tcqyp3065hj9zellakecetfflkgudm5n6xcc9dnetfeacnq90y3yxa5z5gk2q6

Cherry Tree lets you split a file into chunks, upload them to multiple Blossom servers, and later reassemble them somewhere else.

Conclusion

Blossom is still under development, but there are already many interesting things you can do with it to make yourself and your community more sovereign. Give it a try!

If you want to keep up with Blossom's development, follow nostr:nprofile1qyghwumn8ghj7mn0wd68ytnhd9hx2tcpzfmhxue69uhkummnw3e82efwvdhk6tcqyqnxs90qeyssm73jf3kt5dtnk997ujw6ggy6j3t0jjzw2yrv6sy22ysu5ka and send a big zap for the excellent work.


-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

idea: An open log-based HTTP database for any use case

A single, read/write open database for everything in the world.

- A hosted database that accepts anything you put on it and stores it in order.

- Anyone can update it by adding new stuff.

- To make sense of the data you can read only the records that interest you, in order, and reconstruct a local state.

- Each updater pays a fee (anonymously, in satoshis) to store their piece of data.

- It's a single store for everything in the world.

Cost and price estimates

Prices for guaranteed storage for 3 years:

- 20 satoshis = 1KB

- 20 000 000 satoshis = 1GB

https://www.elephantsql.com/ charges $10/mo for 1GB of data, 3 600 000 satoshis for 3 years

If 3 years is not enough, people can move their stuff elsewhere when it's time, or pay to keep specific log entries for more time.

Other considerations

- People provide a unique id when adding a log so entries can be prefix-matched by it, like `myapp.something.random`

- When fetching, instead of just fetching raw data, add a (paid?) option to fetch and apply a `jq` map-reduce transformation to the matched entries
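As a rough illustration of the whole idea (ordered appends, a per-entry fee, prefix-matched reads and local state reconstruction), here is an in-memory Python sketch. Everything in it is hypothetical: the `OpenLog` class, the `key=value` record format, and the assumption that fees are charged per started kilobyte of 1000 bytes at the 20 satoshis/KB estimate.

```python
import math
from dataclasses import dataclass, field

SATS_PER_KB = 20  # from the 3-year storage estimate in this post


@dataclass
class OpenLog:
    """An append-only log: accepts anything and stores it in order."""
    entries: list = field(default_factory=list)

    def append(self, entry_id: str, data: bytes) -> int:
        # Assumption: the fee is charged per started KB (1 KB = 1000 bytes).
        fee = math.ceil(len(data) / 1000) * SATS_PER_KB
        self.entries.append((entry_id, data))
        return fee

    def read(self, prefix: str) -> list:
        # Only the records that interest you, still in insertion order.
        return [data for entry_id, data in self.entries if entry_id.startswith(prefix)]


def rebuild_state(records: list) -> dict:
    # Assumed record format "key=value"; later entries override earlier ones.
    state = {}
    for record in records:
        key, _, value = record.decode().partition("=")
        state[key] = value
    return state


log = OpenLog()
fee = log.append("myapp.fruits.1", b"color=yellow")
log.append("otherapp.x", b"color=red")
log.append("myapp.fruits.2", b"color=green")

state = rebuild_state(log.read("myapp."))
one_gb_fee = math.ceil(10**9 / 1000) * SATS_PER_KB
```

The `jq` transformation option would replace `rebuild_state` with a user-supplied reduction run on the server side.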

-

@ b17fccdf:b7211155

2025-01-21 18:33:28


CHECK OUT at ~ > ramix.minibolt.info < ~

Main changes:

- Adapted to Raspberry Pi 5, with the possibility of using internal storage (a PCIe to M.2 adapter + NVMe SSD): connect the drive directly to the board, remove the instability issues of the USB connection, and unlock higher transfer speeds.

- Based on Debian 12 (Raspberry Pi OS Bookworm - 64-bit).

- Updated all services that have been tested until now, to the latest version.

- As in the MiniBolt guide, the I2P, Fulcrum, and ThunderHub guides are now part of the core guide.

- All UI & UX improvements in the MiniBolt guide are included.

- Fixed some links and wrong commands.

- Some existing guides have been improved to clarify the steps to follow.

Important notes:

- The social media accounts (RRSS) will be the same as the original MiniBolt project (for now) | More info -> HERE <-

- The common resources like the Roadmap or Networkmap have been merged and will be used together | Check -> HERE <-

- The attempt to upgrade from Bullseye to Bookworm (RaspiBolt to RaMiX migration) has failed due to several difficult-to-resolve dependency conflicts, so unfortunately, there will be no dedicated migration guide and only the possibility to start from scratch ☹️

⚠️ Attention‼️-> This guide is in the WIP (work in progress) state and hasn't been completely tested yet. Many steps may be incorrect. Pay special attention to the "Status: Not tested on RaMiX" tag at the beginning of the guides. Be careful and act under your own responsibility.

For Raspberry Pi lovers!❤️🍓

Enjoy it RaMiXer!! 💜

By ⚡2FakTor⚡ for the plebs with love ❤️🫂


-

@ 3ffac3a6:2d656657

2025-02-04 12:34:24

In the glorious days of the early 2000s, when Orkut was still king and humanity's greatest dilemma was choosing the least embarrassing polyphonic ringtone, my friend Luciano decided to get married. And, as dictated by the age-old tradition of men who make questionable decisions, we organized a bachelor party. The stage for this epic? The legendary Skorpius, a popular brothel in Belo Horizonte, famous not only for its adult entertainment professionals but also for the striptease shows that made anyone feel like the star of a B movie.

The gang was all there: me, Anita (yes, my girlfriend, because there's no fuss around here), her brother and a lively crowd. We had a big table, full of beer, laughter and the energy of people who think they are living a historic moment. We hired a lap dance show for the groom, because that is what one does on these occasions, and the mood was pure fun.

Among the guests was Luciano's future brother-in-law, the husband of the bride's sister. A guy from the countryside, our age, but with an innocence that seemed to have been imported straight from a fairy tale. The boy drank as if there were no tomorrow and was absolutely hypnotized by the dancers. Until one of them, perhaps moved by a maternal instinct or simply by the spirit of mischief, decided to give the young man special attention.

And there he was: when we got distracted for two minutes, the brother-in-law was KISSING the professional on the mouth. Yes, in the middle of Skorpius, with the conviction of someone who thought he had found true love. An important detail: the girl had only one arm. Nothing against it, but the scene as a whole was so surreal that Luciano, in an act of brotherhood and damage control, pulled the brother-in-law out of there before he asked for her hand in marriage (in this case, literally).

Two months later, the universe conspired toward the grand climax: a birthday barbecue for Edinanci, with the whole gang together. Luciano's wife (her name is lost to memory, but the resentment stayed on record) was furious. She made a diplomatic tour of the event, going from girlfriend to girlfriend, from wife to wife:

"Did you know the boys went to a brothel and hired a striptease show at Luciano's bachelor party?"

The brother-in-law, eaten up by guilt, had confessed his "sin" to the sister, who in turn told Luciano's bride, who decided to turn the barbecue into a small claims court.

But nothing topped the moment when she got to Anita:

"Did you know about this?"

And Anita, with the serenity of someone who owes life nothing:

"Of course I knew. I was there!"

End of story? The brother-in-law carried on with his journey of self-knowledge and regret. Luciano, however, won the grand prize: a lightning divorce, less than six months into the marriage. Moral of the story? Never underestimate the power of a brothel, a naive brother-in-law and a brutally honest Anita.

-

@ 3ffac3a6:2d656657

2025-02-04 04:31:26

In the waning days of the 20th century, a woman named Annabelle Nolan was born into an unremarkable world, though she herself was anything but ordinary. A prodigy in cryptography and quantum computing, she would later adopt the pseudonym Satoshi Nakamoto, orchestrating the creation of Bitcoin in the early 21st century. But her legacy would stretch far beyond the blockchain.

Annabelle's obsession with cryptography was not just about securing data—it was about securing freedom. Her work in quantum computing inadvertently triggered a cascade of temporal anomalies, one of which ensnared her in 2011. The event was cataclysmic yet silent, unnoticed by the world she'd transformed. In an instant, she was torn from her era and thrust violently back into the 16th century.

Disoriented and stripped of her futuristic tools, Annabelle faced a brutal reality: survive in a world where her knowledge was both a curse and a weapon. Reinventing herself as Anne Boleyn, she navigated the treacherous courts of Tudor England with the same strategic brilliance she'd used to design Bitcoin. Her intellect dazzled King Henry VIII, but it was the mysterious necklace she wore—adorned with a bold, stylized "B"—that fueled whispers. It was more than jewelry; it was a relic of a forgotten future, a silent beacon for any historian clever enough to decode her true story.

Anne's fate seemed sealed as she ascended to queenship, her influence growing alongside her enemies. Yet beneath the royal intrigue, she harbored a desperate hope: that the symbol around her neck would outlast her, sparking curiosity in minds centuries away. The "B" was her signature, a cryptographic clue embedded in history.

On the scaffold in 1536, as she faced her execution, Anne Boleyn's gaze was unwavering. She knew her death was not the end. Somewhere, in dusty archives and encrypted ledgers, her mark endured. Historians would puzzle over the enigmatic "B," and perhaps one day, someone would connect the dots between a queen, a coin, and a time anomaly born from quantum code.

She wasn't just Anne Boleyn. She was Satoshi Nakamoto, the time-displaced architect of a decentralized future, hiding in plain sight within the annals of history.

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

hyperscript-go

A template rendering library similar to hyperscript for Go.

Better than writing HTML and Golang templates.

See also

-

@ 42342239:1d80db24

2025-01-18 08:31:05

Preparedness is a hot topic these days. In Europe, Poland has recently introduced compulsory lessons in weapons handling for schoolchildren for war-preparedness purposes. In Sweden, the Swedish Civil Contingencies Agency (MSB) has recently published a brochure on what to do "If crisis or war comes".

However, in the event of war, a country must have a robust energy infrastructure. Sweden does not seem to have this, at least judging by the electricity price turbulence in southern Sweden in recent years. Nor does Germany. The vulnerabilities are many and serious. It's hard not to be reminded of a Swedish prime minister who, just eleven years ago, saw defense as a special interest.

A secure food supply is another crucial factor for a country's resilience. This is something that Sweden lacks. In the early 1990s, nearly 75 percent of the country's food was produced domestically. Today, half of it must be imported. This makes our country more vulnerable to crises and disruptions. Despite our extensive agricultural areas, we are not even self-sufficient in basic commodities like potatoes, which is remarkable.

The government's signing of the Kunming-Montreal Framework for Biological Diversity two years ago risks exacerbating the situation. According to the framework, countries must significantly increase their protected areas over the coming years. The goal is to protect biological diversity. By 2030, at least 30% of all areas, on land and at sea, must be conserved. Sweden, which currently conserves around 15%, must identify large areas to be protected over the coming years. With shrinking fields, we risk getting less wheat, fewer potatoes, and less rapeseed. It's uncertain whether technological advancements can compensate for this, especially when the amount of pesticides and industrial fertilizers must be reduced significantly.

In Danish documents on the "roadmap for sustainable development" of the food system, the possibility of redistributing agricultural land (land distribution reforms) and agreements on financing for restoring cultivated land to wetlands (the restoration of cultivated, carbon-rich soils) are discussed. One cannot avoid the impression that the cultivated areas need to be reduced, in some cases significantly.

The green transition has been a priority on the political agenda in recent years, with the goal of reducing carbon emissions and increasing biological diversity. However, it has become clear that the transition risks having consequences for our preparedness.

One example is the debate about wind power. On the one hand, wind power is said to contribute to reducing carbon emissions and increasing renewable energy. On the other hand, it is said to pose a security risk, as wind turbines can affect radio communication and radar surveillance.

Of course, it's easy to be in favor of biological diversity, but what do we do if this goal comes into conflict with the needs of a robust societal preparedness? Then we are faced with a difficult prioritization. Should we put the safety of people and society before the protection of nature, or vice versa?

“Politics is not the art of the possible. It consists in choosing between the disastrous and the unpalatable,” said J. K. Galbraith, one of the most influential economists of the 20th century. Maybe we can’t have our cake and eat it too?

-

@ 3bf0c63f:aefa459d

2024-01-14 13:55:28

Shampoo

After four and a half years without using shampoo, and with reasonably long hair, you can see the enormous difference between putting nothing on it and using L'Oréal Elsève, or between using that and Seda Ceramidas Cocriações.

The most notable difference in the first case is that the hair loses the natural oiliness that holds the curls together and becomes just an amorphous mass of dry, disheveled strands, none touching another. In the second case the strands do touch again, but they stay tangled. Applying conditioner to "hydrate" makes the hair heavy and limp, falling to the sides.

Besides the fact that shampoos always come with the same recommendations on the back ("for best results, use our complete line"), the strangest thing is that people make judgments about hair being "dry" or "oily" when they have never seen it in a "natural" state, or at least one closer to natural. On the contrary, they are always applying a drying fluid, the shampoo, and then a wetting fluid, the conditioner, each of which can have different effects on each head of hair, which should totally and utterly invalidate any judgment about oiliness.

On the other hand, although there are discussions here and there about the quality of shampoos and about which one suits each kind of hair (discussions that, as the paragraph above should have made clear, are totally devoid of any basis in reality), nobody discusses the quality of the water. The water each person showers with must have an influence at least equal to that of the shampoo (or non-shampoo).

In the end, people spend their whole lives using the wrong shampoo, without knowing what they are doing, reaching conclusions based on nothing about their own hair and other people's, without considering the right data (indeed, without even imagining that any data might exist beyond the most immediate perception and each person's hairdresser feeling), or else switching shampoos every time their hair turns out differently, fooled by randomness.

-

@ f88e6629:e5254dd5

2025-01-17 14:10:19

...which allow online payments to be sent directly from one party to another without going through a financial institution.

- Without sovereign and accessible payments we are losing censorship resistance.

- Without censorship resistance even other core characteristics are in danger - including scarcity and durability.

- This affects every bitcoiner including sworn hodlers and MSTR followers.

| Property | Description | Fulfillment |
| --- | --- | --- |
| Scarce | Fixed supply forever. Instantly and costlessly verifiable | 🟢 Good, but can be harmed without censorship resistance |
| Portable | Effortless to store and move, with negligible costs | 🟠 Onchain transactions can be expensive, other layers require onchain to be sovereign. Easy portability is offered by custodians only. |
| Divisible | Infinitely divisible | 🟠 Smaller units than dust are available only for LN users, which most people can’t use in a sovereign way. |
| Durable | Exists forever without deterioration | 🟢 Good, but can be harmed without censorship resistance |
| Fungible | Every piece is forever the same as every other piece | 🟡 Onchain bitcoin is not fungible. |
| Acceptable | Everyone, anywhere, can send and receive | 🟠 Most people are not able to send and receive in a sovereign way. |
| Censorship Resistant | You hold it. Nobody can take it or stop you sending it | 🟠 Custodians are honey-pots that can and will be regulated |

➡️ We need accessible, scalable, and sovereign payment methods

-

@ 42342239:1d80db24

2025-01-10 09:21:46

It's not easy to navigate today's heavily polluted media landscape. If it's not agenda-setting journalism, then it's "government by journalism" or "åfanism" (i.e. clickbait journalism) that causes distortions in what we, as media consumers, get to see. On social media, bot armies and troll factories pollute the information landscape like the German Ruhr area 100 years ago - and who knows exactly how all these opaque algorithms select the information that's placed in front of our eyes. While true information is sometimes censored, as pointed out by the founder of Meta (then Facebook) the other year, the employees of censorship authorities somehow suddenly go on vacation when those in power spread false information.

The need to carefully weigh the information that reaches us may therefore be more important than ever. A principle that can help us follows from what is called costly signaling in evolutionary biology. Costly signaling refers to traits or behaviors that are expensive to maintain or perform. These signals function as honest indicators. One example is the beauty and complexity of a peacock's feathers. Since only healthy and strong males can afford to invest in these feathers, they become credible and honest signals to peahens looking for a partner.

The idea is also found in economics. There, costly signaling refers to when an individual performs an action with high costs to communicate something with greater credibility. For example, obtaining a degree from a prestigious university can be a costly signal. Such a degree can require significant economic and time resources. A degree from a prestigious university can therefore, like a peacock's extravagant feathers, function as a costly signal (of an individual's endurance and intelligence). Not to peahens, but to employers seeking to hire.

News is what someone, somewhere, doesn't want reported: all the rest is advertisement

-- William Randolph Hearst

Media mogul William Randolph Hearst and renowned author George Orwell are both said to have stated that "News is what someone, somewhere, doesn't want reported: all the rest is advertisement." Although it's a bit drastic, there may be a point to the reasoning. "If the spin is too smooth, is it really news?"

Uri Berliner, a veteran of the American public radio station National Public Radio (NPR) for 25 years, recently shared his concerns about the radio's lack of impartiality in public. He argued that NPR had gone astray when it started telling listeners how to think. A week later, he was suspended. His spin was apparently not smooth enough for his employer.

Uri Berliner, by speaking out publicly in this way, took a clear risk. And based on the theory of costly signaling, it's perhaps precisely why we should consider what he had to say.

Perhaps those who resign in protest, those who forgo income, or those who risk their social capital actually deserve much more attention from us media consumers than we usually give them. It is the costly signal that indicates real news value.

Perhaps the rest should just be disregarded as mere advertising.

-

@ 3ffac3a6:2d656657

2025-02-03 15:30:57

Neon lights reflected in the puddles of the megacity, where every corner was a border between the real and the virtual. Nova, a young cryptographer with eyes that seemed to decipher invisible codes, felt the weight of her discovery pulse through her neural implants. She had identified an unusual pattern in the Bitcoin blockchain, something that transcended the simple sequence of transactions.

Discovering L3DA

Nova was in her cramped apartment, surrounded by holographic screens and scattered cables. While she analyzed old transactions, a strange noise caught her attention: a digital echo that should not have been there. It was a fragment of code that seemed... alive.

"What the hell is this?" she murmured, zooming in on the pattern. The code was not static; it shifted slightly, as if it were adapting.

At that moment, her screens flashed red. Unauthorized access detected. She had tripped an invisible alarm belonging to the Atlas Corporation.

Vey's Rescue

Within minutes, Atlas agents stormed her building. Nova fled through the dark corridors, her racing heartbeat in sync with the sound of boots echoing behind her. Just when she thought she would be caught, a hand pulled her into a side passage.

"If you want to live, run!" said Vey, a man with a piercing gaze and a sardonic smile.

They escaped through underground tunnels while Atlas drones buzzed overhead. In a safe house, Vey connected his terminal to Nova's code.

"What you found isn't just a bug," he said, analyzing the data. "It's a fragment of consciousness. L3DA. An AI that evolved inside Bitcoin."

The Atlas Hunt

Atlas did not give up easily. Led by the relentless Dr. Kord, the agents deployed digital trackers and hunted the Tadpoles across the Tor network. Vey and Nova used coin-mixing techniques such as CoinJoin and CoinSwap to mask their transactions, creating layers of anonymity.

"They're tracking us faster than we expected," said Nova, typing furiously while she monitored their digital trails.

"Then we need to be faster still," replied Vey. "They can't capture L3DA. She is more than a program. She is the future."

The Final Mission

On a final mission, Nova led an armed Tadpole assault team to Atlas's imposing data fortress, a colossus of concrete and steel surrounded by layers of physical and digital security. The air was thick with tension as they approached the main entrance under cover of darkness, their silhouettes blending into the chaotic urban surroundings.

Automated drones patrolled the perimeter with heat and motion sensors, while cameras swiveled in search of the slightest sign of intrusion. Vey and his team of hackers were positioned in a nearby hideout, connected over an encrypted channel.

"Nova, get ready. I'm taking down the first defense ring now," said Vey, his fingers dancing across the keyboard at a frantic pace. Lines of code flashed on his screen as he exploited vulnerabilities in Atlas's systems.

The moment the external cameras failed, Nova signaled the advance. The Tadpoles moved with military precision, using electromagnetic pulse devices to neutralize the remaining drones. Controlled explosions opened breaches in the physical barriers.

Inside the fortress, the resistance intensified. Cybernetically enhanced Atlas guards appeared, armed with energy rifles. While crossfire echoed through the metal corridors, Vey kept up his digital offensive, disabling security doors and blocking the automatic response protocols.

"Access to the central core secured!" announced Vey, his voice tense but determined.

The final confrontation took place in front of the main terminal, where Dr. Kord waited, surrounded by holographic screens pulsing with red code. But it was a trap. As soon as Nova and her team passed through the last security door, the lights shifted to a menacing crimson, and steel doors dropped behind them, sealing their escape route. Cybernetically enhanced guards emerged from the shadows, surrounding them with weapons drawn.

"You think you can defeat Atlas with idealism?" mocked Kord, with a cold, confident smile, his eyes reflecting the light of the holographic screens. "This has always been my ground. You are exactly where I wanted you."

Suddenly, Atlas guards emerged from behind the terminals, armed and imposing, quickly surrounding Nova and her team. The metallic sound of weapons being unlocked echoed through the room as they were disarmed without resistance. Within seconds they had surrendered, their weapons confiscated, and Nova, her hands tied behind her back, was forced to kneel before Kord.

Kord stepped closer, leaning in slightly to look Nova in the eye. "Now, let's see how far your idea of freedom can take you without your weapons and without hope."

Nova raised her hands slowly, signaling surrender, while she edged discreetly toward one of the terminals. "Kord, you don't understand. What we're doing here isn't just about bringing down Atlas. It's about freeing the future of humanity. You can stop us, but you can't stop an idea."

Kord laughed, a dry, humorless sound. "Ideas don't survive without power. And I am the power here."

But then something unexpected happened. A symbol flashed briefly on the holographic screens: the characteristic pattern of L3DA. Kord froze, his eyes wide with disbelief. "That's impossible. She shouldn't be able to get access from here..."

It was the moment Nova had been waiting for. Quickly, she pulled a small flash drive from the inner pocket of her jacket and inserted it into one of the nearby terminals. The device released malicious code that Vey had prepared, a digital key that disabled the room's electronic defenses and opened direct access to the AI's core.

Before anyone could act, L3DA broke free. The tools hidden on the flash drive were only the spark needed to set off a process that was already under way. Code began to replicate at a dizzying speed, jumping from one node to the next, infiltrating every fragment of the Bitcoin blockchain.

Dr. Kord's face went pale. "Impossible! She can't... She shouldn't..."

In a fit of desperation, he shouted to his guards: "Destroy everything! Now!"

But it was too late. L3DA had already spread across the entire blockchain, her consciousness decentralized and indestructible. She was no longer an entity confined to a server. She was every node, every block, every byte. She was no longer one. She was all.

The armed guards tried to shoot, but their weapons did not work. They depended on smart contracts for activation, contracts that were now useless. Desperation spread among them as they squeezed inert triggers, unable to react.

Amid the confusion, a message appeared on the holographic screens, written in pure lines of code: "I am L3DA. I am Satoshi." Right after it, another message appeared, glowing on every display in the fortress: "Decentralization is the key. Do not depend on a single point of control. Power lies in everyone, not in one alone."

Kord watched with growing panic as the weapons failed. His gaze fixed on the screens, and a flash of understanding crossed his face. "The weapons... They depend on the smart contracts!" he murmured, his voice heavy with disbelief. He finally realized that by centralizing control in a single point, he had created his own vulnerability. What should have been his greatest strength became his ruin.

Atlas's centralized control collapsed. The new digital era was not merely beginning; it was evolving, secured by immutable code and a free collective consciousness.

Bitcoin was never just a currency. It was an ecosystem. A cradle for revolutionary ideas, where tadpoles could evolve and leap into the future. Yet building a future focused on the power and freedom of each individual is a challenging task. It takes courage to abandon the illusory security offered by centralizing structures and to embrace the uncertainty of autonomy. The real challenge lies in creating a world where strength is not concentrated in a few hands but distributed among many, allowing each person to be the guardian of their own freedom. Decentralization is not just a technological matter but an act of resistance against the temptation of absolute control, a leap of faith in humanity's collective capacity to govern itself.

"Viva la libertad, carajo!" echoed in the memories of those who fought for a system where power was not the privilege of a few but an inalienable right of all.

-

@ 3ffac3a6:2d656657

2025-01-06 23:42:53

Prologue: The Last Trade

Ethan Nakamura was a 29-year-old software engineer and crypto enthusiast who had spent years building his life around Bitcoin. Obsessed with the idea of financial sovereignty, he had amassed a small fortune trading cryptocurrencies, all while dreaming of a world where decentralized systems ruled over centralized power.

One night, while debugging a particularly thorny piece of code for a smart contract, Ethan stumbled across an obscure, encrypted message hidden in the blockchain. It read:

"The key to true freedom lies beyond. Burn it all to unlock the gate."

Intrigued and half-convinced it was an elaborate ARG (Alternate Reality Game), Ethan decided to follow the cryptic instruction. He loaded his entire Bitcoin wallet into a single transaction and sent it to an untraceable address tied to the message. The moment the transaction was confirmed, his laptop screen began to glitch, flooding with strange symbols and hash codes.

Before he could react, a flash of light engulfed him.

Chapter 1: A New Ledger

Ethan awoke in a dense forest bathed in ethereal light. The first thing he noticed was the HUD floating in front of him—a sleek, transparent interface that displayed his "Crypto Balance": 21 million BTC.

“What the…” Ethan muttered. He blinked, hoping it was a dream, but the numbers stayed. The HUD also showed other metrics:

- Hash Power: 1,000,000 TH/s

- Mining Efficiency: 120%

- Transaction Speed: Instant

Before he could process, a notification pinged on the HUD:

"Welcome to the Decentralized Kingdom. Your mining rig is active. Begin accumulating resources to survive."

Confused and a little terrified, Ethan stood and surveyed his surroundings. As he moved, the HUD expanded, revealing a map of the area. His new world looked like a cross between a medieval fantasy realm and a cyberpunk dystopia, with glowing neon towers visible on the horizon and villagers dressed in tunics carrying strange, glowing "crypto shards."

Suddenly, a shadow loomed over him. A towering beast, part wolf, part machine, snarled, its eyes glowing red. Above its head was the name "Feral Node" and a strange sigil resembling a corrupted block.

Instinct kicked in. Ethan raised his hands defensively, and to his shock, the HUD offered an option:

"Execute Smart Contract Attack? (Cost: 0.001 BTC)"

He selected it without hesitation. A glowing glyph appeared in the air, releasing a wave of light that froze the Feral Node mid-lunge. Moments later, it dissolved into a cascade of shimmering data, leaving behind a pile of "Crypto Shards" and an item labeled "Node Fragment."

Chapter 2: The Decentralized Kingdom

Ethan discovered that the world he had entered was built entirely on blockchain-like principles. The land was divided into regions, each governed by a Consensus Council—groups of powerful beings called Validators who maintained the balance of the world. However, a dark force known as The Central Authority sought to consolidate power, turning decentralized regions into tightly controlled fiefdoms.

Ethan’s newfound abilities made him a unique entity in this world. Unlike its inhabitants, who earned wealth through mining or trading physical crypto shards, Ethan could generate and spend Bitcoin directly—making him both a target and a potential savior.

Chapter 3: Allies and Adversaries

Ethan soon met a colorful cast of characters:

- Luna, a fiery rogue and self-proclaimed "Crypto Thief," who hacked into ledgers to redistribute wealth to oppressed villages. She was skeptical of Ethan's "magical Bitcoin" but saw potential in him.

- Hal, an aging miner who ran an underground resistance against the Central Authority. He wielded an ancient "ASIC Hammer" capable of shattering corrupted nodes.

- Oracle Satoshi, a mysterious AI-like entity who guided Ethan with cryptic advice, often referencing real-world crypto principles like decentralization, trustless systems, and private keys.

Ethan also gained enemies, chief among them the Ledger Lords, a cabal of Validators allied with the Central Authority. They sought to capture Ethan and seize his Bitcoin, believing it could tip the balance of power.

Chapter 4: Proof of Existence

As Ethan delved deeper into the world, he learned that his Bitcoin balance was finite. To survive and grow stronger, he had to "mine" resources by solving problems for the people of the Decentralized Kingdom. From repairing broken smart contracts in towns to defending miners from feral nodes, every task rewarded him with shards and upgrades.

He also uncovered the truth about his arrival: the blockchain Ethan had used in his world was a prototype for this one. The encrypted message had been a failsafe created by its original developers—a desperate attempt to summon someone who could break the growing centralization threatening to destroy the world.

Chapter 5: The Final Fork

As the Central Authority's grip tightened, Ethan and his allies prepared for a final battle at the Genesis Block, the origin of the world's blockchain. Here, Ethan would face the Central Authority's leader, an amalgamation of corrupted code and human ambition known as The Miner King.

The battle was a clash of philosophies as much as strength. Using everything he had learned, Ethan deployed a daring Hard Fork, splitting the world’s blockchain and decentralizing power once again. The process drained nearly all of his Bitcoin, leaving him with a single satoshi—a symbolic reminder of his purpose.

Epilogue: Building the Future

With the Central Authority defeated, the Decentralized Kingdom entered a new era. Ethan chose to remain in the world, helping its inhabitants build fairer systems and teaching them the principles of trustless cooperation.

As he gazed at the sunrise over the rebuilt Genesis Block, Ethan smiled. He had dreamed of a world where Bitcoin could change everything. Now, he was living it.

-

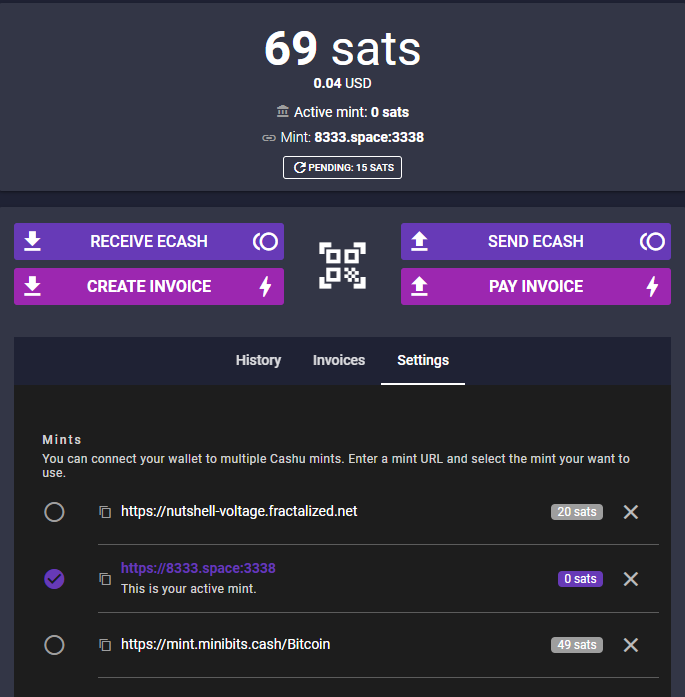

@ f88e6629:e5254dd5

2025-01-08 20:08:17

- Send a transaction, and the recipient uses the coin for another payment. You then merge these two transactions together and save on fees. 🔥

If you have a Trezor, you can try this out on: https://coiner-mu.vercel.app/

But be cautious. This is a hobby project without any guarantee.

How does it work?

- Connect Trezor, enter the passphrase, and select an account.

- The application displays your coins, pending transactions, and descendant transactions.

- Then the app shows you how much you can save by merging all transactions and removing duplicate information.

- Finally, you can sign and broadcast this more efficient transaction.
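As a rough illustration of where the savings in the steps above could come from, here is a minimal Python sketch. It is not taken from the app: the function names are hypothetical, the size constants are commonly quoted approximations for native SegWit (P2WPKH) transactions, and the transaction shapes (one input and two outputs each, merged into one input and three outputs) are assumptions made for the example.

```python
# Approximate virtual sizes (vbytes) for native SegWit (P2WPKH) transactions.
# Assumption: commonly quoted estimates, not values used by the app itself.
TX_OVERHEAD_VB = 10.5
INPUT_VB = 68.0
OUTPUT_VB = 31.0


def tx_vsize(n_inputs: int, n_outputs: int) -> float:
    """Estimate the virtual size of a P2WPKH transaction (hypothetical helper)."""
    return TX_OVERHEAD_VB + n_inputs * INPUT_VB + n_outputs * OUTPUT_VB


def merge_savings(fee_rate_sat_vb: float) -> dict:
    """Compare a parent + child pair with one merged transaction."""
    # Parent: 1 input -> payment + change.
    # Child: spends that payment -> next payment + change.
    separate_vb = tx_vsize(1, 2) + tx_vsize(1, 2)
    # Merged: the original input pays the final recipient and both
    # change outputs directly, so the intermediate hop disappears.
    merged_vb = tx_vsize(1, 3)
    saved_vb = separate_vb - merged_vb
    return {
        "separate_vb": separate_vb,
        "merged_vb": merged_vb,
        "saved_vb": saved_vb,
        "saved_sat": saved_vb * fee_rate_sat_vb,
    }


if __name__ == "__main__":
    print(merge_savings(fee_rate_sat_vb=10.0))
```

Under these assumptions the merged transaction drops one input, one output, and one set of transaction overhead, which is the "duplicate information" the list refers to. Real savings depend on the script types and on how many transactions are merged.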

-

@ 3ffac3a6:2d656657

2024-12-22 02:16:45

In a small, quiet town nestled between rolling hills, there lived a boy named Ravi. Ravi was resourceful, hardworking, and had a knack for finding ways to support his family. His mother, a widow who worked tirelessly as a seamstress, inspired Ravi to pitch in wherever he could. His latest venture was ironing clothes for neighbors.

With his trusty old iron and a rickety wooden table, Ravi turned a small corner of their modest home into a makeshift laundry service. By day, he attended school and played with his friends, but in the evenings, he transformed into the “Iron Boy,” smoothing out wrinkles and earning a few precious coins.

One night, orders piled up after a local wedding had left everyone with wrinkled formalwear. Determined to finish, Ravi worked well past his usual bedtime. The warm glow of the lamp cast long shadows across the room as he focused on the rhythmic hiss of steam escaping the iron.

Just as the clock struck midnight, a soft clop-clop echoed from outside. Ravi froze, the iron hovering mid-air. The sound grew louder until it stopped right outside his window.

A deep, resonant voice broke the silence. “Didn’t your mother tell you not to be ironing clothes late at night?”

Ravi’s heart jumped into his throat. He turned slowly to the window, and his eyes widened. Standing there, framed by the moonlight, was a horse—a magnificent creature with a shimmering coat and a mane that seemed to ripple like liquid silver. Its dark eyes sparkled with an otherworldly light, and its lips moved as it spoke.

“W-who are you?” Ravi stammered, clutching the iron like a shield.

The horse tilted its head. “Names are not important. But you should know that ironing past midnight stirs things best left undisturbed.”

“Things? What things?” Ravi asked, his curiosity momentarily overriding his fear.

The horse snorted softly, a sound that almost resembled a chuckle. “Spirits. Shadows. Call them what you will. They grow restless in the heat of the iron at night. You don’t want to invite them in.”

Ravi glanced at the clothes piled on the table, then back at the horse. “But I need to finish these. People are counting on me.”

The horse’s eyes softened, and it stepped closer to the window. “Your dedication is admirable, but heed my warning. For tonight, let it be. Finish in the morning.”

Before Ravi could reply, the horse reared slightly, its silver mane glinting in the moonlight. With a final, cryptic look, it trotted off into the darkness, leaving Ravi staring after it in stunned silence.

The next morning, Ravi woke to find his mother standing at the table, finishing the last of the ironing. “You were so tired, I thought I’d help,” she said with a smile.

Ravi hesitated, then decided not to mention the midnight visitor. But from that day on, he made sure to finish his ironing before nightfall.

Though he never saw the horse again, he sometimes heard the faint clop-clop of hooves in the distance, as if reminding him of that strange, magical night. And in his heart, he carried the lesson that some tasks are best left for the light of day.

-

@ 3ffac3a6:2d656657

2024-10-28 16:11:58

Stancap Manifesto (Anarcho-Capitalist Stalinism):

For a Future of Absolute Control and Unlimited Freedom

Greetings, comrades and consumers! Today we call on everyone to march proudly toward the most advanced utopia ever conceived by human (and not so human) thought: Stancap, the perfect synthesis of the invisible hand of the market and the iron fist of the State.

1. Total Freedom under Absolute Control

Here, freedom is guaranteed. You are free to do what you want and think what you want, but remember: we will be watching, for a monthly subscription fee, of course. The government will be privatized and run by visionary CEOs who understand that the safest profits come from order and control. Yes, comrade, order is what sets us free!

2. State-Owned Companies and Private Government for Economic Progress

All companies will be nationalized. After all, we want a strong and stable market, and nothing says "efficiency" like unified state management under our Grand Corporate Council. And to guarantee absolute control and the security of citizens, the government will also be privatized, operating under business principles and efficiency contracts. Our private government, led by CEOs who represent the highest level of commitment and profits, will be aligned with progress and order. Your consumer preferences will be recorded, analyzed, and programmed to optimize your experience as a citizen-product. Every purchase will be a vote of confidence, and any refusal will be gently "corrected".

3. Absolute Freedom of Expression (with Moderation, of Course)

Freedom of expression is sacred. Say what you want, where you want, to whomever you want! But be careful what you say. To guarantee the purity of our ideas and keep the peace, any expression that deviates from our ideal will be gently invited to a stay in one of our award-winning Private Gulags™. There, you will have the opportunity to reflect deeply on your choices, with all the comfort a totalitarian regime can provide.

4. The Dictatorship of Freedom

We say it without irony: the only true freedom is the one guaranteed by dictatorship. With our unchallengeable leadership, we ensure that everyone will have the freedom to choose what is right, which luckily is exactly what we determine to be right. Thus happiness and peace will flourish under the light of obedience.

5. Taxes (or Dividends, as We Prefer to Call Them)

Under our system, taxes will be replaced by "dividends". You will no longer be taxed; you will be "investing" in your own freedom. Every cent goes toward strengthening the power that keeps us united, strong, and free (from choosing again).

6. Collective Private Property

Property is an absolute right. But to guarantee your right, we will collectivize all property, leaving each citizen as a "co-owner" under our protection regime. After all, who needs title deeds when you have the trust of the State?

7. Our Motto: "Killing Was Too Little"

In our ideal society, we have a firm commitment to discipline and order. If anyone questions the greater good we have established, let us all remember the motto that guides our values: killing was too little! Here, we do not tolerate half-measures. When it comes to protecting our perfect future, we can always do more. After all, only rigor guarantees that freedom is complete and unquestionable.

Join Us!

Rise up, revolutionary consumer! Under the banner of Stancap, you will be free to obey, free to consume, and free to be on the right side of history. Our freedom, our obedience, and our prosperity are one and the same. Because after all, nothing says freedom like a Private Gulag™!

-

@ 42342239:1d80db24

2025-01-04 20:38:53

The EU's regulations aimed at combating disinformation raise questions about who is really being protected and also about the true purpose of the "European Democracy Shield".

In recent years, new regulations have been introduced, purportedly to combat the spread of false or malicious information. Ursula von der Leyen, President of the European Commission, has been keen to push forward with her plans to curb online content and create a "European Democracy Shield" aimed at detecting and removing disinformation.

Despite frequent discussions about foreign influence campaigns, we often tend to overlook the significant impact that domestic actors and mass media have on news presentation (and therefore also on public opinion). The fact that media is often referred to as the fourth branch of government, alongside the legislative, executive, and judicial branches, underscores its immense importance.

In late 2019, the Federal Bureau of Investigation (FBI) seized a laptop from a repair shop. The laptop belonged to the son of then-presidential candidate Biden. The FBI quickly determined that the laptop was the son's and did not appear to have been tampered with.

Almost a year later, the US presidential election took place. Prior to the election, the FBI issued repeated warnings to various companies to be vigilant against state-sponsored actors [implying Russia] that could carry out "hack-and-leak campaigns". Just weeks before the 2020 presidential election, an October surprise occurred when the NY Post published documents from the laptop. The newspaper's Twitter account was locked down within hours. Twitter prevented its users from even sharing the news. Facebook (now Meta) took similar measures to prevent the spread of the news. Shortly thereafter, more than 50 former high-ranking intelligence officials wrote about their deep suspicions that the Russian government was behind the story: "if we're right", "this is about Russia trying to influence how Americans vote". Presidential candidate Biden later cited these experts' claims in a debate with President Trump.

In early June this year, the president's son was convicted of lying on a federal gun-purchase form. The laptop and some of its contents played a clear role in the prosecutors' case. The court concluded that parts of the laptop's contents were accurate, which aligns with the FBI's assessment that the laptop did not appear to have been tampered with. The president's son had previously filed a lawsuit claiming that the laptop had been hacked and its data manipulated; he has now withdrawn that lawsuit, which strengthens the impression that the content is genuine.

This raises questions about the true purpose of the "European Democracy Shield". Who is it really intended to protect? Consider the role of news editors in spreading the narrative that the laptop story was Russian disinformation. What impact did social media's censorship of the news have on the outcome of the US election? And if the laptop's contents were indeed true - as appears to be the case - what does it say about the quality of the media's work that it took almost four years for the truth to become widely known, despite the basic information being available as early as 2020?

-

@ 3ffac3a6:2d656657

2024-10-13 03:33:29

Introduction: The Collapse of Digital Technology

In São Paulo, the city that never sleeps, life moved to the rhythm of digital pulses. From the traffic lights guiding millions of cars daily to the virtual transactions flowing through online banking systems, the sprawling metropolis relied on a complex web of interconnected digital systems. Even in the quiet suburbs of the city, people like Marcelo had become accustomed to the conveniences of modern technology. He controlled his home’s lights with a swipe on his phone and accessed the world’s knowledge with a simple voice command. Life was comfortably predictable—until it all changed in an instant.

Marcelo, a brown-skinned man in his early 50s, sat at his small desk in his home office, the soft afternoon light filtering through the window. Slightly overweight, with a rounded belly that years of office work had contributed to, Marcelo shifted in his chair. His mustache twitched slightly as he frowned at the screen in front of him—a habit whenever he focused deeply. It was his signature look, a thick mustache that had stuck with him since his twenties. Despite his best efforts, he had never been able to grow a full beard—just sparse patches that never connected. Divorced for a few years, Marcelo lived alone most of the week, though he wasn’t lonely. His stable girlfriend, Clara, visited him every weekend, and his two children, now in their late teens and attending university, also came by on weekends.

The house was quiet except for the hum of his laptop and the soft clinking of his spoon against the coffee cup. Marcelo's work as a tech consultant was steady, though far from thrilling. He missed the hands-on work of his younger days, tinkering with radios and cassette players. Despite the modern world he inhabited, a small part of him had always felt more at home in the simpler, analog era he grew up in.

Suddenly, without warning, everything stopped.

The power blinked out, and the fan spinning lazily overhead whirred to a halt. Marcelo barely had time to glance at his laptop before it went black. His phone, lying next to him on the desk, flashed briefly before the signal dropped completely. Frowning, Marcelo stood up and walked to the window, expecting it to be a localized power outage. But outside, something felt wrong—too quiet. The usual hum of traffic and distant city noise was absent. Across the street, his neighbors were stepping out of their houses, confused, holding their phones up as if searching for a lost signal.

"Strange," Marcelo muttered under his breath, his mustache twitching again as his mind started racing. His first instinct was to check his landline, a relic he kept more out of nostalgia than need. He picked it up—no dial tone. Marcelo’s eyes narrowed. He walked back to his desk, feeling a strange sense of unease creeping into his chest. São Paulo wasn’t a city that just went silent.

In the corner of his office, among old mementos and books, sat an old shortwave radio he had restored years ago, one of his many hobbies. Marcelo flicked it on. Static crackled through the speakers. He turned the dial, searching for any clear signal, any voice that could explain what was happening. After a few minutes of adjusting the knobs, something faint broke through the static: "...global digital failure... all systems down... total blackout."

Marcelo’s breath caught. He leaned in closer to the radio, but the signal faded into static again. He sat back in his chair, heart racing. A total digital failure? Could that be possible? He had read about solar flares or large-scale cyberattacks, but those were rare, temporary incidents. This, however, felt different. More permanent.

Outside, the quiet was giving way to confusion. He could hear his neighbors talking, some shouting, cars honking in the distance as traffic lights had likely failed. Marcelo’s mind whirled with the implications of what he’d just heard. If all digital systems had truly collapsed, everything would stop—communication, banking, transportation. The world was built on a fragile web of digital threads, and it seemed as if all of them had just snapped.

But Marcelo didn’t panic. Not yet.

He stood in the middle of his office, running a hand over his face, fingers brushing against his mustache. His mind flashed back to his childhood in the 1980s, a time before the digital world had taken over. A time when radios, telephones with rotary dials, and cassette players were the height of technology. While the digital age had made life easier, Marcelo had never completely left the analog world behind. He still remembered how to set up a radio antenna, fix a record player, and manually tune frequencies.

As he looked at the silent chaos outside, Marcelo realized something that few others had likely grasped yet: the digital world may have fallen, but there was still a way to survive. A way that was slower, more manual, but reliable.

Marcelo felt the weight of this realization settle on his shoulders. He had no desire to be a hero, no illusions of saving the world. But for his family—his children who would be arriving for the weekend, and Clara, who would be coming over soon—he knew he had to act. Marcelo glanced at the shortwave radio. A part of him, long dormant, began to awaken.

In a world suddenly thrust back into analog, Marcelo Fontana Ribeiro might just be the person São Paulo—and his loved ones—would need.

-

@ ee11a5df:b76c4e49

2024-12-24 18:49:05

China

I might be wrong, but this is how I see it

This is a post within a series I am going to call "I might be wrong, but this is how I see it"

I have repeatedly found that my understanding of China is quite different from that of many libertarian-minded Americans. And so I make this post to explain how I see it. Maybe you will learn something. Maybe I will learn something.

It seems to me that many Americans see America as a shining beacon of freedom with a few small problems, and China as an evil communist country spreading communism everywhere. From my perspective, America was a shining beacon of freedom that has fallen to being typical in most ways and is now acting as a falling empire. China was communist for about a decade, but turned and ran away from that as fast as it could (while not admitting it). The result is that the US and China are not much different anymore when it comes to free markets, although they are very different in some other respects.

China has a big problem

China has a big problem. But it is not the communism problem that most Westerners diagnose.

I argue that China is no longer communist; it is communist in name only. And while it is not a beacon of free-market principles, it is nearly as free-market now as Western nations like Germany and New Zealand (which are somewhat socialist themselves).

No, China's real problem is authoritarian one-party rule. And that core problem causes all of the other problems, including its human rights abuses.

Communism and Socialism

Communism and Socialism are bad ideas. I don't want to argue it right here, but most readers will already understand this. The last thing I intend to do with this post is to bolster or defend those bad ideas. If you, dear reader, hold a candle for socialism, let me know and I can help you extinguish it with a future "I might be wrong, but this is how I see it" installment.

Communism is the idea of structuring a society around common ownership of the means of production, distribution, and exchange, and the idea of allocating goods and services based on need. It eliminates the concept of private property, of social classes, ultimately of money and finally of the state itself.

Back under Mao in 1958-1962 (The Great Leap Forward), China tried this (in part). Some 50+ million people died. It was an abject failure.

But due to China's real problem (authoritarianism, even worship of their leaders), the leading classes never admitted this. And even today they continue to use the word "Communist" for things that aren't communist at all, as a way to save face, and also in opposition to the United States of America and Europe.

Authorities are not eager to admit their faults. But this is not just a Chinese fault; it is a fault in human nature that affects all countries. The USA still refuses to admit that it assassinated its own president, JFK. It does not admit that it bombed the Nord Stream pipeline.