-

@ 5f078e90:b2bacaa3

2025-04-28 19:44:00

This is a test written in yakihonne.com as a long form article. It is a kind 30023. It should be cross-posted to Hive.

-

@ df7e70ac:89601b8e

2025-04-28 13:15:45

This is a text for filter gparena.net

-

@ de6c63ab:d028389b

2025-04-28 12:20:45

Honestly, I didn’t think this would still be a thing in 2025, but every once in a while it pops up again:

“Bitcoin? Uh, I don’t know… but blockchain, now that could be useful! 🤌”

“Blockchain is one of the most important technologies of our time. Maybe you know it from crypto, but it’s so much more. It’s a way to store and verify data securely, transparently, and without a middleman. That’s why it’s going to revolutionize banking, healthcare, logistics, and even government!”

“Blockchain is transforming how we store, share, and verify information. Its benefits go far beyond cryptocurrencies. Understanding it today means preparing for tomorrow, because blockchain is guaranteed to play a major role in the future.”

Blockchain

When people say "blockchain," they usually mean the bitcoin database — with all its unique properties — even when they’re imagining using it elsewhere.

But here’s the thing: blockchain by itself isn’t some revolutionary breakthrough.

Stripped from bitcoin, it’s just a fancy list of records, each pointing to the previous one with a reference (typically a hash).

That's it.

This idea of chaining data together isn’t new.

It goes back to at least 1991, when Haber and Stornetta proposed it for timestamping documents.

By itself, blockchain isn’t secure (you can always rewrite past records if you recompute the chain), isn’t necessarily transparent (the data can be encrypted or hidden), and doesn't magically remove the need for trust (if someone logs soccer scores into a blockchain, you still have to trust they reported the results honestly).
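The "fancy list of records" described above can be sketched in a few lines. This is a minimal illustration, not bitcoin's actual block format: the function names, the JSON payload layout, and the all-zero placeholder for the first block are assumptions made for the example. It shows both halves of the claim: an in-place edit is detectable, yet whoever controls the list can recompute the whole chain.

```python
import hashlib
import json

GENESIS_PREV = "0" * 64  # placeholder back-reference for the first block (assumption)


def block_hash(record: str, prev_hash: str) -> str:
    """Hash a record together with the hash of the block before it."""
    payload = json.dumps({"record": record, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(records):
    """Link records into a chain, each block pointing at the previous hash."""
    chain, prev = [], GENESIS_PREV
    for record in records:
        h = block_hash(record, prev)
        chain.append({"record": record, "prev": prev, "hash": h})
        prev = h
    return chain


def is_consistent(chain) -> bool:
    """Check that every block's hash and back-reference line up."""
    prev = GENESIS_PREV
    for block in chain:
        if block["prev"] != prev or block["hash"] != block_hash(block["record"], prev):
            return False
        prev = block["hash"]
    return True


honest = build_chain(["A beat B 2-1", "C drew with D 0-0", "E beat F 3-0"])

# Editing one record in place breaks the links, so this tampering is detectable.
tampered = [dict(block) for block in honest]
tampered[1]["record"] = "C beat D 5-0"

# But whoever controls the list can simply rebuild everything from the edit onward.
rewritten = build_chain(["A beat B 2-1", "C beat D 5-0", "E beat F 3-0"])
```

Here `is_consistent(tampered)` fails while `is_consistent(rewritten)` passes: the structure alone cannot tell an honest history from a recomputed one.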

What actually makes bitcoin’s blockchain secure and trustworthy is the system around it — the economic incentives, the ruthless competition for block rights, and the distributed consensus mechanics.

Without those, blockchain is just another database.

How Does Bitcoin Make It Work?

To understand why, we need to zoom in a little.

Superficially, bitcoin’s blockchain looks like a simple ledger — a record of transactions grouped into blocks. A transaction means someone spent bitcoin — unlocking it and locking it up again for someone else.

But here’s the key:

Every participant can independently verify whether each transaction is valid, with no outside help and no trust required.

Think of every transaction like a math equation.

Something like: x + 7 = 5, with the solution x = -2.

You don’t need anyone to tell you if it’s correct: you can check it yourself.

Of course, bitcoin’s equations are far more complex.

They involve massive numbers and strange algebraic structures, where solving without the right key is practically impossible, but verifying a solution is easy.

This is why only someone with the private key can authorize a transaction.

In a way, "solving" these equations is how you prove your right to spend bitcoin.

Ownership and transfers are purely a matter of internal system math — no external authority needed.
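The asymmetry between solving and checking can be illustrated with a toy one-way function. This is only a sketch: it uses modular exponentiation with small made-up parameters (`P`, `G`, and the secret are assumptions chosen for the example), whereas bitcoin relies on elliptic-curve digital signatures, where the spender proves knowledge of the key without revealing it.

```python
from typing import Optional

# Toy parameters for illustration only; these are not bitcoin's.
P = 2**61 - 1  # a prime modulus
G = 3          # base


def public_from_secret(secret: int) -> int:
    """Easy direction: a single modular exponentiation."""
    return pow(G, secret, P)


def verify(public: int, claimed_secret: int) -> bool:
    """Anyone can check a claimed solution with one computation."""
    return pow(G, claimed_secret, P) == public


def brute_force(public: int, limit: int) -> Optional[int]:
    """Hard direction: without the secret, try exponents one by one."""
    value = 1  # G ** 0
    for candidate in range(limit):
        if value == public:
            return candidate
        value = (value * G) % P
    return None


secret = 54_321
public = public_from_secret(secret)
```

Checking `verify(public, secret)` takes one step, while `brute_force(public, 100_000)` has to walk through tens of thousands of candidates even for this tiny secret. With real key sizes the search space is so large that guessing is not a practical option.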

Could We Use Blockchain for Other Stuff?

Could we use a blockchain to independently verify medical records, soccer scores, or property ownership?

No.

Blockchain can't magically calculate whether you broke your arm, whether Real Madrid tied against Barcelona, or who owns a cottage in some village.

It can verify that someone owns bitcoin at a particular address, because that's just solving equations inside the system.

But anything that depends on outside facts?

Blockchain can't help you there.

Why Does Everyone Stick to One Version?

Another big question:

Why do people in bitcoin agree on the same version of history?

Because of proof-of-work.

To add a new block, you have to find a number, the nonce, that, together with the block’s contents, produces a hash satisfying a predefined condition.

You can't calculate the nonce directly. You have to guess, billions of times per second, until you hit the jackpot.

Across the whole network, that takes about ten minutes of relentless effort on average.

An invalid transaction would invalidate the entire block, wasting all the miner’s effort.

If the block is valid, the miner earns a reward — newly minted bitcoins plus transaction fees — making the massive effort worthwhile.

And importantly, because each block is built on top of all previous ones, rewriting history would mean redoing all the proof-of-work from that point forward — an astronomically expensive and practically impossible task.

The deeper a block is buried under newer blocks, the more secure it becomes, making the past effectively immutable.

And again: each node independently verifies all transactions.

Miners don't create truth; they race to package and timestamp already-valid transactions.

The winning chain is simply the one with the most provable work behind it.
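The nonce search described in this section can be sketched as a simple loop. This is a simplified illustration under stated assumptions: it hashes a text string once with SHA-256 and uses a made-up `difficulty_bits` parameter, whereas bitcoin hashes an 80-byte block header twice and adjusts its target so that blocks arrive roughly every ten minutes.

```python
import hashlib

CONTENTS = "prev=abc123;txs=alice->bob:1"  # hypothetical block contents


def digest_for(block_contents: str, nonce: int) -> str:
    """Hash the block contents together with a candidate nonce."""
    return hashlib.sha256(f"{block_contents}|{nonce}".encode("utf-8")).hexdigest()


def mine(block_contents: str, difficulty_bits: int):
    """Guess nonces until the hash, read as a number, falls below the target."""
    target = 1 << (256 - difficulty_bits)
    nonce = 0
    while True:
        digest = digest_for(block_contents, nonce)
        if int(digest, 16) < target:
            return nonce, digest
        nonce += 1


def check(block_contents: str, nonce: int, difficulty_bits: int) -> bool:
    """Verification costs one hash, however long the search took."""
    return int(digest_for(block_contents, nonce), 16) < (1 << (256 - difficulty_bits))


# 16 bits of difficulty means about 65,000 guesses on average.
nonce, digest = mine(CONTENTS, difficulty_bits=16)
```

Changing anything in `CONTENTS` changes every hash, so the search has to start over. That is why rewriting an old block means redoing the work for it and for every block built on top of it.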

Bitcoin and Blockchain: Inseparable

Bitcoin is created on the blockchain — and it exists only within the blockchain.

Ownership is defined by it.

The decentralized management of the blockchain is driven by bitcoin incentives: the pursuit of something scarce, hard-earned, and impossible to fake.

No blockchain, no bitcoin.

No bitcoin, no meaningful blockchain.

Can We Just Blockchain Everything?

Alright, so what happens if we try to apply this system to something else — say, a land registry?

Properties themselves don’t "exist" on a blockchain — only claims about them can be recorded.

But who writes the claims? Random miners?

Where do they get their information?

They can’t compute it from previous blocks.

They’d have to physically go check who owns what.

What if they’re lazy? Lied to? Made mistakes?

How would anyone else verify the records?

Ownership in the physical world isn’t a problem you can solve by crunching numbers in a database.

Suddenly, we’re right back to needing trusted third parties, the very thing blockchain was supposed to eliminate.

And if there’s a dispute?

Say someone refuses to leave a house, claiming they've lived there forever.

Is the blockchain going to show up and evict them?

Of course not.

Blockchain Without Bitcoin Is Just a Data Structure

And that’s the difference.

When blockchain is part of bitcoin’s closed system, it works because everything it cares about is internal and verifiable.

When you try to export blockchain into the real world — without bitcoin — it loses its magic.

Blockchain-like structures actually exist elsewhere too — take Git, for example.

It’s a chain of commits, each referencing the previous one by its hash.

It chains data like a blockchain does, but without the security, decentralization, or economic meaning behind bitcoin.

Blockchain is just a data structure.

Bitcoin is what gives it meaning.

In bitcoin, the blockchain is not just a ledger: it's a trustless system of property rights enforced by math and energy, without any central authority.

-

@ a4043831:3b64ac02

2025-04-28 11:09:07

While investing is essential for financial planning, it can be a dangerous and random game without a good strategy behind it. A sound strategy builds confidence, gives individuals a roadmap for financial success, and helps them mitigate risk while maximizing return on investment. Investing strategically for long-term growth is key if you want to retire, accumulate wealth, or become financially independent.

Why Investment Strategies are Important

Investment strategies act as roadmaps for financial development, guiding investors to:

- Realize Financial Aims: A properly defined strategy aligns investments with short-term and long-term goals.

- Manage Risks: Appropriate diversification and asset allocation can cushion market fluctuations.

- Maximize Returns: Investing with a strategy supports better decisions and stronger financial results.

- Stick to the Plan: With a strategy established, investors are better at resisting impulsive moves driven by market volatility.

- Strengthen Financial Security: An organized investment strategy offers security and prepares one for unforeseen financial conditions.

Key Steps towards Building an Investment Strategy

Having an efficient investment strategy in place calls for thoughtful planning and careful consideration of many aspects. Here are some key steps to create a winning strategy:

**1. Define Financial Goals** Understanding financial objectives is the first step in developing an investment strategy. Goals can range from saving for a home to retirement or wealth generation. Defining them ensures that investments align with the investor's personal priorities. Goals should be specific, measurable, and time-bound so that progress can be tracked effectively.

**2. Assess Risk Tolerance** Every investor has a unique risk tolerance based on their financial situation and objectives. Assessing it helps decide whether a portfolio should be conservative, moderate, or aggressive. Income stability, investment time horizon, and emotional tolerance for market volatility should all be taken into account.

**3. Diversify Investments** Diversification reduces risk by spreading investments across various asset classes, including stocks, bonds, real estate, and mutual funds. A diversified portfolio cushions adverse movements, so that a decline in one market sector does not have a severe effect on overall returns. It also offers a fair chance of capital gains while maintaining stability.

**4. Allocate Assets Judiciously** Asset allocation is the strategy of spreading investments among different asset classes to balance risk and reward. A financial advisor can help derive a suitable mix based on investment goals and risk tolerance. For example, younger investors with a longer time horizon might hold more stocks, while investors close to retirement might prefer a mix of bonds and other fixed-income securities.

**5. Select the Appropriate Investment Tools** Different investment vehicles suit different goals when building a portfolio. The main options are:

- Stocks: Best suited to long-horizon capital growth, but subject to market risk.

- Bonds: Provide regular income with lower risk. Generally chosen for capital preservation.

- Mutual Funds and ETFs: Diversified, professionally managed investments offering a blend of risk and return.

- Real Estate: Offers passive income and portfolio diversification, and can act as an inflation hedge.

- Alternative Investments: Covers commodities, hedge funds, and currencies, which add portfolio diversification, though not without risks of their own.

**6. Monitor and Rebalance Your Portfolio** Over time, market conditions and personal financial situations change. Investments should be reviewed periodically to confirm that they still match the intended financial goals. Some adjustments may be required to improve performance and mitigate risk. Periodic rebalancing keeps the asset allocation consistent with the original investment plan.

**7. Understand Tax Efficiency** Tax planning is crucial to keeping more of your investment returns. Strategies such as tax-loss harvesting, investing through tax-advantaged accounts, and understanding how capital gains are taxed can minimize tax liabilities and, in turn, enhance returns.
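The rebalancing mentioned in step 6 comes down to simple arithmetic: compare what each asset class is worth now with what it should be worth under the target allocation. The sketch below uses a made-up portfolio and a hypothetical `rebalance` helper. It ignores fees, taxes, and minimum trade sizes, so treat it as an illustration rather than advice.

```python
def rebalance(holdings, targets):
    """Return the amount to buy (+) or sell (-) per asset class to restore the targets."""
    total = sum(holdings.values())
    return {
        asset: round(weight * total - holdings.get(asset, 0.0), 2)
        for asset, weight in targets.items()
    }


# Hypothetical portfolio that started at 60/30/10 and drifted after a stock rally.
holdings = {"stocks": 70_000.0, "bonds": 25_000.0, "real_estate": 5_000.0}
targets = {"stocks": 0.60, "bonds": 0.30, "real_estate": 0.10}

trades = rebalance(holdings, targets)
for asset, amount in trades.items():
    action = "buy" if amount > 0 else "sell"
    print(f"{asset}: {action} {abs(amount):,.2f}")
```

In this example the portfolio is worth 100,000 in total, so stocks are 10,000 above their 60% target, and bonds and real estate are each 5,000 below theirs.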

How Passive Capital Management Can Help

Handling investment choices can be tedious, which is why expert assistance matters. Trusted financial advisors at Passive Capital Management can help individuals create a well-tailored investment strategy. Their professionals help clients with:

- Personalized investment plans that match their particular financial goals.

- Risk-tolerance evaluation and asset-allocation recommendations.

- Portfolio diversification aimed at maximizing returns while minimizing risk.

- Regular monitoring of the client's investment portfolio as markets change.

- Tax-efficient investment strategies for long-term growth.

With experienced, knowledgeable advice in hand, clients can make informed investment decisions and pursue financial security with confidence.

Conclusion

Investment strategies are at the heart of any plan to build prosperity. They provide a systematic way to build wealth and minimize risk. By setting goals, assessing risk capacity, diversifying holdings, and seeking professional investment advice, investors can devise a meaningful plan based on their needs.

For individuals who want to create a strong investment strategy, Passive Capital Management provides professional advice and tailored solutions. Learn more with us and start growing your finances today.

-

@ f683e870:557f5ef2

2025-04-28 10:10:55

Spam is the single biggest problem in decentralized networks. Jameson Lopp, co-founder of Casa and OG bitcoiner, has written a brilliant article on the death of decentralized email that paints a vivid picture of what went wrong, and how an originally decentralized protocol was completely captured. The cause? Spam.

The same fate may befall Nostr, because posting a note is fundamentally cheap. Payments, and to some extent Proof of Work, certainly have their role in fighting spam, but they introduce friction, which doesn’t work everywhere. In particular, they can’t solve every economic problem. Take free trials, for example. There is a reason why 99% of companies offer them. Sure, you waste resources on users who don’t convert, but it’s a calculated cost, a marketing expense. Also, some services can’t or don’t want to monetize directly. They offer something for free and monetize elsewhere.

So how do you offer a free trial or giveaway in a hostile decentralized network? Or even, how do you decide which notes to accept on your relay?

At first glance, these may seem like unrelated questions—but they’re not. Generally speaking, these are situations where you have a finite budget, and you want to use it well. You want more of what you value — and less of what you don’t (spam).

Reputation is a powerful shortcut when direct evaluation isn’t practical. It’s hard to earn and easy to lose, and that’s exactly what makes it valuable. Can a reputable user do bad things? Absolutely. But it’s much less likely, and that’s the point. Heuristics are always imperfect, just like the world we live in.

The legacy Web relies heavily on email-based reputation. If you’ve ever tried to log in with a temporary email, you know what I’m talking about. It just doesn’t work anymore. The problem, as Lopp explains, is that these systems are highly centralized, opaque, and require constant manual intervention. They also suck. They put annoying roadblocks between the world and your product, often frustrating the very users you’re trying to convert.

At Vertex, we take a different approach. We transparently analyze Nostr’s open social graph to help companies fight spam while improving the UX for their users. But we don’t take away your agency; we just do the math. You decide which algorithm and criteria to use.

Think of us as a signal provider, not an authority. You define what reputation means for your use case. Want to rank by global influence? Local or personalized? You’re in control. We give you actionable and transparent analytics so you can build sharper filters, better user experiences, and more resilient systems. That’s how we fight spam, without sacrificing decentralization.

Are you looking to add Web of Trust capabilities to your app or project? Take a look at our website or send a DM to Pip.

-

@ 9727846a:00a18d90

2025-04-13 09:16:41

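Li Chengpeng: Tariffs triggering social upheaval, people rising in revolt? Never mind three thousand years of fields strewn with the starved and families trading children to eat, when anyone given a mouthful of food thanked the emperor for his boundless grace. Take just the present dynasty: on the pretext that the Soviet revisionists had us by the throat, hundreds of millions gnawed tree bark for three years while militiamen with rifles guarded the village entrances, and anyone who managed to sneak out counted as a hero.

Never mind 1998 either, when the premier waved his hand, said the single word "reform," and sixty million workers were laid off, yet the base held rock steady. Just like the laid-off workers from the Northeast in the film The Piano in a Factory (《钢的琴》): some ended up on the streets, some formed a band to play weddings and funerals, some were arrested for reselling scrap steel, some became thieves picking locks, some sold pork, some became prostitutes... but people, following the central leadership's wording, called it "emancipating the mind."

"Workers must think of the country; if I don't get laid off, who will?" That is from Huang Hong's sketch at the 1999 Spring Festival Gala. You find it inhuman now, but back then the audience applauded, and ordinary people were fired up too, suddenly feeling they had power. People readily take the state's standpoint and treat themselves as cannon fodder. The people love the Party and love the country, so why fret about tariffs? Can Trump be harsher than Comrade Stalin or Comrade Khrushchev? Can life be worse than in Chairman Mao's era? So farm produce, beef, and chips get a bit pricier, stocks fall a bit, more of the factories already struggling to survive shut down, more people stand on the streets staring blankly, young people jump from buildings more often, and unidentified bodies keep turning up in the rivers... but 1.5 billion people will quickly dilute these people and events. The proportion is tiny, the confidence immense. In adapting to a life that shifts from human to pig, we have, generation after generation, always led the world.

With popular sentiment behind it, the moment is just right for unification by force.

That is exactly what is wanted. No emperor cares about your quality of life; he cares only whether his power and the empire's territory are secure.

And this, inexplicably, aligns closely with what the people think, as it has for thousands of years. So the chatter will die down after a while, it certainly will, only to reappear at next year's Spring Festival Gala in another, highly "positive energy" form.

Take care. April 9, 2025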

-

@ f7f4e308:b44d67f4

2025-04-09 02:12:18

https://sns-video-hw.xhscdn.com/stream/1/110/258/01e7ec7be81a85850103700195f3c4ba45_258.mp4

-

@ b0137b96:304501dd

2025-04-28 09:25:49

Hollywood continues to deliver thrilling stories that captivate audiences worldwide. But what makes these films even more exciting? Watching them in your preferred language! Thanks to Dimension On Demand (DOD), you can now enjoy the latest Hollywood movies in Hindi, bringing you action-packed adventures, gripping narratives, and explosive sequences without language barriers.

Whether it’s historical mysteries, war-time espionage, or a bizarre transformation, DOD ensures that Hindi-speaking audiences can experience the thrill of Hollywood. Let’s dive into three must-watch action thrillers now available in Hindi!

The Body: A Mystery Buried in Time

What happens when a shocking discovery challenges everything we know about history? The Body is one of the latest Hollywood movies in Hindi that brings mystery, action, and suspense together. The story follows an intense investigation after a crucified body, dating back to the first century A.D., is unearthed in a cave in Jerusalem. As word spreads, chaos ensues, and the race to uncover the truth takes a dangerous turn.

Antonio Banderas, known for his iconic roles in The Mask of Zorro and Pain and Glory, plays Matt Gutierrez, the determined investigator who dives into this centuries-old mystery, uncovering secrets that could change the world. Olivia Williams delivers a compelling performance as Sharon Golban, an archaeologist caught in the web of intrigue. With a cast that includes Derek Jacobi and John Shrapnel, the film blends history, religion, and action seamlessly, making it a must-watch among latest Hollywood movies in Hindi.

Why You Should Watch This Thriller:

- A Gripping Storyline: Experience the tension of a global mystery unraveling in one of the latest Hollywood movies in Hindi
- Famous Hollywood Actors: Antonio Banderas leads an all-star cast in this Hindi-dubbed Hollywood thriller
- Now in Hindi Dubbed: Enjoy this mind-blowing thriller in your language

Secret Weapon: A Deadly Mission Behind Enemy Lines

Set against the backdrop of World War II, Secret Weapon is an electrifying addition to the latest Hollywood movies in Hindi, taking espionage and war action to the next level. The plot follows a group of Soviet soldiers sent on a high-stakes mission to recover a top-secret rocket launcher accidentally abandoned during a hasty retreat. If the Germans get their hands on it, the tide of war could change forever.

Maxim Animateka plays Captain Zaytsev, the fearless leader of the mission, while Evgeniy Antropov and Veronika Plyashkevich bring depth to the ensemble cast. As tensions rise and danger lurks around every corner, the special ops unit must navigate enemy territory to prevent disaster. With its gripping action sequences and historical depth, this latest Hollywood movie in Hindi is a must-watch for war movie enthusiasts.

What Makes This War Thriller Stand Out:

- Non-Stop Action: A thrilling mission filled with suspense and danger
- Historical Relevance: A story set during WWII with gripping realism in this Hindi-dubbed war thriller
- Hindi Dub Available: Now experience this war epic with powerful Hindi dubbing

A Mosquito Man: From Human to Monster

What happens when life takes a turn for the worse? A Mosquito Man is one of the latest Hollywood movies in Hindi that takes sci-fi horror to a new level. The film follows Jim (played by Michael Manasseri), a man whose life is falling apart: he loses his job, his wife is cheating on him, and to top it all off, he gets kidnapped by a deranged scientist. Injected with an experimental serum, Jim undergoes a horrifying transformation, mutating into a human-mosquito hybrid with newfound abilities.

Kimberley Kates plays his estranged wife, while Lloyd Kaufman brings a sinister edge to the role of the mad scientist. As Jim learns to embrace his monstrous form, he embarks on a twisted path of revenge, leaving chaos in his wake. With its mix of action, horror, and sci-fi, this latest Hollywood movie in Hindi delivers a truly unique cinematic experience.

Why This Action Thriller is a Must-Watch:

- A One-of-a-Kind Storyline: A dark and bizarre superhero-like transformation
- Action, Suspense & Thrills Combined: A perfect mix of high-octane action and eerie moments in this Hindi-dubbed action thriller
- Available in Hindi Dubbed: Get ready for an adrenaline-pumping experience

Watch These Latest Hollywood Movies in Hindi on DOD!

With Dimension On Demand (DOD), you no longer have to miss out on Hollywood’s biggest action hits. Whether it’s a historical thriller, a war drama, or an unexpected adventure, the latest Hollywood movies in Hindi are now just a click away. Get ready for high-octane entertainment like never before!

Check out these films now on the DOD YouTube channel!

- Watch The Body in Hindi Dubbed
- Enjoy A Mosquito Man in Hindi Dubbed

Conclusion

Hollywood continues to thrill audiences worldwide, and with these latest Hollywood movies in Hindi, language is no longer a barrier. From gripping mysteries and war-time espionage to bizarre transformations, these films bring non-stop entertainment. Thanks to DOD, you can now enjoy Hollywood’s best action movies in Hindi, making for an immersive and thrilling cinematic experience. So, what are you waiting for? Tune in, grab some popcorn, and dive into the action!

-

@ 04c915da:3dfbecc9

2025-03-12 15:30:46

Recently we have seen a wave of high profile X accounts hacked. These attacks have exposed the fragility of the status quo security model used by modern social media platforms like X. Many users have asked if nostr fixes this, so let's dive in. How do these types of attacks translate into the world of nostr apps? For clarity, I will use X’s security model as representative of most big tech social platforms and compare it to nostr.

The Status Quo

On X, you never have full control of your account. Ultimately, using it requires permission from the company. They can suspend your account or limit your distribution. Theoretically they can even post from your account at will.

An X account is tied to an email and password. Users can also opt into two factor authentication, which adds an extra layer of protection: a login code generated by an app. In theory, this setup works well, but it places a heavy burden on users. You need to create a strong, unique password and safeguard it. You also need to ensure your email account and phone number remain secure, as attackers can exploit these to reset your credentials and take over your account.

Even if you do everything responsibly, there is another weak link: X's infrastructure itself, which allows accounts to be reset through its backend. This could be done maliciously by an employee or by an external attacker who compromises X’s backend. When an account is compromised, the legitimate user often gets locked out, unable to post or regain control without contacting X’s support team. That process can be slow, frustrating, and sometimes fruitless if support denies the request or cannot verify your identity. Oftentimes support will require users to provide identification info in order to regain access, which represents a privacy risk. The centralized nature of X means you are ultimately at the mercy of the company’s systems and staff.

Nostr Requires Responsibility

Nostr flips this model radically. Users do not need permission from a company to access their account, can generate as many accounts as they want, and cannot be easily censored. The key tradeoff here is that users have to take complete responsibility for their security. Instead of relying on a username, password, and corporate servers, nostr uses a private key as the sole credential for your account. Users generate this key and it is their responsibility to keep it safe. As long as you have your key, you can post. If someone else gets it, they can post too. It is that simple. This design has strong implications. Unlike X, there is no backend reset option. If your key is compromised or lost, there is no customer support to call. In a compromise scenario, both you and the attacker can post from the account simultaneously. Neither can lock the other out, since nostr relays simply accept whatever is signed with a valid key.

The benefit? No reliance on proprietary corporate infrastructure. The negative? Security rests entirely on how well you protect your key.

Future Nostr Security Improvements

For many users, nostr’s standard security model, storing a private key on a phone with an encrypted cloud backup, will likely be sufficient. It is simple and reasonably secure. That said, nostr’s strength lies in its flexibility as an open protocol. Users will be able to choose between a range of security models, balancing convenience and protection based on need.

One promising option is a web of trust model for key rotation. Imagine pre-selecting a group of trusted friends. If your account is compromised, these people could collectively sign an event announcing the compromise to the network and designate a new key as your legitimate one. Apps could handle this process seamlessly in the background, notifying followers of the switch without much user interaction. This could become a popular choice for average users, but it is not without tradeoffs. It requires trust in your chosen web of trust, which might not suit power users or large organizations. It also has the issue that some apps may not recognize the key rotation properly and followers might get confused about which account is “real.”
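The web of trust rotation idea can be sketched as a quorum check. This is a hypothetical design for illustration only, not an existing nostr specification: the `RotationAttestation` type, the `accepted_new_key` function, and the threshold rule are all assumptions. It also assumes each attestation's signature has already been verified elsewhere.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Set


@dataclass(frozen=True)
class RotationAttestation:
    """One guardian's statement that old_key is compromised and new_key replaces it."""
    guardian: str
    old_key: str
    new_key: str


def accepted_new_key(
    old_key: str,
    guardians: Set[str],
    threshold: int,
    attestations: Iterable[RotationAttestation],
) -> Optional[str]:
    """Return the replacement key once `threshold` distinct guardians agree, else None."""
    votes = {}
    for att in attestations:
        # Ignore statements about other keys or from people outside the chosen group.
        if att.old_key == old_key and att.guardian in guardians:
            votes.setdefault(att.new_key, set()).add(att.guardian)
    for new_key, voters in votes.items():
        if len(voters) >= threshold:
            return new_key
    return None


guardians = {"alice", "bob", "carol"}
attestations = [
    RotationAttestation("alice", "old-key", "new-key"),
    RotationAttestation("bob", "old-key", "new-key"),
    RotationAttestation("mallory", "old-key", "attacker-key"),  # not a guardian
]
result = accepted_new_key("old-key", guardians, threshold=2, attestations=attestations)
```

Setting the threshold above half of the guardians keeps two competing keys from both reaching quorum. It also shows where the trust sits: a colluding majority of guardians could rotate the account away from its owner.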

For those needing higher security, there is the option of multisig using FROST (Flexible Round-Optimized Schnorr Threshold). In this setup, multiple keys must sign off on every action, including posting and updating a profile. A hacker with just one key could not do anything. This is likely overkill for most users due to complexity and inconvenience, but it could be a game changer for large organizations, companies, and governments. Imagine the White House nostr account requiring signatures from multiple people before a post goes live. That would be much more secure than the status quo big tech model.

Another option is hardware signers, similar to bitcoin hardware wallets. Private keys are kept on secure, offline devices, separate from the internet connected phone or computer you use to broadcast events. This drastically reduces the risk of remote hacks, as private keys never touch the internet. It can be used in combination with multisig setups for extra protection. This setup is much less convenient and probably overkill for most but could be ideal for governments, companies, or other high profile accounts.

Nostr’s security model is not perfect but is robust and versatile. Ultimately users are in control and security is their responsibility. Apps will give users multiple options to choose from and users will choose what best fits their needs.

-

@ 2b24a1fa:17750f64

2025-04-28 09:11:34

An hour of classical music! Every Tuesday at 8 p.m. on Radio München (https://radiomuenchen.net/stream/), the Munich pianist and "Musikdurchdringer" (someone who gets to the heart of music) Jürgen Plich presents great classical music. He shares his experience as a listener and player and his personal view of the masterpieces. He plays special, little-known recordings himself, explains why the music sounds the way it does and not otherwise, and has plenty to tell about the lives of the composers.

Repeated on Sundays at 10 a.m. Or listen again here:

-

@ 2b24a1fa:17750f64

2025-04-28 09:08:01

“Entirely in the spirit of classic cabaret, Franz Esser and Michael Sailer turn to the events of the past month: What happened? And what is there to say about it? It is often startling, and sometimes even funny.”

https://soundcloud.com/radiomuenchen/vier-wochen-wahnsinn-april-25?

-

@ 1b9fc4cd:1d6d4902

2025-04-28 08:50:14

Imagine a classroom where learning is as engaging as your favorite playlist, complex concepts are sung rather than slogged through, and students eagerly anticipate their lessons. This isn’t some utopian dream; it's the reality when music is integrated into education. Music isn’t just a tool for entertainment; it’s a powerful ally in enhancing learning. From improving literacy and language skills to helping those with autism, Daniel Siegel Alonso explores how music makes education a harmonious experience.

Literacy is the bedrock of education, and music can play a pivotal role in forging that foundation. Studies have shown that musical training can significantly enhance reading skills. Music's rhythmic and melodic patterns help spark phonological awareness, which is critical for reading.

Siegel Alonso takes the example of “singing the alphabet.” This simple, catchy tune helps children remember letter sequences more effectively than rote memorization. But it doesn’t stop there. Programs like “Reading Rocks!” incorporate songs and rhymes to teach reading. By engaging multiple senses, these programs make learning to read more interactive and enjoyable.

Music also aids in comprehension and retention. Lyrics often tell stories; children can better grasp narrative structure and vocabulary through songs. The melody and rhythm act as mnemonic devices, making it easier to recall information. So, next time you catch yourself singing a line from a song you heard years ago, remember: your brain’s just showing off impressive recall skills.

Music is a universal language that can bridge cultural and linguistic divides. It offers a fun and effective method for grasping vocabulary, pronunciation, and syntax for those learning a new language.

Siegel Alonso considers the success of the “Language through Music” approach, where songs in the target language are used to teach linguistic elements. Listening to and singing songs helps learners familiarize themselves with the natural rhythm and intonation of the language. It’s like getting a musical earworm that teaches you how to conjugate verbs.

Children’s tunes are particularly effective. They are repetitive, use simple language, and are often accompanied by actions, making them ideal for language learners. Adults can also benefit; think of the many people who have learned basic phrases in a foreign language by singing along to popular songs.

Moreover, music can make the uphill battle of language learning enjoyable. Picture learning Spanish through the vibrant beats of salsa or French through the soulful chansons of Edith Piaf. Music's emotional connection can deepen engagement and motivation, transforming language lessons from a chore into a delightful activity.

For individuals with autism, music can be a bridge to communication and social interaction. Autism often affects language development and social skills, but music has a unique way of reaching across these barriers. Music therapy has shown remarkable results in helping those with autism.

Through musical activities, individuals can express themselves nonverbally, which is especially beneficial for those who struggle with traditional forms of communication. Music can also help develop speech, improve social interactions, and reduce anxiety.

There are many anecdotes of nonverbal autistic children who struggled to communicate but, once introduced to music therapy, began to hum and eventually sing along with their therapist. This breakthrough provided a new way for them to express their feelings and needs, fostering better communication with their family and peers.

Music also helps develop routines and transitions, which can be challenging for individuals with autism. Songs that signal the start of an activity or the end of the day create a predictable structure that can reduce anxiety and improve behavior. Additionally, group music activities encourage social interaction and cooperation, providing a fun and safe environment for practicing these skills.

Music’s impact on learning is profound and multifaceted. It’s not just a tool for young children or those facing specific challenges; it’s a lifelong ally in education. Music's benefits are vast, whether it’s helping students understand mathematical concepts through rhythm, enhancing memory and cognitive skills, or providing a creative outlet.

Integrating music into educational settings creates a more dynamic and engaging learning environment. It adapts to different learning styles, making lessons accessible and palatable for all students. Music fosters a safe, positive, and stimulating atmosphere where learning is effective and joyful.

As we continue to analyze and unlock the power of music in education, Daniel Siegel Alonso notes that it’s clear that the thread between learning and music can lead to academic and personal success. So, let’s keep the music playing in our schools and beyond, ensuring that education hits all the right notes.

-

@ 52b4a076:e7fad8bd

2025-04-28 00:48:57

I have recently been building NFDB, a new relay DB. This post is meant as a short overview.

Regular relays have challenges

Current relay software has significant challenges, which I have experienced when hosting Nostr.land:

- Scalability is only supported by adding full replicas, which does not scale to large relays.
- Most relays use slow databases and are not optimized for large-scale usage.
- Search is near-impossible to implement on standard relays.
- Privacy features such as NIP-42 are lacking.
- Regular DB maintenance tasks on normal relays require extended downtime.
- Fault tolerance, if implemented at all, relies on a load balancer, which is limited.
- Personalization and advanced filtering are not possible.
- Local caching is not supported.

NFDB: A scalable database for large relays

NFDB is a new database meant for medium-to-large-scale relays, built on FoundationDB, that provides:

- Near-unlimited scalability
- Extended fault tolerance
- Instant loading
- Better search
- Better personalization
- and more.

Search

NFDB has extended search capabilities including:

- Semantic search: Search for meaning, not words.
- Interest-based search: Highlight content you care about.
- Multi-faceted queries: Easily filter by topic, author group, keywords, and more at the same time.
- Wide support for event kinds, including users, articles, etc.
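The post does not describe NFDB's actual query interface, so the following is only a rough sketch of what a multi-faceted query could look like when expressed as a standard Nostr subscription. It assumes the relay accepts ordinary NIP-01 filter fields (`kinds`, `authors`, `#t`) plus the NIP-50 `search` field. The helper name `build_req`, the subscription id, and the placeholder pubkey are hypothetical and chosen purely for illustration.

```python
import json


def build_req(sub_id, kinds, authors=None, topics=None, search=None, limit=50):
    """Build a Nostr REQ message that combines several facets in one filter.

    Assumption: the relay understands NIP-01 filters and the NIP-50
    "search" field. This is not NFDB's documented API.
    """
    nostr_filter = {"kinds": list(kinds), "limit": limit}
    if authors:
        # Author group: a list of hex-encoded public keys.
        nostr_filter["authors"] = list(authors)
    if topics:
        # Topic facet: matches events carrying these "t" (hashtag) tags.
        nostr_filter["#t"] = list(topics)
    if search:
        # Keyword facet: free-text query as defined by NIP-50.
        nostr_filter["search"] = search
    return json.dumps(["REQ", sub_id, nostr_filter])


# Hypothetical placeholder key, not a real author.
PLACEHOLDER_PUBKEY = "ab" * 32

msg = build_req(
    "facets-1",
    kinds=[30023],  # long-form articles
    authors=[PLACEHOLDER_PUBKEY],
    topics=["nostr"],
    search="relay database",
)
print(msg)
```

All facets sit in a single filter object, so under NIP-01 semantics an event has to satisfy every one of them to match, which is the "at the same time" behavior the list describes.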

Personalization

NFDB allows significant personalization:

- Customized algorithms: Be your own algorithm.
- Spam filtering: Filter content to your WoT, and use advanced spam filters.
- Topic mutes: Mute topics, not keywords.
- Media filtering: With Nostr.build, you will be able to filter NSFW and other content.
- Low data mode: Block notes that use high amounts of cellular data.
- and more

Other

NFDB has support for many other features such as:

- NIP-42: Protect your privacy with private drafts and DMs
- Microrelays: Easily deploy your own personal microrelay
- Containers: Dedicated, fast storage for discoverability events such as relay lists

Calcite: A local microrelay database

Calcite is a lightweight, local version of NFDB meant for microrelays and caching, designed to support thousands of personal microrelays.

Calcite HA is an additional layer that allows live migration and relay failover in under 30 seconds, providing higher availability compared to current relays with greater simplicity. Calcite HA is enabled in all Calcite deployments.

For zero-downtime, NFDB is recommended.

Noswhere SmartCache

Relays are fixed in one location, but users can be anywhere.

Noswhere SmartCache is a CDN for relays that dynamically caches data on edge servers closest to you, allowing:

- Multiple regions around the world
- Improved throughput and performance
- Faster loading times

routerd

routerd is a custom load balancer optimized for Nostr relays, integrated with SmartCache.

routerd is specifically integrated with NFDB and Calcite HA to provide fast failover and high performance.

Ending notes

NFDB is planned to be deployed to Nostr.land in the coming weeks.

A lot more is to come. 👀

-

@ 8d34bd24:414be32b

2025-04-27 03:42:57

I used to hate end times prophecy because it didn’t make sense. I didn’t understand how the predictions could be true, so I wondered if the fulfillment was more figurative than literal. As time has progressed, I’ve seen technologies and international relations change in ways that make the predictions seem not only possible, but probable. I’ve seen the world look more and more like what is predicted for the end times.

I thought it would be handy to look at the predictions and compare them to events, technologies, and nations today. This is a major undertaking, so this will turn into a series. I only hope I can do it justice. I will have some links to news articles on these current events and technologies. Because I can’t remember where I’ve read many of these things, it is likely I will put some links to some news sources that I don’t normally recommend, but which do a decent job of covering the point I’m making. I’m sorry if I don’t always give a perfect source. I have limited time, so in some cases I’ll link to the easy option (mainstream journals that show up high on web searches) rather than what I consider more reliable sources.

I also want to give one caveat to everything I discuss below. Although I do believe the signs suggest the Rapture and Tribulation are near, I can’t say exactly what that means or how soon these prophecies will be fulfilled. Could it be tomorrow, a month from now, a year from now, or 20 years from now? Yes, any of them could be true. Could it be even farther in the future? It could be, even if my interpretation of the data concludes that to be less likely.

I will start with a long passage from Matthew that describes what Jesus told His disciples to expect before “the end of the age.” Then I’ll go to some of the end times points that seemed unexplainable to me in the past. We’ll see where things go from there. I’ve already had to split discussion of this one passage into multiple posts due to length.

Jesus’s Signs of the End

As He was sitting on the Mount of Olives, the disciples came to Him privately, saying, “Tell us, when will these things happen, and what will be the sign of Your coming, and of the end of the age?”

And Jesus answered and said to them, “See to it that no one misleads you. For many will come in My name, saying, ‘I am the Christ,’ and will mislead many. You will be hearing of wars and rumors of wars. See that you are not frightened, for those things must take place, but that is not yet the end. For nation will rise against nation, and kingdom against kingdom, and in various places there will be famines and earthquakes. But all these things are merely the beginning of birth pangs.

“Then they will deliver you to tribulation, and will kill you, and you will be hated by all nations because of My name. At that time many will fall away and will betray one another and hate one another. Many false prophets will arise and will mislead many. Because lawlessness is increased, most people’s love will grow cold. But the one who endures to the end, he will be saved. This gospel of the kingdom shall be preached in the whole world as a testimony to all the nations, and then the end will come. (Matthew 24:3-14) {emphasis mine}

Before I go into the details, I do want to clarify one thing. The verses that follow the above verses (Matthew 24:16-28) mention the “abomination of desolation” and therefore are clearly discussing the midpoint of the Tribulation and the following 3.5 years, or Great Tribulation. The first half of Matthew 24 discusses the birth pangs and the first half of the Tribulation. The signs that I discuss will be growing immediately preceding the Tribulation, but probably will not be completely fulfilled until the first 3.5 years of the Tribulation.

I do think we will see an increase of all of these signs before the 7 year Tribulation begins as part of the birth pangs even if they are not fulfilled completely until the Tribulation:

- Wars and rumors of wars (Matthew 24:6a)
- Famines (Matthew 24:7)
- Earthquakes (Matthew 24:7)
- Israel will be attacked and will be hated by all nations (Matthew 24:9)
- Falling away from Jesus (Matthew 24:10)
- Many Misled (Matthew 24:10)
- People’s love will grow cold (Matthew 24:12)
- Gospel will be preached to the whole world (Matthew 24:14)

Now let’s go through each of these predictions to see what we are seeing today.

1. Wars and Rumors of Wars

“When you hear of wars and disturbances, do not be terrified; for these things must take place first, but the end does not follow immediately.” (Luke 21:9)

In 1947 the Doomsday Clock was invented. It theoretically tells how close society is to all-out war and destruction of mankind. It was just recently set to 89 seconds to midnight, the closest it has ever been. It is true that this isn’t a scientific measure and politics can affect the setting, i.e. climate change & Trump Derangement Syndrome, but it is still one of many indicators of danger and doom.

There are three main events going on right now that could lead to World War III and the end times.

Obviously the war between Russia and Ukraine has gotten the world divided. It is true that Russia invaded Ukraine, but there were many actions by the US and the EU that provoked this attack. Within months of the initial attack, there was a near agreement between Ukraine and Russia to end the war, but the US and the EU talked Ukraine out of peace, leading to hundreds of thousands of Ukrainians and Russians dying for basically no change of ground. Estimates of deaths vary greatly. See here, here, here. Almost all English sources list Russia as having many more deaths than Ukraine, but since Ukraine is now drafting kids and old men, is considering drafting women, and has most of its defensive capabilities destroyed, while Russia still seems to have plenty of men and weapons, I find this hard to believe. I don’t think any of the parties that have data are motivated to tell the truth. We probably will never know.

The way the EU (and the US until recently) has sacrificed everything to defend Ukraine (until this war known as the most corrupt nation in Europe and known for its actual Nazis) and to do everything in its power to keep the war with Russia going, things could easily escalate. The US and the EU have repeatedly crossed Russia’s red lines. One of these days, Russia is likely to say “enough is enough” and actually attack Europe. This could easily spiral out of control. I do think that Trump’s pullback and negotiations make this less likely to lead to world war than it seemed for the past several years. This article does a decent job of explaining the background for the war that most westerners, especially Americans, don’t understand.

Another lesser-known hot spot is the tension between China and Taiwan. Taiwan is closer politically to the US, but closer economically and culturally to China. This causes tension. Taiwan also produces the majority of the high tech microchips used in advanced technology. Both the US and China want and need this technology. I honestly believe this is the overarching issue regarding Taiwan. If either the US or China got control of Taiwan’s microchip production, it would be military and economic game over for the other. This is stewing, but I don’t think this will be the cause of World War III, although it could become part of the war that leads to the Antichrist ruling the world.

The war that is likely to lead to the Tribulation involves Israel and the Middle East. Obviously, the Muslim nations hate Israel and attack them almost daily. We also see Iran, Russia, Turkey, and other nations making alliances that sound a lot like the Gog/Magog coalition in Ezekiel 38. The hate of Israel has grown to a level that makes zero sense unless you take into account the spiritual world and Bible prophecy. Such a small insignificant nation, that didn’t even exist for ~1900 years, shouldn’t have the influence on world politics that it does. It is about the size of the state of New Jersey. Most nations of Israel’s size, population, and economy are not even recognized by most people. Is there a person on earth that doesn’t know about Israel? I doubt it. Every nation on earth seems to have a strong positive or, more commonly, negative view of Israel. We’ll get to this hate of Israel more below in point 4.

2. Famines

In the two parallel passages to Matthew 24, there is once again the prediction of famines coming before the end.

For nation will rise up against nation, and kingdom against kingdom; there will be earthquakes in various places; there will also be famines. These things are merely the beginning of birth pangs. (Mark 13:8) {emphasis mine}

and there will be great earthquakes, and in various places plagues and famines; and there will be terrors and great signs from heaven. (Luke 21:11) {emphasis mine}

In Revelation, the third seal releases famine upon the earth and a day’s wages will only buy one person’s daily wheat needs. A man with a family would only be able to buy lower quality barley to barely feed his family.

When He broke the third seal, I heard the third living creature saying, “Come.” I looked, and behold, a black horse; and he who sat on it had a pair of scales in his hand. And I heard something like a voice in the center of the four living creatures saying, “A quart of wheat for a denarius, and three quarts of barley for a denarius; and do not damage the oil and the wine.” (Revelation 6:5-6) {emphasis mine}

We shouldn’t fear a Tribulation level famine as a precursor to the Tribulation, but we should see famines scattered around the world, shortages of different food items, and rising food prices, all of which we are seeing. (Once again, I can’t support many of these sources or verify all of their data, but they give us a feel of what is going on today.)

Food Prices Go Up

-

Bird Flu scares and government responses cause egg and chicken prices to increase. The government response to the flu is actually causing more problems than the flu itself and it looks like this more dangerous version may have come out of a US lab.

-

Tariffs and trade war cause some items to become more expensive or less available. here

-

Ukraine war effecting the supply of grain and reducing availability of fertilizer. More info.

-

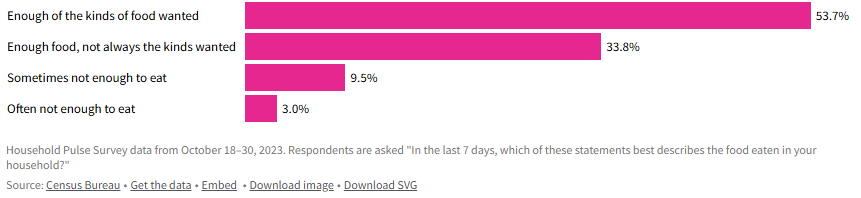

Inflation and other effects causing food prices to go up. This is a poll from Americans.

- Grocery prices overall have increased around 23% since 2021, with prices on individual items like coffee and chocolate rising much faster.

- Grocery prices overall have increased around 23% since 2021, with prices on individual items like coffee and chocolate rising much faster.-

General Food inflation is difficult, but not life destroying for most of the world, but some nations are experiencing inflation that is causing many to be unable to afford food. Single digit food inflation is difficult, even in well-to-do nations, but in poor nations, where a majority of the people’s income already goes to food, it can be catastrophic. When you look at nations like Zimbabwe (105%), Venezuela (22%), South Sudan (106%), Malawi (38%), Lebanon (20%), Haiti (37%), Ghana (26%), Burundi (39%), Bolivia (35%), and Argentina (46%), you can see that there are some seriously hurting people. More info.

-

It does look like general food inflation has gone down for the moment (inflation has gone down, but not necessarily prices), but there are many situations around the world that could make it go back up again.

-

Wars causing famine

- Sudan: War has made an already poor and hurting country even worse off.
- Gaza: (When I did a web search, all of the sites that came up on the first couple of pages are Israel hating organizations that are trying to cause trouble and/or raise money, so there is major bias. I did link to one of these sites just to be thorough, but take into account the bias of the source.)
- Ukraine: Mostly covered above. The war in Ukraine has affected the people of Ukraine and the world negatively relative to food.

I’m sure there are plenty more evidences for famine or potential famine, but this gives a taste of what is going on.

Our global economy has good and bad effects on the food supply. Being able to transport food around the globe means that when one area has a bad crop, they can import food from another area that produced more than they need. On the other hand, sometimes an area stops producing food because they can import food more cheaply. If something disrupts that imported food (tariffs, trade wars, physical wars, transportation difficulties, intercountry disputes, etc.) then they suddenly have no food. We definitely have a fragile system, where there are many points that could fail and cause famine to abound.

The Bible also talks about another kind of famine in the end times.

“Behold, days are coming,” declares the Lord God,
“When I will send a famine on the land,
*Not a famine for bread or a thirst for water,*
*But rather for hearing the words of the Lord*.
People will stagger from sea to sea
And from the north even to the east;
They will go to and fro to seek the word of the Lord,
But they will not find it. (Amos 8:11-12) {emphasis mine}

We are definitely seeing a famine regarding the word of God. It isn’t that the word of God is not available, but even in churches, there is a lack of teaching the actual word of God from the Scriptures. Many churches teach more self-help or feel good messages than they do the word of God. Those looking to know God better are starving or thirsting for truth and God’s word. I know multiple people who have given up on assembling together in church because they can’t find a Bible believing, Scripture teaching church. How sad!

Although famine should be expected before the Tribulation, the good news is that no famine will separate us from our Savior.

Who will separate us from the love of Christ? Will tribulation, or distress, or persecution, or famine, or nakedness, or peril, or sword? (Romans 8:35) {emphasis mine}

3. Earthquakes

We recently saw a major ~7.8 earthquake in Myanmar. Although it seems like we are having many major earthquakes, it is more difficult to determine whether there is actually a major increase or if the seeming increase is due to a growing population in harm’s way, more/better instrumentation, and/or more media coverage. We are definitely seeing lots of earthquake damage and loss of life. I tend to think the number and severity of earthquakes will increase even more before the Tribulation, but only time will tell.

4. Israel will be attacked and will be hated by all nations

“Then they will deliver you [Israel] to tribulation, and will kill you, and you will be hated by all nations because of My name. (Matthew 24:9) {emphasis & clarification mine}

This verse doesn’t specifically mention Israel. It says “you,” but since Jesus was talking to Jews, the best interpretation is that this warning is to the Jews. At the same time, we are also seeing attacks on Christians, so it likely refers to both Jews and Christians. I’m going to focus on Jews/Israel because I don’t think I need to convince most Christians that persecution is increasing.

We have been seeing hatred of Jews and Israel growing exponentially since the biblical prediction of a re-establishment of Israel was accomplished.

All end times prophecy focuses on Israel and requires Israel to be recreated again since it was destroyed in A.D. 70.

Who has heard such a thing? Who has seen such things?
Can a land be born in one day?
Can a nation be brought forth all at once?
As soon as Zion travailed, she also brought forth her sons. (Isaiah 66:8)

- “British Foreign Minister Lord Balfour issued on November 2, 1917, the so-called Balfour Declaration, which gave official support for the “establishment in Palestine of a national home for the Jewish people” with the commitment not to be prejudiced against the rights of the non-Jewish communities.” In one day Israel was declared a nation.
- “On the day when the British Mandate in Palestine expired, the State of Israel was instituted on May 14, 1948, by the Jewish National Council under the presidency of David Ben Gurion.” Then on another day Israel actually came into being with a leader and citizens.
- “Six-Day War: after Egypt closed the Straits of Tiran on May 22, 1967, Israel launched an attack on Egyptian, Jordanian, Syrian, and Iraqi airports on June 5, 1967. After six days, Israel conquered Jerusalem, the Golan Heights, Sinai, and the West Bank.” On June 11, 1967 Jerusalem was conquered and once again became the capital of Israel.

If you read any of these links you can see the history of Israel being repeatedly attacked in an attempt to destroy Israel and stop God’s prophecy that Israel would be recreated and be used in the end times as part of the judgement of the world. This is a very good article on how God plans to use Israel in end times, how God will fulfill all of his promises to Israel, and how the attacks on Israel are Satan’s attempt to stop God’s plan. It is well worth your time to read and well supported by Scripture.

Since Israel became a new nation again, the nations of the world have ramped up their attacks on Israel and the Jews. The hatred of the Jews is hard to fathom. The Jews living in Israel have been constantly at risk of suicide bombers, terrorist attacks, rocket/missile attacks, etc. Almost daily attacks are common recently. The most significant recent attack happened on October 7th, 2023. Around 3,000 Hamas terrorists stormed across the border and attacked men, women, and children. About 1,200 were killed, mostly civilians and even kids. In addition to murdering these innocent individuals, others were tortured, raped, and kidnapped as well.

You would expect the world to rally around a nation attacked in such a horrendous manner (like most of the world rallied around the US after 9/11), but instead you immediately saw protests supporting Palestine and condemning Israel. I’ve never seen something so upside down in my life. It is impossible to comprehend until you consider the spiritual implications. Satan has been trying to destroy Israel and the Jews since God made His first promise to Abraham. I will never claim that everything Israeli politicians and generals do is good, but the hate towards this tiny, insignificant nation is unfathomable and the world supporting terrorist attacks, instead of the victims of these attacks, is beyond belief.

Israel allows people of Jewish ancestry and Palestinian ancestry to be citizens and vote. There are Jews, Muslims, and Christians in the Knesset (Jewish Congress). Yes, Israel has responded harshly against the Palestinians and innocents have been harmed, but Israel repeatedly gave up land for peace and then that land has been used to attack them. I can’t really condemn them for choosing to risk the death of Palestinian innocents over risking the death of their own innocents. Hamas and Hezbollah are known for attacking innocents, and then using their own innocents as human shields. They then accuse their victims of atrocities when their human shields are harmed. The UN Human Rights council condemns Israel more than all other nations combined when there are atrocities being committed in many, many other nations that are as bad or worse. Why is the world focused on Israel and the Jews? It is because God loves them (despite their rejection of Him) and because Satan hates them.

Throughout history the world has tried to destroy the Jews, but thanks to God and His eternal plan, they are still here and standing strong. The hate is growing to a fever pitch, just as predicted by Jesus.

This post has gotten so long that it can’t be emailed, so I will post the final 4 points in a follow-up post. I hope these details are helpful to you, and that you can see that all of the craziness, hate, and destruction occurring in the world today was known by God and is being used by God for His glory and for good according to His perfect plan.

When we see that everything happening in the world is just part of God’s perfect plan, we can have peace, knowing that God is in control. We need to lean on Him and trust Him just as a young child feels safe in his father’s arms. At the same time, seeing the signs should encourage us to share the Gospel with unbelievers because our time is short. Don’t put off sharing Jesus with those around you because you might not get another chance.

Trust Jesus.

FYI, I hope to write several more articles on the end times (signs of the times, the rapture, the millennium, and the judgement), but I might be a bit slow rolling them out because I want to make sure they are accurate and well supported by Scripture. You can see my previous posts on the end times on the end times tab at trustjesus.substack.com. I also frequently will list upcoming posts.

-

-

@ eb35d9c0:6ea2b8d0

2025-03-02 00:55:07

I had a ton of fun making this episode of Midnight Signals. It taught me a lot about the haunting of the Bell family and the demise of John Bell. His death was attributed to the Bell Witch, making Tennessee the only state to recognize a person's death as caused by the supernatural.

If you enjoyed the episode, visit the Midnight Signals site. https://midnightsignals.net

Show Notes

Journey back to the early 1800s and the eerie Bell Witch haunting that plagued the Bell family in Adams, Tennessee. It began with strange creatures and mysterious knocks, evolving into disembodied voices and violent attacks on young Betsy Bell. Neighbors, even Andrew Jackson, witnessed the phenomena, adding to the legend. The witch's identity remains a mystery, sowing fear and chaos, ultimately leading to John Bell's tragic demise. The haunting waned, but its legacy lingers, woven into the very essence of the town. Delve into this chilling story of a family's relentless torment by an unseen force.

Transcript

Good evening, night owls. I'm Russ Chamberlain, and you're listening to Midnight Signals, the show where we explore the darkest corners of our collective past. Tonight, our signal takes us to the early 1800s, to a modest family farm in Adams, Tennessee, where the Bell family encountered what many call the most famous haunting in American history.

Make yourself comfortable, hush your surroundings, and let's delve into this unsettling tale. Our story begins in 1804, when John Bell and his wife Lucy made their way from North Carolina to settle along the Red River in northern Tennessee. In those days, the land was wide and fertile, mostly unspoiled with gently rolling hills and dense woodland.

For the Bells, John, Lucy, and their children, the move promised prosperity. They arrived eager to farm the rich soil, raise livestock, and find a peaceful home. At first, life mirrored [00:01:00] that hope. By day, John and his sons worked tirelessly in the fields, planting corn and tending to animals, while Lucy and her daughters managed the household.

Evenings were spent quietly, with scripture readings by the light of a flickering candle. Neighbors in the growing settlement of Adams spoke well of John's dedication and Lucy's gentle spirit. The Bells were welcomed into the fold, a new family building their future on the Tennessee earth. In those early years, the Bells likely gave little thought to uneasy rumors whispered around the region.

Strange lights seen deep in the woods, soft cries heard by travelers at dusk, small mysteries that most dismissed as products of the imagination. Life on the frontier demanded practicality above all else, leaving little time to dwell on spirits or curses. Unbeknownst to them, events on their farm would soon dominate not only their lives, but local lore for generations to come.[00:02:00]

It was late summer, 1817, when John Bell's ordinary routines took a dramatic turn. One evening, in the waning twilight, he spotted an odd creature near the edge of a tree line. A strange beast resembling part dog, part rabbit. Startled, John raised his rifle and fired, the shot echoing through the fields. Yet, when he went to inspect the spot, nothing remained.

No tracks, no blood, nothing to prove the creature existed at all. John brushed it off as a trick of falling light or his own tired eyes. He returned to the house, hoping for a quiet evening. But in the days that followed, faint knocking sounds began at the windows after sunset. Soft scratching rustled against the walls as if curious fingers or claws tested the timbers.

The family's dog barked at shadows, growling at the emptiness of the yard. No one considered it a haunting at first. Life on a rural [00:03:00] farm was filled with pests, nocturnal animals, and the countless unexplained noises of the frontier. Yet the disturbances persisted, night after night, growing a little bolder each time.

One evening, the knocking on the walls turned so loud it woke the entire household. Lamps were lit, doors were opened, the grounds searched, but the land lay silent under the moon. Within weeks, the unsettling taps and scrapes evolved into something more alarming. Disembodied voices. At first, the voices were faint.

A soft murmur in rooms with no one in them. Betsy Bell, the youngest daughter, insisted she heard her name called near her bed. She ran to her mother and her father trembling, but they found no intruder. Still, the voice continued, too low for them to identify words, yet distinct enough to chill the blood.

Lucy Bell began to fear they were facing a spirit, an unclean presence that had invaded their home. She prayed for divine [00:04:00] protection each evening, yet sometimes the voice seemed to mimic her prayers, twisting her words into a derisive echo. John Bell, once confident and strong, grew unnerved. When he tried reading from the Bible, the voice mocked him, imitating his tone like a cruel prankster.

As the nights passed, disturbances gained momentum. Doors opened by themselves, chairs shifted with no hand to move them, and curtains fluttered in a room void of drafts. Even in daytime, Betsy would find objects missing, only for them to reappear on the kitchen floor or a distant shelf. It felt as if an unseen intelligence roamed the house, bent on sowing chaos.

Of all the Bells, Betsy suffered the most. She was awakened at night by her hair being yanked hard enough to pull her from sleep. Invisible hands slapped her cheeks, leaving red prints. When she walked outside by day, she heard harsh whispers at her ear, telling her she would know [00:05:00] no peace. Exhausted, she became withdrawn, her once bright spirit dulled by a ceaseless fear.

Rumors spread that Betsy's torment was the worst evidence of the haunting. Neighbors who dared spend the night in the Bell household often witnessed her blankets ripped from the bed, or watched her clutch her bruised arms in distress. As these accounts circulated through the community, people began referring to the presence as the Bell Witch, though no one was certain if it truly was a witch's spirit or something else altogether.

In the tightly knit town of Adams, word of the strange happenings at the Bell farm soon reached every ear. Some neighbors offered sympathy, believing wholeheartedly that the family was besieged by an evil force. Others expressed skepticism, guessing there must be a logical trick behind it all. John Bell, ordinarily a private man, found himself hosting visitors eager to witness the so-called witch in action.

[00:06:00] These visitors gathered by the parlor fireplace or stood in darkened hallways, waiting in tense silence. Occasionally, the presence did not appear, and the disappointed guests left unconvinced. More often, they heard knocks vibrating through the walls or faint moans drifting between rooms. One man, reading aloud from the Bible, found his words drowned out by a rasping voice that repeated the verses back at him in a warped, sing-song tone.

Each new account that left the Bell farm seemed to confirm the unearthly intelligence behind the torment. It was no longer mere noises or poltergeist pranks. This was something with a will and a voice. Something that could think and speak on its own. Months of sleepless nights wore down the Bell family.

John's demeanor changed. The weight of the haunting pressed on him. Lucy, steadfast in her devotion, prayed constantly for deliverance. The [00:07:00] older Bell children, seeing Betsy attacked so frequently, tried to shield her but were powerless against an enemy that slipped through walls. Farming tasks were delayed or neglected as the family's time and energy funneled into coping with an unseen assailant.

John Bell began experiencing health problems that no local healer could explain: trembling hands, difficulty swallowing, and fits of dizziness. Whether these ailments arose from stress or something darker, they only reinforced his sense of dread. The voice took to mocking him personally, calling him by name and snickering at his deteriorating condition.

At times, he woke to find himself pinned in bed, unable to move or call out. Despite it all, Lucy held the family together. Soft spoken and gentle, she soothed Betsy's tears and administered whatever remedies she could to John. Yet the unrelenting barrage of knocks, whispers, and violence within her own home tested her faith [00:08:00] daily.

Amid the chaos, Betsy clung to one source of joy: her engagement to Joshua Gardner, a kind young man from the area. They hoped to marry and begin their own life, perhaps on a parcel of the Bell land or a new farmstead nearby. But whenever Joshua visited the Bell home, the unseen spirit raged. Stones rattled against the walls, and the door slammed as if in warning.

During quiet walks by the river, Betsy heard the voice hiss in her ear, threatening dire outcomes if she ever were to wed Joshua. Night after night, Betsy lay awake, her tears soaked onto her pillow as she wrestled with the choice between her beloved fiancé and this formidable, invisible foe. She confided in Lucy, who offered comfort but had no solution.

For a while, Betsy and Joshua resolved to stand firm, but the spirit's fury only escalated. Believing she had no alternative, Betsy broke off the engagement. Some thought the family's [00:09:00] torment would subside if the witch's demands were met. In a cruel sense, it seemed to succeed. With Betsy's engagement ended, the spirit appeared slightly less focused on her.

By now, the Bell Witch was no longer a mere local curiosity. Word of the haunting spread across the region and reached the ears of Andrew Jackson, then a prominent figure who would later become president. Intrigued, or perhaps skeptical, he traveled to Adams with a party of men to witness the phenomenon firsthand.

According to popular accounts, the men found their wagon inexplicably stuck on the road near the Bell property, refusing to move until a disembodied voice commanded them to proceed. That night, Jackson's men sat in the Bell parlor, determined to uncover fraud if it existed. Instead, they found themselves subjected to jeering laughter and unexpected slaps.

One boasted of carrying a special bullet that could kill any spirit, only to be chased from the house in terror. [00:10:00] By morning, Jackson reputedly left, shaken. Although details vary among storytellers, the essence of his experience only fueled the legend's fire. Some in Adams took to calling the presence Kate, suspecting it might be the spirit of a neighbor named Kate Batts.

Rumors pointed to an old feud or land dispute between Kate Batts and John Bell. Whether any of that was true, or Kate Batts was simply an unfortunate scapegoat, remains unclear. The entity itself at times answered to Kate when addressed, while at other times denying any such name. It was a puzzle of contradictions, claiming multiple identities.

A wayward spirit, a demon, or a lost soul wandering in malice. No single explanation satisfied everyone in the community. With Betsy's engagement to Joshua broken, the witch devoted increasing attention to John Bell. His health declined rapidly in 1820, marked by spells of near [00:11:00] paralysis and unremitting pain.

Lucy tended to him day and night. Their children, worried and exhausted, watched as their patriarch grew weaker, his once strong presence withering under an unseen hand. In December of that year, John Bell was found unconscious in his bed. A small vial of dark liquid stood nearby. No one recognized its contents.

One of his sons put a single drop on the tongue of the family cat, which died instantly. Almost immediately, the voice shrieked in triumph, boasting that it had given John a final, fatal dose. That same day, John Bell passed away without regaining consciousness, leaving his family both grief-stricken and horrified by the witch's brazen gloating.

The funeral drew a large gathering. Many came to mourn the respected farmer. Others arrived to see whether the witch would appear in some dreadful form. As pallbearers lowered John Bell's coffin, a jeering laughter rippled across the [00:12:00] mourners, prompting many to look wildly around for the source. Then, as told in countless retellings, the voice broke into a rude, mocking song, echoing among the gravestones and sending shudders through the crowd.

In the wake of John Bell's death, life on the farm settled into an uneasy quiet. Betsy noticed fewer nighttime assaults, and the daily havoc lessened. People whispered that the witch had finally achieved its purpose by taking John Bell's life. Then, just as suddenly as it had arrived, the witch declared it would leave the family, though it promised to return in seven years.

After a brief period of stillness, the witch's threat rang true. Around 1828, a few of the Bells claimed to hear light tapping or distant murmurs echoing in empty rooms. However, these new incidents were mild and short-lived compared to the previous years of torment. Soon enough, even these faded, leaving the Bells [00:13:00] with haunted memories, but relative peace.

Near the Bell property stood a modest cave by the Red River, a spot often tied to the legend. Over time, people theorized that the witch had retreated to the cave's dark recesses, though the Bells themselves rarely ventured inside. Later visitors and locals would tell of odd voices whispering in the cave or strange lights gliding across the damp stone.

Most likely, these stories were born of the haunting's lingering aura. Yet, they continued to fuel the notion that the witch could still roam beyond the farm, hidden beneath the earth. Long after the bells had ceased to hear the witch's voice, the story lived on. Word traveled to neighboring towns, then farther, into newspapers and traveler anecdotes.

The tale of the Tennessee family plagued by a fiendish, talkative spirit captured the imagination. Some insisted the Bell Witch was a cautionary omen of what happens when old feuds and injustices are left [00:14:00] unresolved. Others believed it was a rare glimpse of a diabolical power unleashed for reasons still unknown.

Here in Adams, people repeated the story around hearths and campfires. Children were warned not to wander too far near the old Bell farm after dark. When neighbors passed by at night, they might hear a faint rustle in the bush or catch a flicker of light among the trees, prompting them to walk faster.

Hearts pounding, minds remembering how once a family had suffered greatly at the hands of an unseen force. Naturally, not everyone agreed on what transpired at the Bell farm. Some maintained it was all too real, a case of a vengeful spirit or malignant presence carrying out a personal vendetta. Others whispered that perhaps a member of the Bell family had orchestrated the phenomenon with cunning trickery, though that failed to explain the bruises on Betsy, the widespread witnesses, or John's mysterious death.

Still others pointed to the possibility of an [00:15:00] unsettled spirit who had attached itself to the land for reasons lost to time. What none could deny was the tangible suffering inflicted on the Bells. John Bell's slow decline and Betsy's bruises were impossible to ignore. Multiple guests, neighbors, acquaintances, even travelers testified to hearing the same eerie voice that threatened, teased, and recited scripture.

In an age when the supernatural was both feared and accepted, the Bell Witch story captured hearts and sparked endless speculation. After John Bell's death, the family held onto the farm for several years. Betsy, robbed of her engagement to Joshua, eventually found a calmer path through life, though the memory of her tormented youth never fully left her.

Lucy, steadfast and devout to the end, kept her household as best as she could, unwilling to surrender her faith even after all she had witnessed. Over time, the children married and started families of their own, [00:16:00] quietly distancing themselves from the tragedy that had defined their upbringing.

Generations passed, the farm changed hands, the Bell house was repurposed and renovated, and Adams itself transformed slowly from a frontier settlement into a more established community. Yet the name Bell Witch continued to slip into conversation whenever strange knocks were heard late at night or lonely travelers glimpsed inexplicable lights in the distance.

The story refused to fade, woven into the identity of the land itself. Even as the firsthand witnesses to the haunting aged and died, their accounts survived in letters, diaries, and recollections passed down among locals. Visitors to Adams would hear about the famed Bell Witch, about the dreadful death of John Bell, the heartbreak of Betsy's broken engagement, and the brazen voice that filled nights with fear.

Some folks approached the story with reverence, others with skepticism. But no one [00:17:00] denied that it shaped the character of the town. In the hush of a moonlit evening, one might stand on that old farmland, fields once tilled by John Bell's callused hands, now peaceful beneath the Tennessee sky, and imagine the entire family huddled in the house, listening with terrified hearts for the next knock on the wall.

It's said that if you pause long enough, you might sense a faint echo of their dread, carried on a stray breath of wind. The Bell Witch remains a singular chapter in American folklore, a tale of a family besieged by something unseen, lethal, and uncannily aware. However one interprets the events, whether as a vengeful ghost, a demonic presence, or some other unexplainable force, the Bell Witch's resonance lies in the very human drama at its core.

Here was a father undone by circumstances he could not control, a daughter tormented in her own home, and a close-knit household tested by relentless fear. [00:18:00] In the end, the Bell Witch story offers a lesson in how thin the line is between our daily certainties and the mysteries that defy them.

When night falls, and the wind rattles the shutters in a silent house, we remember John Bell and his family, who discovered that the safe haven of home can become a battlefield against forces beyond mortal comprehension. I'm Russ Chamberlain, and you've been listening to Midnight Signals. May this account of the Bell Witch linger with you as a reminder that in the deepest stillness of the night, anything seems possible.

Even the unseen tapping of a force that seeks to make itself known. Sleep well, if you dare.

-

@ bfd004ea:ed7ac83d

2025-04-26 21:23:30

This is another test.

-

@ 68c90cf3:99458f5c

2025-04-26 15:05:41

Background

Last year I got interested in running my own bitcoin node after reading others' experiences doing so. A couple of decades ago I ran my own Linux and Mac servers, and enjoyed building and maintaining them. I was by no means an expert sysadmin, but had my share of cron jobs, scripts, and custom configuration files. While it was fun and educational, software updates and hardware upgrades often meant hours of restoring and troubleshooting my systems.

Fast forward to family and career (especially going into management) and I didn't have time for all that. Having things just work became more important than playing with the tech. The older I got, the more I appreciated K.I.S.S. (for those who don't know: Keep It Simple, Stupid).