-

@ 04c915da:3dfbecc9

2025-03-10 23:31:30

Bitcoin has always been rooted in freedom and resistance to authority. I get that many of you are conflicted about the US Government stacking but by design we cannot stop anyone from using bitcoin. Many have asked me for my thoughts on the matter, so let’s rip it.

**Concern**

One of the most glaring issues with the strategic bitcoin reserve is its foundation, built on stolen bitcoin. For those of us who value private property this is an obvious betrayal of our core principles. Rather than proof of work, the bitcoin that seeds this reserve has been taken by force. The US Government should return the bitcoin stolen from Bitfinex and the Silk Road.

Using stolen bitcoin for the reserve creates a perverse incentive. If governments see bitcoin as a valuable asset, they will ramp up efforts to confiscate more bitcoin. The precedent is a major concern, and I stand strongly against it, but it should also be noted that governments were already seizing coin before the reserve, so this is not really a change in policy.

Ideally all seized bitcoin should be burned, by law. This would align incentives properly and make it less likely for the government to actively increase coin seizures. Due to the truly scarce properties of bitcoin, all burned bitcoin helps existing holders through increased purchasing power regardless. This change would be unlikely, but those of us in policy circles should push for it anyway. It would be the best-case scenario for American bitcoiners and would create a strong foundation for the next century of American leadership.

**Optimism**

The entire point of bitcoin is that we can spend or save it without permission. That said, it is a massive benefit to not have one of the strongest governments in human history actively trying to ruin our lives.

Since the beginning, bitcoiners have faced horrible regulatory trends. KYC, surveillance, and legal cases have made using bitcoin and building bitcoin businesses incredibly difficult. It is incredibly important to note that over the past year that trend has reversed for the first time in a decade. A strategic bitcoin reserve is a key driver of this shift. By holding bitcoin, the strongest government in the world has signaled that it is not just a fringe technology but rather truly valuable, legitimate, and worth stacking.

This alignment of incentives changes everything. The US Government stacking proves bitcoin’s worth. The resulting purchasing power appreciation helps all of us who are holding coin and as bitcoin succeeds our government receives direct benefit. A beautiful positive feedback loop.

**Realism**

We are trending in the right direction. A strategic bitcoin reserve is a sign that the state sees bitcoin as an asset worth embracing rather than destroying. That said, there is a lot of work left to be done. We cannot be lulled into complacency. The time to push forward is now, and we cannot take our foot off the gas. We have a seat at the table for the first time ever. Let's make it worth it.

We must protect the right to free usage of bitcoin and other digital technologies. Freedom in the digital age must be taken and defended, through both technical and political avenues. Multiple privacy focused developers are facing long jail sentences for building tools that protect our freedom. These cases are not just legal battles. They are attacks on the soul of bitcoin. We need to rally behind them, fight for their freedom, and ensure the ethos of bitcoin survives this new era of government interest. The strategic reserve is a step in the right direction, but it is up to us to hold the line and shape the future.

-

@ 2b1964b8:851949fa

2025-03-02 19:00:56

### Routine Picture-in-Picture American Sign Language Interpretation in American Broadcasting

(PiP, ASL)

Picture-in-picture sign language interpretation is a standard feature in news broadcasts across the globe. Why hasn’t America become a leader in picture-in-picture implementation too?

**Misconception.**

There are prevalent misunderstandings about the necessity of ASL interpreters in the media and beyond. As recently as January 2025, an American influencer with ~10M social followers on Instagram and X combined referred to sign language interpreters during emergency briefings as a distraction.

Such views overlook the fact that, for many deaf individuals, American Sign Language is their primary language. It is wrongly assumed that deaf Americans know, or should know, English. American Sign Language differs in grammatical structure from English. Moreover, human interpreters are able to convey nuances that captions often miss, such as non-manual markers: the facial expressions, body movements, and head positions used in sign language to convey meaning. English is the native language for many hearing Americans, who have access to it throughout the United States without any additional expectation placed upon them.

A deeper understanding reveals that many nations have their own unique signed languages, reflecting their local deaf culture and community — Brazilian Sign Language, British Sign Language, Finnish Sign Language, French Sign Language, Japanese Sign Language, Mexican Sign Language, Nigerian Sign Language, and South African Sign Language, among numerous others.

**Bottom Line:** American Sign Language is the native language for many American-born deaf individuals, and English is the native language for many American-born hearing individuals. It is a one-for-one relationship; both are equal.

In an era where information dissemination is instantaneous, ensuring that mainstream broadcasts are accessible to all citizens is paramount.

### Public Figures Including Language Access In Their Riders

**What's a rider?**

A rider is an addendum or supplemental clause added to a contract that expands or adjusts the contract's terms. Riders are commonly used in agreements for public figures to specify additional requirements such as personal preferences or technical needs.

**A Simple Yet Powerful Action**

Public figures have a unique ability to shape industry standards, and by including language access in their riders, they can make a profound impact with minimal effort.

* On-site American Sign Language interpretation ensures that deaf and hard-of-hearing individuals can fully engage with speeches and live events.

* Open captions (burned-in captions) for all live and post-production interview segments guarantee accessibility across platforms, making spoken content instantly available to a wider audience.

These measures don’t just benefit deaf constituents; they also support language learners, individuals in sound-sensitive environments, and anyone who relies on, or simply prefers, visual reinforcement to engage with spoken content.

For public figures, adding these two requests to a rider is one of the most efficient and immediate ways to promote accessibility. By normalizing language access as a standard expectation, you encourage event organizers, broadcasters, and production teams to adopt these practices universally.

As a result, there will be an industry shift from accessibility as an occasional accommodation to an industry norm, ensuring that future events, interviews, and media content are more accessible for all.

Beyond immediate accessibility, the regular presence of interpreters in public spaces increases awareness of sign language. Seeing interpreters in mainstream media can spark interest among both deaf and hearing children to pursue careers in interpretation, expanding future language access and representation.

### Year-Round Commitment to Accessibility

Too often, language access is only considered when an immediate demand arises, which leads to rushed or inadequate solutions. While some events may include interpretation or captioning, these efforts can fall short when they lack the expertise and coordination necessary for true disability justice. Thoughtful, proactive planning ensures that language access is seamlessly integrated into events, rather than being a reactive measure.

Best practices happen when all key players are involved from the start:

* Accessibility leads with combined production and linguistic knowledge who can ensure accessibility remains central to the purpose rather than allowing themselves to be caught up in the spectacle of an event.

* Language experts who ensure accuracy and cultural competency.

* Production professionals who understand event logistics.

By prioritizing accessibility year-round, organizations create spaces where disability justice is not just accommodated, but expected—ensuring that every audience member, regardless of language needs, has access to information and engagement.

-

@ 97c70a44:ad98e322

2025-01-30 17:15:37

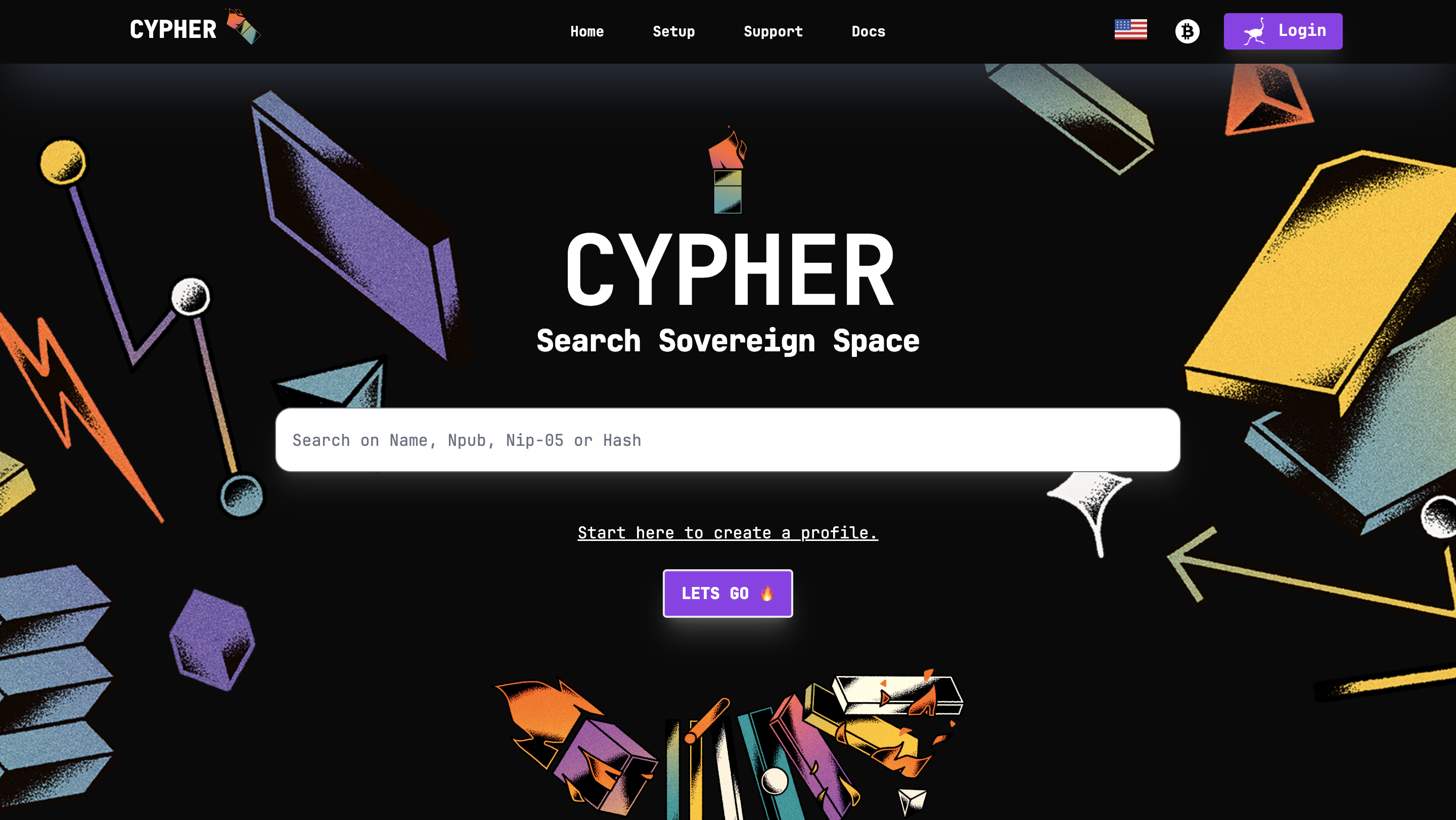

There was a slight dust-up recently over a website someone runs removing a listing for an app someone built based on entirely arbitrary criteria. I'm not going to attempt to speak for either wounded party, but I would like to share my own personal definition for what constitutes a "nostr app" in an effort to help clarify what might be an otherwise confusing and opaque purity test.

In this post, I will be committing the "no true Scotsman" fallacy, in which I start with the most liberal definition I can come up with, and gradually refine it until all that is left is the purest, gleamingest, most imaginary and unattainable nostr app imaginable. As I write this, I wonder if anything built yet will actually qualify. In any case, here we go.

# It uses nostr

The lowest bar for what a "nostr app" might be is an app ("application" - i.e. software, not necessarily a native app of any kind) that has some nostr-specific code in it, but which doesn't take any advantage of what makes nostr distinctive as a protocol.

Examples might include a scraper of some kind which fulfills its charter by fetching data from relays (regardless of whether it validates or retains signatures). Another might be a regular web 2.0 app which provides an option to "log in with nostr" by requesting and storing the user's public key.

In either case, the fact that nostr is involved is entirely neutral. A scraper can scrape html, pdfs, jsonl, whatever data source - nostr relays are just another target. Likewise, a user's key in this scenario is treated merely as an opaque identifier, with no appreciation for the super powers it brings along.
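For contrast, here is a rough sketch of the first thing a scraper would have to do if it did care about those super powers: recompute the event id and compare it with the claimed one. It follows the NIP-01 rule that the id is the SHA-256 of the compact JSON array `[0, pubkey, created_at, kind, tags, content]`. The function names and the example event are made up for illustration, and Python's `json.dumps` escaping may differ from NIP-01's rules for unusual control characters, so treat this as a sketch rather than a conformant implementation. Verifying the Schnorr signature itself needs a secp256k1 library and is left out.

```python
import hashlib
import json


def compute_event_id(event: dict) -> str:
    """Return the hex id for an event, following the NIP-01 serialization.

    The id is the SHA-256 of the JSON array
    [0, pubkey, created_at, kind, tags, content], serialized without
    extra whitespace and encoded as UTF-8.
    """
    payload = [
        0,
        event["pubkey"],
        event["created_at"],
        event["kind"],
        event["tags"],
        event["content"],
    ]
    serialized = json.dumps(payload, separators=(",", ":"), ensure_ascii=False)
    return hashlib.sha256(serialized.encode("utf-8")).hexdigest()


def has_consistent_id(event: dict) -> bool:
    """Check that the claimed id matches the recomputed one.

    This is only half of validation: the signature in event["sig"] still
    has to be verified against event["pubkey"], which this sketch skips.
    """
    return event.get("id") == compute_event_id(event)


# Hypothetical event with a made-up pubkey, for illustration only.
example = {
    "pubkey": "ab" * 32,
    "created_at": 1700000000,
    "kind": 1,
    "tags": [],
    "content": "hello nostr",
}
example["id"] = compute_event_id(example)
```

An app that skips even this check is treating relay data the same way it would treat scraped HTML.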

In most cases, this kind of app only exists as a marketing ploy, or less cynically, because it wants to get in on the hype of being a "nostr app", without the developer quite understanding what that means, or having the budget to execute properly on the claim.

# It leverages nostr

Some of you might be wondering, "isn't 'leverage' a synonym for 'use'?" And you would be right, but for one connotative difference. It's possible to "use" something improperly, but by definition leverage gives you a mechanical advantage that you wouldn't otherwise have. This is the second category of "nostr app".

This kind of app gets some benefit out of the nostr protocol and network, but in an entirely selfish fashion. The intention of this kind of app is not to augment the nostr network, but to augment its own UX by borrowing some nifty thing from the protocol without really contributing anything back.

Some examples might include:

- Using nostr signers to encrypt or sign data, and then store that data on a proprietary server.

- Using nostr relays as a kind of low-code backend, but using proprietary event payloads.

- Using nostr event kinds to represent data (why), but not leveraging the trustlessness that buys you.

An application in this category might even communicate to its users via nostr DMs - but this doesn't make it a "nostr app" any more than a website that emails you hot deals on herbal supplements is an "email app". These apps are purely parasitic on the nostr ecosystem.

In the long-term, that's not necessarily a bad thing. Email's ubiquity is self-reinforcing. But in the short term, this kind of "nostr app" can actually do damage to nostr's reputation by over-promising and under-delivering.

# It complements nostr

Next up, we have apps that get some benefit out of nostr as above, but give back by providing a unique value proposition to nostr users as nostr users. This is a bit of a fine distinction, but for me this category is for apps which focus on solving problems that nostr isn't good at solving, leaving the nostr integration in a secondary or supporting role.

One example of this kind of app was Mutiny (RIP), which not only allowed users to sign in with nostr, but also pulled those users' social graphs so that users could send money to people they knew and trusted. Mutiny was doing a great job of leveraging nostr, as well as providing value to users with nostr identities - but it was still primarily a bitcoin wallet, not a "nostr app" in the purest sense.

Other examples are things like Nostr Nests and Zap.stream, whose core value proposition is streaming video or audio content. Both make great use of nostr identities, data formats, and relays, but they're primarily streaming apps. A good litmus test for things like this is: if you got rid of nostr, would it be the same product (even if inferior in certain ways)?

A similar category is infrastructure providers that benefit nostr by their existence (and may in fact be targeted explicitly at nostr users), but do things in a centralized, old-web way; for example: media hosts, DNS registrars, hosting providers, and CDNs.

To be clear here, I'm not casting aspersions (I don't even know what those are, or where to buy them). All the apps mentioned above use nostr to great effect, and are a real benefit to nostr users. But they are not True Scotsmen.

# It embodies nostr

Ok, here we go. This is the crème de la crème, the top du top, the meilleur du meilleur, the bee's knees. The purest, holiest, most chaste category of nostr app out there. The apps which are, indeed, nostr indigitate.

This category of nostr app (see, no quotes this time) can be defined by the converse of the previous category. If nostr was removed from this type of application, would it be impossible to create the same product?

To tease this apart a bit, apps that leverage the technical aspects of nostr are dependent on nostr the *protocol*, while apps that benefit nostr exclusively via network effect are integrated into nostr the *network*. An app that does both things is working in symbiosis with nostr as a whole.

An app that embraces both nostr's protocol and its network becomes an organic extension of every other nostr app out there, multiplying both its competitive moat and its contribution to the ecosystem:

- In contrast to apps that only borrow from nostr on the technical level but continue to operate in their own silos, an application integrated into the nostr network comes pre-packaged with existing users, and is able to provide more value to those users because of other nostr products. On nostr, it's a good thing to advertise your competitors.

- In contrast to apps that only market themselves to nostr users without building out a deep integration on the protocol level, a deeply integrated app becomes an asset to every other nostr app by becoming an organic extension of them through interoperability. This results in increased traffic to the app as other developers and users refer people to it instead of solving their problem on their own. This is the "micro-apps" utopia we've all been waiting for.

Credible exit doesn't matter if there aren't alternative services. Interoperability is pointless if other applications don't offer something your app doesn't. Marketing to nostr users doesn't matter if you don't augment their agency _as nostr users_.

If I had to choose a single NIP that represents the mindset behind this kind of app, it would be NIP 89 A.K.A. "Recommended Application Handlers", which states:

> Nostr's discoverability and transparent event interaction is one of its most interesting/novel mechanics. This NIP provides a simple way for clients to discover applications that handle events of a specific kind to ensure smooth cross-client and cross-kind interactions.

These handlers are the glue that holds nostr apps together. A single event, signed by the developer of an application (or by the application's own account) tells anyone who wants to know 1. what event kinds the app supports, 2. how to link to the app (if it's a client), and 3. which relays the app prefers (if the pubkey also publishes a kind 10002).
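To make that a little more concrete, here's a minimal sketch of what an unsigned handler event might look like, assuming the kind 31990 "handler information" layout from NIP 89 (`d`, `k`, and `web` tags, with `<bech32>` as the placeholder that clients substitute). The helper names, the pubkey, the identifier, and the example.com URL are all hypothetical, and a real event would still need an `id` and `sig` before any relay would accept it.

```python
import json
import time

HANDLER_INFO_KIND = 31990  # NIP 89 handler information event


def build_handler_event(pubkey: str, identifier: str, supported_kinds: list[int],
                        web_url_template: str, metadata: dict) -> dict:
    """Assemble an unsigned NIP 89 handler event (no id or sig yet)."""
    tags = [["d", identifier]]
    # One "k" tag per event kind the app knows how to handle.
    tags += [["k", str(kind)] for kind in supported_kinds]
    # Clients replace <bech32> in the template with an encoded entity.
    tags.append(["web", web_url_template, "nevent"])
    return {
        "pubkey": pubkey,
        "created_at": int(time.time()),
        "kind": HANDLER_INFO_KIND,
        "tags": tags,
        "content": json.dumps(metadata),
    }


def kinds_handled(handler_event: dict) -> list[int]:
    """Read the supported kinds back out of a handler event's "k" tags."""
    return [int(tag[1]) for tag in handler_event["tags"] if tag[0] == "k"]


# Made-up values, purely for illustration.
handler = build_handler_event(
    pubkey="cd" * 32,
    identifier="my-forms-app",
    supported_kinds=[1, 30023],
    web_url_template="https://forms.example.com/e/<bech32>",
    metadata={"name": "Example Forms", "about": "A made-up app"},
)
```

A client that encounters an event kind it can't render could query for handler events with a matching `k` tag and offer the user a link built from the `web` template.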

_As a sidenote, NIP 89 is currently focused more on clients, leaving DVMs, relays, signers, etc somewhat out in the cold. Updating 89 to include tailored listings for each kind of supporting app would be a huge improvement to the protocol. This, plus a good front end for navigating these listings (sorry nostrapp.link, close but no cigar) would obviate the evil centralized websites that curate apps based on arbitrary criteria._

Examples of this kind of app obviously include many kind 1 clients, as well as clients that attempt to bring the benefits of the nostr protocol and network to new use cases - whether long form content, video, image posts, music, emojis, recipes, project management, or any other "content type".

To drill down into one example, let's think for a moment about forms. What's so great about a forms app that is built on nostr? Well,

- There is a [spec](https://github.com/nostr-protocol/nips/pull/1190) for forms and responses, which means that...

- Multiple clients can implement the same data format, allowing for credible exit and user choice, even of...

- Other products not focused on forms, which can still view, respond to, or embed forms, and which can send their users via NIP 89 to a client that does...

- Cryptographically sign forms and responses, which means they are self-authenticating and can be sent to...

- Multiple relays, which reduces the amount of trust necessary to be confident results haven't been deliberately "lost".

Show me a forms product that does all of those things, and isn't built on nostr. You can't, because it doesn't exist. Meanwhile, there are plenty of image hosts with APIs, streaming services, and bitcoin wallets which have basically the same levels of censorship resistance, interoperability, and network effect as if they weren't built on nostr.
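The "multiple relays" point in the list above can be sketched without any networking at all. Assume a client has already fetched form responses from several relays (the relay URLs and event ids below are invented): merging by event id shows which relay is missing which response, so a single relay quietly dropping results becomes visible. The function names are mine, not part of any spec.

```python
def merge_relay_results(results_by_relay: dict[str, list[dict]]) -> dict[str, dict]:
    """Merge events from several relays, keyed by event id.

    Each merged entry remembers which relays returned that event.
    """
    merged: dict[str, dict] = {}
    for relay_url, events in results_by_relay.items():
        for event in events:
            entry = merged.setdefault(event["id"], {"event": event, "seen_on": set()})
            entry["seen_on"].add(relay_url)
    return merged


def missing_from(merged: dict[str, dict], relay_url: str) -> list[str]:
    """List the ids of events that a given relay did not return."""
    return sorted(
        event_id for event_id, entry in merged.items()
        if relay_url not in entry["seen_on"]
    )


# Hypothetical responses fetched from two made-up relays.
responses = {
    "wss://relay-a.example.com": [{"id": "r1"}, {"id": "r2"}, {"id": "r3"}],
    "wss://relay-b.example.com": [{"id": "r1"}, {"id": "r3"}],
}
merged = merge_relay_results(responses)
```

Because each response is signed, the merged set can be trusted regardless of which relay delivered it; the relays only affect availability.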

# It supports nostr

Notice I haven't said anything about whether relays, signers, blossom servers, software libraries, DVMs, and the accumulated addenda of the nostr ecosystem are nostr apps. Well, they are (usually).

This is the category of nostr app that gets none of the credit for doing all of the work. There's no question that they qualify as beautiful nostrcorns, because their value propositions are entirely meaningless outside of the context of nostr. Who needs a signer if you don't have a cryptographic identity you need to protect? DVMs are literally impossible to use without relays. How are you going to find the blossom server that will serve a given hash if you don't know which servers the publishing user has selected to store their content?

In addition to being entirely contextualized by nostr architecture, this type of nostr app is valuable because it does things "the nostr way". By that I mean that they don't simply try to replicate existing internet functionality into a nostr context; instead, they create entirely new ways of putting the basic building blocks of the internet back together.

A great example of this is how Nostr Connect, Nostr Wallet Connect, and DVMs all use relays as brokers, which allows service providers to avoid having to accept incoming network connections. This opens up really interesting possibilities all on its own.

So while I might hesitate to call many of these things "apps", they are certainly "nostr".

# Appendix: it smells like a NINO

So, let's say you've created an app, but when you show it to people they politely smile, nod, and call it a NINO (Nostr In Name Only). What's a hacker to do? Well, here's your handy-dandy guide on how to wash that NINO stench off and Become a Nostr.

Your app might be a NINO if:

- There's no NIP for your data format (or you're abusing NIP 78, 32, etc by inventing a sub-protocol inside an existing event kind)

- There's a NIP, but no one knows about it because it's in a text file on your hard drive (or buried in your project's repository)

- Your NIP imposes an incompatible/centralized/legacy web paradigm onto nostr

- Your NIP relies on trusted third (or first) parties

- There's only one implementation of your NIP (yours)

- Your core value proposition doesn't depend on relays, events, or nostr identities

- One or more relay urls are hard-coded into the source code

- Your app depends on a specific relay implementation to work (*ahem*, relay29)

- You don't validate event signatures

- You don't publish events to relays you don't control

- You don't read events from relays you don't control

- You use legacy web services to solve problems, rather than nostr-native solutions

- You use nostr-native solutions, but you've hardcoded their pubkeys or URLs into your app

- You don't use NIP 89 to discover clients and services

- You haven't published a NIP 89 listing for your app

- You don't leverage your users' web of trust for filtering out spam

- You don't respect your users' mute lists

- You try to "own" your users' data
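Two of the items above (hard-coded relay urls and ignored mute lists) are cheap to fix once you have the user's own lists in hand. Here's a minimal sketch, assuming the kind 10002 relay list format from NIP 65 (`r` tags with an optional `read` or `write` marker) and the public `p` tags of a kind 10000 mute list from NIP 51. The function names and example data are hypothetical, and private (encrypted) mute entries are ignored.

```python
def parse_relay_list(event: dict) -> dict[str, list[str]]:
    """Split a kind 10002 event's "r" tags into read and write relays.

    A tag without a marker counts as both read and write.
    """
    relays: dict[str, list[str]] = {"read": [], "write": []}
    for tag in event.get("tags", []):
        if len(tag) < 2 or tag[0] != "r":
            continue
        url = tag[1]
        marker = tag[2] if len(tag) > 2 else None
        if marker in (None, "read"):
            relays["read"].append(url)
        if marker in (None, "write"):
            relays["write"].append(url)
    return relays


def muted_pubkeys(mute_list_event: dict) -> set[str]:
    """Collect publicly muted pubkeys from a kind 10000 event's "p" tags."""
    return {
        tag[1] for tag in mute_list_event.get("tags", [])
        if len(tag) >= 2 and tag[0] == "p"
    }


def apply_mute_list(events: list[dict], mute_list_event: dict) -> list[dict]:
    """Drop events authored by anyone on the user's mute list."""
    muted = muted_pubkeys(mute_list_event)
    return [event for event in events if event["pubkey"] not in muted]


# Made-up lists and events, for illustration only.
relay_list = {"kind": 10002, "tags": [
    ["r", "wss://relay.example.com"],
    ["r", "wss://inbox.example.com", "read"],
    ["r", "wss://outbox.example.com", "write"],
]}
mute_list = {"kind": 10000, "tags": [["p", "aa" * 32], ["t", "spam"]]}
feed = [
    {"pubkey": "aa" * 32, "content": "buy my coin"},
    {"pubkey": "bb" * 32, "content": "gm"},
]
```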

Now let me just re-iterate - it's ok to be a NINO. We need NINOs, because nostr can't (and shouldn't) tackle every problem. You just need to decide whether your app, as a NINO, is actually contributing to the nostr ecosystem, or whether you're just using buzzwords to whitewash a legacy web software product.

If you're in the former camp, great! If you're in the latter, what are you waiting for? Only you can fix your NINO problem. And there are lots of ways to do this, depending on your own unique situation:

- Drop nostr support if it's not doing anyone any good. If you want to build a normal company and make some money, that's perfectly fine.

- Build out your nostr integration - start taking advantage of webs of trust, self-authenticating data, event handlers, etc.

- Work around the problem. Think you need a special relay feature for your app to work? Guess again. Consider encryption, AUTH, DVMs, or better data formats.

- Think your idea is a good one? Talk to other devs or open a PR to the [nips repo](https://github.com/nostr-protocol/nips). No one can adopt your NIP if they don't know about it.

- Keep going. It can sometimes be hard to distinguish a research project from a NINO. New ideas have to be built out before they can be fully appreciated.

- Listen to advice. Nostr developers are friendly and happy to help. If you're not sure why you're not getting traction, ask!

I sincerely hope this article is useful for all of you out there in NINO land. Maybe this made you feel better about not passing the totally optional nostr app purity test. Or maybe it gave you some actionable next steps towards making a great NINON (Nostr In Not Only Name) app. In either case, GM and PV.

-

@ 50809a53:e091f164

2025-01-20 22:30:01

For starters, anyone who is interested in curating and managing "notes, lists, bookmarks, kind-1 events, or other stuff" should watch this video:

https://youtu.be/XRpHIa-2XCE

Now, assuming you have watched it, I will proceed assuming you are aware of many of the applications that exist for a very similar purpose. I'll break them down further, following a similar trajectory in order of how I came across them, and a bit about my own path on this journey.

We'll start way back in the early 2000s, before Bitcoin existed. We had https://zim-wiki.org/

It is tried and true, and to this day stands to present an option for people looking for a very simple solution to a potentially complex problem. Zim-Wiki works. But it is limited.

Let's step into the realm of proprietary. Obsidian, Joplin, and LogSeq. The first two are entirely cloud-operative applications, with more of a focus on the true benefit of being a paid service. I will assume anyone reading this is capable of exploring the marketing of these applications, or trying their freemium product, to get a feeling for what they are capable of.

I bring up Obsidian because it is very crucial to understand the market placement of publication. We know social media handles the 'hosting' problem of publishing notes "and other stuff" by harvesting data and making deals with advertisers. But- what Obsidian has evolved to offer is a full service known as 'publish'. This means users can stay in the proprietary pipeline, "from thought to web," all for $8/mo.

See: https://obsidian.md/publish

THIS IS NOSTR'S PRIMARY COMPETITION. WE ARE HERE TO DISRUPT THIS MARKET, WITH NOTES AND OTHER STUFF. WITH RELAYS. WITH THE PROTOCOL.

Now, on to Joplin. I have never used this, because I opted to study the FOSS market and stayed free of any reliance on a paid solution. Many people like Joplin, and I gather the reason is that it has allowed itself to be flexible, and the options that integrate with Joplin seem to provide good solutions for users who need that functionality. I see Nostr users recommending Joplin, so I felt it was worthwhile to mention as a case-study option. I myself need to investigate it more, but have found comfort in other solutions.

LogSeq - This is my "other solutions." It seems to be trapped in its proprietary web of funding and constraint. I use it because it turns my desktop into a power-house of note archival. But by using it- I AM TRAPPED TOO. This means LogSeq is by no means a working solution for Nostr users who want a long-term archival option.

But the trap is not a cage. It's merely a box. My notes can be exported to other applications with graphing and node-based information structure. Specifically, I can export these notes to:

- Text

- OPML

- HTML

- and, PNG, for whatever that is worth.

Let's try out the PNG option, just for fun. Here's an exported PNG of my "Games on Nostr" list, which has long been abandoned. I once decided to poll some CornyChat users to see what games they enjoyed- and I documented them in a LogSeq page for my own future reference. You can see it here:

https://i.postimg.cc/qMBPDTwr/image.png

This is a very simple example of how a single "page" or "list" in LogSeq can be multipurpose. It is a small list, with multiple "features" or variables at play. First, I have listed out a variety of complex games that might make sense with "multiplayer" identification that relies on our npubs or nip-05 addresses to aggregate user data. We can ALL imagine playing games like Tetris, Snake, or Catan together with our Nostr identities. But of course we are a long way from breaking into the video game market.

On a mostly irrelevant sidenote- you might notice in my example list, that I seem to be excited about a game called Dot.Hack. I discovered this small game on Itch.io and reached out to the developer on Twitter, in an attempt to purple-pill him, but moreso to inquire about his game. Unfortunately there was no response, even without mention of Nostr. Nonetheless, we pioneer on. You can try the game here: https://propuke.itch.io/planethack

So instead let's focus on the structure of "one working list." The middle section of this list is where I polled users, and simply listed out their suggestions. Of course we discussed these before I documented, so it is not a direct result of a poll, but actually a working interaction of poll results! This is crucial because it separates my list from the aggregated data, and implies its relevance/importance.

The final section of this ONE list- is the beginnings of where I conceptually connect nostr with video game functionality. You can look at this as the beginning of a new graph, which would be "Video Game Operability With Nostr".

These three sections make up one concept within my brain. It exists in other users' brains too- but of course they are not as committed to the concept as myself- the one managing the communal discussion.

With LogSeq- I can grow and expand these lists. These lists can become graphs. Those graphs can become entire catalogues of information that can be shared across the web.

I can replicate this system with bookmarks, ideas, application design, shopping lists, LLM prompting, video/music playlists, friend lists, RELAY lists, the LIST goes ON forever!

So where does that lead us? I think it leads us to kind-1 events. We don't have much in the way of "kind-1 event managers" because most developers would agree that "storing kind-1 events locally" is.. at the very least, not so important. But it could be! If only a superapp existed that could interface seamlessly with nostr, yada yada.. we've heard it all before. We aren't getting a superapp before we have microapps. Basically this means frameworking the protocol before worrying about the all-in-one solution.
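To show how small a "kind-1 event manager" microapp could start out, here is a rough sketch that stores kind-1 events locally in SQLite and searches them by text. Everything here is an assumption for illustration: the table layout, the function names, and the example notes are made up, and the sketch trusts that events were already validated before being saved.

```python
import json
import sqlite3


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) a local store for kind-1 notes."""
    conn = sqlite3.connect(path)
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS notes (
            id TEXT PRIMARY KEY,
            pubkey TEXT NOT NULL,
            created_at INTEGER NOT NULL,
            content TEXT NOT NULL,
            raw TEXT NOT NULL
        )
        """
    )
    return conn


def save_note(conn: sqlite3.Connection, event: dict) -> bool:
    """Store a kind-1 event; return True only if it was newly inserted."""
    if event.get("kind") != 1:
        return False
    cursor = conn.execute(
        "INSERT OR IGNORE INTO notes (id, pubkey, created_at, content, raw) "
        "VALUES (?, ?, ?, ?, ?)",
        (event["id"], event["pubkey"], event["created_at"],
         event["content"], json.dumps(event)),
    )
    conn.commit()
    return cursor.rowcount == 1


def search_notes(conn: sqlite3.Connection, term: str) -> list[str]:
    """Return note contents containing the term, newest first."""
    rows = conn.execute(
        "SELECT content FROM notes WHERE content LIKE ? ORDER BY created_at DESC",
        (f"%{term}%",),
    )
    return [row[0] for row in rows]


# Made-up notes, for illustration only.
store = open_store()
note_a = {"id": "n1", "pubkey": "ab" * 32, "created_at": 100, "kind": 1,
          "tags": [], "content": "Catan on nostr when?"}
note_b = {"id": "n2", "pubkey": "ab" * 32, "created_at": 200, "kind": 1,
          "tags": [], "content": "Tetris and Catan night"}
```

Keeping the raw event JSON alongside the indexed columns means the notes stay exportable and re-publishable, which is the opposite of the trap described earlier.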

So this article will step away from the deep desire for a Nostr-enabled, Rust-built, FOSS, non-commercialized FREEDOM APP, that will exist one day, we hope.

Instead, we will focus on simple attempts of the past. I encourage others to chime in with their experience.

Zim-Wiki is foundational. The user constructs pages, and can then develop them into books.

LogSeq has the right idea- but is constrained in too many ways to prove to be a working solution at this time. However, it is very much worth experimenting with, and investigating, and modelling ourselves after.

https://workflowy.com/ is next on our list. This is great for users who think LogSeq is too complex. They "just want simple notes." Get a taste with WorkFlowy. You will understand why LogSeq is powerful if you see value in WF.

I am writing this article in favor of a redesign of LogSeq to be compatible with Nostr. I have been drafting the idea since before Nostr existed- and with Nostr I truly believe it will be possible. So, I will stop to thank everyone who has made Nostr what it is today. I wouldn't be publishing this without you!

One app I need to investigate more is Zettlr. I will mention it here for others to either discuss or investigate, as it is also mentioned some in the video I opened with. https://www.zettlr.com/

On my path to finding Nostr, before its inception, was a service called Deta.Space. This was an interesting project, not entirely unique or original, but completely fresh and very beginner-friendly. DETA WAS AN AWESOME CLOUD OS. And we could still design a form of Nostr ecosystem that is managed in this way. But, what we have now is excellent, and going forward I only see "additional" or supplemental.

Along the timeline, Deta sunsetted their Space service and launched https://deta.surf/

You might notice they advertise that "This is the future of bookmarks."

I have to wonder if perhaps I got through to them that bookmarking was what their ecosystem could empower. While I have not tried Surf, it looks interesting, but does not seem to address what I found most valuable about Deta.Space: https://webcrate.app/

WebCrate was an early bookmarking client for Deta.Space which was likely their most popular application. What was amazing about WebCrate was that it delivered "simple bookmarking." At one point I decided to migrate my bookmarks from other apps, like Pocket and WorkFlowy, into WebCrate.

This ended up being an awful decision, because WebCrate is no longer being developed. However, to much credit of Deta.Space, my WebCrate instance is still running and completely functional. I have since migrated what I deem important into a local LogSeq graph, so my bookmarks are safe. But, the development of WebCrate is not.

WebCrate did not provide a working directory of crates. All crates were contained within a single-level directory. Essentially there were no layers. Just collections of links. This isn't enough for any user to effectively manage their catalogue of notes. With some pressure, I did encourage the German developer to flesh out a form of tagging, which did alleviate the problem to some extent. But as we see with Surf, they have pioneered in another direction.

That brings us back to Nostr. Where can we look for the best solution? There simply isn't one yet. But, we can look at some other options for inspiration.

HedgeDoc: https://hedgedoc.org/

I am eager for someone to fork HedgeDoc and employ Nostr sign-in. This is a small step toward managing information together within the Nostr ecosystem. I will attempt this myself eventually, if no one else does, but I am prioritizing my development in this way:

1. A nostr client that allows the cataloguing and management of relays locally.

2. A LogSeq alternative with Nostr interoperability.

3. HedgeDoc + Nostr is #3 on my list, despite being the easiest option.

Check out HedgeDoc 2.0 if you have any interest in a cooperative Markdown experience on Nostr: https://docs.hedgedoc.dev/

Now, this article should catch up all of my dearest followers, and idols, to where I stand with "bookmarking, note-taking, list-making, kind-1 event management, frameworking, and so on..."

Where it leads us to, is what's possible. Let's take a look at what's possible, once we forego ALL OF THE PROPRIETARY WEB'S BEST OPTIONS:

https://denizaydemir.org/

https://denizaydemir.org/graph/how-logseq-should-build-a-world-knowledge-graph/

https://subconscious.network/

Nostr is even inspired by much of the history that has gone into information management systems. nostr:npub1jlrs53pkdfjnts29kveljul2sm0actt6n8dxrrzqcersttvcuv3qdjynqn I know looks up to Gordon Brander, just as I do. You can read his articles here: https://substack.com/@gordonbrander and they are very much worth reading! Also, I could note that the original version of Highlighter by nostr:npub1l2vyh47mk2p0qlsku7hg0vn29faehy9hy34ygaclpn66ukqp3afqutajft was also inspired partially by WorkFlowy.

About a year ago, I was mesmerized coming across SubText and thinking I had finally found the answer Nostr might even be looking for. But, for now I will just suggest that others read the Readme.md on the SubText GitHub, as well as articles by Brander.

Good luck everyone. I am here to work with ANYONE who is interested in this type of solution on Nostr.

My first order of business in this space is to spearhead a community of npubs who share this goal. Everyone who is interested in note-taking or list-making or bookmarking is welcome to join. I have created an INVITE-ONLY relay for this very purpose, and anyone is welcome to reach out if they wish to be added to the whitelist. It should be freely readable in the near future, if it is not already, but for now will remain a closed-to-post community to preemptively mitigate attack or spam. Please reach out to me if you wish to join the relay. https://logstr.mycelium.social/

With this article, I hope people will investigate and explore the options available. We have lots of ground to cover, but all of the right resources and manpower to do so. Godspeed, Nostr.

#Nostr #Notes #OtherStuff #LogSec #Joplin #Obsidian

-

@ 7bdef7be:784a5805

2025-01-13 22:36:47

The following script tries, using [nak](https://github.com/fiatjaf/nak), to find the last ten people who have followed a `target_pubkey`, sorted by the most recent. It's possible to shorten `search_timerange` to speed up the search.

```

#!/usr/bin/env fish

# Target pubkey we're looking for in the tags

set target_pubkey "6e468422dfb74a5738702a8823b9b28168abab8655faacb6853cd0ee15deee93"

set current_time (date +%s)

set search_timerange (math $current_time - 600) # 600 seconds = 10 minutes; use 86400 for 24 hours

set pubkeys (nak req --kind 3 -s $search_timerange wss://relay.damus.io/ wss://nos.lol/ 2>/dev/null | \

jq -r --arg target "$target_pubkey" '

select(. != null and type == "object" and has("tags")) |

select(.tags[] | select(.[0] == "p" and .[1] == $target)) |

.pubkey

' | sort -u)

if test -z "$pubkeys"

exit 1

end

set all_events ""

set extended_search_timerange (math $current_time - 31536000) # One year

for pubkey in $pubkeys

echo "Checking $pubkey"

set events (nak req --author $pubkey -l 5 -k 3 -s $extended_search_timerange wss://relay.damus.io wss://nos.lol 2>/dev/null | \

jq -c --arg target "$target_pubkey" '

select(. != null and type == "object" and has("tags")) |

select(.tags[][] == $target)

' 2>/dev/null)

set count (echo "$events" | jq -s 'length')

if test "$count" -eq 1

set all_events $all_events $events

end

end

if test -n "$all_events"

echo -e "Last people following $target_pubkey:"

echo -e ""

set sorted_events (printf "%s\n" $all_events | jq -r -s '

unique_by(.id) |

sort_by(-.created_at) |

.[] | @json

')

for event in $sorted_events

set npub (echo $event | jq -r '.pubkey' | nak encode npub)

set created_at (echo $event | jq -r '.created_at')

if test (uname) = "Darwin"

set follow_date (date -r "$created_at" "+%Y-%m-%d %H:%M")

else

set follow_date (date -d @"$created_at" "+%Y-%m-%d %H:%M")

end

echo "$follow_date - $npub"

end

end

```
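The jq filters in the script boil down to one check: does a kind 3 (contact list) event carry a `p` tag whose second element is the target pubkey? Here is a minimal Python sketch of that check, assuming the events are already parsed into dicts. The function names and the sample events are made up for illustration and are not part of nak.

```python
from typing import Any


def follows_target(event: dict[str, Any], target_pubkey: str) -> bool:
    """Return True if a kind 3 event lists target_pubkey in a "p" tag."""
    if event.get("kind") != 3:
        return False
    return any(
        len(tag) >= 2 and tag[0] == "p" and tag[1] == target_pubkey
        for tag in event.get("tags", [])
    )


def latest_followers(events: list[dict[str, Any]], target_pubkey: str) -> list[str]:
    """Pubkeys of matching events, newest first, one entry per author."""
    matching = [e for e in events if follows_target(e, target_pubkey)]
    matching.sort(key=lambda e: e["created_at"], reverse=True)
    seen: set[str] = set()
    ordered: list[str] = []
    for e in matching:
        if e["pubkey"] not in seen:
            seen.add(e["pubkey"])
            ordered.append(e["pubkey"])
    return ordered


# Hypothetical sample data (shortened keys, not real events).
TARGET = "target"
EVENTS = [
    {"kind": 3, "pubkey": "alice", "created_at": 100, "tags": [["p", "target"]]},
    {"kind": 3, "pubkey": "bob", "created_at": 200, "tags": [["p", "someone"]]},
    {"kind": 3, "pubkey": "carol", "created_at": 300, "tags": [["p", "target"], ["p", "x"]]},
    {"kind": 1, "pubkey": "dave", "created_at": 400, "tags": [["p", "target"]]},
]

print(latest_followers(EVENTS, TARGET))
```

Matching on the tag name as well as the value avoids counting events that merely mention the pubkey in some other tag.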

-

@ 0d97beae:c5274a14

2025-01-11 16:52:08

This article hopes to complement the video by Lyn Alden on YouTube: https://www.youtube.com/watch?v=jk_HWmmwiAs

## The reason why we have broken money

Before the invention of key technologies such as the printing press and electronic communications, even ones as early as Morse code transmitters, gold had won the competition for best medium of money around the world.

In fact, it was not just gold by itself that became money; rulers and world leaders developed coins in order to help the economy grow. Gold nuggets were not as easy to transact with as coins with specific imprints and denominated sizes.

However, these modern technologies created massive efficiencies that allowed us to communicate and perform services more efficiently and much faster, yet the medium of money could not benefit from these advancements. Gold was heavy, slow and expensive to move globally, even though requesting and performing services globally did not have this limitation anymore.

Banks took initiative and created derivatives of gold: paper and electronic money. These new currencies allowed the economy to continue to grow and evolve, but it was not without its dark side. Today, no currency is denominated in gold at all; money is backed by nothing, and its inherent value, the paper it is printed on, is worthless too.

Banks and governments eventually transitioned from a money derivative to a system of debt that could be co-opted and controlled for political and personal reasons. Our money today is broken and is the cause of more expensive, poorer quality goods in the economy, a larger and ever growing wealth gap, and many of the follow-on problems that have come with it.

## Bitcoin overcomes the "transfer of hard money" problem

Just like gold coins were created by man, Bitcoin too is a technology created by man. Bitcoin, however, is a much more profound invention, possibly more of a discovery than an invention in fact. Bitcoin has proven to be unbreakable, incorruptible and has upheld its ability to keep its units scarce, inalienable and counterfeit proof through the nature of its own design.

Since Bitcoin is a digital technology, it can be transferred across international borders almost as quickly as information itself. It therefore severely reduces the need for a derivative to be used to represent money to facilitate digital trade. This means that as the currency we use today continues to fare poorly for many people, bitcoin will continue to stand out as hard money that just so happens to work as well, functionally, alongside it.

Bitcoin will also always be available to anyone who wishes to earn it directly; even China is unable to restrict its citizens from accessing it. The dollar has traditionally become the currency for people who discover that their local currency is unsustainable. Even when the dollar has become illegal to use, it is simply used privately and unofficially. However, because bitcoin does not require you to trade it at a bank in order to use it across borders and across the web, Bitcoin will continue to be a viable escape hatch until we one day hit some critical mass where the world has simply adopted Bitcoin globally and everyone else must adopt it to survive.

Bitcoin has not yet proven that it can support the world at scale. However it can only be tested through real adoption, and just as gold coins were developed to help gold scale, tools will be developed to help overcome problems as they arise; ideally without the need for another derivative, but if necessary, hopefully with one that is more neutral and less corruptible than the derivatives used to represent gold.

## Bitcoin blurs the line between commodity and technology

Bitcoin is a technology: it is a tool that requires human involvement to function. Surprisingly, however, it does not allow for any concentration of power. Anyone can help to facilitate Bitcoin's operations, but no one can take control of its behaviour, its reach, or its prioritisation, as it operates autonomously based on a pre-determined, neutral set of rules.

At the same time, its built-in incentive mechanism ensures that people do not have to operate bitcoin out of the goodness of their hearts. Even though the system cannot be co-opted holistically, it will not stop operating while there are people motivated to trade their time and resources to keep it running and earn from others' transaction fees. Although it requires humans to operate it, it remains both neutral and sustainable.

Never before have we developed or discovered a technology that could not be co-opted and used by one person or faction against another. Due to this nature, Bitcoin's units are often described as a commodity; they cannot be usurped or virtually cloned, and they cannot be affected by political biases.

## The dangers of derivatives

A derivative is something created, designed or developed to represent another thing in order to solve a particular complication or problem. For example, paper and electronic money were once derivatives of gold.

In the case of Bitcoin, if you cannot link your units of bitcoin to an "address" that you personally hold a cryptographically secure key to, then you very likely have a derivative of bitcoin, not bitcoin itself. If you buy bitcoin on an online exchange and do not withdraw the bitcoin to a wallet that you control, then you legally own an electronic derivative of bitcoin.

Bitcoin is a new technology. It will have a learning curve and it will take time for humanity to learn how to comprehend, authenticate and take control of bitcoin collectively. Having said that, many people all over the world are already using and relying on Bitcoin natively. Many others will first need to find a need or desire for a neutral money like bitcoin, and to have been burned by derivatives of it, before they start to understand the difference between the two. Eventually, it will become an essential part of what we regard as common sense.

## Learn for yourself

If you wish to learn more about how to handle bitcoin and avoid derivatives, you can start by searching online for tutorials about "Bitcoin self custody".

There are many options available, some more practical for you, and some more practical for others. Don't spend too much time trying to find the perfect solution; practice and learn. You may make mistakes along the way, so be careful not to experiment with large amounts of your bitcoin as you explore new ideas and technologies. This is similar to learning anything, like riding a bicycle; you are sure to fall a few times and scuff the frame, so don't buy a high performance racing bike while you're still learning to balance.

-

@ dd664d5e:5633d319

2025-01-09 21:39:15

# Instructions

1. Place 2 medium-sized, boiled potatoes and a handful of sliced leeks in a pot.

2. Fill the pot with water or vegetable broth, to cover the potatoes twice over.

3. Add a splash of white wine, if you like, and some bouillon powder, if you went with water instead of broth.

4. Bring the soup to a boil and then simmer for 15 minutes.

5. Puree the soup, in the pot, with a hand mixer. It shouldn't be completely smooth, when you're done, but rather have small bits and pieces of the veggies floating around.

6. Bring the soup to a boil, again, and stir in one container (200-250 mL) of heavy cream.

7. Thicken the soup, as needed, and then simmer for 5 more minutes.

8. Garnish with croutons and veggies (here I used sliced green onions and radishes) and serve.

Guten Appetit!

-

@ 3f770d65:7a745b24

2025-01-05 18:56:33

New Year’s resolutions often feel boring and repetitive. Most revolve around getting in shape, eating healthier, or giving up alcohol. While the idea is interesting—using the start of a new calendar year as a catalyst for change—it also seems unnecessary. Why wait for a specific date to make a change? If you want to improve something in your life, you can just do it. You don’t need an excuse.

That’s why I’ve never been drawn to the idea of making a list of resolutions. If I wanted a change, I’d make it happen, without worrying about the calendar. At least, that’s how I felt until now—when, for once, the timing actually gave me a real reason to embrace the idea of New Year’s resolutions.

Enter [Olas](https://olas.app).

If you're a visual creator, you've likely experienced the relentless grind of building a following on platforms like Instagram—endless doomscrolling, ever-changing algorithms, and the constant pressure to stay relevant. But what if there was a better way? Olas is a Nostr-powered alternative to Instagram that prioritizes community, creativity, and value-for-value exchanges. It's a game changer.

Instagram’s failings are well-known. Its algorithm often dictates whose content gets seen, leaving creators frustrated and powerless. Monetization hurdles further alienate creators who are forced to meet arbitrary follower thresholds before earning anything. Additionally, the platform’s design fosters endless comparisons and exposure to negativity, which can take a significant toll on mental health.

Instagram’s algorithms are notorious for keeping users hooked, often at the cost of their mental health. I've spoken about this extensively, most recently at Nostr Valley, explaining how legacy social media is bad for you. You might find yourself scrolling through content that leaves you feeling anxious or drained. Olas takes a fresh approach, replacing "doomscrolling" with "bloomscrolling." This is a common theme across the Nostr ecosystem. The lack of addictive rage algorithms allows the focus to shift to uplifting, positive content that inspires rather than exhausts.

Monetization is another area where Olas will set itself apart. On Instagram, creators face arbitrary barriers to earning—needing thousands of followers and adhering to restrictive platform rules. Olas eliminates these hurdles by leveraging the Nostr protocol, enabling creators to earn directly through value-for-value exchanges. Fans can support their favorite artists instantly, with no delays or approvals required. The plan is to enable a brand new Olas account that can get paid instantly, with zero followers - that's wild.

Olas addresses these issues head-on. Operating on the open Nostr protocol, it removes centralized control over one's content’s reach or one's ability to monetize. With transparent, configurable algorithms, and a community that thrives on mutual support, Olas creates an environment where creators can grow and succeed without unnecessary barriers.

Join me on my New Year's resolution. Join me on Olas and take part in the [#Olas365](https://olas.app/search/olas365) challenge! It’s a simple yet exciting way to share your content. The challenge is straightforward: post at least one photo per day on Olas (though you’re welcome to share more!).

[Download on iOS](https://testflight.apple.com/join/2FMVX2yM).

[Download on Android](https://github.com/pablof7z/olas/releases/) or download via Zapstore.

Let's make waves together.

-

@ e6817453:b0ac3c39

2025-01-05 14:29:17

## The Rise of Graph RAGs and the Quest for Data Quality

As we enter a new year, it’s impossible to ignore the boom of retrieval-augmented generation (RAG) systems, particularly those leveraging graph-based approaches. The previous year saw a surge in advancements and discussions about Graph RAGs, driven by their potential to enhance large language models (LLMs), reduce hallucinations, and deliver more reliable outputs. Let’s dive into the trends, challenges, and strategies for making the most of Graph RAGs in artificial intelligence.

## Booming Interest in Graph RAGs

Graph RAGs have dominated the conversation in AI circles. With new research papers and innovations emerging weekly, it’s clear that this approach is reshaping the landscape. These systems, especially those developed by tech giants like Microsoft, demonstrate how graphs can:

* **Enhance LLM Outputs:** By grounding responses in structured knowledge, graphs significantly reduce hallucinations.

* **Support Complex Queries:** Graphs excel at managing linked and connected data, making them ideal for intricate problem-solving.

Conferences on linked and connected data have increasingly focused on Graph RAGs, underscoring their central role in modern AI systems. However, the excitement around this technology has brought critical questions to the forefront: How do we ensure the quality of the graphs we’re building, and are they genuinely aligned with our needs?

## Data Quality: The Foundation of Effective Graphs

A high-quality graph is the backbone of any successful RAG system. Constructing these graphs from unstructured data requires attention to detail and rigorous processes. Here’s why:

* **Richness of Entities:** Effective retrieval depends on graphs populated with rich, detailed entities.

* **Freedom from Hallucinations:** Poorly constructed graphs amplify inaccuracies rather than mitigating them.

Without robust data quality, even the most sophisticated Graph RAGs become ineffective. As a result, the focus must shift to refining the graph construction process. Improving data strategy and ensuring meticulous data preparation is essential to unlock the full potential of Graph RAGs.

## Hybrid Graph RAGs and Variations

While standard Graph RAGs are already transformative, hybrid models offer additional flexibility and power. Hybrid RAGs combine structured graph data with other retrieval mechanisms, creating systems that:

* Handle diverse data sources with ease.

* Offer improved adaptability to complex queries.

Exploring these variations can open new avenues for AI systems, particularly in domains requiring structured and unstructured data processing.
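As a rough illustration of the hybrid idea, the sketch below merges results from a tiny in-memory graph lookup with a naive keyword search over text passages. Everything in it (the `GRAPH` triples, `DOCS`, and the function names) is hypothetical toy data, and the word-overlap score stands in for whatever vector or full-text retriever a real system would use.

```python
# Toy knowledge graph as (subject, predicate, object) triples: hypothetical data.
GRAPH = [
    ("Protégé", "is_a", "ontology editor"),
    ("SHACL", "validates", "RDF graphs"),
    ("OWL", "describes", "ontologies"),
]

# Toy unstructured corpus: hypothetical data.
DOCS = [
    "SHACL shapes describe constraints that RDF graphs must satisfy.",
    "Graph RAG grounds LLM answers in structured knowledge.",
]


def graph_retrieve(query: str) -> list[str]:
    """Return triples whose subject or object is mentioned in the query."""
    q = query.lower()
    return [
        f"{s} {p} {o}"
        for s, p, o in GRAPH
        if s.lower() in q or o.lower() in q
    ]


def keyword_retrieve(query: str, top_k: int = 1) -> list[str]:
    """Rank documents by the number of words shared with the query."""
    q_words = set(query.lower().split())
    scored = [(len(q_words & set(d.lower().split())), d) for d in DOCS]
    scored = [item for item in scored if item[0] > 0]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [d for _, d in scored[:top_k]]


def hybrid_retrieve(query: str) -> list[str]:
    """Graph facts first, then text passages, without duplicates."""
    results: list[str] = []
    for item in graph_retrieve(query) + keyword_retrieve(query):
        if item not in results:
            results.append(item)
    return results


context = hybrid_retrieve("what does shacl validate in rdf graphs")
print(context)
```

Putting graph facts ahead of free-text passages is one possible ordering; a production system would more likely score and rerank both sources together.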

## Ontology: The Key to Graph Construction Quality

Ontology — defining how concepts relate within a knowledge domain — is critical for building effective graphs. While this might sound abstract, it’s a well-established field blending philosophy, engineering, and art. Ontology engineering provides the framework for:

* **Defining Relationships:** Clarifying how concepts connect within a domain.

* **Validating Graph Structures:** Ensuring constructed graphs are logically sound and align with domain-specific realities.

Traditionally, ontologists — experts in this discipline — have been integral to large enterprises and research teams. However, not every team has access to dedicated ontologists, leading to a significant challenge: How can teams without such expertise ensure the quality of their graphs?

## How to Build Ontology Expertise in a Startup Team

For startups and smaller teams, developing ontology expertise may seem daunting, but it is achievable with the right approach:

1. **Assign a Knowledge Champion:** Identify a team member with a strong analytical mindset and give them time and resources to learn ontology engineering.

2. **Provide Training:** Invest in courses, workshops, or certifications in knowledge graph and ontology creation.

3. **Leverage Partnerships:** Collaborate with academic institutions, domain experts, or consultants to build initial frameworks.

4. **Utilize Tools:** Introduce ontology development tools and standards like Protégé, OWL, or SHACL to simplify the creation and validation process.

5. **Iterate with Feedback:** Continuously refine ontologies through collaboration with domain experts and iterative testing.

So, it is not always affordable for a startup to have a dedicated ontologist or knowledge engineer on the team, but you could involve consultants or build barefoot experts.

You could read about barefoot experts in my article :

Even startups can achieve robust and domain-specific ontology frameworks by fostering in-house expertise.

## How to Find or Create Ontologies

For teams venturing into Graph RAGs, several strategies can help address the ontology gap:

1. **Leverage Existing Ontologies:** Many industries and domains already have open ontologies. For instance:

* **Public Knowledge Graphs:** Resources like Wikipedia’s graph offer a wealth of structured knowledge.

* **Industry Standards:** Enterprises such as Siemens have invested in creating and sharing ontologies specific to their fields.

* **Basic Formal Ontology (BFO):** A widely used upper-level ontology that enterprises can build on when defining domain-specific processes and structures.

2. **Build In-House Expertise:** If budgets allow, consider hiring knowledge engineers or providing team members with the resources and time to develop expertise in ontology creation.

3. **Utilize LLMs for Ontology Construction:** Interestingly, LLMs themselves can act as a starting point for ontology development:

* **Prompt-Based Extraction:** LLMs can generate draft ontologies by leveraging their extensive training on graph data.

* **Domain Expert Refinement:** Combine LLM-generated structures with insights from domain experts to create tailored ontologies.

## Parallel Ontology and Graph Extraction

An emerging approach involves extracting ontologies and graphs in parallel. While this can streamline the process, it presents challenges such as:

* **Detecting Hallucinations:** Differentiating between genuine insights and AI-generated inaccuracies.

* **Ensuring Completeness:** Ensuring no critical concepts are overlooked during extraction.

Teams must carefully validate outputs to ensure reliability and accuracy when employing this parallel method.

## LLMs as Ontologists

While traditionally dependent on human expertise, ontology creation is increasingly supported by LLMs. These models, trained on vast amounts of data, possess inherent knowledge of many open ontologies and taxonomies. Teams can use LLMs to:

* **Generate Skeleton Ontologies:** Prompt LLMs with domain-specific information to draft initial ontology structures.

* **Validate and Refine Ontologies:** Collaborate with domain experts to refine these drafts, ensuring accuracy and relevance.

However, for validation and graph construction, formal tools such as OWL, SHACL, and RDF should be prioritized over LLMs to minimize hallucinations and ensure robust outcomes.
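To make the validation step concrete, here is a minimal sketch in plain Python of the kind of check that SHACL shapes or OWL domain/range axioms formalize: every extracted triple must use a known predicate, and its subject and object must have the types the ontology allows. The `ONTOLOGY`, `ENTITY_TYPES`, and `validate_triples` names and all data are hypothetical. A real pipeline would express these constraints in SHACL or OWL and run a dedicated validator instead.

```python
# Hypothetical mini-ontology: predicate -> (allowed subject type, allowed object type).
ONTOLOGY = {
    "works_for": ("Person", "Company"),
    "located_in": ("Company", "City"),
}

# Hypothetical entity typing, e.g. produced by an extraction step.
ENTITY_TYPES = {
    "Ada": "Person",
    "Acme": "Company",
    "Berlin": "City",
}


def validate_triples(triples):
    """Split triples into (valid, errors) using domain/range constraints."""
    valid, errors = [], []
    for subj, pred, obj in triples:
        if pred not in ONTOLOGY:
            errors.append((subj, pred, obj, "unknown predicate"))
            continue
        domain, range_ = ONTOLOGY[pred]
        if ENTITY_TYPES.get(subj) != domain:
            errors.append((subj, pred, obj, f"subject is not a {domain}"))
        elif ENTITY_TYPES.get(obj) != range_:
            errors.append((subj, pred, obj, f"object is not a {range_}"))
        else:
            valid.append((subj, pred, obj))
    return valid, errors


# Triples as an LLM extraction step might return them (made-up examples).
extracted = [
    ("Ada", "works_for", "Acme"),      # consistent with the ontology
    ("Acme", "located_in", "Berlin"),  # consistent with the ontology
    ("Berlin", "works_for", "Ada"),    # wrong types: likely a hallucination
    ("Ada", "married_to", "Acme"),     # predicate not in the ontology
]

valid, errors = validate_triples(extracted)
for err in errors:
    print("rejected:", err)
```

The point of the sketch is that rejection is rule-based and deterministic, so the LLM proposes triples but does not get to approve them.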

## Final Thoughts: Unlocking the Power of Graph RAGs

The rise of Graph RAGs underscores a simple but crucial correlation: improving graph construction and data quality directly enhances retrieval systems. To truly harness this power, teams must invest in understanding ontologies, building quality graphs, and leveraging both human expertise and advanced AI tools.

As we move forward, the interplay between Graph RAGs and ontology engineering will continue to shape the future of AI. Whether through adopting existing frameworks or exploring innovative uses of LLMs, the path to success lies in a deep commitment to data quality and domain understanding.

Have you explored these technologies in your work? Share your experiences and insights — and stay tuned for more discussions on ontology extraction and its role in AI advancements. Cheers to a year of innovation!

-

@ a4a6b584:1e05b95b

2025-01-02 18:13:31

## The Four-Layer Framework

### Layer 1: Zoom Out

Start by looking at the big picture. What’s the subject about, and why does it matter? Focus on the overarching ideas and how they fit together. Think of this as the 30,000-foot view—it’s about understanding the "why" and "how" before diving into the "what."

**Example**: If you’re learning programming, start by understanding that it’s about giving logical instructions to computers to solve problems.

- **Tip**: Keep it simple. Summarize the subject in one or two sentences and avoid getting bogged down in specifics at this stage.

_Once you have the big picture in mind, it’s time to start breaking it down._

---

### Layer 2: Categorize and Connect

Now it’s time to break the subject into categories—like creating branches on a tree. This helps your brain organize information logically and see connections between ideas.

**Example**: Studying biology? Group concepts into categories like cells, genetics, and ecosystems.

- **Tip**: Use headings or labels to group similar ideas. Jot these down in a list or simple diagram to keep track.

_With your categories in place, you’re ready to dive into the details that bring them to life._

---

### Layer 3: Master the Details

Once you’ve mapped out the main categories, you’re ready to dive deeper. This is where you learn the nuts and bolts—like formulas, specific techniques, or key terminology. These details make the subject practical and actionable.

**Example**: In programming, this might mean learning the syntax for loops, conditionals, or functions in your chosen language.

- **Tip**: Focus on details that clarify the categories from Layer 2. Skip anything that doesn’t add to your understanding.

_Now that you’ve mastered the essentials, you can expand your knowledge to include extra material._

---

### Layer 4: Expand Your Horizons

Finally, move on to the extra material—less critical facts, trivia, or edge cases. While these aren’t essential to mastering the subject, they can be useful in specialized discussions or exams.

**Example**: Learn about rare programming quirks or historical trivia about a language’s development.

- **Tip**: Spend minimal time here unless it’s necessary for your goals. It’s okay to skim if you’re short on time.

---

## Pro Tips for Better Learning

### 1. Use Active Recall and Spaced Repetition

Test yourself without looking at notes. Review what you’ve learned at increasing intervals—like after a day, a week, and a month. This strengthens memory by forcing your brain to actively retrieve information.
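The increasing intervals can be turned into a concrete review calendar. Here is a minimal sketch, assuming the day, week, and month spacing mentioned above (taken here as 1, 7, and 30 days). The function name and the fixed intervals are illustrative choices, not a prescribed algorithm.

```python
from datetime import date, timedelta

# Assumed spacing: one day, one week, one month (approximated as 30 days).
REVIEW_INTERVALS_DAYS = (1, 7, 30)


def review_dates(learned_on: date, intervals=REVIEW_INTERVALS_DAYS) -> list[date]:
    """Return the dates on which to test yourself after first learning a topic."""
    return [learned_on + timedelta(days=d) for d in intervals]


schedule = review_dates(date(2025, 1, 2))
for day in schedule:
    print(day.isoformat())
```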

### 2. Map It Out

Create visual aids like [diagrams or concept maps](https://excalidraw.com/) to clarify relationships between ideas. These are particularly helpful for organizing categories in Layer 2.

### 3. Teach What You Learn

Explain the subject to someone else as if they’re hearing it for the first time. Teaching **exposes any gaps** in your understanding and **helps reinforce** the material.

### 4. Engage with LLMs and Discuss Concepts

Take advantage of tools like ChatGPT or similar large language models to **explore your topic** in greater depth. Use these tools to:

- Ask specific questions to clarify confusing points.

- Engage in discussions to simulate real-world applications of the subject.

- Generate examples or analogies that deepen your understanding.

**Tip**: Use LLMs as a study partner, but don’t rely solely on them. Combine these insights with your own critical thinking to develop a well-rounded perspective.

---

## Get Started

Ready to try the Four-Layer Method? Take 15 minutes today to map out the big picture of a topic you’re curious about—what’s it all about, and why does it matter? By building your understanding step by step, you’ll master the subject with less stress and more confidence.

-

@ 3f770d65:7a745b24

2024-12-31 17:03:46

Here are my predictions for Nostr in 2025:

**Decentralization:** The outbox and inbox communication models, sometimes referred to as the Gossip model, will become the standard across the ecosystem. By the end of 2025, all major clients will support these models, providing seamless communication and enhanced decentralization. Clients that do not adopt outbox/inbox by then will be regarded as outdated or legacy systems.

**Privacy Standards:** Major clients such as Damus and Primal will move away from NIP-04 DMs, adopting more secure protocol possibilities like NIP-17 or NIP-104. These upgrades will ensure enhanced encryption and metadata protection. Additionally, NIP-104 MLS tools will drive the development of new clients and features, providing users with unprecedented control over the privacy of their communications.

**Interoperability:** Nostr's ecosystem will become even more interconnected. Platforms like the Olas image-sharing service will expand into prominent clients such as Primal, Damus, Coracle, and Snort, alongside existing integrations with Amethyst, Nostur, and Nostrudel. Similarly, audio and video tools like Nostr Nests and Zap.stream will gain seamless integration into major clients, enabling easy participation in live events across the ecosystem.

**Adoption and Migration:** Inspired by early pioneers like Fountain and Orange Pill App, more platforms will adopt Nostr for authentication, login, and social systems. In 2025, a significant migration from a high-profile application platform with hundreds of thousands of users will transpire, doubling Nostr’s daily activity and establishing it as a cornerstone of decentralized technologies.

-

@ 6e468422:15deee93

2024-12-21 19:25:26

We didn't hear them land on earth, nor did we see them. The spores were not visible to the naked eye. Like dust particles, they softly fell, unhindered, through our atmosphere, covering the earth. It took us a while to realize that something extraordinary was happening on our planet. In most places, the mushrooms didn't grow at all. The conditions weren't right. In some places—mostly rocky places—they grew large enough to be noticeable. People all over the world posted pictures online. "White eggs," they called them. It took a bit until botanists and mycologists took note. Most didn't realize that we were dealing with a species unknown to us.

We aren't sure who sent them. We aren't even sure if there is a "who" behind the spores. But once the first portals opened up, we learned that these mushrooms aren't just a quirk of biology. The portals were small at first—minuscule, even. Like a pinhole camera, we were able to glimpse through, but we couldn't make out much. We were only able to see colors and textures if the conditions were right. We weren't sure what we were looking at.

We still don't understand why some mushrooms open up, and some don't. Most don't. What we do know is that they like colder climates and high elevations. What we also know is that the portals don't stay open for long. Like all mushrooms, the flush only lasts for a week or two. When a portal opens, it looks like the mushroom is eating a hole into itself at first. But the hole grows, and what starts as a shimmer behind a grey film turns into a clear picture as the egg ripens. When conditions are right, portals will remain stable for up to three days. Once the fruit withers, the portal closes, and the mushroom decays.

The eggs grew bigger year over year. And with it, the portals. Soon enough, the portals were big enough to stick your finger through. And that's when things started to get weird...

-

@ 20986fb8:cdac21b3

2024-12-18 03:19:36

### **English**

#### Introducing YakiHonne: Write Without Limits

YakiHonne is the ultimate text editor designed to help you express yourself creatively, no matter the language.

**Features you'll love:**

- 🌟 **Rich Formatting**: Add headings, bold, italics, and more.

- 🌏 **Multilingual Support**: Seamlessly write in English, Chinese, Arabic, and Japanese.

- 🔗 **Interactive Links**: [Learn more about YakiHonne](https://yakihonne.com).

**Benefits:**

1. Easy to use.

2. Enhance readability with customizable styles.

3. Supports various complex formats, including LaTeX.

> "YakiHonne is a game-changer for content creators."

-

@ fe32298e:20516265

2024-12-16 20:59:13

Today I learned how to install [NVApi](https://github.com/sammcj/NVApi) to monitor my GPUs in Home Assistant.

**NVApi** is a lightweight API designed for monitoring NVIDIA GPU utilization and enabling automated power management. It provides real-time GPU metrics, supports integration with tools like Home Assistant, and offers flexible power management and PCIe link speed management based on workload and thermal conditions.

- **GPU Utilization Monitoring**: Utilization, memory usage, temperature, fan speed, and power consumption.

- **Automated Power Limiting**: Adjusts power limits dynamically based on temperature thresholds and total power caps, configurable per GPU or globally.

- **Cross-GPU Coordination**: Total power budget applies across multiple GPUs in the same system.

- **PCIe Link Speed Management**: Controls minimum and maximum PCIe link speeds with idle thresholds for power optimization.

- **Home Assistant Integration**: Uses the built-in RESTful platform and template sensors.

## Getting the Data

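```
sudo apt install golang-go
# Clone into "NVapi" so the directory matches the paths used in the service file below
git clone https://github.com/sammcj/NVApi.git NVapi
cd NVapi
go run main.go -port 9999 -rate 1

# In a second terminal, while the server is running:
curl http://localhost:9999/gpu
```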

Response for a single GPU:

```
[
  {
    "index": 0,
    "name": "NVIDIA GeForce RTX 4090",
    "gpu_utilisation": 0,
    "memory_utilisation": 0,
    "power_watts": 16,
    "power_limit_watts": 450,
    "memory_total_gb": 23.99,
    "memory_used_gb": 0.46,
    "memory_free_gb": 23.52,
    "memory_usage_percent": 2,
    "temperature": 38,
    "processes": [],
    "pcie_link_state": "not managed"
  }
]
```

Response for multiple GPUs:

```
[
  {
    "index": 0,
    "name": "NVIDIA GeForce RTX 3090",
    "gpu_utilisation": 0,
    "memory_utilisation": 0,
    "power_watts": 14,
    "power_limit_watts": 350,
    "memory_total_gb": 24,
    "memory_used_gb": 0.43,
    "memory_free_gb": 23.57,
    "memory_usage_percent": 2,
    "temperature": 36,
    "processes": [],
    "pcie_link_state": "not managed"
  },
  {
    "index": 1,
    "name": "NVIDIA RTX A4000",
    "gpu_utilisation": 0,
    "memory_utilisation": 0,
    "power_watts": 10,
    "power_limit_watts": 140,
    "memory_total_gb": 15.99,
    "memory_used_gb": 0.56,
    "memory_free_gb": 15.43,
    "memory_usage_percent": 3,
    "temperature": 41,
    "processes": [],
    "pcie_link_state": "not managed"
  }
]
```
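To sanity-check the endpoint outside Home Assistant, the response can be summarized with a few lines of Python. This is only a minimal sketch: the field names come from the responses above, while `fetch`, `summarize`, and the trimmed `SAMPLE` payload are illustrative names of my own and not part of NVApi.

```python
import json
from urllib.request import urlopen

# Trimmed copy of the multi-GPU response shown above.
SAMPLE = """
[
  {"index": 0, "name": "NVIDIA GeForce RTX 3090", "power_watts": 14,
   "power_limit_watts": 350, "memory_used_gb": 0.43, "memory_total_gb": 24,
   "temperature": 36},
  {"index": 1, "name": "NVIDIA RTX A4000", "power_watts": 10,
   "power_limit_watts": 140, "memory_used_gb": 0.56, "memory_total_gb": 15.99,
   "temperature": 41}
]
"""


def fetch(url="http://localhost:9999/gpu"):
    """Fetch and decode the GPU list from a running NVApi instance."""
    with urlopen(url, timeout=5) as resp:
        return json.load(resp)


def summarize(gpus):
    """Return one summary line per GPU plus the combined power draw in watts."""
    lines = [
        f"GPU{g['index']} {g['name']}: {g['temperature']}°C, "
        f"{g['power_watts']}/{g['power_limit_watts']} W, "
        f"{g['memory_used_gb']}/{g['memory_total_gb']} GB"
        for g in gpus
    ]
    total_power = sum(g["power_watts"] for g in gpus)
    return lines, total_power


if __name__ == "__main__":
    # Swap json.loads(SAMPLE) for fetch() to query a live instance.
    lines, total = summarize(json.loads(SAMPLE))
    print("\n".join(lines))
    print(f"Total draw: {total} W")
```

Running it against the sample needs no server; calling `fetch()` instead assumes the `go run` command above is still serving on port 9999.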

## Start at Boot

Create `/etc/systemd/system/nvapi.service`:

```
[Unit]
Description=Run NVapi
After=network.target

[Service]
Type=simple
Environment="GOPATH=/home/ansible/go"
WorkingDirectory=/home/ansible/NVapi
ExecStart=/usr/bin/go run main.go -port 9999 -rate 1
Restart=always
User=ansible
# Environment="GPU_TEMP_CHECK_INTERVAL=5"
# Environment="GPU_TOTAL_POWER_CAP=400"
# Environment="GPU_0_LOW_TEMP=40"
# Environment="GPU_0_MEDIUM_TEMP=70"
# Environment="GPU_0_LOW_TEMP_LIMIT=135"
# Environment="GPU_0_MEDIUM_TEMP_LIMIT=120"
# Environment="GPU_0_HIGH_TEMP_LIMIT=100"
# Environment="GPU_1_LOW_TEMP=45"
# Environment="GPU_1_MEDIUM_TEMP=75"
# Environment="GPU_1_LOW_TEMP_LIMIT=140"
# Environment="GPU_1_MEDIUM_TEMP_LIMIT=125"
# Environment="GPU_1_HIGH_TEMP_LIMIT=110"

[Install]
WantedBy=multi-user.target
```
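The commented-out `GPU_n_*` variables describe a tiered power limit keyed on temperature. I have not checked how NVApi evaluates them internally, so the sketch below is an assumption about the intended behavior, not its actual code: at or below the low threshold the low-temperature limit applies, up to the medium threshold the medium limit applies, and above that the high-temperature limit applies. `TempPolicy` and `power_limit_for` are hypothetical names, and `GPU_TOTAL_POWER_CAP` is not modeled here.

```python
from dataclasses import dataclass


@dataclass
class TempPolicy:
    low_temp: int           # GPU_n_LOW_TEMP (°C)
    medium_temp: int        # GPU_n_MEDIUM_TEMP (°C)
    low_temp_limit: int     # GPU_n_LOW_TEMP_LIMIT (W)
    medium_temp_limit: int  # GPU_n_MEDIUM_TEMP_LIMIT (W)
    high_temp_limit: int    # GPU_n_HIGH_TEMP_LIMIT (W)


def power_limit_for(temp_c, policy):
    """Pick a power limit in watts for the current temperature.

    The tier boundaries (<= rather than <) are an assumption made
    for illustration; NVApi itself may draw them differently.
    """
    if temp_c <= policy.low_temp:
        return policy.low_temp_limit
    if temp_c <= policy.medium_temp:
        return policy.medium_temp_limit
    return policy.high_temp_limit


# Values taken from the commented-out GPU_0 lines in the unit file.
gpu0 = TempPolicy(low_temp=40, medium_temp=70,
                  low_temp_limit=135, medium_temp_limit=120,
                  high_temp_limit=100)

for temp in (38, 55, 82):
    print(temp, "->", power_limit_for(temp, gpu0), "W")
```

Read this way, the hotter the card runs, the lower its allowed power draw, which matches the descending 135/120/100 values in the example.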

## Home Assistant

Add the following to Home Assistant's `configuration.yaml`, then fully restart HA.

For a single GPU, this works:

```
sensor:
  - platform: rest
    name: MYPC GPU Information
    resource: http://mypc:9999
    method: GET
    headers:
      Content-Type: application/json
    value_template: "{{ value_json[0].index }}"
    json_attributes:
      - name
      - gpu_utilisation
      - memory_utilisation
      - power_watts
      - power_limit_watts
      - memory_total_gb
      - memory_used_gb
      - memory_free_gb
      - memory_usage_percent
      - temperature
    scan_interval: 1 # seconds
  - platform: template
    sensors:
      mypc_gpu_0_gpu:
        friendly_name: "MYPC {{ state_attr('sensor.mypc_gpu_information', 'name') }} GPU"
        value_template: "{{ state_attr('sensor.mypc_gpu_information', 'gpu_utilisation') }}"
        unit_of_measurement: "%"
      mypc_gpu_0_memory:
        friendly_name: "MYPC {{ state_attr('sensor.mypc_gpu_information', 'name') }} Memory"
        value_template: "{{ state_attr('sensor.mypc_gpu_information', 'memory_utilisation') }}"
        unit_of_measurement: "%"
      mypc_gpu_0_power:
        friendly_name: "MYPC {{ state_attr('sensor.mypc_gpu_information', 'name') }} Power"
        value_template: "{{ state_attr('sensor.mypc_gpu_information', 'power_watts') }}"
        unit_of_measurement: "W"
      mypc_gpu_0_power_limit:
        friendly_name: "MYPC {{ state_attr('sensor.mypc_gpu_information', 'name') }} Power Limit"
        value_template: "{{ state_attr('sensor.mypc_gpu_information', 'power_limit_watts') }}"
        unit_of_measurement: "W"
      mypc_gpu_0_temperature:
        friendly_name: "MYPC {{ state_attr('sensor.mypc_gpu_information', 'name') }} Temperature"
        value_template: "{{ state_attr('sensor.mypc_gpu_information', 'temperature') }}"
        unit_of_measurement: "°C"
```

For multiple GPUs:

```
rest:
  scan_interval: 1
  resource: http://mypc:9999
  sensor:
    - name: "MYPC GPU0 Information"
      value_template: "{{ value_json[0].index }}"
      json_attributes_path: "$.0"
      json_attributes:
        - name
        - gpu_utilisation
        - memory_utilisation
        - power_watts
        - power_limit_watts
        - memory_total_gb
        - memory_used_gb
        - memory_free_gb
        - memory_usage_percent
        - temperature
    - name: "MYPC GPU1 Information"
      value_template: "{{ value_json[1].index }}"
      json_attributes_path: "$.1"
      json_attributes:
        - name
        - gpu_utilisation
        - memory_utilisation
        - power_watts
        - power_limit_watts
        - memory_total_gb
        - memory_used_gb
        - memory_free_gb
        - memory_usage_percent
        - temperature

# The template sensors go under the top-level sensor: key
sensor:
  - platform: template
    sensors:
      mypc_gpu_0_gpu:
        friendly_name: "MYPC GPU0 GPU"
        value_template: "{{ state_attr('sensor.mypc_gpu0_information', 'gpu_utilisation') }}"
        unit_of_measurement: "%"
      mypc_gpu_0_memory:
        friendly_name: "MYPC GPU0 Memory"
        value_template: "{{ state_attr('sensor.mypc_gpu0_information', 'memory_utilisation') }}"
        unit_of_measurement: "%"
      mypc_gpu_0_power:
        friendly_name: "MYPC GPU0 Power"
        value_template: "{{ state_attr('sensor.mypc_gpu0_information', 'power_watts') }}"
        unit_of_measurement: "W"
      mypc_gpu_0_power_limit:
        friendly_name: "MYPC GPU0 Power Limit"
        value_template: "{{ state_attr('sensor.mypc_gpu0_information', 'power_limit_watts') }}"
        unit_of_measurement: "W"
      mypc_gpu_0_temperature:
        friendly_name: "MYPC GPU0 Temperature"
        value_template: "{{ state_attr('sensor.mypc_gpu0_information', 'temperature') }}"
        unit_of_measurement: "°C"
  - platform: template
    sensors:
      mypc_gpu_1_gpu:
        friendly_name: "MYPC GPU1 GPU"
        value_template: "{{ state_attr('sensor.mypc_gpu1_information', 'gpu_utilisation') }}"
        unit_of_measurement: "%"
      mypc_gpu_1_memory:
        friendly_name: "MYPC GPU1 Memory"
        value_template: "{{ state_attr('sensor.mypc_gpu1_information', 'memory_utilisation') }}"
        unit_of_measurement: "%"
      mypc_gpu_1_power:
        friendly_name: "MYPC GPU1 Power"
        value_template: "{{ state_attr('sensor.mypc_gpu1_information', 'power_watts') }}"
        unit_of_measurement: "W"
      mypc_gpu_1_power_limit:
        friendly_name: "MYPC GPU1 Power Limit"
        value_template: "{{ state_attr('sensor.mypc_gpu1_information', 'power_limit_watts') }}"
        unit_of_measurement: "W"
      mypc_gpu_1_temperature:
        friendly_name: "MYPC GPU1 Temperature"
        value_template: "{{ state_attr('sensor.mypc_gpu1_information', 'temperature') }}"
        unit_of_measurement: "°C"
```
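The template sensors repeat the same five entries per GPU, so with more cards it may be easier to generate them than to copy and paste. Here is a rough Python sketch that prints the `platform: template` entries as YAML text. The entity naming follows the config above; `METRICS` and `template_sensors` are hypothetical helper names, and the output still has to be indented under your own `sensor:` key.

```python
METRICS = [
    # (key suffix, label, JSON attribute, unit)
    ("gpu", "GPU", "gpu_utilisation", "%"),
    ("memory", "Memory", "memory_utilisation", "%"),
    ("power", "Power", "power_watts", "W"),
    ("power_limit", "Power Limit", "power_limit_watts", "W"),
    ("temperature", "Temperature", "temperature", "°C"),
]


def template_sensors(host, gpu_count):
    """Build the template sensor entries for gpu_count GPUs as YAML text."""
    out = []
    for i in range(gpu_count):
        source = f"sensor.{host}_gpu{i}_information"
        out.append("- platform: template")
        out.append("  sensors:")
        for suffix, label, attr, unit in METRICS:
            # Jinja braces are concatenated rather than placed in an
            # f-string, which avoids having to escape them.
            template = "{{ state_attr('" + source + "', '" + attr + "') }}"
            out.append(f"    {host}_gpu_{i}_{suffix}:")
            out.append(f'      friendly_name: "{host.upper()} GPU{i} {label}"')
            out.append(f'      value_template: "{template}"')
            out.append(f'      unit_of_measurement: "{unit}"')
    return "\n".join(out)


print(template_sensors("mypc", 2))
```

Plain string building keeps the sketch dependency-free; a YAML library would be the safer choice if the names could contain characters that need quoting.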

Basic entity card:

```
type: entities
entities:
  - entity: sensor.mypc_gpu_0_gpu
    secondary_info: last-updated
  - entity: sensor.mypc_gpu_0_memory
    secondary_info: last-updated
  - entity: sensor.mypc_gpu_0_power
    secondary_info: last-updated
  - entity: sensor.mypc_gpu_0_power_limit
    secondary_info: last-updated
  - entity: sensor.mypc_gpu_0_temperature
    secondary_info: last-updated
```

## Ansible Role

```
---
- name: install go
  become: true
  package:
    name: golang-go
    state: present

- name: git clone
  git:
    repo: "https://github.com/sammcj/NVApi.git"
    dest: "/home/ansible/NVapi"
    update: yes
    force: true

# go run main.go -port 9999 -rate 1

- name: install systemd service
  become: true
  copy:
    src: nvapi.service
    dest: /etc/systemd/system/nvapi.service

- name: Reload systemd daemons, enable, and restart nvapi
  become: true
  systemd:
    name: nvapi
    daemon_reload: yes
    enabled: yes
    state: restarted
```

-

@ 6f6b50bb:a848e5a1

2024-12-15 15:09:52

What would it mean to treat AI as a tool instead of a person?

Since the launch of ChatGPT, explorations in two directions have picked up speed.

The first direction is about technical capabilities. How big a model can we train? How well can it answer SAT questions? How efficiently can we deploy it?

The second direction is about interaction design. How do we communicate with a model? How can we use it for useful work? What metaphor do we use to reason about it?

The first direction is widely followed and heavily funded, and for good reason: progress in technical capabilities underlies every possible application. But the second is just as crucial to the field, and it has enormous unknowns. We are only a few years into the era of large models. What are the odds that we have already figured out the best ways to use them?

I propose a new mode of interaction, in which models play the role of computer applications (for example, phone apps): providing a graphical interface, interpreting user input, and updating their state. In this mode, instead of being an "agent" that uses a computer on behalf of the human, AI can provide a richer and more powerful computing environment for us to use.

### Metaphors for interaction

At the core of an interaction is a metaphor that guides a user's expectations about a system. The early days of computing took metaphors like "desktops", "typewriters", "spreadsheets", and "letters" and turned them into digital equivalents, letting users reason about their behavior. You can leave something on your desktop and come back for it; you need an address to send a letter. As we developed cultural knowledge of these devices, the need for these particular metaphors faded, and with it the skeuomorphic interface designs that reinforced them. Like a trash can or a pencil, a computer is now a metaphor for itself.

The dominant metaphor for large models today is model-as-person. This is an effective metaphor because people have extensive capabilities that we understand intuitively. It implies that we can hold a conversation with a model and ask it questions; that the model can collaborate with us on a document or a piece of code; that we can hand it a task to do on its own and it will come back when it is finished.

However, treating a model as a person profoundly limits how we think about interacting with it. Human interactions are inherently slow and linear, limited by the bandwidth and turn-taking nature of speech. As we have all experienced, communicating complex ideas in conversation is hard and lossy. When we want precision, we turn to tools instead, using direct manipulation and high-bandwidth visual interfaces to make diagrams, write code, and design CAD models. Because we conceive of models as people, we use them through slow conversations, even though they are perfectly capable of accepting fast, direct inputs and producing visual results. The metaphors we use constrain the experiences we build, and the model-as-person metaphor is keeping us from exploring the full potential of large models.

For many use cases, and especially for productive work, I believe the future lies in another metaphor: model-as-computer.

### Using an AI as a computer