-

@ 4961e68d:a2212e1c

2025-05-02 07:47:16

@ 4961e68d:a2212e1c

2025-05-02 07:47:16热死人了

-

@ 4961e68d:a2212e1c

2025-05-02 07:46:46

@ 4961e68d:a2212e1c

2025-05-02 07:46:46热死人了!

-

@ 21335073:a244b1ad

2025-05-01 01:51:10

@ 21335073:a244b1ad

2025-05-01 01:51:10Please respect Virginia Giuffre’s memory by refraining from asking about the circumstances or theories surrounding her passing.

Since Virginia Giuffre’s death, I’ve reflected on what she would want me to say or do. This piece is my attempt to honor her legacy.

When I first spoke with Virginia, I was struck by her unshakable hope. I had grown cynical after years in the anti-human trafficking movement, worn down by a broken system and a government that often seemed complicit. But Virginia’s passion, creativity, and belief that survivors could be heard reignited something in me. She reminded me of my younger, more hopeful self. Instead of warning her about the challenges ahead, I let her dream big, unburdened by my own disillusionment. That conversation changed me for the better, and following her lead led to meaningful progress.

Virginia was one of the bravest people I’ve ever known. As a survivor of Epstein, Maxwell, and their co-conspirators, she risked everything to speak out, taking on some of the world’s most powerful figures.

She loved when I said, “Epstein isn’t the only Epstein.” This wasn’t just about one man—it was a call to hold all abusers accountable and to ensure survivors find hope and healing.

The Epstein case often gets reduced to sensational details about the elite, but that misses the bigger picture. Yes, we should be holding all of the co-conspirators accountable, we must listen to the survivors’ stories. Their experiences reveal how predators exploit vulnerabilities, offering lessons to prevent future victims.

You’re not powerless in this fight. Educate yourself about trafficking and abuse—online and offline—and take steps to protect those around you. Supporting survivors starts with small, meaningful actions. Free online resources can guide you in being a safe, supportive presence.

When high-profile accusations arise, resist snap judgments. Instead of dismissing survivors as “crazy,” pause to consider the trauma they may be navigating. Speaking out or coping with abuse is never easy. You don’t have to believe every claim, but you can refrain from attacking accusers online.

Society also fails at providing aftercare for survivors. The government, often part of the problem, won’t solve this. It’s up to us. Prevention is critical, but when abuse occurs, step up for your loved ones and community. Protect the vulnerable. it’s a challenging but a rewarding journey.

If you’re contributing to Nostr, you’re helping build a censorship resistant platform where survivors can share their stories freely, no matter how powerful their abusers are. Their voices can endure here, offering strength and hope to others. This gives me great hope for the future.

Virginia Giuffre’s courage was a gift to the world. It was an honor to know and serve her. She will be deeply missed. My hope is that her story inspires others to take on the powerful.

-

@ 52b4a076:e7fad8bd

2025-04-28 00:48:57

@ 52b4a076:e7fad8bd

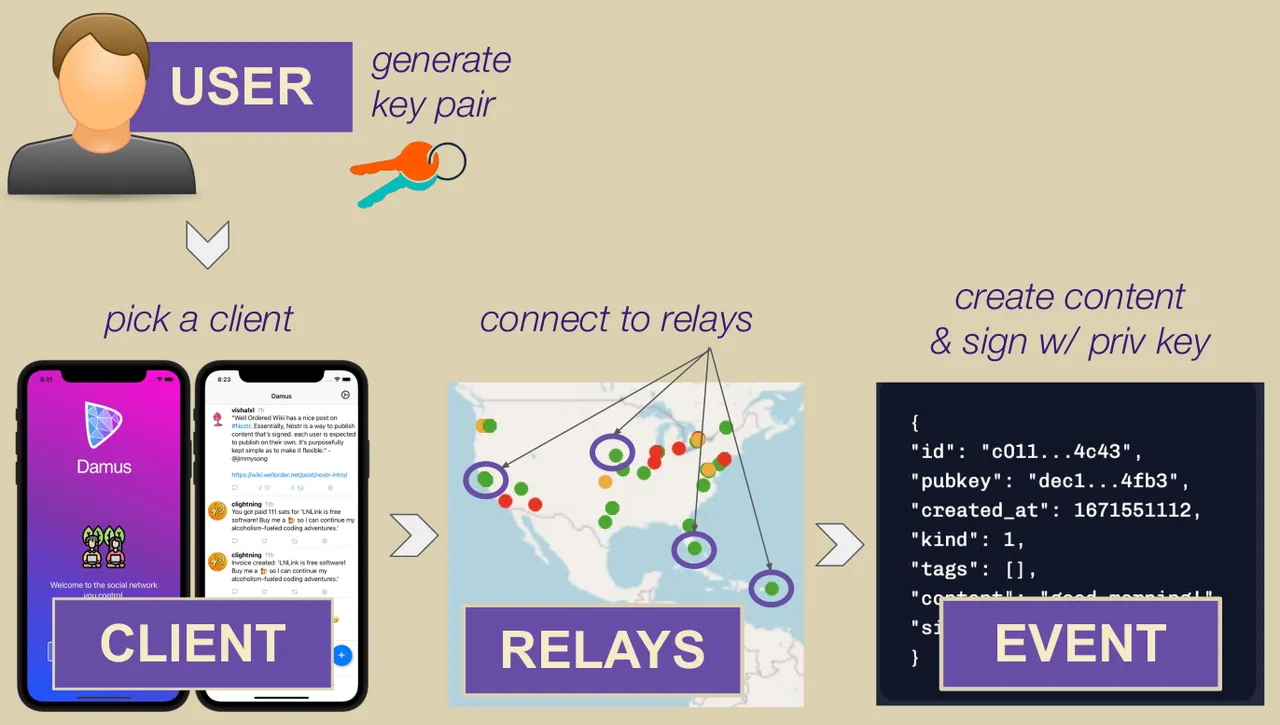

2025-04-28 00:48:57I have been recently building NFDB, a new relay DB. This post is meant as a short overview.

Regular relays have challenges

Current relay software have significant challenges, which I have experienced when hosting Nostr.land: - Scalability is only supported by adding full replicas, which does not scale to large relays. - Most relays use slow databases and are not optimized for large scale usage. - Search is near-impossible to implement on standard relays. - Privacy features such as NIP-42 are lacking. - Regular DB maintenance tasks on normal relays require extended downtime. - Fault-tolerance is implemented, if any, using a load balancer, which is limited. - Personalization and advanced filtering is not possible. - Local caching is not supported.

NFDB: A scalable database for large relays

NFDB is a new database meant for medium-large scale relays, built on FoundationDB that provides: - Near-unlimited scalability - Extended fault tolerance - Instant loading - Better search - Better personalization - and more.

Search

NFDB has extended search capabilities including: - Semantic search: Search for meaning, not words. - Interest-based search: Highlight content you care about. - Multi-faceted queries: Easily filter by topic, author group, keywords, and more at the same time. - Wide support for event kinds, including users, articles, etc.

Personalization

NFDB allows significant personalization: - Customized algorithms: Be your own algorithm. - Spam filtering: Filter content to your WoT, and use advanced spam filters. - Topic mutes: Mute topics, not keywords. - Media filtering: With Nostr.build, you will be able to filter NSFW and other content - Low data mode: Block notes that use high amounts of cellular data. - and more

Other

NFDB has support for many other features such as: - NIP-42: Protect your privacy with private drafts and DMs - Microrelays: Easily deploy your own personal microrelay - Containers: Dedicated, fast storage for discoverability events such as relay lists

Calcite: A local microrelay database

Calcite is a lightweight, local version of NFDB that is meant for microrelays and caching, meant for thousands of personal microrelays.

Calcite HA is an additional layer that allows live migration and relay failover in under 30 seconds, providing higher availability compared to current relays with greater simplicity. Calcite HA is enabled in all Calcite deployments.

For zero-downtime, NFDB is recommended.

Noswhere SmartCache

Relays are fixed in one location, but users can be anywhere.

Noswhere SmartCache is a CDN for relays that dynamically caches data on edge servers closest to you, allowing: - Multiple regions around the world - Improved throughput and performance - Faster loading times

routerd

routerdis a custom load-balancer optimized for Nostr relays, integrated with SmartCache.routerdis specifically integrated with NFDB and Calcite HA to provide fast failover and high performance.Ending notes

NFDB is planned to be deployed to Nostr.land in the coming weeks.

A lot more is to come. 👀️️️️️️

-

@ 91bea5cd:1df4451c

2025-04-26 10:16:21

@ 91bea5cd:1df4451c

2025-04-26 10:16:21O Contexto Legal Brasileiro e o Consentimento

No ordenamento jurídico brasileiro, o consentimento do ofendido pode, em certas circunstâncias, afastar a ilicitude de um ato que, sem ele, configuraria crime (como lesão corporal leve, prevista no Art. 129 do Código Penal). Contudo, o consentimento tem limites claros: não é válido para bens jurídicos indisponíveis, como a vida, e sua eficácia é questionável em casos de lesões corporais graves ou gravíssimas.

A prática de BDSM consensual situa-se em uma zona complexa. Em tese, se ambos os parceiros são adultos, capazes, e consentiram livre e informadamente nos atos praticados, sem que resultem em lesões graves permanentes ou risco de morte não consentido, não haveria crime. O desafio reside na comprovação desse consentimento, especialmente se uma das partes, posteriormente, o negar ou alegar coação.

A Lei Maria da Penha (Lei nº 11.340/2006)

A Lei Maria da Penha é um marco fundamental na proteção da mulher contra a violência doméstica e familiar. Ela estabelece mecanismos para coibir e prevenir tal violência, definindo suas formas (física, psicológica, sexual, patrimonial e moral) e prevendo medidas protetivas de urgência.

Embora essencial, a aplicação da lei em contextos de BDSM pode ser delicada. Uma alegação de violência por parte da mulher, mesmo que as lesões ou situações decorram de práticas consensuais, tende a receber atenção prioritária das autoridades, dada a presunção de vulnerabilidade estabelecida pela lei. Isso pode criar um cenário onde o parceiro masculino enfrenta dificuldades significativas em demonstrar a natureza consensual dos atos, especialmente se não houver provas robustas pré-constituídas.

Outros riscos:

Lesão corporal grave ou gravíssima (art. 129, §§ 1º e 2º, CP), não pode ser justificada pelo consentimento, podendo ensejar persecução penal.

Crimes contra a dignidade sexual (arts. 213 e seguintes do CP) são de ação pública incondicionada e independem de representação da vítima para a investigação e denúncia.

Riscos de Falsas Acusações e Alegação de Coação Futura

Os riscos para os praticantes de BDSM, especialmente para o parceiro que assume o papel dominante ou que inflige dor/restrição (frequentemente, mas não exclusivamente, o homem), podem surgir de diversas frentes:

- Acusações Externas: Vizinhos, familiares ou amigos que desconhecem a natureza consensual do relacionamento podem interpretar sons, marcas ou comportamentos como sinais de abuso e denunciar às autoridades.

- Alegações Futuras da Parceira: Em caso de término conturbado, vingança, arrependimento ou mudança de perspectiva, a parceira pode reinterpretar as práticas passadas como abuso e buscar reparação ou retaliação através de uma denúncia. A alegação pode ser de que o consentimento nunca existiu ou foi viciado.

- Alegação de Coação: Uma das formas mais complexas de refutar é a alegação de que o consentimento foi obtido mediante coação (física, moral, psicológica ou econômica). A parceira pode alegar, por exemplo, que se sentia pressionada, intimidada ou dependente, e que seu "sim" não era genuíno. Provar a ausência de coação a posteriori é extremamente difícil.

- Ingenuidade e Vulnerabilidade Masculina: Muitos homens, confiando na dinâmica consensual e na parceira, podem negligenciar a necessidade de precauções. A crença de que "isso nunca aconteceria comigo" ou a falta de conhecimento sobre as implicações legais e o peso processual de uma acusação no âmbito da Lei Maria da Penha podem deixá-los vulneráveis. A presença de marcas físicas, mesmo que consentidas, pode ser usada como evidência de agressão, invertendo o ônus da prova na prática, ainda que não na teoria jurídica.

Estratégias de Prevenção e Mitigação

Não existe um método infalível para evitar completamente o risco de uma falsa acusação, mas diversas medidas podem ser adotadas para construir um histórico de consentimento e reduzir vulnerabilidades:

- Comunicação Explícita e Contínua: A base de qualquer prática BDSM segura é a comunicação constante. Negociar limites, desejos, palavras de segurança ("safewords") e expectativas antes, durante e depois das cenas é crucial. Manter registros dessas negociações (e-mails, mensagens, diários compartilhados) pode ser útil.

-

Documentação do Consentimento:

-

Contratos de Relacionamento/Cena: Embora a validade jurídica de "contratos BDSM" seja discutível no Brasil (não podem afastar normas de ordem pública), eles servem como forte evidência da intenção das partes, da negociação detalhada de limites e do consentimento informado. Devem ser claros, datados, assinados e, idealmente, reconhecidos em cartório (para prova de data e autenticidade das assinaturas).

-

Registros Audiovisuais: Gravar (com consentimento explícito para a gravação) discussões sobre consentimento e limites antes das cenas pode ser uma prova poderosa. Gravar as próprias cenas é mais complexo devido a questões de privacidade e potencial uso indevido, mas pode ser considerado em casos específicos, sempre com consentimento mútuo documentado para a gravação.

Importante: a gravação deve ser com ciência da outra parte, para não configurar violação da intimidade (art. 5º, X, da Constituição Federal e art. 20 do Código Civil).

-

-

Testemunhas: Em alguns contextos de comunidade BDSM, a presença de terceiros de confiança durante negociações ou mesmo cenas pode servir como testemunho, embora isso possa alterar a dinâmica íntima do casal.

- Estabelecimento Claro de Limites e Palavras de Segurança: Definir e respeitar rigorosamente os limites (o que é permitido, o que é proibido) e as palavras de segurança é fundamental. O desrespeito a uma palavra de segurança encerra o consentimento para aquele ato.

- Avaliação Contínua do Consentimento: O consentimento não é um cheque em branco; ele deve ser entusiástico, contínuo e revogável a qualquer momento. Verificar o bem-estar do parceiro durante a cena ("check-ins") é essencial.

- Discrição e Cuidado com Evidências Físicas: Ser discreto sobre a natureza do relacionamento pode evitar mal-entendidos externos. Após cenas que deixem marcas, é prudente que ambos os parceiros estejam cientes e de acordo, talvez documentando por fotos (com data) e uma nota sobre a consensualidade da prática que as gerou.

- Aconselhamento Jurídico Preventivo: Consultar um advogado especializado em direito de família e criminal, com sensibilidade para dinâmicas de relacionamento alternativas, pode fornecer orientação personalizada sobre as melhores formas de documentar o consentimento e entender os riscos legais específicos.

Observações Importantes

- Nenhuma documentação substitui a necessidade de consentimento real, livre, informado e contínuo.

- A lei brasileira protege a "integridade física" e a "dignidade humana". Práticas que resultem em lesões graves ou que violem a dignidade de forma não consentida (ou com consentimento viciado) serão ilegais, independentemente de qualquer acordo prévio.

- Em caso de acusação, a existência de documentação robusta de consentimento não garante a absolvição, mas fortalece significativamente a defesa, ajudando a demonstrar a natureza consensual da relação e das práticas.

-

A alegação de coação futura é particularmente difícil de prevenir apenas com documentos. Um histórico consistente de comunicação aberta (whatsapp/telegram/e-mails), respeito mútuo e ausência de dependência ou controle excessivo na relação pode ajudar a contextualizar a dinâmica como não coercitiva.

-

Cuidado com Marcas Visíveis e Lesões Graves Práticas que resultam em hematomas severos ou lesões podem ser interpretadas como agressão, mesmo que consentidas. Evitar excessos protege não apenas a integridade física, mas também evita questionamentos legais futuros.

O que vem a ser consentimento viciado

No Direito, consentimento viciado é quando a pessoa concorda com algo, mas a vontade dela não é livre ou plena — ou seja, o consentimento existe formalmente, mas é defeituoso por alguma razão.

O Código Civil brasileiro (art. 138 a 165) define várias formas de vício de consentimento. As principais são:

Erro: A pessoa se engana sobre o que está consentindo. (Ex.: A pessoa acredita que vai participar de um jogo leve, mas na verdade é exposta a práticas pesadas.)

Dolo: A pessoa é enganada propositalmente para aceitar algo. (Ex.: Alguém mente sobre o que vai acontecer durante a prática.)

Coação: A pessoa é forçada ou ameaçada a consentir. (Ex.: "Se você não aceitar, eu termino com você" — pressão emocional forte pode ser vista como coação.)

Estado de perigo ou lesão: A pessoa aceita algo em situação de necessidade extrema ou abuso de sua vulnerabilidade. (Ex.: Alguém em situação emocional muito fragilizada é induzida a aceitar práticas que normalmente recusaria.)

No contexto de BDSM, isso é ainda mais delicado: Mesmo que a pessoa tenha "assinado" um contrato ou dito "sim", se depois ela alegar que seu consentimento foi dado sob medo, engano ou pressão psicológica, o consentimento pode ser considerado viciado — e, portanto, juridicamente inválido.

Isso tem duas implicações sérias:

-

O crime não se descaracteriza: Se houver vício, o consentimento é ignorado e a prática pode ser tratada como crime normal (lesão corporal, estupro, tortura, etc.).

-

A prova do consentimento precisa ser sólida: Mostrando que a pessoa estava informada, lúcida, livre e sem qualquer tipo de coação.

Consentimento viciado é quando a pessoa concorda formalmente, mas de maneira enganada, forçada ou pressionada, tornando o consentimento inútil para efeitos jurídicos.

Conclusão

Casais que praticam BDSM consensual no Brasil navegam em um terreno que exige não apenas confiança mútua e comunicação excepcional, mas também uma consciência aguçada das complexidades legais e dos riscos de interpretações equivocadas ou acusações mal-intencionadas. Embora o BDSM seja uma expressão legítima da sexualidade humana, sua prática no Brasil exige responsabilidade redobrada. Ter provas claras de consentimento, manter a comunicação aberta e agir com prudência são formas eficazes de se proteger de falsas alegações e preservar a liberdade e a segurança de todos os envolvidos. Embora leis controversas como a Maria da Penha sejam "vitais" para a proteção contra a violência real, os praticantes de BDSM, e em particular os homens nesse contexto, devem adotar uma postura proativa e prudente para mitigar os riscos inerentes à potencial má interpretação ou instrumentalização dessas práticas e leis, garantindo que a expressão de sua consensualidade esteja resguardada na medida do possível.

Importante: No Brasil, mesmo com tudo isso, o Ministério Público pode denunciar por crime como lesão corporal grave, estupro ou tortura, independente de consentimento. Então a prudência nas práticas é fundamental.

Aviso Legal: Este artigo tem caráter meramente informativo e não constitui aconselhamento jurídico. As leis e interpretações podem mudar, e cada situação é única. Recomenda-se buscar orientação de um advogado qualificado para discutir casos específicos.

Se curtiu este artigo faça uma contribuição, se tiver algum ponto relevante para o artigo deixe seu comentário.

-

@ 3bf0c63f:aefa459d

2025-04-25 18:55:52

@ 3bf0c63f:aefa459d

2025-04-25 18:55:52Report of how the money Jack donated to the cause in December 2022 has been misused so far.

Bounties given

March 2025

- Dhalsim: 1,110,540 - Work on Nostr wiki data processing

February 2025

- BOUNTY* NullKotlinDev: 950,480 - Twine RSS reader Nostr integration

- Dhalsim: 2,094,584 - Work on Hypothes.is Nostr fork

- Constant, Biz and J: 11,700,588 - Nostr Special Forces

January 2025

- Constant, Biz and J: 11,610,987 - Nostr Special Forces

- BOUNTY* NullKotlinDev: 843,840 - Feeder RSS reader Nostr integration

- BOUNTY* NullKotlinDev: 797,500 - ReadYou RSS reader Nostr integration

December 2024

- BOUNTY* tijl: 1,679,500 - Nostr integration into RSS readers yarr and miniflux

- Constant, Biz and J: 10,736,166 - Nostr Special Forces

- Thereza: 1,020,000 - Podcast outreach initiative

November 2024

- Constant, Biz and J: 5,422,464 - Nostr Special Forces

October 2024

- Nostrdam: 300,000 - hackathon prize

- Svetski: 5,000,000 - Latin America Nostr events contribution

- Quentin: 5,000,000 - nostrcheck.me

June 2024

- Darashi: 5,000,000 - maintaining nos.today, searchnos, search.nos.today and other experiments

- Toshiya: 5,000,000 - keeping the NIPs repo clean and other stuff

May 2024

- James: 3,500,000 - https://github.com/jamesmagoo/nostr-writer

- Yakihonne: 5,000,000 - spreading the word in Asia

- Dashu: 9,000,000 - https://github.com/haorendashu/nostrmo

February 2024

- Viktor: 5,000,000 - https://github.com/viktorvsk/saltivka and https://github.com/viktorvsk/knowstr

- Eric T: 5,000,000 - https://github.com/tcheeric/nostr-java

- Semisol: 5,000,000 - https://relay.noswhere.com/ and https://hist.nostr.land relays

- Sebastian: 5,000,000 - Drupal stuff and nostr-php work

- tijl: 5,000,000 - Cloudron, Yunohost and Fraidycat attempts

- Null Kotlin Dev: 5,000,000 - AntennaPod attempt

December 2023

- hzrd: 5,000,000 - Nostrudel

- awayuki: 5,000,000 - NOSTOPUS illustrations

- bera: 5,000,000 - getwired.app

- Chris: 5,000,000 - resolvr.io

- NoGood: 10,000,000 - nostrexplained.com stories

October 2023

- SnowCait: 5,000,000 - https://nostter.vercel.app/ and other tools

- Shaun: 10,000,000 - https://yakihonne.com/, events and work on Nostr awareness

- Derek Ross: 10,000,000 - spreading the word around the world

- fmar: 5,000,000 - https://github.com/frnandu/yana

- The Nostr Report: 2,500,000 - curating stuff

- james magoo: 2,500,000 - the Obsidian plugin: https://github.com/jamesmagoo/nostr-writer

August 2023

- Paul Miller: 5,000,000 - JS libraries and cryptography-related work

- BOUNTY tijl: 5,000,000 - https://github.com/github-tijlxyz/wikinostr

- gzuus: 5,000,000 - https://nostree.me/

July 2023

- syusui-s: 5,000,000 - rabbit, a tweetdeck-like Nostr client: https://syusui-s.github.io/rabbit/

- kojira: 5,000,000 - Nostr fanzine, Nostr discussion groups in Japan, hardware experiments

- darashi: 5,000,000 - https://github.com/darashi/nos.today, https://github.com/darashi/searchnos, https://github.com/darashi/murasaki

- jeff g: 5,000,000 - https://nostr.how and https://listr.lol, plus other contributions

- cloud fodder: 5,000,000 - https://nostr1.com (open-source)

- utxo.one: 5,000,000 - https://relaying.io (open-source)

- Max DeMarco: 10,269,507 - https://www.youtube.com/watch?v=aA-jiiepOrE

- BOUNTY optout21: 1,000,000 - https://github.com/optout21/nip41-proto0 (proposed nip41 CLI)

- BOUNTY Leo: 1,000,000 - https://github.com/leo-lox/camelus (an old relay thing I forgot exactly)

June 2023

- BOUNTY: Sepher: 2,000,000 - a webapp for making lists of anything: https://pinstr.app/

- BOUNTY: Kieran: 10,000,000 - implement gossip algorithm on Snort, implement all the other nice things: manual relay selection, following hints etc.

- Mattn: 5,000,000 - a myriad of projects and contributions to Nostr projects: https://github.com/search?q=owner%3Amattn+nostr&type=code

- BOUNTY: lynn: 2,000,000 - a simple and clean git nostr CLI written in Go, compatible with William's original git-nostr-tools; and implement threaded comments on https://github.com/fiatjaf/nocomment.

- Jack Chakany: 5,000,000 - https://github.com/jacany/nblog

- BOUNTY: Dan: 2,000,000 - https://metadata.nostr.com/

April 2023

- BOUNTY: Blake Jakopovic: 590,000 - event deleter tool, NIP dependency organization

- BOUNTY: koalasat: 1,000,000 - display relays

- BOUNTY: Mike Dilger: 4,000,000 - display relays, follow event hints (Gossip)

- BOUNTY: kaiwolfram: 5,000,000 - display relays, follow event hints, choose relays to publish (Nozzle)

- Daniele Tonon: 3,000,000 - Gossip

- bu5hm4nn: 3,000,000 - Gossip

- BOUNTY: hodlbod: 4,000,000 - display relays, follow event hints

March 2023

- Doug Hoyte: 5,000,000 sats - https://github.com/hoytech/strfry

- Alex Gleason: 5,000,000 sats - https://gitlab.com/soapbox-pub/mostr

- verbiricha: 5,000,000 sats - https://badges.page/, https://habla.news/

- talvasconcelos: 5,000,000 sats - https://migrate.nostr.com, https://read.nostr.com, https://write.nostr.com/

- BOUNTY: Gossip model: 5,000,000 - https://camelus.app/

- BOUNTY: Gossip model: 5,000,000 - https://github.com/kaiwolfram/Nozzle

- BOUNTY: Bounty Manager: 5,000,000 - https://nostrbounties.com/

February 2023

- styppo: 5,000,000 sats - https://hamstr.to/

- sandwich: 5,000,000 sats - https://nostr.watch/

- BOUNTY: Relay-centric client designs: 5,000,000 sats https://bountsr.org/design/2023/01/26/relay-based-design.html

- BOUNTY: Gossip model on https://coracle.social/: 5,000,000 sats

- Nostrovia Podcast: 3,000,000 sats - https://nostrovia.org/

- BOUNTY: Nostr-Desk / Monstr: 5,000,000 sats - https://github.com/alemmens/monstr

- Mike Dilger: 5,000,000 sats - https://github.com/mikedilger/gossip

January 2023

- ismyhc: 5,000,000 sats - https://github.com/Galaxoid-Labs/Seer

- Martti Malmi: 5,000,000 sats - https://iris.to/

- Carlos Autonomous: 5,000,000 sats - https://github.com/BrightonBTC/bija

- Koala Sat: 5,000,000 - https://github.com/KoalaSat/nostros

- Vitor Pamplona: 5,000,000 - https://github.com/vitorpamplona/amethyst

- Cameri: 5,000,000 - https://github.com/Cameri/nostream

December 2022

- William Casarin: 7 BTC - splitting the fund

- pseudozach: 5,000,000 sats - https://nostr.directory/

- Sondre Bjellas: 5,000,000 sats - https://notes.blockcore.net/

- Null Dev: 5,000,000 sats - https://github.com/KotlinGeekDev/Nosky

- Blake Jakopovic: 5,000,000 sats - https://github.com/blakejakopovic/nostcat, https://github.com/blakejakopovic/nostreq and https://github.com/blakejakopovic/NostrEventPlayground

-

@ 21211e6f:8cd9242f

2025-04-22 12:48:33

@ 21211e6f:8cd9242f

2025-04-22 12:48:33This is a public note.

-

@ 21211e6f:8cd9242f

2025-04-21 18:30:48

@ 21211e6f:8cd9242f

2025-04-21 18:30:48This is a public post.

-

@ e3ba5e1a:5e433365

2025-04-15 11:03:15

@ e3ba5e1a:5e433365

2025-04-15 11:03:15Prelude

I wrote this post differently than any of my others. It started with a discussion with AI on an OPSec-inspired review of separation of powers, and evolved into quite an exciting debate! I asked Grok to write up a summary in my overall writing style, which it got pretty well. I've decided to post it exactly as-is. Ultimately, I think there are two solid ideas driving my stance here:

- Perfect is the enemy of the good

- Failure is the crucible of success

Beyond that, just some hard-core belief in freedom, separation of powers, and operating from self-interest.

Intro

Alright, buckle up. I’ve been chewing on this idea for a while, and it’s time to spit it out. Let’s look at the U.S. government like I’d look at a codebase under a cybersecurity audit—OPSEC style, no fluff. Forget the endless debates about what politicians should do. That’s noise. I want to talk about what they can do, the raw powers baked into the system, and why we should stop pretending those powers are sacred. If there’s a hole, either patch it or exploit it. No half-measures. And yeah, I’m okay if the whole thing crashes a bit—failure’s a feature, not a bug.

The Filibuster: A Security Rule with No Teeth

You ever see a firewall rule that’s more theater than protection? That’s the Senate filibuster. Everyone acts like it’s this untouchable guardian of democracy, but here’s the deal: a simple majority can torch it any day. It’s not a law; it’s a Senate preference, like choosing tabs over spaces. When people call killing it the “nuclear option,” I roll my eyes. Nuclear? It’s a button labeled “press me.” If a party wants it gone, they’ll do it. So why the dance?

I say stop playing games. Get rid of the filibuster. If you’re one of those folks who thinks it’s the only thing saving us from tyranny, fine—push for a constitutional amendment to lock it in. That’s a real patch, not a Post-it note. Until then, it’s just a vulnerability begging to be exploited. Every time a party threatens to nuke it, they’re admitting it’s not essential. So let’s stop pretending and move on.

Supreme Court Packing: Because Nine’s Just a Number

Here’s another fun one: the Supreme Court. Nine justices, right? Sounds official. Except it’s not. The Constitution doesn’t say nine—it’s silent on the number. Congress could pass a law tomorrow to make it 15, 20, or 42 (hitchhiker’s reference, anyone?). Packing the court is always on the table, and both sides know it. It’s like a root exploit just sitting there, waiting for someone to log in.

So why not call the bluff? If you’re in power—say, Trump’s back in the game—say, “I’m packing the court unless we amend the Constitution to fix it at nine.” Force the issue. No more shadowboxing. And honestly? The court’s got way too much power anyway. It’s not supposed to be a super-legislature, but here we are, with justices’ ideologies driving the bus. That’s a bug, not a feature. If the court weren’t such a kingmaker, packing it wouldn’t even matter. Maybe we should be talking about clipping its wings instead of just its size.

The Executive Should Go Full Klingon

Let’s talk presidents. I’m not saying they should wear Klingon armor and start shouting “Qapla’!”—though, let’s be real, that’d be awesome. I’m saying the executive should use every scrap of power the Constitution hands them. Enforce the laws you agree with, sideline the ones you don’t. If Congress doesn’t like it, they’ve got tools: pass new laws, override vetoes, or—here’s the big one—cut the budget. That’s not chaos; that’s the system working as designed.

Right now, the real problem isn’t the president overreaching; it’s the bureaucracy. It’s like a daemon running in the background, eating CPU and ignoring the user. The president’s supposed to be the one steering, but the administrative state’s got its own agenda. Let the executive flex, push the limits, and force Congress to check it. Norms? Pfft. The Constitution’s the spec sheet—stick to it.

Let the System Crash

Here’s where I get a little spicy: I’m totally fine if the government grinds to a halt. Deadlock isn’t a disaster; it’s a feature. If the branches can’t agree, let the president veto, let Congress starve the budget, let enforcement stall. Don’t tell me about “essential services.” Nothing’s so critical it can’t take a breather. Shutdowns force everyone to the table—debate, compromise, or expose who’s dropping the ball. If the public loses trust? Good. They’ll vote out the clowns or live with the circus they elected.

Think of it like a server crash. Sometimes you need a hard reboot to clear the cruft. If voters keep picking the same bad admins, well, the country gets what it deserves. Failure’s the best teacher—way better than limping along on autopilot.

States Are the Real MVPs

If the feds fumble, states step up. Right now, states act like junior devs waiting for the lead engineer to sign off. Why? Federal money. It’s a leash, and it’s tight. Cut that cash, and states will remember they’re autonomous. Some will shine, others will tank—looking at you, California. And I’m okay with that. Let people flee to better-run states. No bailouts, no excuses. States are like competing startups: the good ones thrive, the bad ones pivot or die.

Could it get uneven? Sure. Some states might turn into sci-fi utopias while others look like a post-apocalyptic vidya game. That’s the point—competition sorts it out. Citizens can move, markets adjust, and failure’s a signal to fix your act.

Chaos Isn’t the Enemy

Yeah, this sounds messy. States ignoring federal law, external threats poking at our seams, maybe even a constitutional crisis. I’m not scared. The Supreme Court’s there to referee interstate fights, and Congress sets the rules for state-to-state play. But if it all falls apart? Still cool. States can sort it without a babysitter—it’ll be ugly, but freedom’s worth it. External enemies? They’ll either unify us or break us. If we can’t rally, we don’t deserve the win.

Centralizing power to avoid this is like rewriting your app in a single thread to prevent race conditions—sure, it’s simpler, but you’re begging for a deadlock. Decentralized chaos lets states experiment, lets people escape, lets markets breathe. States competing to cut regulations to attract businesses? That’s a race to the bottom for red tape, but a race to the top for innovation—workers might gripe, but they’ll push back, and the tension’s healthy. Bring it—let the cage match play out. The Constitution’s checks are enough if we stop coddling the system.

Why This Matters

I’m not pitching a utopia. I’m pitching a stress test. The U.S. isn’t a fragile porcelain doll; it’s a rugged piece of hardware built to take some hits. Let it fail a little—filibuster, court, feds, whatever. Patch the holes with amendments if you want, or lean into the grind. Either way, stop fearing the crash. It’s how we debug the republic.

So, what’s your take? Ready to let the system rumble, or got a better way to secure the code? Hit me up—I’m all ears.

-

@ 91bea5cd:1df4451c

2025-04-15 06:27:28

@ 91bea5cd:1df4451c

2025-04-15 06:27:28Básico

bash lsblk # Lista todos os diretorios montados.Para criar o sistema de arquivos:

bash mkfs.btrfs -L "ThePool" -f /dev/sdxCriando um subvolume:

bash btrfs subvolume create SubVolMontando Sistema de Arquivos:

bash mount -o compress=zlib,subvol=SubVol,autodefrag /dev/sdx /mntLista os discos formatados no diretório:

bash btrfs filesystem show /mntAdiciona novo disco ao subvolume:

bash btrfs device add -f /dev/sdy /mntLista novamente os discos do subvolume:

bash btrfs filesystem show /mntExibe uso dos discos do subvolume:

bash btrfs filesystem df /mntBalancea os dados entre os discos sobre raid1:

bash btrfs filesystem balance start -dconvert=raid1 -mconvert=raid1 /mntScrub é uma passagem por todos os dados e metadados do sistema de arquivos e verifica as somas de verificação. Se uma cópia válida estiver disponível (perfis de grupo de blocos replicados), a danificada será reparada. Todas as cópias dos perfis replicados são validadas.

iniciar o processo de depuração :

bash btrfs scrub start /mntver o status do processo de depuração Btrfs em execução:

bash btrfs scrub status /mntver o status do scrub Btrfs para cada um dos dispositivos

bash btrfs scrub status -d / data btrfs scrub cancel / dataPara retomar o processo de depuração do Btrfs que você cancelou ou pausou:

btrfs scrub resume / data

Listando os subvolumes:

bash btrfs subvolume list /ReportsCriando um instantâneo dos subvolumes:

Aqui, estamos criando um instantâneo de leitura e gravação chamado snap de marketing do subvolume de marketing.

bash btrfs subvolume snapshot /Reports/marketing /Reports/marketing-snapAlém disso, você pode criar um instantâneo somente leitura usando o sinalizador -r conforme mostrado. O marketing-rosnap é um instantâneo somente leitura do subvolume de marketing

bash btrfs subvolume snapshot -r /Reports/marketing /Reports/marketing-rosnapForçar a sincronização do sistema de arquivos usando o utilitário 'sync'

Para forçar a sincronização do sistema de arquivos, invoque a opção de sincronização conforme mostrado. Observe que o sistema de arquivos já deve estar montado para que o processo de sincronização continue com sucesso.

bash btrfs filsystem sync /ReportsPara excluir o dispositivo do sistema de arquivos, use o comando device delete conforme mostrado.

bash btrfs device delete /dev/sdc /ReportsPara sondar o status de um scrub, use o comando scrub status com a opção -dR .

bash btrfs scrub status -dR / RelatóriosPara cancelar a execução do scrub, use o comando scrub cancel .

bash $ sudo btrfs scrub cancel / ReportsPara retomar ou continuar com uma depuração interrompida anteriormente, execute o comando de cancelamento de depuração

bash sudo btrfs scrub resume /Reportsmostra o uso do dispositivo de armazenamento:

btrfs filesystem usage /data

Para distribuir os dados, metadados e dados do sistema em todos os dispositivos de armazenamento do RAID (incluindo o dispositivo de armazenamento recém-adicionado) montados no diretório /data , execute o seguinte comando:

sudo btrfs balance start --full-balance /data

Pode demorar um pouco para espalhar os dados, metadados e dados do sistema em todos os dispositivos de armazenamento do RAID se ele contiver muitos dados.

Opções importantes de montagem Btrfs

Nesta seção, vou explicar algumas das importantes opções de montagem do Btrfs. Então vamos começar.

As opções de montagem Btrfs mais importantes são:

**1. acl e noacl

**ACL gerencia permissões de usuários e grupos para os arquivos/diretórios do sistema de arquivos Btrfs.

A opção de montagem acl Btrfs habilita ACL. Para desabilitar a ACL, você pode usar a opção de montagem noacl .

Por padrão, a ACL está habilitada. Portanto, o sistema de arquivos Btrfs usa a opção de montagem acl por padrão.

**2. autodefrag e noautodefrag

**Desfragmentar um sistema de arquivos Btrfs melhorará o desempenho do sistema de arquivos reduzindo a fragmentação de dados.

A opção de montagem autodefrag permite a desfragmentação automática do sistema de arquivos Btrfs.

A opção de montagem noautodefrag desativa a desfragmentação automática do sistema de arquivos Btrfs.

Por padrão, a desfragmentação automática está desabilitada. Portanto, o sistema de arquivos Btrfs usa a opção de montagem noautodefrag por padrão.

**3. compactar e compactar-forçar

**Controla a compactação de dados no nível do sistema de arquivos do sistema de arquivos Btrfs.

A opção compactar compacta apenas os arquivos que valem a pena compactar (se compactar o arquivo economizar espaço em disco).

A opção compress-force compacta todos os arquivos do sistema de arquivos Btrfs, mesmo que a compactação do arquivo aumente seu tamanho.

O sistema de arquivos Btrfs suporta muitos algoritmos de compactação e cada um dos algoritmos de compactação possui diferentes níveis de compactação.

Os algoritmos de compactação suportados pelo Btrfs são: lzo , zlib (nível 1 a 9) e zstd (nível 1 a 15).

Você pode especificar qual algoritmo de compactação usar para o sistema de arquivos Btrfs com uma das seguintes opções de montagem:

- compress=algoritmo:nível

- compress-force=algoritmo:nível

Para obter mais informações, consulte meu artigo Como habilitar a compactação do sistema de arquivos Btrfs .

**4. subvol e subvolid

**Estas opções de montagem são usadas para montar separadamente um subvolume específico de um sistema de arquivos Btrfs.

A opção de montagem subvol é usada para montar o subvolume de um sistema de arquivos Btrfs usando seu caminho relativo.

A opção de montagem subvolid é usada para montar o subvolume de um sistema de arquivos Btrfs usando o ID do subvolume.

Para obter mais informações, consulte meu artigo Como criar e montar subvolumes Btrfs .

**5. dispositivo

A opção de montagem de dispositivo** é usada no sistema de arquivos Btrfs de vários dispositivos ou RAID Btrfs.

Em alguns casos, o sistema operacional pode falhar ao detectar os dispositivos de armazenamento usados em um sistema de arquivos Btrfs de vários dispositivos ou RAID Btrfs. Nesses casos, você pode usar a opção de montagem do dispositivo para especificar os dispositivos que deseja usar para o sistema de arquivos de vários dispositivos Btrfs ou RAID.

Você pode usar a opção de montagem de dispositivo várias vezes para carregar diferentes dispositivos de armazenamento para o sistema de arquivos de vários dispositivos Btrfs ou RAID.

Você pode usar o nome do dispositivo (ou seja, sdb , sdc ) ou UUID , UUID_SUB ou PARTUUID do dispositivo de armazenamento com a opção de montagem do dispositivo para identificar o dispositivo de armazenamento.

Por exemplo,

- dispositivo=/dev/sdb

- dispositivo=/dev/sdb,dispositivo=/dev/sdc

- dispositivo=UUID_SUB=490a263d-eb9a-4558-931e-998d4d080c5d

- device=UUID_SUB=490a263d-eb9a-4558-931e-998d4d080c5d,device=UUID_SUB=f7ce4875-0874-436a-b47d-3edef66d3424

**6. degraded

A opção de montagem degradada** permite que um RAID Btrfs seja montado com menos dispositivos de armazenamento do que o perfil RAID requer.

Por exemplo, o perfil raid1 requer a presença de 2 dispositivos de armazenamento. Se um dos dispositivos de armazenamento não estiver disponível em qualquer caso, você usa a opção de montagem degradada para montar o RAID mesmo que 1 de 2 dispositivos de armazenamento esteja disponível.

**7. commit

A opção commit** mount é usada para definir o intervalo (em segundos) dentro do qual os dados serão gravados no dispositivo de armazenamento.

O padrão é definido como 30 segundos.

Para definir o intervalo de confirmação para 15 segundos, você pode usar a opção de montagem commit=15 (digamos).

**8. ssd e nossd

A opção de montagem ssd** informa ao sistema de arquivos Btrfs que o sistema de arquivos está usando um dispositivo de armazenamento SSD, e o sistema de arquivos Btrfs faz a otimização SSD necessária.

A opção de montagem nossd desativa a otimização do SSD.

O sistema de arquivos Btrfs detecta automaticamente se um SSD é usado para o sistema de arquivos Btrfs. Se um SSD for usado, a opção de montagem de SSD será habilitada. Caso contrário, a opção de montagem nossd é habilitada.

**9. ssd_spread e nossd_spread

A opção de montagem ssd_spread** tenta alocar grandes blocos contínuos de espaço não utilizado do SSD. Esse recurso melhora o desempenho de SSDs de baixo custo (baratos).

A opção de montagem nossd_spread desativa o recurso ssd_spread .

O sistema de arquivos Btrfs detecta automaticamente se um SSD é usado para o sistema de arquivos Btrfs. Se um SSD for usado, a opção de montagem ssd_spread será habilitada. Caso contrário, a opção de montagem nossd_spread é habilitada.

**10. descarte e nodiscard

Se você estiver usando um SSD que suporte TRIM enfileirado assíncrono (SATA rev3.1), a opção de montagem de descarte** permitirá o descarte de blocos de arquivos liberados. Isso melhorará o desempenho do SSD.

Se o SSD não suportar TRIM enfileirado assíncrono, a opção de montagem de descarte prejudicará o desempenho do SSD. Nesse caso, a opção de montagem nodiscard deve ser usada.

Por padrão, a opção de montagem nodiscard é usada.

**11. norecovery

Se a opção de montagem norecovery** for usada, o sistema de arquivos Btrfs não tentará executar a operação de recuperação de dados no momento da montagem.

**12. usebackuproot e nousebackuproot

Se a opção de montagem usebackuproot for usada, o sistema de arquivos Btrfs tentará recuperar qualquer raiz de árvore ruim/corrompida no momento da montagem. O sistema de arquivos Btrfs pode armazenar várias raízes de árvore no sistema de arquivos. A opção de montagem usebackuproot** procurará uma boa raiz de árvore e usará a primeira boa que encontrar.

A opção de montagem nousebackuproot não verificará ou recuperará raízes de árvore inválidas/corrompidas no momento da montagem. Este é o comportamento padrão do sistema de arquivos Btrfs.

**13. space_cache, space_cache=version, nospace_cache e clear_cache

A opção de montagem space_cache** é usada para controlar o cache de espaço livre. O cache de espaço livre é usado para melhorar o desempenho da leitura do espaço livre do grupo de blocos do sistema de arquivos Btrfs na memória (RAM).

O sistema de arquivos Btrfs suporta 2 versões do cache de espaço livre: v1 (padrão) e v2

O mecanismo de cache de espaço livre v2 melhora o desempenho de sistemas de arquivos grandes (tamanho de vários terabytes).

Você pode usar a opção de montagem space_cache=v1 para definir a v1 do cache de espaço livre e a opção de montagem space_cache=v2 para definir a v2 do cache de espaço livre.

A opção de montagem clear_cache é usada para limpar o cache de espaço livre.

Quando o cache de espaço livre v2 é criado, o cache deve ser limpo para criar um cache de espaço livre v1 .

Portanto, para usar o cache de espaço livre v1 após a criação do cache de espaço livre v2 , as opções de montagem clear_cache e space_cache=v1 devem ser combinadas: clear_cache,space_cache=v1

A opção de montagem nospace_cache é usada para desabilitar o cache de espaço livre.

Para desabilitar o cache de espaço livre após a criação do cache v1 ou v2 , as opções de montagem nospace_cache e clear_cache devem ser combinadas: clear_cache,nosapce_cache

**14. skip_balance

Por padrão, a operação de balanceamento interrompida/pausada de um sistema de arquivos Btrfs de vários dispositivos ou RAID Btrfs será retomada automaticamente assim que o sistema de arquivos Btrfs for montado. Para desabilitar a retomada automática da operação de equilíbrio interrompido/pausado em um sistema de arquivos Btrfs de vários dispositivos ou RAID Btrfs, você pode usar a opção de montagem skip_balance .**

**15. datacow e nodatacow

A opção datacow** mount habilita o recurso Copy-on-Write (CoW) do sistema de arquivos Btrfs. É o comportamento padrão.

Se você deseja desabilitar o recurso Copy-on-Write (CoW) do sistema de arquivos Btrfs para os arquivos recém-criados, monte o sistema de arquivos Btrfs com a opção de montagem nodatacow .

**16. datasum e nodatasum

A opção datasum** mount habilita a soma de verificação de dados para arquivos recém-criados do sistema de arquivos Btrfs. Este é o comportamento padrão.

Se você não quiser que o sistema de arquivos Btrfs faça a soma de verificação dos dados dos arquivos recém-criados, monte o sistema de arquivos Btrfs com a opção de montagem nodatasum .

Perfis Btrfs

Um perfil Btrfs é usado para informar ao sistema de arquivos Btrfs quantas cópias dos dados/metadados devem ser mantidas e quais níveis de RAID devem ser usados para os dados/metadados. O sistema de arquivos Btrfs contém muitos perfis. Entendê-los o ajudará a configurar um RAID Btrfs da maneira que você deseja.

Os perfis Btrfs disponíveis são os seguintes:

single : Se o perfil único for usado para os dados/metadados, apenas uma cópia dos dados/metadados será armazenada no sistema de arquivos, mesmo se você adicionar vários dispositivos de armazenamento ao sistema de arquivos. Assim, 100% do espaço em disco de cada um dos dispositivos de armazenamento adicionados ao sistema de arquivos pode ser utilizado.

dup : Se o perfil dup for usado para os dados/metadados, cada um dos dispositivos de armazenamento adicionados ao sistema de arquivos manterá duas cópias dos dados/metadados. Assim, 50% do espaço em disco de cada um dos dispositivos de armazenamento adicionados ao sistema de arquivos pode ser utilizado.

raid0 : No perfil raid0 , os dados/metadados serão divididos igualmente em todos os dispositivos de armazenamento adicionados ao sistema de arquivos. Nesta configuração, não haverá dados/metadados redundantes (duplicados). Assim, 100% do espaço em disco de cada um dos dispositivos de armazenamento adicionados ao sistema de arquivos pode ser usado. Se, em qualquer caso, um dos dispositivos de armazenamento falhar, todo o sistema de arquivos será corrompido. Você precisará de pelo menos dois dispositivos de armazenamento para configurar o sistema de arquivos Btrfs no perfil raid0 .

raid1 : No perfil raid1 , duas cópias dos dados/metadados serão armazenadas nos dispositivos de armazenamento adicionados ao sistema de arquivos. Nesta configuração, a matriz RAID pode sobreviver a uma falha de unidade. Mas você pode usar apenas 50% do espaço total em disco. Você precisará de pelo menos dois dispositivos de armazenamento para configurar o sistema de arquivos Btrfs no perfil raid1 .

raid1c3 : No perfil raid1c3 , três cópias dos dados/metadados serão armazenadas nos dispositivos de armazenamento adicionados ao sistema de arquivos. Nesta configuração, a matriz RAID pode sobreviver a duas falhas de unidade, mas você pode usar apenas 33% do espaço total em disco. Você precisará de pelo menos três dispositivos de armazenamento para configurar o sistema de arquivos Btrfs no perfil raid1c3 .

raid1c4 : No perfil raid1c4 , quatro cópias dos dados/metadados serão armazenadas nos dispositivos de armazenamento adicionados ao sistema de arquivos. Nesta configuração, a matriz RAID pode sobreviver a três falhas de unidade, mas você pode usar apenas 25% do espaço total em disco. Você precisará de pelo menos quatro dispositivos de armazenamento para configurar o sistema de arquivos Btrfs no perfil raid1c4 .

raid10 : No perfil raid10 , duas cópias dos dados/metadados serão armazenadas nos dispositivos de armazenamento adicionados ao sistema de arquivos, como no perfil raid1 . Além disso, os dados/metadados serão divididos entre os dispositivos de armazenamento, como no perfil raid0 .

O perfil raid10 é um híbrido dos perfis raid1 e raid0 . Alguns dos dispositivos de armazenamento formam arrays raid1 e alguns desses arrays raid1 são usados para formar um array raid0 . Em uma configuração raid10 , o sistema de arquivos pode sobreviver a uma única falha de unidade em cada uma das matrizes raid1 .

Você pode usar 50% do espaço total em disco na configuração raid10 . Você precisará de pelo menos quatro dispositivos de armazenamento para configurar o sistema de arquivos Btrfs no perfil raid10 .

raid5 : No perfil raid5 , uma cópia dos dados/metadados será dividida entre os dispositivos de armazenamento. Uma única paridade será calculada e distribuída entre os dispositivos de armazenamento do array RAID.

Em uma configuração raid5 , o sistema de arquivos pode sobreviver a uma única falha de unidade. Se uma unidade falhar, você pode adicionar uma nova unidade ao sistema de arquivos e os dados perdidos serão calculados a partir da paridade distribuída das unidades em execução.

Você pode usar 1 00x(N-1)/N % do total de espaços em disco na configuração raid5 . Aqui, N é o número de dispositivos de armazenamento adicionados ao sistema de arquivos. Você precisará de pelo menos três dispositivos de armazenamento para configurar o sistema de arquivos Btrfs no perfil raid5 .

raid6 : No perfil raid6 , uma cópia dos dados/metadados será dividida entre os dispositivos de armazenamento. Duas paridades serão calculadas e distribuídas entre os dispositivos de armazenamento do array RAID.

Em uma configuração raid6 , o sistema de arquivos pode sobreviver a duas falhas de unidade ao mesmo tempo. Se uma unidade falhar, você poderá adicionar uma nova unidade ao sistema de arquivos e os dados perdidos serão calculados a partir das duas paridades distribuídas das unidades em execução.

Você pode usar 100x(N-2)/N % do espaço total em disco na configuração raid6 . Aqui, N é o número de dispositivos de armazenamento adicionados ao sistema de arquivos. Você precisará de pelo menos quatro dispositivos de armazenamento para configurar o sistema de arquivos Btrfs no perfil raid6 .

-

@ 9223d2fa:b57e3de7

2025-04-15 02:54:00

@ 9223d2fa:b57e3de7

2025-04-15 02:54:0012,600 steps

-

@ 0fa80bd3:ea7325de

2025-04-09 21:19:39

@ 0fa80bd3:ea7325de

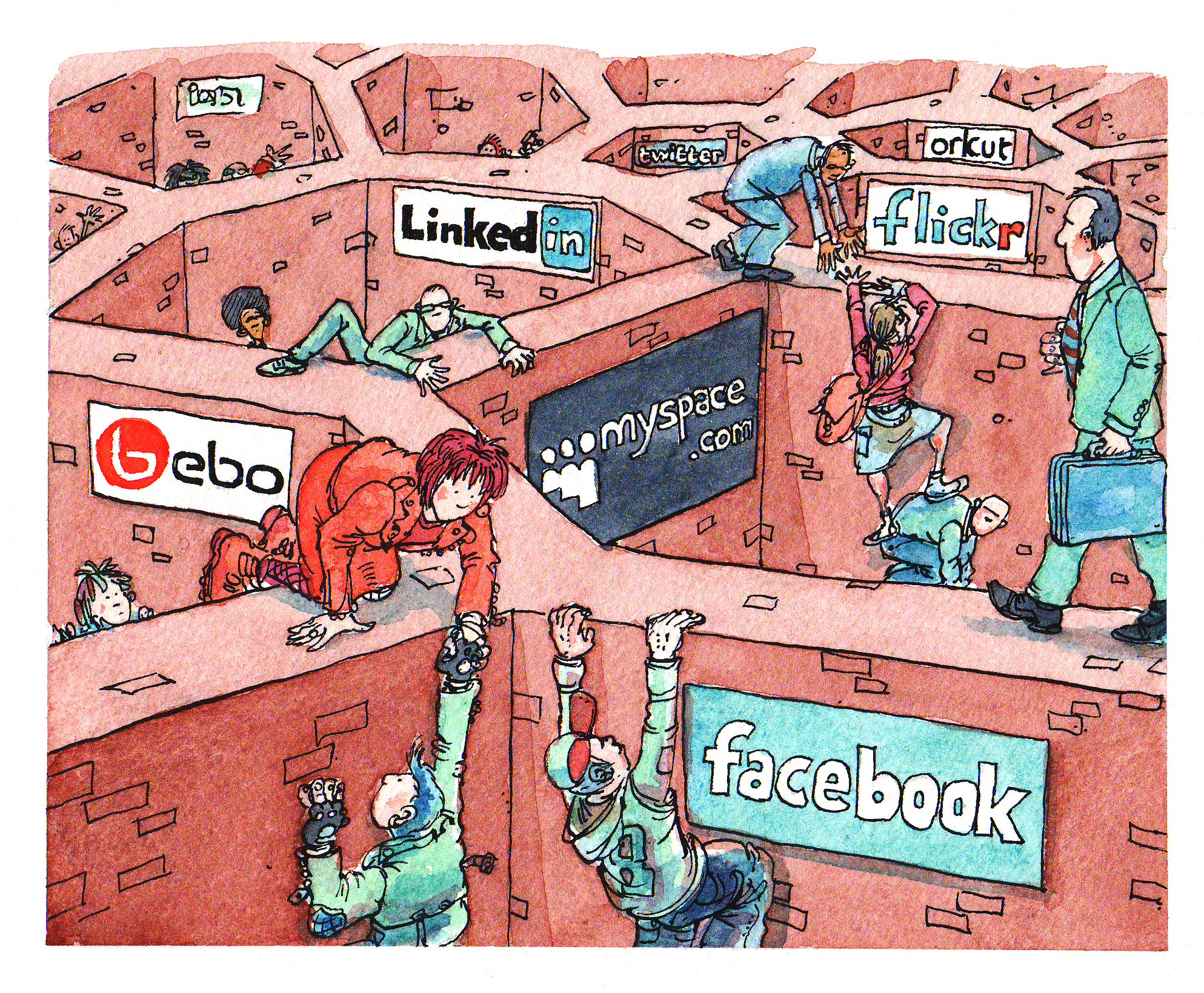

2025-04-09 21:19:39DAOs promised decentralization. They offered a system where every member could influence a project's direction, where money and power were transparently distributed, and decisions were made through voting. All of it recorded immutably on the blockchain, free from middlemen.

But something didn’t work out. In practice, most DAOs haven’t evolved into living, self-organizing organisms. They became something else: clubs where participation is unevenly distributed. Leaders remained - only now without formal titles. They hold influence through control over communications, task framing, and community dynamics. Centralization still exists, just wrapped in a new package.

But there's a second, less obvious problem. Crowds can’t create strategy. In DAOs, people vote for what "feels right to the majority." But strategy isn’t about what feels good - it’s about what’s necessary. Difficult, unpopular, yet forward-looking decisions often fail when put to a vote. A founder’s vision is a risk. But in healthy teams, it’s that risk that drives progress. In DAOs, risk is almost always diluted until it becomes something safe and vague.

Instead of empowering leaders, DAOs often neutralize them. This is why many DAOs resemble consensus machines. Everyone talks, debates, and participates, but very little actually gets done. One person says, “Let’s jump,” and five others respond, “Let’s discuss that first.” This dynamic might work for open forums, but not for action.

Decentralization works when there’s trust and delegation, not just voting. Until DAOs develop effective systems for assigning roles, taking ownership, and acting with flexibility, they will keep losing ground to old-fashioned startups led by charismatic founders with a clear vision.

We’ve seen this in many real-world cases. Take MakerDAO, one of the most mature and technically sophisticated DAOs. Its governance token (MKR) holders vote on everything from interest rates to protocol upgrades. While this has allowed for transparency and community involvement, the process is often slow and bureaucratic. Complex proposals stall. Strategic pivots become hard to implement. And in 2023, a controversial proposal to allocate billions to real-world assets passed only narrowly, after months of infighting - highlighting how vision and execution can get stuck in the mud of distributed governance.

On the other hand, Uniswap DAO, responsible for the largest decentralized exchange, raised governance participation only after launching a delegation system where token holders could choose trusted representatives. Still, much of the activity is limited to a small group of active contributors. The vast majority of token holders remain passive. This raises the question: is it really community-led, or just a formalized power structure with lower transparency?

Then there’s ConstitutionDAO, an experiment that went viral. It raised over $40 million in days to try and buy a copy of the U.S. Constitution. But despite the hype, the DAO failed to win the auction. Afterwards, it struggled with refund logistics, communication breakdowns, and confusion over governance. It was a perfect example of collective enthusiasm without infrastructure or planning - proof that a DAO can raise capital fast but still lack cohesion.

Not all efforts have failed. Projects like Gitcoin DAO have made progress by incentivizing small, individual contributions. Their quadratic funding mechanism rewards projects based on the number of contributors, not just the size of donations, helping to elevate grassroots initiatives. But even here, long-term strategy often falls back on a core group of organizers rather than broad community consensus.

The pattern is clear: when the stakes are low or the tasks are modular, DAOs can coordinate well. But when bold moves are needed—when someone has to take responsibility and act under uncertainty DAOs often freeze. In the name of consensus, they lose momentum.

That’s why the organization of the future can’t rely purely on decentralization. It must encourage individual initiative and the ability to take calculated risks. People need to see their contribution not just as a vote, but as a role with clear actions and expected outcomes. When the situation demands, they should be empowered to act first and present the results to the community afterwards allowing for both autonomy and accountability. That’s not a flaw in the system. It’s how real progress happens.

-

@ c066aac5:6a41a034

2025-04-05 16:58:58

@ c066aac5:6a41a034

2025-04-05 16:58:58I’m drawn to extremities in art. The louder, the bolder, the more outrageous, the better. Bold art takes me out of the mundane into a whole new world where anything and everything is possible. Having grown up in the safety of the suburban midwest, I was a bit of a rebellious soul in search of the satiation that only came from the consumption of the outrageous. My inclination to find bold art draws me to NOSTR, because I believe NOSTR can be the place where the next generation of artistic pioneers go to express themselves. I also believe that as much as we are able, were should invite them to come create here.

My Background: A Small Side Story

My father was a professional gamer in the 80s, back when there was no money or glory in the avocation. He did get a bit of spotlight though after the fact: in the mid 2000’s there were a few parties making documentaries about that era of gaming as well as current arcade events (namely 2007’sChasing GhostsandThe King of Kong: A Fistful of Quarters). As a result of these documentaries, there was a revival in the arcade gaming scene. My family attended events related to the documentaries or arcade gaming and I became exposed to a lot of things I wouldn’t have been able to find. The producer ofThe King of Kong: A Fistful of Quarters had previously made a documentary calledNew York Dollwhich was centered around the life of bassist Arthur Kane. My 12 year old mind was blown: The New York Dolls were a glam-punk sensation dressed in drag. The music was from another planet. Johnny Thunders’ guitar playing was like Chuck Berry with more distortion and less filter. Later on I got to meet the Galaga record holder at the time, Phil Day, in Ottumwa Iowa. Phil is an Australian man of high intellect and good taste. He exposed me to great creators such as Nick Cave & The Bad Seeds, Shakespeare, Lou Reed, artists who created things that I had previously found inconceivable.

I believe this time period informed my current tastes and interests, but regrettably I think it also put coals on the fire of rebellion within. I stopped taking my parents and siblings seriously, the Christian faith of my family (which I now hold dearly to) seemed like a mundane sham, and I felt I couldn’t fit in with most people because of my avant-garde tastes. So I write this with the caveat that there should be a way to encourage these tastes in children without letting them walk down the wrong path. There is nothing inherently wrong with bold art, but I’d advise parents to carefully find ways to cultivate their children’s tastes without completely shutting them down and pushing them away as a result. My parents were very loving and patient during this time; I thank God for that.

With that out of the way, lets dive in to some bold artists:

Nicolas Cage: Actor

There is an excellent video by Wisecrack on Nicolas Cage that explains him better than I will, which I will linkhere. Nicolas Cage rejects the idea that good acting is tied to mere realism; all of his larger than life acting decisions are deliberate choices. When that clicked for me, I immediately realized the man is a genius. He borrows from Kabuki and German Expressionism, art forms that rely on exaggeration to get the message across. He has even created his own acting style, which he calls Nouveau Shamanic. He augments his imagination to go from acting to being. Rather than using the old hat of method acting, he transports himself to a new world mentally. The projects he chooses to partake in are based on his own interests or what he considers would be a challenge (making a bad script good for example). Thus it doesn’t matter how the end result comes out; he has already achieved his goal as an artist. Because of this and because certain directors don’t know how to use his talents, he has a noticeable amount of duds in his filmography. Dig around the duds, you’ll find some pure gold. I’d personally recommend the filmsPig, Joe, Renfield, and his Christmas film The Family Man.

Nick Cave: Songwriter

What a wild career this man has had! From the apocalyptic mayhem of his band The Birthday Party to the pensive atmosphere of his albumGhosteen, it seems like Nick Cave has tried everything. I think his secret sauce is that he’s always working. He maintains an excellent newsletter calledThe Red Hand Files, he has written screenplays such asLawless, he has written books, he has made great film scores such asThe Assassination of Jesse James by the Coward Robert Ford, the man is religiously prolific. I believe that one of the reasons he is prolific is that he’s not afraid to experiment. If he has an idea, he follows it through to completion. From the albumMurder Ballads(which is comprised of what the title suggests) to his rejected sequel toGladiator(Gladiator: Christ Killer), he doesn’t seem to be afraid to take anything on. This has led to some over the top works as well as some deeply personal works. Albums likeSkeleton TreeandGhosteenwere journeys through the grief of his son’s death. The Boatman’s Callis arguably a better break-up album than anything Taylor Swift has put out. He’s not afraid to be outrageous, he’s not afraid to offend, but most importantly he’s not afraid to be himself. Works I’d recommend include The Birthday Party’sLive 1981-82, Nick Cave & The Bad Seeds’The Boatman’s Call, and the filmLawless.

Jim Jarmusch: Director

I consider Jim’s films to be bold almost in an ironic sense: his works are bold in that they are, for the most part, anti-sensational. He has a rule that if his screenplays are criticized for a lack of action, he makes them even less eventful. Even with sensational settings his films feel very close to reality, and they demonstrate the beauty of everyday life. That's what is bold about his art to me: making the sensational grounded in reality while making everyday reality all the more special. Ghost Dog: The Way of the Samurai is about a modern-day African-American hitman who strictly follows the rules of the ancient Samurai, yet one can resonate with the humanity of a seemingly absurd character. Only Lovers Left Aliveis a vampire love story, but in the middle of a vampire romance one can see their their own relationships in a new deeply human light. Jim’s work reminds me that art reflects life, and that there is sacred beauty in seemingly mundane everyday life. I personally recommend his filmsPaterson,Down by Law, andCoffee and Cigarettes.

NOSTR: We Need Bold Art

NOSTR is in my opinion a path to a better future. In a world creeping slowly towards everything apps, I hope that the protocol where the individual owns their data wins over everything else. I love freedom and sovereignty. If NOSTR is going to win the race of everything apps, we need more than Bitcoin content. We need more than shirtless bros paying for bananas in foreign countries and exercising with girls who have seductive accents. Common people cannot see themselves in such a world. NOSTR needs to catch the attention of everyday people. I don’t believe that this can be accomplished merely by introducing more broadly relevant content; people are searching for content that speaks to them. I believe that NOSTR can and should attract artists of all kinds because NOSTR is one of the few places on the internet where artists can express themselves fearlessly. Getting zaps from NOSTR’s value-for-value ecosystem has far less friction than crowdfunding a creative project or pitching investors that will irreversibly modify an artist’s vision. Having a place where one can post their works without fear of censorship should be extremely enticing. Having a place where one can connect with fellow humans directly as opposed to a sea of bots should seem like the obvious solution. If NOSTR can become a safe haven for artists to express themselves and spread their work, I believe that everyday people will follow. The banker whose stressful job weighs on them will suddenly find joy with an original meme made by a great visual comedian. The programmer for a healthcare company who is drowning in hopeless mundanity could suddenly find a new lust for life by hearing the song of a musician who isn’t afraid to crowdfund their their next project by putting their lighting address on the streets of the internet. The excel guru who loves independent film may find that NOSTR is the best way to support non corporate movies. My closing statement: continue to encourage the artists in your life as I’m sure you have been, but while you’re at it give them the purple pill. You may very well be a part of building a better future.

-

@ 04c915da:3dfbecc9

2025-03-26 20:54:33

@ 04c915da:3dfbecc9

2025-03-26 20:54:33Capitalism is the most effective system for scaling innovation. The pursuit of profit is an incredibly powerful human incentive. Most major improvements to human society and quality of life have resulted from this base incentive. Market competition often results in the best outcomes for all.

That said, some projects can never be monetized. They are open in nature and a business model would centralize control. Open protocols like bitcoin and nostr are not owned by anyone and if they were it would destroy the key value propositions they provide. No single entity can or should control their use. Anyone can build on them without permission.

As a result, open protocols must depend on donation based grant funding from the people and organizations that rely on them. This model works but it is slow and uncertain, a grind where sustainability is never fully reached but rather constantly sought. As someone who has been incredibly active in the open source grant funding space, I do not think people truly appreciate how difficult it is to raise charitable money and deploy it efficiently.

Projects that can be monetized should be. Profitability is a super power. When a business can generate revenue, it taps into a self sustaining cycle. Profit fuels growth and development while providing projects independence and agency. This flywheel effect is why companies like Google, Amazon, and Apple have scaled to global dominance. The profit incentive aligns human effort with efficiency. Businesses must innovate, cut waste, and deliver value to survive.

Contrast this with non monetized projects. Without profit, they lean on external support, which can dry up or shift with donor priorities. A profit driven model, on the other hand, is inherently leaner and more adaptable. It is not charity but survival. When survival is tied to delivering what people want, scale follows naturally.

The real magic happens when profitable, sustainable businesses are built on top of open protocols and software. Consider the many startups building on open source software stacks, such as Start9, Mempool, and Primal, offering premium services on top of the open source software they build out and maintain. Think of companies like Block or Strike, which leverage bitcoin’s open protocol to offer their services on top. These businesses amplify the open software and protocols they build on, driving adoption and improvement at a pace donations alone could never match.

When you combine open software and protocols with profit driven business the result are lean, sustainable companies that grow faster and serve more people than either could alone. Bitcoin’s network, for instance, benefits from businesses that profit off its existence, while nostr will expand as developers monetize apps built on the protocol.

Capitalism scales best because competition results in efficiency. Donation funded protocols and software lay the groundwork, while market driven businesses build on top. The profit incentive acts as a filter, ensuring resources flow to what works, while open systems keep the playing field accessible, empowering users and builders. Together, they create a flywheel of innovation, growth, and global benefit.

-

@ 04c915da:3dfbecc9

2025-03-25 17:43:44

@ 04c915da:3dfbecc9

2025-03-25 17:43:44One of the most common criticisms leveled against nostr is the perceived lack of assurance when it comes to data storage. Critics argue that without a centralized authority guaranteeing that all data is preserved, important information will be lost. They also claim that running a relay will become prohibitively expensive. While there is truth to these concerns, they miss the mark. The genius of nostr lies in its flexibility, resilience, and the way it harnesses human incentives to ensure data availability in practice.

A nostr relay is simply a server that holds cryptographically verifiable signed data and makes it available to others. Relays are simple, flexible, open, and require no permission to run. Critics are right that operating a relay attempting to store all nostr data will be costly. What they miss is that most will not run all encompassing archive relays. Nostr does not rely on massive archive relays. Instead, anyone can run a relay and choose to store whatever subset of data they want. This keeps costs low and operations flexible, making relay operation accessible to all sorts of individuals and entities with varying use cases.

Critics are correct that there is no ironclad guarantee that every piece of data will always be available. Unlike bitcoin where data permanence is baked into the system at a steep cost, nostr does not promise that every random note or meme will be preserved forever. That said, in practice, any data perceived as valuable by someone will likely be stored and distributed by multiple entities. If something matters to someone, they will keep a signed copy.

Nostr is the Streisand Effect in protocol form. The Streisand effect is when an attempt to suppress information backfires, causing it to spread even further. With nostr, anyone can broadcast signed data, anyone can store it, and anyone can distribute it. Try to censor something important? Good luck. The moment it catches attention, it will be stored on relays across the globe, copied, and shared by those who find it worth keeping. Data deemed important will be replicated across servers by individuals acting in their own interest.

Nostr’s distributed nature ensures that the system does not rely on a single point of failure or a corporate overlord. Instead, it leans on the collective will of its users. The result is a network where costs stay manageable, participation is open to all, and valuable verifiable data is stored and distributed forever.

-

@ 21335073:a244b1ad

2025-03-18 20:47:50

@ 21335073:a244b1ad

2025-03-18 20:47:50Warning: This piece contains a conversation about difficult topics. Please proceed with caution.

TL;DR please educate your children about online safety.

Julian Assange wrote in his 2012 book Cypherpunks, “This book is not a manifesto. There isn’t time for that. This book is a warning.” I read it a few times over the past summer. Those opening lines definitely stood out to me. I wish we had listened back then. He saw something about the internet that few had the ability to see. There are some individuals who are so close to a topic that when they speak, it’s difficult for others who aren’t steeped in it to visualize what they’re talking about. I didn’t read the book until more recently. If I had read it when it came out, it probably would have sounded like an unknown foreign language to me. Today it makes more sense.

This isn’t a manifesto. This isn’t a book. There is no time for that. It’s a warning and a possible solution from a desperate and determined survivor advocate who has been pulling and unraveling a thread for a few years. At times, I feel too close to this topic to make any sense trying to convey my pathway to my conclusions or thoughts to the general public. My hope is that if nothing else, I can convey my sense of urgency while writing this. This piece is a watchman’s warning.

When a child steps online, they are walking into a new world. A new reality. When you hand a child the internet, you are handing them possibilities—good, bad, and ugly. This is a conversation about lowering the potential of negative outcomes of stepping into that new world and how I came to these conclusions. I constantly compare the internet to the road. You wouldn’t let a young child run out into the road with no guidance or safety precautions. When you hand a child the internet without any type of guidance or safety measures, you are allowing them to play in rush hour, oncoming traffic. “Look left, look right for cars before crossing.” We almost all have been taught that as children. What are we taught as humans about safety before stepping into a completely different reality like the internet? Very little.

I could never really figure out why many folks in tech, privacy rights activists, and hackers seemed so cold to me while talking about online child sexual exploitation. I always figured that as a survivor advocate for those affected by these crimes, that specific, skilled group of individuals would be very welcoming and easy to talk to about such serious topics. I actually had one hacker laugh in my face when I brought it up while I was looking for answers. I thought maybe this individual thought I was accusing them of something I wasn’t, so I felt bad for asking. I was constantly extremely disappointed and would ask myself, “Why don’t they care? What could I say to make them care more? What could I say to make them understand the crisis and the level of suffering that happens as a result of the problem?”

I have been serving minor survivors of online child sexual exploitation for years. My first case serving a survivor of this specific crime was in 2018—a 13-year-old girl sexually exploited by a serial predator on Snapchat. That was my first glimpse into this side of the internet. I won a national award for serving the minor survivors of Twitter in 2023, but I had been working on that specific project for a few years. I was nominated by a lawyer representing two survivors in a legal battle against the platform. I’ve never really spoken about this before, but at the time it was a choice for me between fighting Snapchat or Twitter. I chose Twitter—or rather, Twitter chose me. I heard about the story of John Doe #1 and John Doe #2, and I was so unbelievably broken over it that I went to war for multiple years. I was and still am royally pissed about that case. As far as I was concerned, the John Doe #1 case proved that whatever was going on with corporate tech social media was so out of control that I didn’t have time to wait, so I got to work. It was reading the messages that John Doe #1 sent to Twitter begging them to remove his sexual exploitation that broke me. He was a child begging adults to do something. A passion for justice and protecting kids makes you do wild things. I was desperate to find answers about what happened and searched for solutions. In the end, the platform Twitter was purchased. During the acquisition, I just asked Mr. Musk nicely to prioritize the issue of detection and removal of child sexual exploitation without violating digital privacy rights or eroding end-to-end encryption. Elon thanked me multiple times during the acquisition, made some changes, and I was thanked by others on the survivors’ side as well.

I still feel that even with the progress made, I really just scratched the surface with Twitter, now X. I left that passion project when I did for a few reasons. I wanted to give new leadership time to tackle the issue. Elon Musk made big promises that I knew would take a while to fulfill, but mostly I had been watching global legislation transpire around the issue, and frankly, the governments are willing to go much further with X and the rest of corporate tech than I ever would. My work begging Twitter to make changes with easier reporting of content, detection, and removal of child sexual exploitation material—without violating privacy rights or eroding end-to-end encryption—and advocating for the minor survivors of the platform went as far as my principles would have allowed. I’m grateful for that experience. I was still left with a nagging question: “How did things get so bad with Twitter where the John Doe #1 and John Doe #2 case was able to happen in the first place?” I decided to keep looking for answers. I decided to keep pulling the thread.

I never worked for Twitter. This is often confusing for folks. I will say that despite being disappointed in the platform’s leadership at times, I loved Twitter. I saw and still see its value. I definitely love the survivors of the platform, but I also loved the platform. I was a champion of the platform’s ability to give folks from virtually around the globe an opportunity to speak and be heard.

I want to be clear that John Doe #1 really is my why. He is the inspiration. I am writing this because of him. He represents so many globally, and I’m still inspired by his bravery. One child’s voice begging adults to do something—I’m an adult, I heard him. I’d go to war a thousand more lifetimes for that young man, and I don’t even know his name. Fighting has been personally dark at times; I’m not even going to try to sugarcoat it, but it has been worth it.

The data surrounding the very real crime of online child sexual exploitation is available to the public online at any time for anyone to see. I’d encourage you to go look at the data for yourself. I believe in encouraging folks to check multiple sources so that you understand the full picture. If you are uncomfortable just searching around the internet for information about this topic, use the terms “CSAM,” “CSEM,” “SG-CSEM,” or “AI Generated CSAM.” The numbers don’t lie—it’s a nightmare that’s out of control. It’s a big business. The demand is high, and unfortunately, business is booming. Organizations collect the data, tech companies often post their data, governments report frequently, and the corporate press has covered a decent portion of the conversation, so I’m sure you can find a source that you trust.

Technology is changing rapidly, which is great for innovation as a whole but horrible for the crime of online child sexual exploitation. Those wishing to exploit the vulnerable seem to be adapting to each technological change with ease. The governments are so far behind with tackling these issues that as I’m typing this, it’s borderline irrelevant to even include them while speaking about the crime or potential solutions. Technology is changing too rapidly, and their old, broken systems can’t even dare to keep up. Think of it like the governments’ “War on Drugs.” Drugs won. In this case as well, the governments are not winning. The governments are talking about maybe having a meeting on potentially maybe having legislation around the crimes. The time to have that meeting would have been many years ago. I’m not advocating for governments to legislate our way out of this. I’m on the side of educating and innovating our way out of this.