-

@ c230edd3:8ad4a712

2025-04-11 16:02:15

Chef's notes

Wildly enough, this is delicious. It's sweet and savory.

(I copied this recipe off of a commercial cheese maker's site, just FYI)

I hadn't fully frozen the ice cream when I took the picture shown. This is fresh out of the churner.

Details

- ⏲️ Prep time: 15 min

- 🍳 Cook time: 30 min

- 🍽️ Servings: 4

Ingredients

- 12 oz blue cheese

- 3 Tbsp lemon juice

- 1 c sugar

- 1 tsp salt

- 1 qt heavy cream

- 3/4 c chopped dark chocolate

Directions

- Put the blue cheese, lemon juice, sugar, and salt into a bowl

- Bring heavy cream to a boil, stirring occasionally

- Pour heavy cream over the blue cheese mix and stir until melted

- Pour into prepared ice cream maker, follow unit instructions

- Add dark chocolate halfway through the churning cycle

- Freeze until firm. Enjoy.

-

@ c230edd3:8ad4a712

2025-04-09 00:33:31

Chef's notes

I found this recipe a couple years ago and have been addicted to it since. It's incredibly easy and cheap to prep. Freeze the sausage in flat, single-serving portions so it can be cooked from frozen for a fast, flavorful, and healthy lunch or dinner. I took inspiration from the video that contained this recipe and almost always pan-fry the frozen sausage with some baby broccoli. The steam cooks the broccoli, and the fat from the sausage helps it sear while infusing it with vibrant flavor. Serve with some rice, if desired. I often use serrano peppers because of limited produce availability. They work well for a little heat and a nice flavor that is not overpowering.

Details

- ⏲️ Prep time: 25 min

- 🍳 Cook time: 15 min (only needed if cooking at time of prep)

- 🍽️ Servings: 10

Ingredients

- 4 lbs ground pork

- 12-15 cloves garlic, minced

- 6 Thai or Serrano peppers, rough chopped

- 1/4 c. lime juice

- 4 Tbsp fish sauce

- 1 Tbsp brown sugar

- 1/2 c. chopped cilantro

Directions

- Mix all ingredients in a large bowl.

- Portion and freeze, as desired.

- Sauté frozen portions in a hot frying pan with broccoli or other fresh veggies.

- Serve with rice or alone.

-

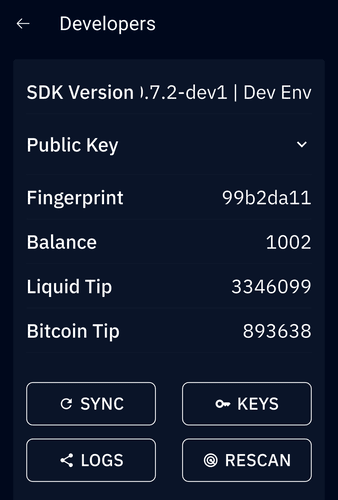

@ 00000001:b0c77eb9

2025-02-14 21:24:24

@ 00000001:b0c77eb9

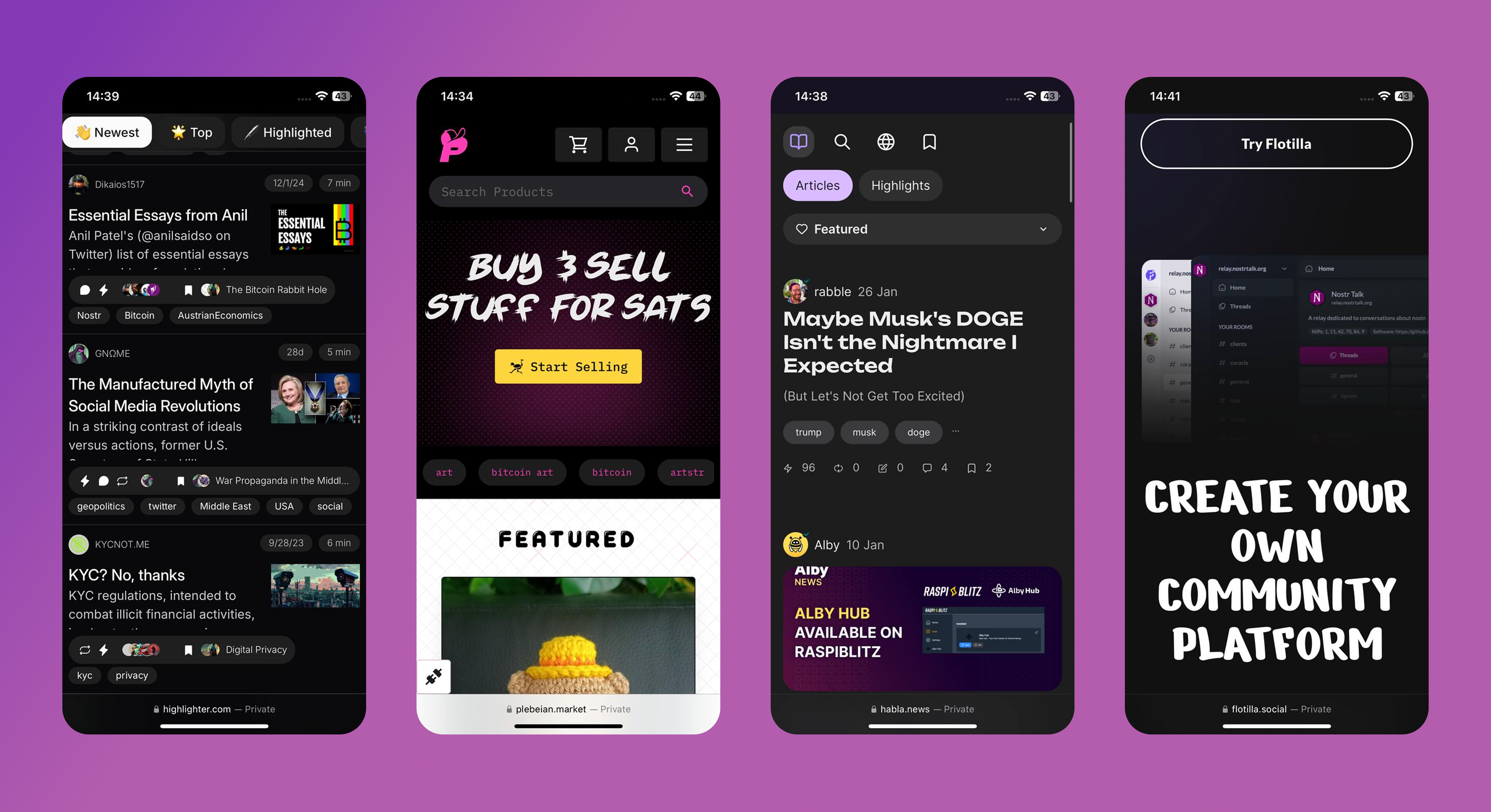

2025-02-14 21:24:24مواقع التواصل الإجتماعي العامة هي التي تتحكم بك، تتحكم بك بفرض أجندتها وتجبرك على اتباعها وتحظر وتحذف كل ما يخالفها، وحرية التعبير تنحصر في أجندتها تلك!

وخوارزمياتها الخبيثة التي لا حاجة لها، تعرض لك مايريدون منك أن تراه وتحجب ما لا يريدونك أن تراه.

في نوستر انت المتحكم، انت الذي تحدد من تتابع و انت الذي تحدد المرحلات التي تنشر منشوراتك بها.

نوستر لامركزي، بمعنى عدم وجود سلطة تتحكم ببياناتك، بياناتك موجودة في المرحلات، ولا احد يستطيع حذفها او تعديلها او حظر ظهورها.

و هذا لا ينطبق فقط على مواقع التواصل الإجتماعي العامة، بل ينطبق أيضاً على الـfediverse، في الـfediverse انت لست حر، انت تتبع الخادم الذي تستخدمه ويستطيع هذا الخادم حظر ما لا يريد ظهوره لك، لأنك لا تتواصل مع بقية الخوادم بنفسك، بل خادمك من يقوم بذلك بالنيابة عنك.

وحتى إذا كنت تمتلك خادم في شبكة الـfediverse، إذا خالفت اجندة بقية الخوادم ونظرتهم عن حرية الرأي و التعبير سوف يندرج خادمك في القائمة السوداء fediblock ولن يتمكن خادمك من التواصل مع بقية خوادم الشبكة، ستكون محصوراً بالخوادم الأخرى المحظورة كخادمك، بالتالي انت في الشبكة الأخرى من الـfediverse!

نعم، يوجد شبكتان في الكون الفدرالي fediverse شبكة الصالحين التابعين للأجندة الغربية وشبكة الطالحين الذين لا يتبعون لها، إذا تم إدراج خادمك في قائمة fediblock سوف تذهب للشبكة الأخرى!

-

@ d34e832d:383f78d0

2025-04-24 06:28:48

Operation

Central to this implementation is the utilization of Tails OS, a Debian-based live operating system designed for privacy and anonymity, alongside the Electrum Wallet, a lightweight Bitcoin wallet that provides a streamlined interface for secure Bitcoin transactions.

Additionally, hash-based verification with a tool such as QuickHash bolsters integrity checks throughout the storage process. This multifaceted approach supports end-to-end operational security (OpSec) principles while safeguarding user autonomy in the custody of digital assets.

Furthermore, the proposed methodology aligns with contemporary cybersecurity practice by prioritizing deterministic builds (the same source code always yields a bit-for-bit identical binary, so published releases can be independently verified), offline key generation to mitigate exposure to online threats, and a minimal attack surface to reduce potential vectors for exploitation.

Ultimately, this sophisticated approach presents a methodical and secure paradigm for the custody of private keys, thereby catering to the exigencies of high-assurance Bitcoin storage requirements.

1. Cold Storage Refers to Offline Storage

Cold storage refers to the offline storage of private keys used to sign Bitcoin transactions, providing the highest level of protection against network-based threats. This paper outlines a verifiable method for constructing such a storage system using the following core principles:

- Air-gapped key generation

- Open-source software

- Deterministic cryptographic tools

- Manual integrity verification

- Offline transaction signing

The method prioritizes cryptographic security, software verifiability, and minimal hardware dependency.

2. Hardware and Software Requirements

2.1 Hardware

- One 64-bit computer (laptop/desktop)

- 1 x USB Flash Drive (≥8 GB, high-quality brand recommended)

- Paper and pen (for seed phrase)

- Optional: Printer (for xpub QR export)

2.2 Software Stack

- Tails OS (latest ISO, from tails.boum.org)

- Balena Etcher (to flash ISO)

- QuickHash GUI (for SHA-256 checksum validation)

- Electrum Wallet (bundled within Tails OS)

3. System Preparation and Software Verification

3.1 Image Verification

Prior to flashing the ISO, the integrity of the Tails OS image must be cryptographically validated. Using QuickHash:

```plaintext
SHA256 (tails-amd64-<version>.iso) = <expected_hash>
```

Compare the hash output with the official hash provided on the Tails OS website. This mitigates the risk of ISO tampering or supply chain compromise.
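On a system with GNU coreutils, the same comparison can be scripted instead of read by eye. The following is a minimal sketch, not part of the original procedure: the function name `verify_sha256` is hypothetical, and the expected hash is assumed to come from a source you already trust.

```bash
# Sketch: compare a file's SHA-256 digest with an expected value.
# verify_sha256 is a hypothetical helper name, not part of any tool named above.
verify_sha256() {
  file=$1
  # Normalize the expected hash to lowercase, since published hashes may use either case.
  expected=$(printf '%s' "$2" | tr 'A-F' 'a-f')
  # sha256sum prints "<digest>  <filename>"; keep only the digest.
  actual=$(sha256sum "$file" | cut -d ' ' -f 1)
  if [ "$actual" = "$expected" ]; then
    echo "OK: $file"
  else
    echo "MISMATCH: $file" >&2
    return 1
  fi
}
```

For example, `verify_sha256 tails-amd64-<version>.iso <expected_hash>` prints `OK` on a match and returns a non-zero status otherwise, so it can gate the flashing step in a script.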

3.2 Flashing the OS

Balena Etcher is used to flash the ISO to a USB drive:

- Insert USB drive.

- Launch Balena Etcher.

- Select the verified Tails ISO.

- Flash to USB and safely eject.

4. Cold Wallet Generation Procedure

4.1 Boot Into Tails OS

- Restart the system and boot into BIOS/UEFI boot menu.

- Select the USB drive containing Tails OS.

- Configure network settings to disable all connectivity.

4.2 Create Wallet in Electrum (Cold)

- Open Electrum from the Tails application launcher.

- Select "Standard Wallet" → "Create a new seed".

- Choose SegWit for address type (for lower fees and modern compatibility).

- Write down the 12-word seed phrase on paper. Never store digitally.

- Confirm the seed.

- Set a strong password for wallet access.

5. Exporting the Master Public Key (xpub)

- Open Electrum > Wallet > Information

- Export the Master Public Key (MPK) for receiving-only use.

- Optionally generate QR code for cold-to-hot usage (wallet watching).

This allows real-time monitoring of incoming Bitcoin transactions without ever exposing private keys.

6. Transaction Workflow

6.1 Receiving Bitcoin (Cold to Hot)

- Use the exported xpub in a watch-only wallet (desktop or mobile).

- Generate addresses as needed.

- Senders deposit Bitcoin to those addresses.

6.2 Spending Bitcoin (Hot Redeem Mode)

Important: This process temporarily compromises air-gap security.

- Boot into Tails (or use Electrum in a clean Linux environment).

- Import the 12-word seed phrase.

- Create transaction offline.

- Export signed transaction via QR code or USB.

- Broadcast using an online device.

6.3 Recommended Alternative: PSBT

To avoid full wallet import:

- Use the Partially Signed Bitcoin Transaction (PSBT) format to sign offline.

- Finalize the signed PSBT and broadcast the resulting transaction using Sparrow Wallet or Electrum online.

7. Security Considerations

| Threat | Mitigation |
|-------|------------|
| OS Compromise | Use Tails (ephemeral environment, RAM-only) |
| Supply Chain Attack | Manual SHA256 verification |
| Key Leakage | No network access during key generation |
| Phishing/Clone Wallets | Verify Electrum’s signature (when updating) |
| Physical Theft | Store paper seed in tamper-evident location |

8. Backup Strategy

- Store 12-word seed phrase in multiple secure physical locations.

- Do not photograph or digitize.

- For added resilience against loss or theft of a single copy, consider Shamir Secret Sharing (e.g., a 2-of-3 split).

9. Consider

Through the meticulous integration of verifiable software solutions, the execution of air-gapped key generation methodologies, and adherence to stringent operational protocols, users have the capacity to establish a Bitcoin cold storage wallet that embodies an elevated degree of cryptographic assurance.

This DIY system presents a zero-dependency alternative to conventional third-party custody solutions and consumer-grade hardware wallets.

Consequently, it empowers individuals with the ability to manage their Bitcoin assets while ensuring full trust minimization and maximizing their sovereign control over private keys and transaction integrity within the decentralized financial ecosystem.

10. References And Citations

Nakamoto, Satoshi. Bitcoin: A Peer-to-Peer Electronic Cash System. 2008.

“Tails - The Amnesic Incognito Live System.” tails.boum.org, The Tor Project.

“Electrum Bitcoin Wallet.” electrum.org, 2025.

“QuickHash GUI.” quickhash-gui.org, 2025.

“Balena Etcher.” balena.io, 2025.

Bitcoin Core Developers. “Don’t Trust, Verify.” bitcoincore.org, 2025.

In Addition

🪙 SegWit vs. Legacy Bitcoin Wallets

⚖️ TL;DR Decision Chart

| If you... | Use SegWit | Use Legacy |
|-----------|----------------|----------------|
| Want lower fees | ✅ Yes | 🚫 No |
| Send to/from old services | ⚠️ Maybe | ✅ Yes |
| Care about long-term scaling | ✅ Yes | 🚫 No |
| Need max compatibility | ⚠️ Mixed | ✅ Yes |
| Run a modern wallet | ✅ Yes | 🚫 Legacy support fading |
| Use cold storage often | ✅ Yes | ⚠️ Depends on wallet support |
| Use Lightning Network | ✅ Required | 🚫 Not supported |

🔍 1. What Are We Comparing?

There are two major types of Bitcoin wallet address formats:

🏛️ Legacy (P2PKH)

- Format starts with: `1`

- Example: `1A1zP1eP5QGefi2DMPTfTL5SLmv7DivfNa`

- Oldest, most universally compatible

- Higher fees, larger transactions

- May lack support in newer tools and layer-2 solutions

🛰️ SegWit (P2WPKH)

- Formats start with:

  - Nested SegWit (P2SH): `3...`

  - Native SegWit (bech32): `bc1q...`

- Introduced via Bitcoin Improvement Proposal (BIP) 141

- Smaller transaction sizes → lower fees

- Native support by most modern wallets
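The prefixes above are enough for a rough first-pass classification. A minimal shell sketch follows; the function name `address_type` is an assumption for illustration, and a prefix check is not address validation: it does not verify checksums, and a `3...` address is simply P2SH, which may or may not wrap SegWit.

```bash
# Sketch: classify a Bitcoin address by its leading characters only.
# address_type is a hypothetical helper; it performs no checksum validation.
address_type() {
  case "$1" in
    bc1q*) echo "native-segwit" ;;  # bech32 (P2WPKH / P2WSH)
    3*)    echo "p2sh" ;;           # may be nested SegWit, or another P2SH script
    1*)    echo "legacy" ;;         # P2PKH
    *)     echo "unknown" ;;
  esac
}
```

Calling `address_type 1A1zP1eP5QGefi2DMPTfTL5SLmv7DivfNa` prints `legacy`.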

💸 2. Transaction Fees

SegWit = Cheaper.

- SegWit reduces the size of Bitcoin transactions in a block.

- This means you pay less per transaction.

- Example: A SegWit transaction might cost 40%–60% less in fees than a legacy one.

💡 Why?

Bitcoin fees depend on transaction size (virtual bytes), not on the amount sent.

SegWit moves signature (witness) data out of the base transaction structure and counts it at a discount, which shrinks the size that fees are charged on.
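To make the size argument concrete: BIP 141 defines a transaction's weight as three times its base size (without witness data) plus its total size, and fee rates are applied to the virtual size, which is the weight divided by four and rounded up. The sketch below computes this in shell arithmetic. The function name `tx_vsize` and the byte counts in the usage note are illustrative assumptions, since real sizes vary with signature length and the number of inputs and outputs.

```bash
# Sketch: virtual size per BIP 141.
#   weight = 3 * base_size + total_size
#   vsize  = ceil(weight / 4)
# tx_vsize is a hypothetical helper name.
tx_vsize() {
  base=$1    # serialized size in bytes without witness data
  total=$2   # serialized size in bytes including witness data
  weight=$((3 * base + total))
  # Integer ceiling division by 4.
  echo $(( (weight + 3) / 4 ))
}
```

With approximate sizes for a one-input, two-output payment, `tx_vsize 226 226` (legacy, no witness data) gives 226, while `tx_vsize 113 223` (native SegWit) gives 141, roughly 38% fewer virtual bytes at the same fee rate. Transactions with many inputs save proportionally more.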

🧰 3. Wallet & Service Compatibility

| Category | Legacy | SegWit (Nested / Native) |
|----------|--------|---------------------------|
| Old Exchanges | ✅ Full support | ⚠️ Partial |
| Modern Exchanges | ✅ Yes | ✅ Yes |
| Hardware Wallets (Trezor, Ledger) | ✅ Yes | ✅ Yes |
| Mobile Wallets (Phoenix, BlueWallet) | ⚠️ Rare | ✅ Yes |
| Lightning Support | 🚫 No | ✅ Native SegWit required |

🧠 Recommendation:

If you interact with older platforms or do cross-compatibility testing, you may want to:

- Use nested SegWit (address starts with `3`), which is backward compatible.

- Avoid bech32-only wallets if your exchange doesn't support them (though rare in 2025).

🛡️ 4. Security and Reliability

Both formats are secure in terms of cryptographic strength.

However:

- SegWit fixes a bug known as transaction malleability, which helps build protocols on top of Bitcoin (like the Lightning Network).

- SegWit transactions are more standardized going forward.

💬 User takeaway:

For basic sending and receiving, both are equally secure. But for future-proofing, SegWit is the better bet.

🌐 5. Future-Proofing

Legacy wallets are gradually being phased out:

- Developers are focusing on SegWit and Taproot compatibility.

- Wallet providers are defaulting to SegWit addresses.

- Fee structures increasingly assume users have upgraded.

🚨 If you're using a Legacy wallet today, you're still safe. But:

- Some services may stop supporting withdrawals to legacy addresses.

- Your future upgrade path may be more complex.

🚀 6. Real-World Scenarios

🧊 Cold Storage User

- Use SegWit for low-fee UTXOs and efficient backup formats.

- Consider Native SegWit (`bc1q`) if supported by your hardware wallet.

👛 Mobile Daily User

- Use Native SegWit for cheaper everyday payments.

- Ideal if using Lightning apps — it's often mandatory.

🔄 Exchange Trader

- Check your exchange’s address type support.

- Consider nested SegWit (`3...`) if bridging old + new systems.

📜 7. Migration Tips

If you're moving from Legacy to SegWit:

- Create a new SegWit wallet in your software/hardware wallet.

- Send funds from your old Legacy wallet to the SegWit address.

- Back up the new seed — never reuse the old one.

- Watch out for fee rates and change address handling.

✅ Final User Recommendations

| Use Case | Address Type |
|----------|--------------|
| Long-term HODL | SegWit (`bc1q`) |
| Maximum compatibility | Nested SegWit (`3...`) |
| Fee-sensitive use | Native SegWit (`bc1q`) |
| Lightning | Native SegWit (`bc1q`) |
| Legacy systems only | Legacy (`1...`) – short-term only |

📚 Further Reading

- Nakamoto, Satoshi. Bitcoin: A Peer-to-Peer Electronic Cash System. 2008.

- Bitcoin Core Developers. “Segregated Witness (Consensus Layer Change).” github.com/bitcoin, 2017.

- “Electrum Documentation: Wallet Types.” docs.electrum.org, 2024.

- “Bitcoin Wallet Compatibility.” bitcoin.org, 2025.

- Ledger Support. “SegWit vs Legacy Addresses.” ledger.com, 2024.

-

@ d34e832d:383f78d0

2025-04-24 06:12:32


Goal

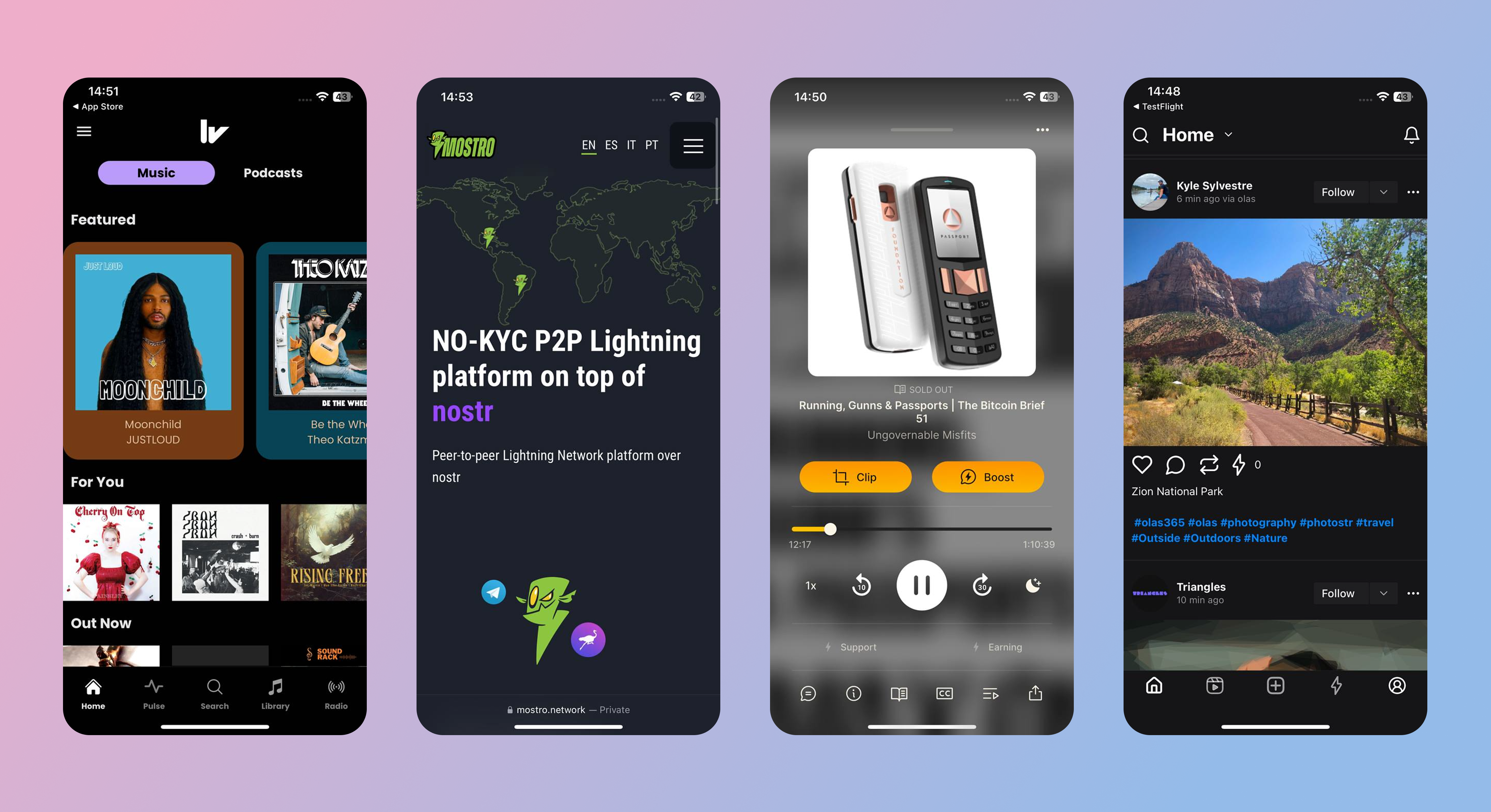

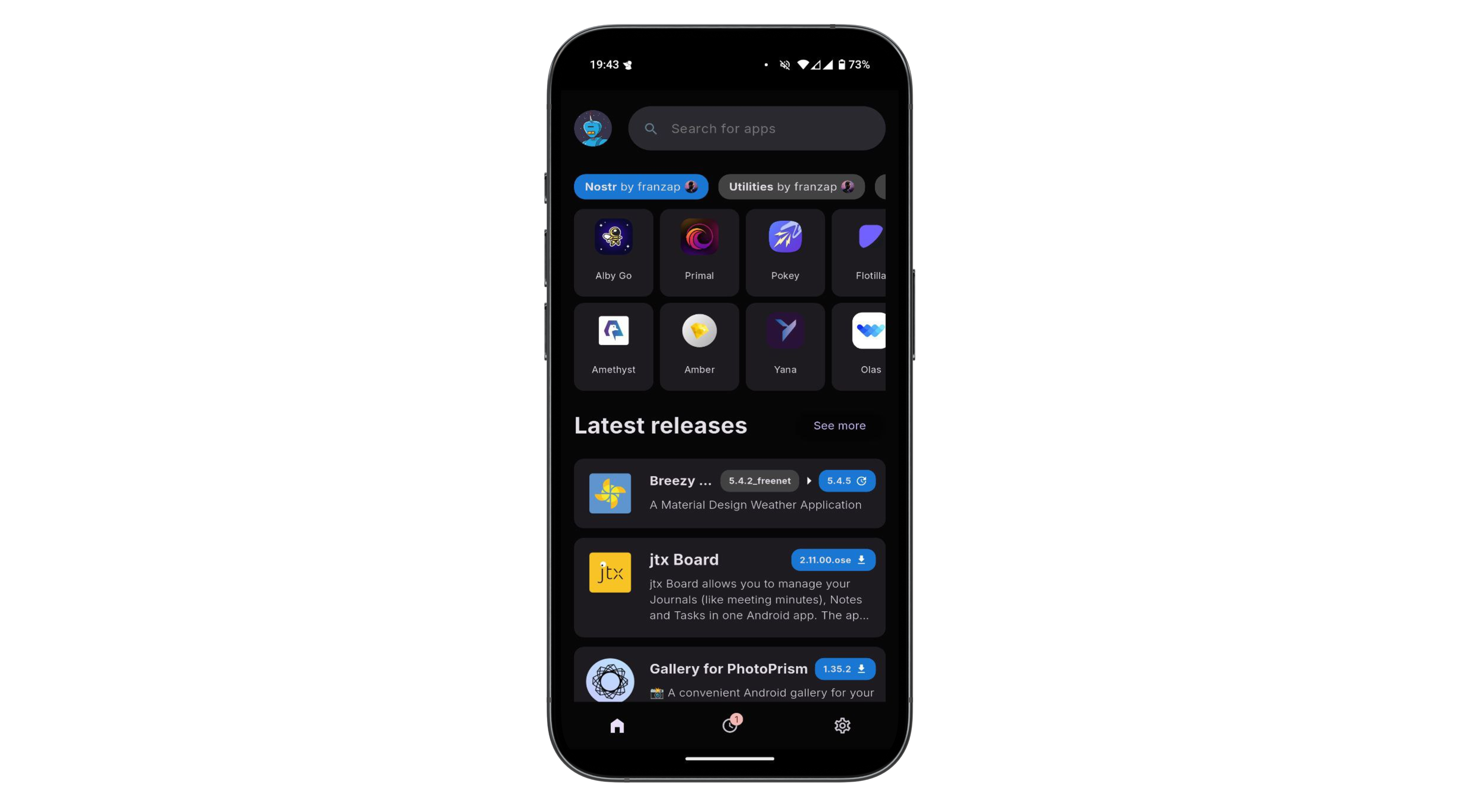

This analytical discourse delves into Jack Dorsey's recent utterances concerning Bitcoin, artificial intelligence, decentralized social networking platforms such as Nostr, and the burgeoning landscape of open-source cryptocurrency mining initiatives.

Dorsey's pronouncements escape the confines of isolated technological fascinations; rather, they elucidate a cohesive conceptual schema wherein Bitcoin transcends its conventional role as a mere store of value—akin to digital gold—and emerges as a foundational protocol intended for the construction of a decentralized, sovereign, and perpetually self-evolving internet ecosystem.

A thorough examination of how Dorsey connects Bitcoin with advances in artificial intelligence, adaptive learning, and integrated social systems reveals his view of Bitcoin as more than simple currency: a distinctly novel socio-technological organism characterized by its inherent ability to adapt and grow. His vigorous endorsement of native digital currency, open communication protocols, and decentralized infrastructure is posited here as a revolutionary paradigm.

1. The Path

Jack Dorsey, co-founder of Twitter and Square (now Block), has emerged as one of the most compelling evangelists for a decentralized future. His ideas about Bitcoin go far beyond its role as a speculative asset or inflation hedge. In a recent interview, Dorsey ties together themes of open-source AI, peer-to-peer currency, decentralized media, and radical self-education, sketching a future in which Bitcoin is the lynchpin of an emerging technological and social ecosystem. This thesis reviews Dorsey’s statements and offers a critical framework to understand why his vision uniquely positions Bitcoin as the keystone of a post-institutional, digital world.

2. Bitcoin: The Native Currency of the Internet

“It’s the best current manifestation of a native internet currency.” — Jack Dorsey

Bitcoin's status as an open protocol with no central controlling authority echoes the original spirit of the internet: decentralized, borderless, and resilient. Dorsey's framing of Bitcoin not just as a payment system but as the "native money of the internet" is a profound conceptual leap. It suggests that just as HTTP became the standard for web documents, Bitcoin can become the monetary layer for the open web.

This framing bypasses traditional narratives of digital gold or institutional adoption and centers a P2P vision of global value transfer. Unlike central bank digital currencies or platform-based payment rails, Bitcoin is opt-in, permissionless, and censorship-resistant—qualities essential for sovereignty in the digital age.

3. Nostr and the Decentralization of Social Systems

Dorsey’s support for Nostr, an open protocol for decentralized social media, reflects a desire to restore user agency, protocol composability, and speech sovereignty. Nostr’s architecture parallels Bitcoin’s: open, extensible, and resilient to censorship.

Here, Bitcoin serves not just as money but as a network effect driver. When combined with Lightning and P2P tipping, Nostr becomes more than just a Twitter alternative—it evolves into a micropayment-native communication system, a living proof that Bitcoin can power an entire open-source social economy.

4. Open-Source AI and Cognitive Sovereignty

Dorsey's forecast that open-source AI will emerge as an alternative to proprietary systems aligns with his commitment to digital autonomy. If Bitcoin empowers financial sovereignty and Nostr enables communicative freedom, open-source AI can empower cognitive independence—freeing humanity from centralized algorithmic manipulation.

He draws a fascinating parallel between AI learning models and human learning itself, suggesting both can be self-directed, recursive, and radically decentralized. This resonates with the Bitcoin ethos: systems should evolve through transparent, open participation—not gatekeeping or institutional control.

5. Bitcoin Mining: Sovereignty at the Hardware Layer

Block’s initiative to create open-source mining hardware is a direct attempt to counter centralization in Bitcoin’s infrastructure. ASIC chip development and mining rig customization empower individuals and communities to secure the network directly.

This move reinforces Dorsey’s vision that true decentralization requires ownership at every layer, including hardware. It is a radical assertion of vertical sovereignty—from protocol to interface to silicon.

6. Learning as the Core Protocol

“The most compounding skill is learning itself.” — Jack Dorsey

Dorsey’s deepest insight is that the throughline connecting Bitcoin, AI, and Nostr is not technology—it’s learning. Bitcoin represents more than code; it’s a living experiment in voluntary consensus, a distributed educational system in cryptographic form.

Dorsey’s emphasis on meditation, intensive retreats, and self-guided exploration mirrors the trustless, sovereign nature of Bitcoin. Learning becomes the ultimate protocol: recursive, adaptive, and decentralized—mirroring AI models and Bitcoin nodes alike.

7. Critical Risks and Honest Reflections

Dorsey remains honest about Bitcoin’s current limitations:

- Accessibility: UX barriers for onboarding new users.

- Usability: Friction in everyday use.

- State-Level Adoption: Risks of co-optation as mere digital gold.

However, his caution enhances credibility. His focus remains on preserving Bitcoin as a P2P electronic cash system, not transforming it into another tool of institutional control.

8. Bitcoin as a Living System

What emerges from Dorsey's vision is not a product pitch, but a philosophical reorientation: Bitcoin, Nostr, and open AI are not discrete tools—they are living systems forming a new type of civilization stack.

They are not static infrastructures, but emergent grammars of human cooperation, facilitating value exchange, learning, and community formation in ways never possible before.

Bitcoin, in this view, is not merely stunningly original—it is civilizationally generative, offering not just monetary innovation but a path to software-upgraded humanity.

Works Cited and Tools Used

Dorsey, Jack. Interview on Bitcoin, AI, and Decentralization. April 2025.

Nakamoto, Satoshi. “Bitcoin: A Peer-to-Peer Electronic Cash System.” 2008.

Nostr Protocol. https://nostr.com.

Block, Inc. Bitcoin Mining Hardware Initiatives. 2024.

Obsidian Canvas. Decentralized Note-Taking and Networked Thinking. 2025.

-

@ d34e832d:383f78d0

2025-04-24 05:56:06

Idea

Through the integration of Optical Character Recognition (OCR), Docker-based deployment, and secure remote access via Twingate, Paperless NGX empowers individuals and small organizations to digitize, organize, and retrieve documents with minimal friction. This research explores its technical infrastructure, real-world applications, and how such a system can redefine document archival practices for the digital age.

Agile, Remote-Accessible, and Searchable Document System

In a world of increasing digital interdependence, managing physical documents is becoming not only inefficient but also environmentally and logistically unsustainable. The demand for agile, remote-accessible, and searchable document systems has never been higher—especially for researchers, small businesses, and archival professionals. Paperless NGX, an open-source platform, addresses these needs by offering a streamlined, secure, and automated way to manage documents digitally.

This Idea explores how Paperless NGX facilitates the transition to a paperless workflow and proposes best practices for sustainable, scalable usage.

Paperless NGX: The Platform

Paperless NGX is an advanced fork of the original Paperless project, redesigned with modern containers, faster performance, and enhanced community contributions. Its core functions include:

- Text Extraction with OCR: Leveraging the `ocrmypdf` Python library, Paperless NGX can extract searchable text from scanned PDFs and images.

- Searchable Document Indexing: Full-text search allows users to locate documents not just by filename or metadata, but by actual content.

- Dockerized Setup: A ready-to-use Docker Compose environment simplifies deployment, including the use of setup scripts for Ubuntu-based servers.

- Modular Workflows: Custom triggers and automation rules allow for smart processing pipelines based on file tags, types, or email source.

Key Features and Technical Infrastructure

1. Installation and Deployment

The system runs in a containerized environment, making it highly portable and isolated. A typical installation involves:

- Docker Compose with YAML configuration

- Volume mapping for persistent storage

- Optional integration with reverse proxies (e.g., Nginx) for HTTPS access

2. OCR and Indexing

Using `ocrmypdf`, scanned documents are processed into fully searchable PDFs. This function dramatically improves retrieval, especially for archived legal, medical, or historical records.

3. Secure Access via Twingate

To solve the challenge of secure remote access without exposing the network, Twingate acts as a zero-trust access proxy. It encrypts communication between the Paperless NGX server and the client, enabling access from anywhere without the need for traditional VPNs.

4. Email Integration and Ingestion

Paperless NGX can ingest attachments directly from configured email folders. This feature automates much of the document intake process, especially useful for receipts, invoices, and academic PDFs.

Sustainable Document Management Workflow

A practical paperless strategy requires not just tools, but repeatable processes. A sustainable workflow recommended by the Paperless NGX community includes:

- Capture & Tagging: All incoming documents are tagged with a default “inbox” tag for triage.

- Physical Archive Correlation: If the physical document is retained, assign it a serial number (e.g., ASN-001), which is matched digitally.

- Curation & Tagging: Apply relevant category and topic tags to improve searchability.

- Archival Confirmation: Remove the “inbox” tag once fully processed and categorized.

Backup and Resilience

Reliability is key to any archival system. Paperless NGX includes backup functionality via:

- Cron job–scheduled Docker exports

- Offsite and cloud backups using rsync or encrypted cloud drives

- Restore mechanisms using documented CLI commands

This ensures document availability even in the event of hardware failure or data corruption.
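The scheduled export and offsite copy described above could be scripted roughly as follows. This is a sketch under stated assumptions, not the project's official backup script: the function names `run` and `backup_paperless`, the `DRY_RUN` switch, and both paths are hypothetical, and the service name `webserver` and export target `../export` assume the stock Docker Compose layout (your compose file may differ).

```bash
# run: execute a command, or only print it when DRY_RUN=1 (hypothetical helper).
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# backup_paperless COMPOSE_DIR DEST
#   COMPOSE_DIR: directory holding the Paperless NGX docker-compose.yml (assumed)
#   DEST:        rsync destination, e.g. a mounted drive or user@host:/path (assumed)
backup_paperless() {
  compose_dir=$1
  dest=$2
  # Export documents and metadata from inside the running container.
  ( cd "$compose_dir" && run docker compose exec -T webserver document_exporter ../export ) || return 1
  # Copy the export directory to the backup destination.
  run rsync -a "$compose_dir/export/" "$dest/"
}
```

Setting `DRY_RUN=1` prints the commands without executing them, which is a cheap way to check paths before scheduling the script. A nightly cron entry would then call a wrapper script around `backup_paperless`.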

Limitations and Considerations

While Paperless NGX is powerful, it comes with several caveats:

- Technical Barrier to Entry: Requires basic Docker and Linux skills to install and maintain.

- OCR Inaccuracy for Handwritten Texts: The OCR engine may struggle with cursive or handwritten documents.

- Plugin and Community Dependency: Continuous support relies on active community contribution.

Consider

Paperless NGX emerges as a pragmatic and privacy-centric alternative to conventional cloud-based document management systems, effectively addressing the critical challenges of data security and user autonomy.

The implementation of advanced Optical Character Recognition (OCR) technology facilitates the indexing and searching of documents, significantly enhancing information retrieval efficiency.

Additionally, the platform offers secure remote access protocols that ensure data integrity while preserving the confidentiality of sensitive information during transmission.

Furthermore, its customizable workflow capabilities empower both individuals and organizations to precisely tailor their data management processes, thereby reclaiming sovereignty over their information ecosystems.

In an era increasingly characterized by a shift towards paperless methodologies, the significance of solutions such as Paperless NGX cannot be overstated; they play an instrumental role in engineering a future in which information remains not only accessible but also safeguarded and sustainably governed.

In Addition

To Further The Idea

This technical paper presents an optimized strategy for transforming an Intel NUC into a compact, power-efficient self-hosted server using Ubuntu. The setup emphasizes reliability, low energy consumption, and cost-effectiveness for personal or small business use. Services such as Paperless NGX, Nextcloud, Gitea, and Docker containers are examined for deployment. The paper details hardware selection, system installation, secure remote access, and best practices for performance and longevity.

1. Cloud sovereignty, Privacy, and Data Ownership

As cloud sovereignty, privacy, and data ownership become critical concerns, self-hosting is increasingly appealing. An Intel NUC (Next Unit of Computing) provides an ideal middle ground between Raspberry Pi boards and enterprise-grade servers—balancing performance, form factor, and power draw. With Ubuntu LTS and Docker, users can run a full suite of services with minimal overhead.

2. Hardware Overview

2.1 Recommended NUC Specifications:

| Component | Recommended Specs |
|------------------|-----------------------------------------------------|
| Model | Intel NUC 11/12 Pro (e.g., NUC11TNHi5, NUC12WSKi7) |
| CPU | Intel Core i5 or i7 (11th/12th Gen) |
| RAM | 16GB–32GB DDR4 (dual channel preferred) |
| Storage | 512GB–2TB NVMe SSD (Samsung 980 Pro or similar) |
| Network | Gigabit Ethernet + Optional Wi-Fi 6 |
| Power Supply | 65W USB-C or barrel connector |
| Cooling | Internal fan, well-ventilated location |

NUCs are also capable of dual-drive setups and support for Intel vPro for remote management on some models.

3. Operating System and Software Stack

3.1 Ubuntu Server LTS

- Version: Ubuntu Server 22.04 LTS

- Installation Method: Bootable USB (Rufus or Balena Etcher)

- Disk Partitioning: LVM with encryption recommended for full disk security

- Security:

  - UFW (Uncomplicated Firewall)

  - Fail2ban

  - SSH hardened with key-only login

```bash
sudo apt update && sudo apt upgrade
sudo ufw allow OpenSSH
sudo ufw enable
```
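Key-only SSH login usually means setting `PasswordAuthentication no` in the sshd configuration. As a hedged sketch, the helper below (the name `password_login_disabled` is hypothetical) checks whether a given config file contains that directive. It does not follow `Include` files or account for `Match` blocks, so treat it as a quick sanity check only.

```bash
# Sketch: succeed only if the given sshd config explicitly disables password login.
# password_login_disabled is a hypothetical helper name.
password_login_disabled() {
  # sshd keywords are case-insensitive; commented-out lines (starting with #) do not match.
  grep -Eiq '^[[:space:]]*PasswordAuthentication[[:space:]]+no[[:space:]]*$' "$1"
}
```

For example, `password_login_disabled /etc/ssh/sshd_config && echo "password login is off"`.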

4. Docker and System Services

Docker and Docker Compose streamline the deployment of isolated, reproducible environments.

4.1 Install Docker and Compose

```bash
sudo apt install docker.io docker-compose
sudo systemctl enable docker
```

4.2 Common Services to Self-Host:

| Application | Description | Access Port |
|--------------------|----------------------------------------|-------------|
| Paperless NGX | Document archiving and OCR | 8000 |
| Nextcloud | Personal cloud, contacts, calendar | 443 |
| Gitea | Lightweight Git repository | 3000 |
| Nginx Proxy Manager| SSL proxy for all services | 81, 443 |
| Portainer | Docker container management GUI | 9000 |
| Watchtower | Auto-update containers | - |

5. Network & Remote Access

5.1 Local IP & Static Assignment

- Set a static IP for consistent access (via router DHCP reservation or Netplan).

5.2 Access Options

- Local Only: VPN into local network (e.g., WireGuard, Tailscale)

- Remote Access:

  - Reverse proxy via Nginx with Certbot for HTTPS

  - Twingate or Tailscale for zero-trust remote access

  - DNS via DuckDNS, Cloudflare

6. Performance Optimization

- Enable `zram` for compressed RAM swap

- Trim SSDs weekly with `fstrim`

- Use Docker volumes, not bind mounts for stability

- Set up unattended upgrades:

```bash
sudo apt install unattended-upgrades
sudo dpkg-reconfigure --priority=low unattended-upgrades
```

7. Power and Environmental Considerations

- Idle Power Draw: ~7–12W (depending on configuration)

- UPS Recommended: e.g., APC Back-UPS 600VA

- Use BIOS Wake-on-LAN if remote booting is needed

8. Maintenance and Monitoring

- Monitoring: Glances, Netdata, or Prometheus + Grafana

- Backups:

  - Use `rsync` to external drive or NAS

  - Cloud backup options: rclone to Google Drive, S3

  - Paperless NGX backups: `docker compose exec -T webserver document_exporter ...`

9. Consider

Running a personal server using an Intel NUC and Ubuntu offers a private, low-maintenance, and modular solution to digital infrastructure needs. It’s an ideal base for self-hosting services, offering superior control over data and strong security with the right setup. The NUC's small form factor and efficient power usage make it an optimal home server platform that scales well for many use cases.


-

@ d34e832d:383f78d0

2025-04-24 05:14:14

Idea

By instituting a robust network of conceptual entities, referred to as 'Obsidian nodes'—which are effectively discrete, idea-centric notes—researchers are empowered to establish a resilient and non-linear archival framework for knowledge accumulation.

These nodes, intricately connected via hyperlinks and systematically organized through the graphical interface of the Obsidian Canvas, facilitate profound intellectual exploration and the synthesis of disparate domains of knowledge.

Consequently, this innovative workflow paradigm emphasizes semantic precision and the interconnectedness of ideas, diverging from conventional, source-centric information architectures prevalent in traditional academic practices.

Traditional research workflows often emphasize organizing notes by source, resulting in static, siloed knowledge that resists integration and insight. With the rise of personal knowledge management (PKM) tools like Obsidian, it becomes possible to structure information in a way that mirrors the dynamic and interconnected nature of human thought.

At the heart of this approach are Obsidian nodes—atomic, standalone notes representing single ideas, arguments, or claims. These nodes form the basis of a semantic research network, made visible and manageable via Obsidian’s graph view and Canvas feature. This thesis outlines how such a framework enhances understanding, supports creativity, and aligns with best practices in information architecture.

Obsidian Nodes: Atomic Units of Thought

An Obsidian node is a note crafted to encapsulate one meaningful concept or question. It is:

- Atomic: Contains only one idea, making it easier to link and reuse.

- Context-Independent: Designed to stand on its own, without requiring the original source for meaning.

- Networked: Linked to other Obsidian nodes through backlinks and tags.

This system draws on the principles of the Zettelkasten method, but adapts them to the modern, markdown-based environment of Obsidian.

Benefits of Node-Based Note-Taking

- Improved Retrieval: Ideas can be surfaced based on content relevance, not source origin.

- Cross-Disciplinary Insight: Linking between concepts across fields becomes intuitive.

- Sustainable Growth: Each new node adds value to the network without redundancy.

Graph View: Visualizing Connections

Obsidian’s graph view offers a macro-level overview of the knowledge graph, showing how nodes interrelate. This encourages serendipitous discovery and identifies central or orphaned concepts that need further development.

- Clusters emerge around major themes.

- Hubs represent foundational ideas.

- Bridges between nodes show interdisciplinary links.

The graph view isn’t just a map—it’s an evolving reflection of intellectual progress.
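The hub and orphan ideas above can be approximated outside Obsidian too. The following is a minimal sketch, assuming notes are available as a plain dictionary of title to markdown body; the function names (`build_graph`, `orphans`, `hubs`) are hypothetical and are not part of Obsidian's API.

```python
import re

# Matches [[Target]], [[Target|alias]] and [[Target#heading]], capturing only "Target".
WIKILINK = re.compile(r"\[\[([^\]|#]+)")


def build_graph(notes):
    """notes: dict of note title -> markdown body. Returns title -> set of linked titles."""
    return {
        title: {target.strip() for target in WIKILINK.findall(body)}
        for title, body in notes.items()
    }


def inbound_counts(graph):
    """Count how many other notes link to each note."""
    counts = {title: 0 for title in graph}
    for source, targets in graph.items():
        for target in targets:
            if target in counts and target != source:
                counts[target] += 1
    return counts


def orphans(graph):
    """Notes with no inbound and no outbound links to existing notes."""
    counts = inbound_counts(graph)
    result = []
    for title, targets in graph.items():
        outbound = {t for t in targets if t in graph and t != title}
        if counts[title] == 0 and not outbound:
            result.append(title)
    return sorted(result)


def hubs(graph, top=3):
    """Notes with the most inbound links, most-linked first."""
    counts = inbound_counts(graph)
    return sorted(counts, key=lambda t: (-counts[t], t))[:top]
```

Running `orphans` periodically is one way to find notes that still need to be connected to the rest of the network.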

Canvas: Thinking Spatially with Digital Notes

Obsidian Canvas acts as a digital thinking space. Unlike the abstract graph view, Canvas allows for spatial arrangement of Obsidian nodes, images, and ideas. This supports visual reasoning, ideation, and project planning.

Use Cases of Canvas

- Synthesizing Ideas: Group related nodes in physical proximity.

- Outlining Arguments: Arrange claims into narrative or logic flows.

- Designing Research Papers: Lay out structure and integrate supporting points visually.

Canvas brings a tactile quality to digital thinking, enabling workflows similar to sticky notes, mind maps, or corkboard pinning—but with markdown-based power and extensibility.

Template and Workflow

To simplify creation and encourage consistency, Obsidian nodes are generated using a templater plugin. Each node typically includes:

```markdown

{{title}}

Tags: #topic #field

Linked Nodes: [[Related Node]]

Summary: A 1-2 sentence idea explanation.

Source: [[Source Note]]

Date Created: {{date}}

```

The Canvas workspace pulls these nodes as cards, allowing for arrangement, grouping, and visual tracing of arguments or research paths.
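For readers who generate notes from scripts rather than from inside Obsidian, the same fields can be filled programmatically. This is only a sketch under assumptions: `render_node` and its parameters are hypothetical names, and the layout simply mirrors the template shown above.

```python
from datetime import date

# Field layout mirrors the node template above; the placeholders are Python format fields.
NODE_TEMPLATE = """{title}

Tags: {tags}

Linked Nodes: {links}

Summary: {summary}

Source: [[{source}]]

Date Created: {created}
"""


def render_node(title, summary, source, tags=(), links=(), created=None):
    """Return the markdown text of one atomic note."""
    created = created or date.today().isoformat()
    return NODE_TEMPLATE.format(
        title=title,
        tags=" ".join(f"#{tag}" for tag in tags),
        links=" ".join(f"[[{link}]]" for link in links),
        summary=summary,
        source=source,
        created=created,
    )
```

The returned string can be written to a `.md` file in the vault folder; Obsidian should pick it up like any other note.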

Discussion and Challenges

While this approach enhances creativity and research depth, challenges include:

- Initial Setup: Learning and configuring plugins like Templater, Dataview, and Canvas.

- Overlinking or Underlinking: Finding the right granularity in note-making takes practice.

- Scalability: As networks grow, maintaining structure and avoiding fragmentation becomes crucial.

- Team Collaboration: While Git can assist, Obsidian remains largely optimized for solo workflows.

Consider

Through the innovative employment of Obsidian's interconnected nodes and the Canvas feature, researchers are enabled to construct a meticulously engineered semantic architecture that reflects the intricate topology of their knowledge frameworks.

This paradigm shift facilitates a transformation of conventional note-taking, evolving this practice from a static, merely accumulative repository of information into a dynamic and adaptive cognitive ecosystem that actively engages with the user’s thought processes. With methodological rigor and a structured approach, Obsidian transcends its role as mere documentation software, evolving into both a secondary cognitive apparatus and a sophisticated digital writing infrastructure.

This dual functionality significantly empowers the long-term intellectual endeavors and creative pursuits of students, scholars, and lifelong learners, thereby enhancing their capacity for sustained engagement with complex ideas.

-

@ d34e832d:383f78d0

2025-04-24 05:04:55

A Knowledge Management Framework for your Academic Writing

Idea Approach

The primary objective of this framework is to streamline and enhance the efficiency of several critical academic processes, namely the reading, annotation, synthesis, and writing stages inherent to doctoral studies.

By leveraging established best practices from various domains, including digital note-taking methodologies, sophisticated knowledge management techniques, and the scientifically-grounded principles of spaced repetition systems, this proposed workflow is adept at optimizing long-term retention of information, fostering the development of novel ideas, and facilitating the meticulous preparation of manuscripts. Furthermore, this integrated approach capitalizes on Zotero's robust annotation functionalities, harmoniously merged with Obsidian's Zettelkasten-inspired architecture, thereby enriching the depth and structural coherence of academic inquiry, ultimately leading to more impactful scholarly contributions.

Doctoral research demands a sophisticated approach to information management, critical thinking, and synthesis. Traditional systems of note-taking and bibliography management are often fragmented and inefficient, leading to cognitive overload and disorganized research outputs. This thesis proposes a workflow that leverages Zotero for reference management, Obsidian for networked note-taking, and Anki for spaced repetition learning—each component enhanced by a set of plugins, templates, and color-coded systems.

2. Literature Review and Context

2.1 Digital Research Workflows

Recent research in digital scholarship has highlighted the importance of structured knowledge environments. Tools like Roam Research, Obsidian, and Notion have gained traction among academics seeking flexibility and networked thinking. However, few workflows provide seamless interoperability between reference management, reading, and idea synthesis.

2.2 The Zettelkasten Method

Originally developed by sociologist Niklas Luhmann, the Zettelkasten ("slip-box") method emphasizes creating atomic notes—single ideas captured and linked through context. This approach fosters long-term idea development and is highly compatible with digital graph-based note systems like Obsidian.

3. Zotero Workflow: Structured Annotation and Tagging

Zotero serves as the foundational tool for ingesting and organizing academic materials. The built-in PDF reader is augmented through a color-coded annotation schema designed to categorize information efficiently:

- Red: Refuted or problematic claims requiring skepticism or clarification

- Yellow: Prominent claims, novel hypotheses, or insightful observations

- Green: Verified facts or claims that align with the research narrative

- Purple: Structural elements like chapter titles or section headers

- Blue: Inter-author references or connections to external ideas

- Pink: Unclear arguments, logical gaps, or questions for future inquiry

- Orange: Precise definitions and technical terminology

Annotations are accompanied by tags and notes in Zotero, allowing robust filtering and thematic grouping.
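The color scheme above is effectively a lookup table, which makes it easy to group exported annotations by meaning. The sketch below assumes annotations arrive as dictionaries with `color`, `text`, and `page` keys and that colors are plain names; both are illustrative assumptions, not Zotero's actual export format.

```python
from collections import defaultdict

# Color -> category, following the annotation schema described above.
COLOR_MEANING = {
    "red": "refuted or problematic claim",
    "yellow": "prominent claim or novel hypothesis",
    "green": "verified fact or supporting claim",
    "purple": "structural element",
    "blue": "inter-author reference",
    "pink": "unclear argument or open question",
    "orange": "definition or technical term",
}


def group_annotations(annotations):
    """Group (page, text) pairs by category, sorted by page within each group."""
    grouped = defaultdict(list)
    for ann in annotations:
        category = COLOR_MEANING.get(ann["color"].lower(), "uncategorized")
        grouped[category].append((ann["page"], ann["text"]))
    for items in grouped.values():
        items.sort()
    return dict(grouped)
```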

4. Obsidian Integration: Bridging Annotation and Synthesis

4.1 Plugin Architecture

Three key plugins optimize Obsidian’s role in the workflow:

- Zotero Integration (via `obsidian-citation-plugin`): Syncs annotated PDFs and metadata directly from Zotero

- Highlighter: Enables color-coded highlights in Obsidian, mirroring Zotero's scheme

- Templater: Automates formatting and consistency using Nunjucks templates

A custom keyboard shortcut (e.g., `Ctrl+Shift+Z`) is used to trigger the extraction of annotations into structured Obsidian notes.

4.2 Custom Templating

The templating system ensures imported notes include:

- Citation metadata (title, author, year, journal)

- Full-color annotations with comments and page references

- Persistent notes for long-term synthesis

- An embedded bibtex citation key for seamless referencing

5. Zettelkasten and Atomic Note Generation

Obsidian’s networked note system supports idea-centered knowledge development. Each note captures a singular, discrete idea—independent of the source material—facilitating:

- Thematic convergence across disciplines

- Independent recombination of ideas

- Emergence of new questions and hypotheses

A standard atomic note template includes:

- Note ID (timestamp or semantic UID)

- Topic statement

- Linked references

- Associated atomic notes (via backlinks)

The Graph View provides a visual map of conceptual relationships, allowing researchers to track the evolution of their arguments.

6. Canvas for Spatial Organization

Obsidian’s Canvas plugin is used to mimic physical research boards:

- Notes are arranged spatially to represent conceptual clusters or chapter structures

- Embedded visual content enhances memory retention and creative thought

- Notes and cards can be grouped by theme, timeline, or argumentative flow

This supports both granular research and holistic thesis design.

7. Flashcard Integration with Anki

Key insights, definitions, and questions are exported from Obsidian to Anki, enabling spaced repetition of core content. This supports:

- Preparation for comprehensive exams

- Retention of complex theories and definitions

- Active recall training during literature reviews

Flashcards are automatically generated using Obsidian-to-Anki bridges, with tagging synced to Obsidian topics.
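One way such a bridge can work is to scan notes for card-like lines and write a tab-separated file, a format Anki can import. The sketch below is an assumption-heavy illustration: the `Front :: Back` line convention and the function names are hypothetical, and real Obsidian-to-Anki plugins may use different syntax and talk to Anki directly.

```python
import csv
import io
import re

# Hypothetical convention: one card per line, written as "Front :: Back".
CARD_LINE = re.compile(r"^(?P<front>.+?)\s*::\s*(?P<back>.+)$")


def extract_cards(note_text, tag):
    """Return (front, back, tag) tuples for every card-like line in a note."""
    cards = []
    for line in note_text.splitlines():
        match = CARD_LINE.match(line.strip())
        if match:
            cards.append((match["front"], match["back"], tag))
    return cards


def to_tsv(cards):
    """Serialize cards as tab-separated text, one card per line."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, delimiter="\t", lineterminator="\n")
    writer.writerows(cards)
    return buffer.getvalue()
```

Carrying the Obsidian topic through as the third column keeps the tagging aligned between the two tools.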

8. Word Processor Integration and Writing Stage

Zotero’s Word plugin simplifies:

- In-text citation

- Automatic bibliography generation

- Switching between citation styles (APA, Chicago, MLA, etc.)

Drafts in Obsidian are later exported into formal academic writing environments such as Microsoft Word or LaTeX editors for formatting and submission.

9. Discussion and Evaluation

The proposed workflow significantly reduces friction in managing large volumes of information and promotes deep engagement with source material. Its modular nature allows adaptation for various disciplines and writing styles. Potential limitations include:

- Initial learning curve

- Reliance on plugin maintenance

- Challenges in team-based collaboration

Nonetheless, the ability to unify reading, note-taking, synthesis, and writing into a seamless ecosystem offers clear benefits in focus, productivity, and academic rigor.

10. Consider

This idea demonstrates that a well-structured digital workflow using Zotero and Obsidian can transform the PhD research process. It empowers researchers to move beyond passive reading into active knowledge creation, aligned with the long-term demands of scholarly writing. Future iterations could include AI-assisted summarization, collaborative graph spaces, and greater mobile integration.

9. Evaluation Of The Approach

While this workflow offers significant advantages in clarity, synthesis, and long-term idea development, several limitations must be acknowledged:

- Initial Learning Curve: New users may face a steep learning curve when setting up and mastering the integrated use of Zotero, Obsidian, and their associated plugins. Understanding markdown syntax, customizing templates in Templater, and configuring citation keys all require upfront time investment. However, this learning period can be offset by the long-term gains in productivity and mental clarity.

- Plugin Ecosystem Volatility: Since many of Obsidian's key plugins are maintained by open-source communities or individual developers, updates can occasionally break workflows or require manual adjustments.

- Interoperability Challenges: Synchronizing metadata, highlights, and notes between systems (especially on multiple devices or operating systems) may present issues if not managed carefully. This includes Zotero’s Better BibTeX keys, Obsidian sync, and Anki integration.

- Limited Collaborative Features: This workflow is optimized for individual use. Real-time collaboration on notes or shared reference libraries may require alternative platforms or additional tooling.

Despite these constraints, the workflow remains highly adaptable and has proven effective across disciplines for researchers aiming to build a durable intellectual infrastructure over the course of a PhD.

9. Evaluation Of The Approach

While the Zotero–Obsidian workflow dramatically improves research organization and long-term knowledge retention, several caveats must be considered:

- Initial Learning Curve: Mastery of this workflow requires technical setup and familiarity with markdown, citation keys, and plugin configuration. While challenging at first, the learning effort is front-loaded and pays off in efficiency over time.

- Reliance on Plugin Maintenance: A key risk of this system is its dependence on community-maintained plugins. Tools like Zotero Integration, Templater, and Highlighter are not officially supported by Obsidian or Zotero core teams. This means updates or changes to the Obsidian API or plugin repository may break functionality or introduce bugs. Active plugin support is crucial to the system’s longevity.

- Interoperability and Syncing Issues: Managing synchronization across Zotero, Obsidian, and Anki, especially across multiple devices, can lead to inconsistencies or data loss without careful setup. Users should ensure robust syncing solutions (e.g. Obsidian Sync, Zotero WebDAV, or GitHub backup).

- Limited Collaboration Capabilities: This setup is designed for solo research workflows. Collaborative features (such as shared note-taking or group annotations) are limited and may require alternate solutions like Notion, Google Docs, or Overleaf when working in teams.

The integration of Zotero with Obsidian presents a notable advantage for individual researchers, exhibiting substantial efficiency in literature management and personal knowledge organization through its unique workflows. However, this model demonstrates significant deficiencies when evaluated in the context of collaborative research dynamics.

Specifically, while Zotero facilitates the creation and management of shared libraries, allowing for the aggregation of sources and references among users, Obsidian is fundamentally limited by its lack of intrinsic support for synchronous collaborative editing functionalities, thereby precluding simultaneous contributions from multiple users in real time. Although the application of version control systems such as Git has the potential to address this limitation, enabling a structured mechanism for tracking changes and managing contributions, the inherent complexity of such systems may pose a barrier to usability for team members who lack familiarity or comfort with version control protocols.

Furthermore, the nuances of color-coded annotation systems and bespoke personal note taxonomies utilized by individual researchers may present interoperability challenges when applied in a group setting, as these systems require rigorously defined conventions to ensure consistency and clarity in cross-collaborator communication and understanding. Thus, researchers should be cognizant of the challenges inherent in adapting tools designed for solitary workflows to the multifaceted requirements of collaborative research initiatives.

-

@ d34e832d:383f78d0

2025-04-24 02:56:59

1. The Ledger or Physical USD?

Bitcoin embodies a paradigmatic transformation in the foundational constructs of trust, ownership, and value preservation within the context of a digital economy. In stark contrast to conventional financial infrastructures that are predicated on centralized regulatory frameworks, Bitcoin operationalizes an intricate interplay of cryptographic techniques, consensus-driven algorithms, and incentivization structures to engender a decentralized and censorship-resistant paradigm for the transfer and safeguarding of digital assets. This conceptual framework elucidates the pivotal mechanisms underpinning Bitcoin's functional architecture, encompassing its distributed ledger technology (DLT) structure, robust security protocols, consensus algorithms such as Proof of Work (PoW), the intricacies of its monetary policy defined by the halving events and limited supply, as well as the broader implications these components have on stakeholder engagement and user agency.

2. The Core Functionality of Bitcoin

At its core, Bitcoin is a public ledger that records ownership and transfers of value. This ledger—called the blockchain—is maintained and verified by thousands of decentralized nodes across the globe.

2.1 Public Ledger

All Bitcoin transactions are stored in a transparent, append-only ledger. Each transaction includes:

- A reference to prior ownership (input)

- A transfer of value to a new owner (output)

- A digital signature proving authorization

2.2 Ownership via Digital Signatures

Bitcoin uses asymmetric cryptography:

- A private key is known only to the owner and is used to sign transactions.

- A public key (or address) is used by the network to verify the authenticity of the transaction.

This system ensures that only the rightful owner can spend bitcoins, and that all network participants can independently verify that the transaction is valid.

3. Decentralization and Ledger Synchronization

Unlike traditional banking systems, which rely on a central institution, Bitcoin’s ledger is decentralized:

- Every node keeps a copy of the blockchain.

- No single party controls the system.

- Updates to the ledger occur only through network consensus.

This decentralization ensures fault tolerance, censorship resistance, and transparency.

4. Preventing Double Spending

One of Bitcoin’s most critical innovations is solving the double-spending problem without a central authority.

4.1 Balance Validation

Before a transaction is accepted, nodes verify:

- The digital signature is valid.

- The input has not already been spent.

- The sender has sufficient balance.

This is made possible by referencing previous transactions and ensuring the inputs match the unspent transaction outputs (UTXOs).
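The spent-or-unspent check can be illustrated with a toy UTXO set. This is a simplified sketch, not Bitcoin's actual data structures: the function names are hypothetical, values are plain integers in satoshis, and signature verification is deliberately left out.

```python
def validate_spend(utxo_set, inputs, outputs):
    """utxo_set: dict mapping (txid, index) -> value in satoshis.
    inputs: list of (txid, index) outpoints; outputs: list of output values.
    Signature checks are omitted in this sketch."""
    if len(set(inputs)) != len(inputs):
        return False  # the same output is referenced twice in one transaction
    total_in = 0
    for outpoint in inputs:
        if outpoint not in utxo_set:
            return False  # unknown or already spent
        total_in += utxo_set[outpoint]
    return total_in >= sum(outputs)  # any difference is the fee


def apply_spend(utxo_set, txid, inputs, outputs):
    """Consume the inputs and create the new outputs, or raise if invalid."""
    if not validate_spend(utxo_set, inputs, outputs):
        raise ValueError("invalid transaction")
    for outpoint in inputs:
        del utxo_set[outpoint]
    for index, value in enumerate(outputs):
        utxo_set[(txid, index)] = value
```

Once an input has been consumed it is gone from the set, so a second transaction that references it fails validation. That is the double-spend check in miniature.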

5. Blockchain and Proof-of-Work

To ensure consistency across the distributed network, Bitcoin uses a blockchain—a sequential chain of blocks containing batches of verified transactions.

5.1 Mining and Proof-of-Work

Adding a new block requires solving a cryptographic puzzle, known as Proof-of-Work (PoW):

- The puzzle involves finding a hash value that meets network-defined difficulty.

- This process requires computational power, which deters tampering.

- Once a block is validated, it is propagated across the network.
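The puzzle can be sketched in a few lines. This is a toy version under stated simplifications: it uses double SHA-256 as Bitcoin does, but the header is an arbitrary byte string, the nonce is 8 bytes, and difficulty is expressed as a number of leading zero bits rather than Bitcoin's compact target encoding.

```python
import hashlib


def pow_hash(header: bytes, nonce: int) -> bytes:
    """Double SHA-256 of the header followed by the nonce."""
    payload = header + nonce.to_bytes(8, "little")
    return hashlib.sha256(hashlib.sha256(payload).digest()).digest()


def meets_target(digest: bytes, difficulty_bits: int) -> bool:
    """True if the hash, read as a 256-bit integer, has enough leading zero bits."""
    return int.from_bytes(digest, "big") < (1 << (256 - difficulty_bits))


def mine(header: bytes, difficulty_bits: int) -> int:
    """Try nonces in order until the hash meets the target."""
    nonce = 0
    while not meets_target(pow_hash(header, nonce), difficulty_bits):
        nonce += 1
    return nonce
```

Finding the nonce takes on average `2 ** difficulty_bits` attempts, while checking it takes a single hash. That asymmetry is what makes tampering expensive and verification cheap.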

5.2 Block Rewards and Incentives

Miners are incentivized to participate by:

- Block rewards: New bitcoins issued with each block (initially 50 BTC, halved every ~4 years).

- Transaction fees: Paid by users to prioritize their transactions.
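The halving schedule is simple enough to compute directly. The sketch below assumes the protocol's halving interval of 210,000 blocks (roughly four years at ten minutes per block) and works in satoshis (1 BTC = 100,000,000 satoshis); the function names are illustrative.

```python
HALVING_INTERVAL = 210_000            # blocks between halvings
INITIAL_SUBSIDY = 50 * 100_000_000    # 50 BTC expressed in satoshis


def block_subsidy(height: int) -> int:
    """New satoshis issued by the block at the given height."""
    return INITIAL_SUBSIDY >> (height // HALVING_INTERVAL)


def total_supply() -> int:
    """Sum the subsidy over every halving era until it rounds down to zero."""
    total = 0
    era = 0
    while (INITIAL_SUBSIDY >> era) > 0:
        total += HALVING_INTERVAL * (INITIAL_SUBSIDY >> era)
        era += 1
    return total
```

Because each halving rounds down to a whole satoshi, the total issuance ends up slightly below 21 million BTC rather than exactly on it.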

6. Network Consensus and Security

Bitcoin relies on Nakamoto Consensus, which prioritizes the longest chain—the one with the most accumulated proof-of-work.

- In case of competing chains (forks), the network chooses the chain with the most computational effort.

- This mechanism makes rewriting history or creating fraudulent blocks extremely difficult, as it would require control of over 50% of the network's total hash power.
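"Longest" here means most accumulated work, not most blocks. A minimal sketch of that selection rule follows, assuming each block carries a hypothetical `difficulty_bits` field and that expected work per block is `2 ** difficulty_bits`; real nodes derive work from the block's target instead.

```python
def chain_work(chain):
    """Total expected hashing effort for a chain (list of block dicts)."""
    return sum(2 ** block["difficulty_bits"] for block in chain)


def select_chain(chains):
    """Pick the candidate chain with the most accumulated work."""
    return max(chains, key=chain_work)
```

With this rule, a chain of five easy blocks loses to a chain of two much harder ones, which is why an attacker needs a majority of hash power rather than just a large number of blocks.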

7. Transaction Throughput and Fees

Bitcoin’s average block time is 10 minutes, and each block can contain ~1MB of data, resulting in ~3–7 transactions per second.

- During periods of high demand, users compete by offering higher transaction fees to get included faster.

- Solutions like Lightning Network aim to scale transaction speed and lower costs by processing payments off-chain.
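The ~3–7 transactions per second figure follows from simple arithmetic. The sketch below assumes average transaction sizes of 250 and 500 bytes; these are illustrative values, not measurements.

```python
def transactions_per_second(block_bytes=1_000_000, avg_tx_bytes=250, block_interval_s=600):
    """Rough on-chain throughput: transactions per block divided by the block interval."""
    return block_bytes / avg_tx_bytes / block_interval_s


small_tx = transactions_per_second(avg_tx_bytes=250)  # about 6.7 tx/s
large_tx = transactions_per_second(avg_tx_bytes=500)  # about 3.3 tx/s
```

Smaller transactions push throughput toward the top of the range and larger ones toward the bottom.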

8. Monetary Policy and Scarcity

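Bitcoin enforces a fixed supply cap of 21 million coins, making it scarce by design. Strictly speaking, issuance is disinflationary: new supply keeps shrinking with each halving until it stops.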

- This limited supply contrasts with fiat currencies, which can be printed at will by central banks.

- The controlled issuance schedule and halving events contribute to Bitcoin’s store-of-value narrative, similar to digital gold.

9. Consider

Bitcoin integrates advanced cryptographic methodologies, including public-private key pairings and hashing algorithms, to establish a formidable framework of security that underpins its operation as a digital currency. The economic incentives are meticulously structured through mechanisms such as mining rewards and transaction fees, which not only incentivize network participation but also regulate the supply of Bitcoin through a halving schedule intrinsic to its decentralized protocol. This architecture manifests a paradigm wherein individual users can autonomously oversee their financial assets, authenticate transactions through a rigorously constructed consensus algorithm, specifically the Proof of Work mechanism, and engage with a borderless financial ecosystem devoid of traditional intermediaries such as banks. Despite the notable challenges pertaining to transaction throughput scalability and a complex regulatory landscape that intermittently threatens its proliferation, Bitcoin steadfastly persists as an archetype of decentralized trust, heralding a transformative shift in financial paradigms within the contemporary digital milieu.

10. References

- Nakamoto, S. (2008). Bitcoin: A Peer-to-Peer Electronic Cash System.

- Antonopoulos, A. M. (2017). Mastering Bitcoin: Unlocking Digital Cryptocurrencies.

- Bitcoin.org. (n.d.). How Bitcoin Works

-

@ d34e832d:383f78d0

2025-04-24 00:56:03

WebSocket communication is integral to modern real-time web applications, powering everything from chat apps and online gaming to collaborative editing tools and live dashboards. However, its persistent and event-driven nature introduces unique debugging challenges. Traditional browser developer tools provide limited insight into WebSocket message flows, especially in complex, asynchronous applications.

This thesis evaluates the use of Chrome-based browser extensions—specifically those designed to enhance WebSocket debugging—and explores how visual event tracing improves developer experience (DX). By profiling real-world applications and comparing built-in tools with popular WebSocket DevTools extensions, we analyze the impact of visual feedback, message inspection, and timeline tracing on debugging efficiency, code quality, and development speed.

The Idea

As front-end development evolves, WebSockets have become a foundational technology for building reactive user experiences. Debugging WebSocket behavior, however, remains a cumbersome task. Chrome DevTools offers a basic view of WebSocket frames, but lacks features such as message categorization, event correlation, or contextual logging. Developers often resort to `console.log` and custom logging systems, increasing friction and reducing productivity.

This research investigates how browser extensions designed for WebSocket inspection (such as Smart WebSocket Client, WebSocket King Client, and WSDebugger) can enhance debugging workflows. We focus on features that provide visual structure to communication patterns, simplify message replay, and allow for real-time monitoring of state transitions.

Related Work

Chrome DevTools

While Chrome DevTools supports WebSocket inspection under the Network > Frames tab, its utility is limited:

- Messages are displayed in a flat, unstructured stream.

- No built-in timeline or replay mechanism.

- Filtering and contextual debugging features are minimal.

WebSocket-Specific Extensions

Numerous browser extensions aim to fill this gap:

- Smart WebSocket Client: Allows custom message sending, frame inspection, and saved session reuse.

- WSDebugger: Offers structured logging and visualization of message flows.

- WebSocket Monitor: Enables real-time monitoring of multiple connections with UI overlays.

Methodology

Tools Evaluated:

- Chrome DevTools (baseline)

- Smart WebSocket Client

- WSDebugger

- WebSocket King Client

Evaluation Criteria:

- Real-time message monitoring

- UI clarity and UX consistency

- Support for message replay and editing

- Message categorization and filtering

- Timeline-based visualization

Test Applications:

- A collaborative markdown editor

- A multiplayer drawing game (WebSocket over Node.js)

- A lightweight financial dashboard (stock ticker)

Findings

1. Enhanced Visibility

Extensions provide structured visual representations of WebSocket communication:

- Grouped messages by type (e.g., chat, system, control)

- Color-coded frames for quick scanning

- Collapsible and expandable message trees

2. Real-Time Inspection and Replay

- Replaying previous messages with altered payloads accelerates bug reproduction.

- Message history can be annotated, aiding team collaboration during debugging.

3. Timeline-Based Analysis

- Extensions with timeline views help identify latency issues, bottlenecks, and inconsistent message pacing.

- Developers can correlate message sequences with UI events more intuitively.
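The grouping and timeline ideas in these findings can be reproduced on a captured message log. The sketch below assumes frames have been exported as dictionaries with a `ts` timestamp in seconds and a `type` label; both field names and the functions are hypothetical and do not correspond to any particular extension's export format.

```python
from collections import Counter


def categorize(frames):
    """Count frames per message type (e.g. chat, system, control)."""
    return Counter(frame["type"] for frame in frames)


def find_gaps(frames, threshold_s=1.0):
    """Return (previous_ts, next_ts, delta) for every pause longer than the threshold."""
    ordered = sorted(frames, key=lambda frame: frame["ts"])
    gaps = []
    for prev, cur in zip(ordered, ordered[1:]):
        delta = cur["ts"] - prev["ts"]
        if delta > threshold_s:
            gaps.append((prev["ts"], cur["ts"], delta))
    return gaps
```

Long gaps between frames that should arrive at a steady pace are a cheap first signal of latency problems or stalled connections.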

4. Improved Debugging Flow

- Developers report reduced context-switching between source code and devtools.

- Some extensions allow breakpoints or watchers on WebSocket events, mimicking JavaScript debugging.

Consider

Visual debugging extensions represent a key advancement in tooling for real-time application development. By extending Chrome DevTools with features tailored for WebSocket tracing, developers gain actionable insights, faster debugging cycles, and a better understanding of application behavior. Future work should explore native integration of timeline and message tagging features into standard browser DevTools.

Developer Experience and Limitations

Visual tools significantly enhance the developer experience (DX) by reducing friction and offering cognitive support during debugging. Rather than parsing raw JSON blobs manually or tracing asynchronous behavior through logs, developers can rely on intuitive UI affordances such as real-time visualizations, message filtering, and replay features.

However, some limitations remain:

- Lack of binary frame support: Many extensions focus on text-based payloads and may not correctly parse or display binary frames.

- Non-standard encoding issues: Applications using custom serialization formats (e.g., Protocol Buffers, MsgPack) require external decoding tools or browser instrumentation.

- Extension compatibility: Some extensions may conflict with Content Security Policies (CSP) or have limited functionality when debugging production sites served over HTTPS.

- Performance overhead: Real-time visualization and logging can add browser CPU/memory overhead, particularly in high-frequency WebSocket environments.

Despite these drawbacks, the overall impact on debugging efficiency and developer comprehension remains highly positive.

Developer Experience and Limitations

Visual tools significantly enhance the developer experience (DX) by reducing friction and offering cognitive support during debugging. Rather than parsing raw JSON blobs manually or tracing asynchronous behavior through logs, developers can rely on intuitive UI affordances such as live message streams, structured views, and interactive inspection of frames.

However, some limitations exist:

- Security restrictions: Content Security Policy (CSP) and Cross-Origin Resource Sharing (CORS) can restrict browser extensions from accessing WebSocket frames in production environments.

- Binary and custom formats: Extensions may not handle binary frames or non-standard encodings (e.g., Protocol Buffers) without additional tooling.

- Limited protocol awareness: Generic tools may not fully interpret application-specific semantics, requiring context from the developer.

- Performance trade-offs: Logging and rendering large volumes of data can cause UI lag, especially in high-throughput WebSocket apps.

Despite these constraints, DevTools extensions continue to offer valuable insight during development and testing stages.

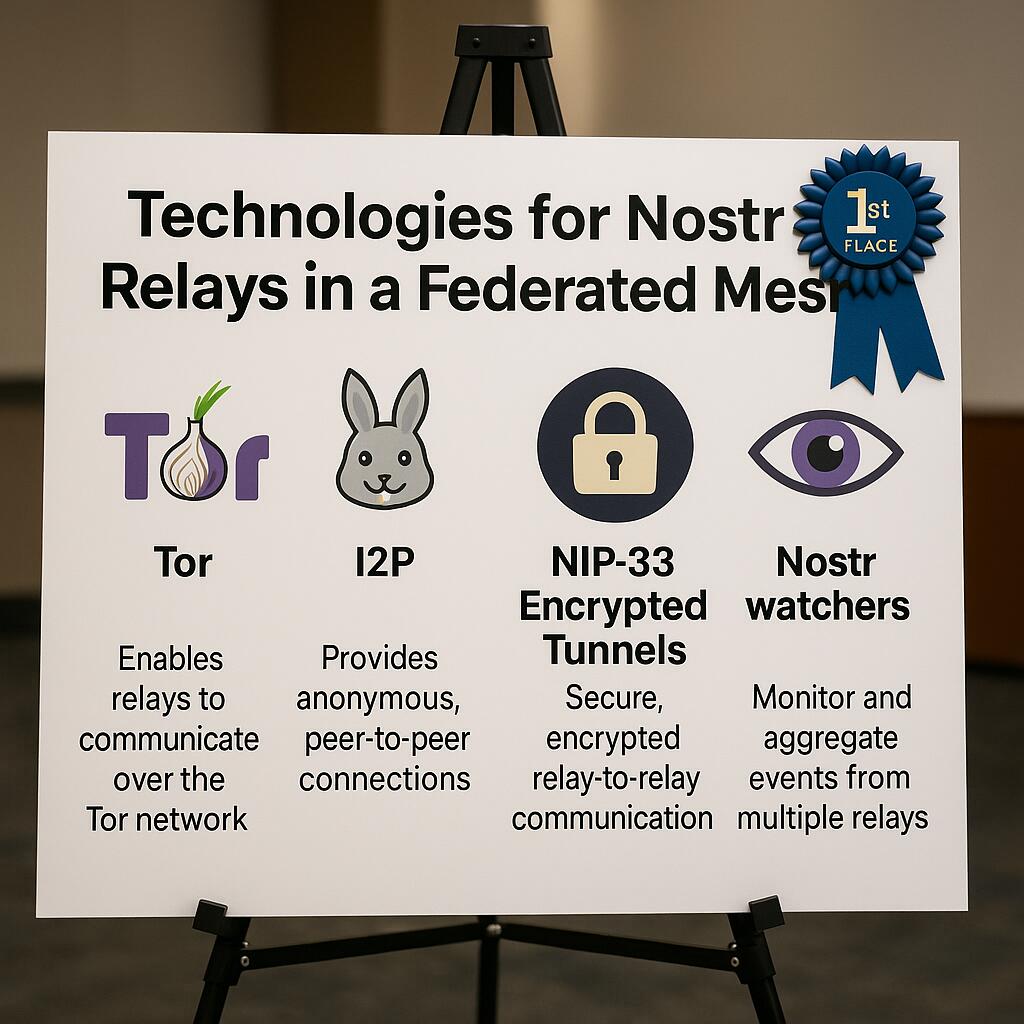

Applying this analysis to relays in the Nostr protocol surfaces some fascinating implications about traffic analysis, developer tooling, and privacy risks, even when data is cryptographically signed. Here's how the concepts relate:

🧠 What This Means for Nostr Relays

1. Traffic Analysis Still Applies

Even though Nostr events are cryptographically signed and, optionally, encrypted (e.g., DMs), relay communication is over plaintext WebSockets or WSS (WebSocket Secure). This means:

- IP addresses, packet size, and timing patterns are all visible to anyone on-path (e.g., ISPs, malicious actors).

- Client behavior can be inferred: Is someone posting, reading, or just idling?

- Frequent "kind" values (like `kind:1` for notes or `kind:4` for encrypted DMs) produce recognizable traffic fingerprints.

🔍 Example:

A pattern like:

- `client → relay`: small frame at intervals of 30s

- `relay → client`: burst of medium frames

…could suggest someone is polling for new posts or using a chat app built on Nostr.

2. DevTools for Nostr Client Devs

For client developers (e.g., building on top of `nostr-tools`), browser DevTools and WebSocket inspection make debugging much easier:

- You can trace real-time Nostr events without writing logging logic.

- You can verify frame integrity, event flow, and relay responses instantly.

- However, DevTools have limits when Nostr apps use:

- Binary payloads (e.g., zlib-compressed events)

- Custom encodings or protocol adaptations (e.g., for mobile)
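When frames are copied out of DevTools, a small helper can summarize them. This sketch assumes plain JSON text frames shaped like the basic Nostr messages (`["EVENT", <event>]` from a client, `["EVENT", <subscription id>, <event>]` from a relay); the function name and the `"out"`/`"in"` direction labels are hypothetical.

```python
import json


def summarize_frame(raw: str, direction: str):
    """Summarize one text frame. direction: "out" (client to relay) or "in" (relay to client)."""
    message = json.loads(raw)
    label = message[0]
    kind = None
    if label == "EVENT":
        # Clients send ["EVENT", event]; relays send ["EVENT", subscription_id, event].
        event = message[1] if direction == "out" else message[2]
        kind = event.get("kind")
    return {
        "direction": direction,
        "type": label,
        "kind": kind,
        "bytes": len(raw.encode("utf-8")),
    }
```

Note that the `bytes` field is exactly the kind of metadata that remains visible to an on-path observer even when the payload itself is encrypted.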

3. Fingerprinting Relays and Clients

- Each relay has its own behavior: how fast it responds, whether it sends OKs, how it deals with malformed events.

- These can be fingerprinted by adversaries to identify which software is being used (e.g., `nostr-rs-relay`, `strfry`, etc.).

- Similarly, client apps often emit predictable `REQ`, `EVENT`, `CLOSE` sequences that can be fingerprinted even over WSS.

4. Privacy Risks

Even if DMs are encrypted:

- Message size and timing can hint at contents ("user is typing", long vs. short message, emoji burst, etc.)

- Public relays might correlate patterns across multiple clients, even without payload access.

- Side-channel analysis becomes viable against high-value targets.

5. Mitigation Strategies in Nostr

Borrowing from TLS and WebSocket security best practices:

| Strategy | Application to Nostr |
|-----------------------------|----------------------------------------------------|
| Padding messages | Normalize `EVENT` size, especially for DMs |
| Batching requests | Send multiple `REQ` subscriptions in one frame |
| Randomize connection times | Avoid predictable connection schedules |
| Use private relays / Tor | Obfuscate source IP and reduce metadata exposure |
| Connection reuse | Avoid per-event relay opens, use persistent WSS |
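Two of these strategies, padding and randomized timing, are easy to sketch. The code below is an illustration under assumptions, not a Nostr standard: the bucket sizes, the 4-byte length prefix, and the function names are all hypothetical, and padding only helps if it is applied to the plaintext before encryption.

```python
import random

BUCKETS = (256, 512, 1024, 2048, 4096)  # hypothetical size classes in bytes


def padded_length(n: int) -> int:
    """Smallest bucket that fits n bytes, or the next multiple of the largest bucket."""
    for bucket in BUCKETS:
        if n <= bucket:
            return bucket
    largest = BUCKETS[-1]
    return -(-n // largest) * largest  # ceiling division


def pad_plaintext(plaintext: bytes) -> bytes:
    """Prefix the real length, then zero-pad up to the bucket size."""
    framed = len(plaintext).to_bytes(4, "big") + plaintext
    return framed.ljust(padded_length(len(framed)), b"\x00")


def unpad_plaintext(padded: bytes) -> bytes:
    """Recover the original message using the length prefix."""
    length = int.from_bytes(padded[:4], "big")
    return padded[4:4 + length]


def jittered_delay(base_s: float, spread: float = 0.5) -> float:
    """Randomize a reconnect or polling delay by up to +/- spread of its base value."""
    return base_s * random.uniform(1 - spread, 1 + spread)
```

With bucketed sizes, a two-byte message and a two-hundred-byte message produce ciphertexts in the same size class, so message length leaks less about content.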

TL;DR for Builders

If you're building on Nostr and care about privacy, WebSocket metadata is a leak. The payload isn't the only thing that matters. Be mindful of event timing, size, and structure, even over encrypted channels.

-

@ df478568:2a951e67

2025-04-23 20:25:03

If you've made one single-sig bitcoin wallet, you've made them all. The idea is, write down 12 or 24 magic words. Make your wallet disappear by dropping your phone in the toilet. Repeat the 12 magic words and do some hocus-pocus. Your sats re-appear from realms unknown. Or... each word represents a 4-digit number from 0000 to 2047. I say it's magic.
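For the non-magical version: numbers from 0 to 2047 mean each word carries 11 bits. A quick sketch of the arithmetic, assuming the standard BIP39 scheme where one checksum bit is added per 32 bits of entropy (the function name is just for illustration):

```python
WORDLIST_SIZE = 2048                                 # indices 0000 to 2047
BITS_PER_WORD = (WORDLIST_SIZE - 1).bit_length()     # 11 bits per word


def mnemonic_bits(words: int):
    """Return (entropy_bits, checksum_bits) for a mnemonic of the given length."""
    total = words * BITS_PER_WORD
    checksum = total // 33   # 1 checksum bit for every 32 entropy bits
    return total - checksum, checksum
```

So 12 words hold 128 bits of entropy plus a 4-bit checksum, and 24 words hold 256 bits plus 8.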

I've recommended many wallets over the years. It's difficult to find the perfect wallet because there are so many with different security tailored for different threat models. You don't need Anchorwatch level of security for 1000 sats. 12 words is good enough. Misty Breez is like Aqua Wallet because the sats get swapped to Liquid in a similar way with a couple differences.

- Misty Breez has no stableshitcoin¹ support.

- Misty Breez gives you a lightning address. Misty Breez Lightning Wallet.

That's a big deal. That's what I need to orange pill the man on the corner selling tamales out of his van. Bitcoin is for everybody, at least anybody who can write 12 words down. A few years ago, almost nobody, not even many bitcoiners had a lightning address. Now Misty Breez makes it easy for anyone with a 5th grade reading level to start using lightning addresses. The tamale guy can send sats back home with as many tariffs as a tweet without leaving his truck.

How Misty Breez Works

Back in the day, I drooled over every word Elizabeth Stark at Lightning Labs uttered. I still believed in shitcoins at the time. Stark said atomic swaps can be made over the lightning network. Litecoin, since it also adopted the lightning network, can be swapped with bitcoin and vice versa. I thought this was a good idea because it solves the coincidence of wants. I could technically have a sign on my website that says, "shitcoin accepted here" and automatically convert all my shitcoins to sats.

I don't do that because I now know there is no reason to think any shitcoin will go up in value over the long term, for various reasons. Technically, cashu is a shitcoin. Technically, Liquid is a shitcoin. Technically, I am not a card-carrying bitcoin maxi because of this. I use these shitcoins because I find them useful. I consider them to be honest shitcoins (term stolen from NVK²).

Breez does ~~atomic swaps~~ peer swaps between bitcoin and Liquid. The sender sends sats. The receiver turns those sats into Liquid Bitcoin (L-BTC). This L-BTC is backed by bitcoin, therefore Liquid is a full reserve bank in many ways. That's why it molds into my ethical framework. I originally became interested in bitcoin because I thought fractional reserve banking was a scam and bitcoin was (and is) the most viable alternative to this scam.

Sats sent to a Misty Breez wallet are pretty secure. It does not offer perfect security. There is no perfect security. Even though on-chain bitcoin is the most pristine example of cybersecurity on the planet, it still has risk. Just ask the guy who is digging up a landfill to find his bitcoin. I have found most noobs lose the keys to the bitcoin you give them. Very few take the time to keep it safe because they don't understand bitcoin well enough to know it will go up forever, Laura.

She writes 12 words down with a reluctant, bored look on her face. Wham. Bam. Thank you, ma'am. Might as well consider it a donation to the network because that index card will be buried in a pile of future trash in no time. Here's a tiny violin playing for the pre-coiners who lost sats.

"Lost coins only make everyone else's coins worth slightly more. Think of it as a donation to everyone." --Satoshi Nakamoto, BitcoinTalk, June 21, 2010

The same thing will happen with the Misty wallet. The 12 words will be written down by some bored and unfulfilled woman working at NPC-Mart, but her phone buzzes in her pocket the next day. She received a new payment. Then you share the address on nostr and five people send her sats for no reason at all. They say everyone requires three touch points. Setting up a pre-coiner with a wallet which has a lightning address will allow you to send her as many touch points as you want. You could even send 21 sats per day for 21 days using Zap Planner. That way bitcoin is not just an "investment," but something people can see in action, like a lion in the jungle chasing a gazelle.

Make Multiple Orange Pill Touch Points With The Misty Breez Lightning Address

It's no longer just a one-night stand. It's a relationship. You can softly send her sats seven days a week like a Rabbit Hole recap listening freak. Show people how to use bitcoin as it was meant to be used: Peer to Peer electronic cash.

Misty wallet is still beta software, so be careful, because lightning is still in the reckless days. Don't risk more sats than you are willing to lose with it just yet, but consider learning how to use it so you can teach others after the wallet is battle tested. I had trouble sending sats to my lightning address today from Phoenix wallet. Hopefully that gets resolved, but I couldn't use it today for whatever reason. I still think it's an awesome idea and will follow this project because I think it has potential.

npub1marc26z8nh3xkj5rcx7ufkatvx6ueqhp5vfw9v5teq26z254renshtf3g0

¹ Stablecoins are shitcoins. I admit they are not totally useless, but the underlying asset is the epitome of money printer go brrrrrr.

² NVK called cashu an honest shitcoin on the Bitcoin.review podcast and I've used the term ever since.

-

@ d34e832d:383f78d0

2025-04-23 20:19:15

A Look into Traffic Analysis and What WebSocket Patterns Reveal at the Network Level

While WebSocket encryption (typically via WSS) is essential for protecting data in transit, traffic analysis remains a potent method of uncovering behavioral patterns, inferring data structures, and identifying protocol usage, even when payloads are unreadable. This study investigates the visibility of encrypted WebSocket communications using Wireshark and similar packet inspection tools. We explore what metadata remains visible, how traffic flow can be modeled, and what risks and opportunities exist for developers, penetration testers, and network analysts. The study concludes by discussing mitigation strategies and the implications for privacy, application security, and protocol design.

Consider

In the age of real-time web applications, WebSockets have emerged as a powerful protocol enabling low-latency, bidirectional communication. From collaborative tools and chat applications to financial trading platforms and IoT dashboards, WebSockets have become foundational for interactive user experiences.

However, encryption via WSS (WebSocket Secure, running over TLS) can give developers and users a false sense of complete security. The payload may be unreadable, but what about the rest of the connection? Can patterns, metadata, and traffic characteristics still leak critical information?

This study seeks to answer those questions by leveraging Wireshark, the de facto tool for packet inspection, and exploring the world of traffic analysis at the network level.

Background and Related Work

The WebSocket Protocol

Defined in RFC 6455, WebSocket operates over TCP and provides a persistent, full-duplex connection. The protocol upgrades an HTTP connection, then communicates through a simple frame-based structure.
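To make the frame-based structure concrete, the following is a minimal sketch of parsing the header of a single RFC 6455 frame. The function name and the returned dictionary layout are choices made for this example. Under WSS these bytes sit inside the TLS record, so an observer cannot read them directly, but the header length still contributes to the observable record size.

```python
import struct

# Opcodes defined in RFC 6455, section 5.2.
OPCODES = {0x0: "continuation", 0x1: "text", 0x2: "binary",
           0x8: "close", 0x9: "ping", 0xA: "pong"}


def parse_frame_header(data: bytes) -> dict:
    """Parse the header of one WebSocket frame found at the start of `data`."""
    if len(data) < 2:
        raise ValueError("need at least 2 bytes")
    b0, b1 = data[0], data[1]
    fin = bool(b0 & 0x80)        # final fragment flag
    opcode = b0 & 0x0F           # frame type
    masked = bool(b1 & 0x80)     # client-to-server frames are masked
    length = b1 & 0x7F           # 7-bit length, or a marker for extended length
    offset = 2
    if length == 126:            # 16-bit extended payload length follows
        (length,) = struct.unpack_from("!H", data, offset)
        offset += 2
    elif length == 127:          # 64-bit extended payload length follows
        (length,) = struct.unpack_from("!Q", data, offset)
        offset += 8
    if masked:
        offset += 4              # skip the 4-byte masking key
    return {
        "fin": fin,
        "opcode": OPCODES.get(opcode, "reserved"),
        "masked": masked,
        "payload_len": length,
        "header_len": offset,
    }
```

For example, `parse_frame_header(b"\x81\x05Hello")` describes an unmasked, final text frame with a 5-byte payload and a 2-byte header.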

Encryption with WSS

WSS connections use TLS (usually on port 443), making them largely indistinguishable from ordinary HTTPS traffic at the packet level. Payloads are encrypted, but metadata such as IP addresses, timing, packet size, and connection duration remains visible.

Traffic Analysis

Traffic analysis—despite encryption—has long been a technique used in network forensics, surveillance, and malware detection. Prior studies have shown that encrypted protocols like HTTPS, TLS, and SSH still reveal behavioral information through patterns.

Methodology

Tools Used:

- Wireshark (latest stable version)

- TLS decryption with local keys (when permitted)

- Simulated and real-world WebSocket apps (chat, games, IoT dashboards)

- Scripts to generate traffic patterns (Python using websockets and aiohttp)

Test Environments:

- Controlled LAN environments with known server and client

- Live observation of open-source WebSocket platforms (e.g., Matrix clients)

Data Points Captured:

- Packet timing and size

- TLS handshake details

- IP/TCP headers

- Frame burst patterns

- Message rate and directionality

Findings

1. Metadata Leaks

Even without payload access, the following data is visible:

- Source/destination IP

- Port numbers (typically 443)

- Server certificate info

- Packet sizes and intervals

- TLS handshake fingerprinting (e.g., JA3 hashes)
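For readers unfamiliar with JA3, the sketch below shows how such a fingerprint is commonly derived from ClientHello fields: the TLS version, cipher suites, extensions, elliptic curves, and point formats are written as decimal values, joined with dashes and commas, and hashed with MD5. The function names are illustrative, and the inputs are assumed to have already been extracted from a capture (for example, by a Wireshark dissector).

```python
import hashlib


def _is_grease(value: int) -> bool:
    """GREASE values (0x0A0A, 0x1A1A, ... 0xFAFA) are excluded from JA3."""
    return (value & 0x0F0F) == 0x0A0A and (value >> 8) == (value & 0xFF)


def ja3_string(version, ciphers, extensions, curves, point_formats) -> str:
    """Build the comma-separated JA3 string from ClientHello fields."""
    groups = (ciphers, extensions, curves, point_formats)
    fields = [str(version)]
    for group in groups:
        fields.append("-".join(str(v) for v in group if not _is_grease(v)))
    return ",".join(fields)


def ja3_hash(version, ciphers, extensions, curves, point_formats) -> str:
    """Return the MD5 hex digest of the JA3 string."""
    text = ja3_string(version, ciphers, extensions, curves, point_formats)
    return hashlib.md5(text.encode("ascii")).hexdigest()
```

Because two clients built on the same TLS library usually send the same ClientHello, the hash tends to identify the software stack rather than the individual user.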

2. Behavioral Patterns

- Chat apps show consistent message frequency and short message sizes.

- Multiplayer games exhibit rapid bursts of small packets.

- IoT devices often maintain idle connections with periodic keepalives.

- Typing indicators, heartbeats, or "ping/pong" mechanisms are visible even under encryption.
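As a hedged illustration of how a heartbeat can stand out, the sketch below groups observed packets by size and reports a size whose arrivals are evenly spaced. It assumes the capture has already been reduced to `(timestamp_seconds, size_bytes)` pairs; the function name and the thresholds are arbitrary choices for this example.

```python
from statistics import mean, pstdev


def detect_heartbeat(packets, max_jitter=0.1, min_count=4):
    """Return (size, period_seconds) for the most frequent evenly spaced size, or None.

    packets: iterable of (timestamp_seconds, size_bytes) tuples.
    max_jitter: allowed ratio of gap standard deviation to mean gap.
    """
    by_size = {}
    for ts, size in packets:
        by_size.setdefault(size, []).append(ts)

    best = None
    for size, times in by_size.items():
        if len(times) < min_count:
            continue
        times.sort()
        gaps = [b - a for a, b in zip(times, times[1:])]
        avg = mean(gaps)
        # A low coefficient of variation means the gaps are nearly constant.
        if avg > 0 and pstdev(gaps) / avg <= max_jitter:
            if best is None or len(times) > best[2]:
                best = (size, avg, len(times))
    return None if best is None else (best[0], best[1])
```

Fed six 92-byte packets spaced 15 seconds apart plus a few unrelated packets, this returns the 92-byte size with a 15-second period, which is the kind of pattern a keepalive produces.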

3. Timing and Packet Size Fingerprinting

Even encrypted payloads can be fingerprinted by:

- Regularity in payload size (e.g., 92 bytes every 15s)

- Distinct bidirectional patterns (e.g., send/ack/send per user action)

- TLS record sizes which may indirectly hint at message length
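To show why record sizes hint at message length, here is a back-of-the-envelope sketch. It assumes TLS 1.3 with an AEAD cipher that uses a 16-byte tag (such as AES-GCM or ChaCha20-Poly1305), no TLS record padding, no WebSocket compression, and exactly one WebSocket frame per record. Under those assumptions the overhead is a small constant, so the payload length can be recovered almost exactly.

```python
# TLS 1.3 record overhead: 5-byte record header, 1-byte inner content type,
# and a 16-byte AEAD tag (assumed cipher; no record padding).
TLS13_OVERHEAD = 5 + 1 + 16


def ws_header_len(payload_len: int, masked: bool) -> int:
    """Size of the RFC 6455 frame header for a given payload length."""
    if payload_len <= 125:
        base = 2
    elif payload_len <= 0xFFFF:
        base = 4
    else:
        base = 10
    return base + (4 if masked else 0)


def estimate_payload_len(record_len: int, client_to_server: bool):
    """Estimate the WebSocket payload length carried in one TLS record.

    record_len: total bytes of the TLS record on the wire, header included.
    client_to_server: True if the frame is masked (sent by the client).
    Returns None if no consistent frame layout exists for that size.
    """
    frame_len = record_len - TLS13_OVERHEAD
    for base in (2, 4, 10):
        header = base + (4 if client_to_server else 0)
        payload = frame_len - header
        # Accept only the layout whose header size matches the payload size.
        if payload >= 0 and ws_header_len(payload, client_to_server) == header:
            return payload
    return None
```

Under these assumptions a 92-byte record sent by a client corresponds to a 64-byte WebSocket payload, which is why padding at the application layer is needed to hide message length.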

Side-Channel Risks in Encrypted WebSocket Communication

Although WebSocket payloads transmitted over WSS (WebSocket Secure) are encrypted, they remain susceptible to side-channel analysis, a class of attacks that exploit observable characteristics of the communication channel rather than its content.

Side-Channel Risks Include:

1. User Behavior Inference

Adversaries can analyze packet timing and frequency to infer user behavior. For example, typing indicators in chat applications often trigger short, regular packets. Even without payload visibility, a passive observer may identify when a user is typing, idle, or has closed the application. Session duration, message frequency, and bursts of activity can be linked to specific user actions.

2. Application Fingerprinting

TLS handshake metadata and consistent traffic patterns can allow an observer to identify specific client libraries or platforms. For example, the sequence and structure of TLS extensions (via JA3 fingerprinting) can differentiate between browsers, SDKs, or WebSocket frameworks. Application behavior, such as timing of keepalives or frequency of updates, can further reinforce these fingerprints.

3. Usage Pattern Recognition

Over time, recurring patterns in packet flow may reveal application logic. For instance, multiplayer game sessions often involve predictable synchronization intervals. Financial dashboards may show bursts at fixed polling intervals. This allows for profiling of application type, logic loops, or even user roles.

4. Leakage Through Timing

Time-based attacks can be surprisingly revealing. Regular intervals between message bursts can disclose structured interactions such as polling, pings, or scheduled updates. Fine-grained timing analysis may even infer when individual keystrokes occur, especially in sparse channels where interactivity is high and payloads are short.

5. Content Length Correlation

While encrypted, the size of a TLS record often correlates closely to the plaintext message length. This enables attackers to estimate the size of messages, which can be linked to known commands or data structures. Repeated message sizes (e.g., 112 bytes every 30s) may suggest state synchronization or batched updates.

6. Session Correlation Across Time

Using IP, JA3 fingerprints, and behavioral metrics, it’s possible to link multiple sessions back to the same client. This weakens anonymity, especially when combined with data from DNS logs, TLS SNI fields (if exposed), or consistent traffic habits. In anonymized systems, this can be particularly damaging.

Side-Channel Risks in Encrypted WebSocket Communication

1. Behavior Inference

Even with end-to-end encryption, adversaries can make educated guesses about user actions based on traffic patterns:

- Typing detection: In chat applications, short, repeated packets every few hundred milliseconds may indicate a user typing.

- Voice activity: In VoIP apps using WebSockets, a series of consistent-size packets followed by silence can reveal when someone starts and stops speaking.

- Gaming actions: Packet bursts at high frequency may correlate with real-time game movement or input actions.
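The typing-detection case above can be sketched as a simple run detector: look for sequences of small packets whose gaps stay under a fraction of a second. The input format, function name, and thresholds below are assumptions for illustration, and a real classifier would need tuning against actual captures.

```python
def find_typing_bursts(packets, max_size=120, max_gap=0.6, min_packets=5):
    """Return (start, end, count) for runs of small, closely spaced packets.

    packets: iterable of (timestamp_seconds, size_bytes) tuples.
    max_size: packets larger than this are ignored as non-typing traffic.
    max_gap: largest gap in seconds allowed inside one run.
    min_packets: shortest run that is reported as a burst.
    """
    bursts = []
    run = []
    for ts, size in sorted(packets):
        if size > max_size:
            continue
        # A long pause ends the current run.
        if run and ts - run[-1] > max_gap:
            if len(run) >= min_packets:
                bursts.append((run[0], run[-1], len(run)))
            run = []
        run.append(ts)
    if len(run) >= min_packets:
        bursts.append((run[0], run[-1], len(run)))
    return bursts
```

Each reported burst gives an observer a start time, an end time, and a packet count, which is enough to tell when someone was likely typing without decrypting anything.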

2. Session Duration