-

@ 9f94e6cc:f3472946

2024-11-21 18:55:12

TikTok, that contest in degeneracy, has young people in its grip and is frying their brains. On Reels, the triumph of the vertical format, and the rules of virality.

Text: Aron Morhoff

Hollywood now fits in your pocket. 70 percent of all YouTube content is watched on mobile devices, that is, smartphones. Instagram and TikTok are the most popular applications among young people. They now exist only as apps, and their design is optimized for mobile phones.

Desktop computers and laptops were once the tools people used to go online. Even when the smartphone began its triumphant rise, viewing habits were still geared to landscape format. Today, computers are used almost exclusively for work. Passive entertainment and consumption have shifted entirely to the iPhones and Samsungs of this world. The phone has learned to walk upright; hardly anyone holds their device horizontally anymore.

Homo Digitalis Erectus

So the world has been turned on its head. The form of a medium influences its content. Marshall McLuhan put it this way: the medium itself is the message. So what, some may think, but from the standpoint of media anthropology this development is well worth a closer look. Landscape format is better suited to depicting landscapes, a room, or a group. Portrait format roughly matches human proportions from the hips to the head. The TikTok dance is thus already built into the design of the smartphone. Portrait format has made the media content of our time even more narcissistic.

It is well known that smartphones lead us to put ourselves on display more openly and with fewer inhibitions. In 2013, Oxford Dictionaries named "selfie" its Word of the Year. Selfie, self-portrait, self-presentation.

What is new is the effort now required just to break through the attention threshold of a media society that has been amused to death. Many of our contemporaries can now be caught doomscrolling in an unsettling state of hypnosis. That is the technical term for zoned-out endless swiping, and it also explains the name "Reel": the term, related to the German word "Rolle", refers to the film reel from which 24 frames per second are swiped onto the projector, or rather unspooled.

A short video can therefore no longer run for more than three seconds without something exciting happening. Otherwise the Reel gets swiped away out of boredom. The world on a dopamine high. For anyone making a video, this now means: be the loudest, shrillest, most unhinged barker in the market. The race for eyeballs forces extreme forms of clickbait.

15 Seconds of Fame

This is now taking on bizarre forms. The video "Look who I found" by Noel Robinson (born 2001) was one of the most successful German TikTok clips last year. It shows the German-Nigerian dancing up to a cartoonishly overweight person. Noel is shoved and falls. Then the song changes, and the fat man moves his wobbly belly to the beat. Noel gets back up, grins, and the two dance together. It lasts 15 seconds. I recommend watching the video once to understand the mechanics of TikTok. Just notice how many stimuli (crowd, dancing up, fall, wobbly belly) you are exposed to in the first five seconds. Who watches this? To date, 220 million people. This is the capitalist logic of exploitation in an already decomposed final stage. Adorno or Fromm would either have delighted in the media zeitgeist or found it a lot to chew on.

Internet and smartphone coverage is now close to 100 percent. The oversupply has changed the rules. To be seen at all, you now have to go viral. What that takes today appeals to the basest human urges: violence, disgust, sexualization, shock. The young adults who set the tone on social networks today internalized these mechanisms long ago. How conscious they are of this is questionable. In 2024, a disastrous state of education, complete with a lack of media literacy, collides with an egomaniacal youth that has never known privacy and has been blasting everything it can capture into the ether since childhood. You do not have to be a cultural pessimist to call this degenerative dynamic frightening, including its implications for how we live together and for the mental well-being of Generation TikTok.

Aron Morhoff studied media ethics and is a graduate of the Freie Akademie für Medien & Journalismus. Previous positions: RT Deutsch and Nuoviso. Today: Stichpunkt Magazin, Manova, Milosz Matuschek, and his live show "Addictive Programming".

-

@ eac63075:b4988b48

2024-11-09 17:57:27

Based on a recent paper written in collaboration with renowned experts such as Lyn Alden, Steve Lee, and Ren Crypto Fish, we discuss in depth how Bitcoin's consensus is built, the main risks, and the complex dynamics of protocol upgrades.

Podcast https://www.fountain.fm/episode/wbjD6ntQuvX5u2G5BccC

Presentation https://gamma.app/docs/Analyzing-Bitcoin-Consensus-Risks-in-Protocol-Upgrades-p66axxjwaa37ksn

1. Introduction to Consensus in Bitcoin

Consensus in Bitcoin is the foundation that keeps the network secure and functional, allowing users worldwide to transact in a decentralized manner without intermediaries. Since its launch in 2009, Bitcoin has often been described as an "immutable" system designed to resist change, and it is precisely this resistance that underpins its security and stability.

The central idea behind consensus in Bitcoin is to create a set of acceptance rules for blocks and transactions, ensuring that all network participants agree on the transaction history. This prevents "double-spending," where the same bitcoin could be used in two simultaneous transactions, something that would compromise trust in the network.
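As a rough illustration of how shared acceptance rules rule out double-spending, the sketch below tracks a set of unspent outputs and rejects any transaction that tries to spend one that is missing or already spent. The names `apply_transaction` and `DoubleSpendError` and the `(txid, index)` tuples are hypothetical, chosen only for this example; real nodes also check signatures, scripts, and amounts.

```python
class DoubleSpendError(Exception):
    """Raised when a transaction spends an output that is not unspent."""


def apply_transaction(utxos: set, inputs: list, outputs: list) -> set:
    """Return a new unspent-output set after spending `inputs` and creating `outputs`.

    Each outpoint is a hypothetical (txid, index) tuple.
    """
    # The same output may not be listed twice within one transaction.
    if len(set(inputs)) != len(inputs):
        raise DoubleSpendError("input listed twice in one transaction")
    # Every input must refer to an output that is still unspent.
    for outpoint in inputs:
        if outpoint not in utxos:
            raise DoubleSpendError(f"{outpoint} is missing or already spent")
    return (utxos - set(inputs)) | set(outputs)


utxos = {("tx0", 0)}
utxos = apply_transaction(utxos, [("tx0", 0)], [("tx1", 0)])  # first spend is accepted
try:
    apply_transaction(utxos, [("tx0", 0)], [("tx2", 0)])      # second spend of tx0:0
except DoubleSpendError as err:
    print("rejected:", err)
```

Because every node applies the same check to the same history, two conflicting spends cannot both end up in the chain that nodes agree on.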

Evolution of Consensus in Bitcoin

Over the years, consensus in Bitcoin has undergone several adaptations, and the way participants agree on changes remains a delicate process. Unlike traditional systems, where changes can be imposed from the top down, Bitcoin operates in a decentralized model where any significant change needs the support of various groups of stakeholders, including miners, developers, users, and large node operators.

Moreover, the update process is extremely cautious, as hasty changes can compromise the network's security. As a result, the philosophy of "don't fix what isn't broken" prevails, with improvements happening incrementally and only after broad consensus among those involved. This model can make progress seem slow but ensures that Bitcoin remains faithful to the principles of security and decentralization.

2. Technical Components of Consensus

Bitcoin's consensus is supported by a set of technical rules that determine what is considered a valid transaction and a valid block on the network. These technical aspects ensure that all nodes—the computers that participate in the Bitcoin network—agree on the current state of the blockchain. Below are the main technical components that form the basis of the consensus.

Validation of Blocks and Transactions

The validation of blocks and transactions is the central point of consensus in Bitcoin. A block is only considered valid if it meets certain criteria, such as maximum size, transaction structure, and the solving of the "Proof of Work" problem. The proof of work, required for a block to be included in the blockchain, is a computational process that ensures the block contains significant computational effort—protecting the network against manipulation attempts.

Transactions, in turn, need to follow specific input and output rules. Each transaction includes cryptographic signatures that prove the ownership of the bitcoins sent, as well as validation scripts that verify if the transaction conditions are met. This validation system is essential for network nodes to autonomously confirm that each transaction follows the rules.
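The proof-of-work condition can be sketched in a few lines. This is a toy model under stated assumptions: the "header" here is an arbitrary byte string plus a 4-byte nonce rather than Bitcoin's real 80-byte header, and `mine` and `meets_target` are names invented for this example. What it does share with Bitcoin is the double SHA-256 hash and the rule that the hash, read as a little-endian integer, must not exceed the target.

```python
import hashlib


def double_sha256(data: bytes) -> bytes:
    """Apply SHA-256 twice, as Bitcoin does for block hashes."""
    return hashlib.sha256(hashlib.sha256(data).digest()).digest()


def meets_target(header: bytes, target: int) -> bool:
    """A header is valid work if its hash, as a little-endian integer, is <= target."""
    return int.from_bytes(double_sha256(header), "little") <= target


def mine(prefix: bytes, target: int, max_nonce: int = 1_000_000):
    """Try nonces until the toy header meets the target; return the nonce or None."""
    for nonce in range(max_nonce):
        if meets_target(prefix + nonce.to_bytes(4, "little"), target):
            return nonce
    return None


easy_target = 1 << 248  # roughly 1 in 256 hashes qualifies
nonce = mine(b"toy-header", easy_target)
print("found nonce:", nonce)
```

Lowering the target makes qualifying hashes rarer, which is why a valid block is evidence of computational effort that any node can verify with a single hash.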

Chain Selection

Another fundamental technical issue for Bitcoin's consensus is chain selection, which becomes especially important when multiple versions of the blockchain coexist, such as after a network split (fork). To decide which chain is the "true" one and should be followed, the network adopts the criterion of the highest accumulated proof of work. In other words, the network treats as official the valid chain that represents the greatest total computational effort, which is not necessarily the chain with the most blocks.

This criterion avoids permanent splits because it encourages all nodes to follow the same main chain, reinforcing consensus.
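A minimal sketch of this selection rule, assuming each block is represented only by its difficulty target: the expected work for one block is approximated as 2^256 / (target + 1), and a chain's weight is the sum over its blocks. The chain names and targets below are made up for illustration.

```python
def block_work(target: int) -> int:
    """Expected number of hashes needed to find a block at this target."""
    return (1 << 256) // (target + 1)


def chain_work(targets) -> int:
    """Accumulated work of a chain, given the target of each of its blocks."""
    return sum(block_work(t) for t in targets)


def best_chain(chains: dict) -> str:
    """Return the name of the chain with the most accumulated work."""
    return max(chains, key=lambda name: chain_work(chains[name]))


chains = {
    "long_but_easy": [1 << 240] * 3,   # three blocks at an easier target
    "short_but_hard": [1 << 238] * 2,  # two blocks, each about 4x harder
}
print(best_chain(chains))
```

In this example the two-block chain wins, because its blocks represent more total hashing effort than the three easier blocks.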

Soft Forks vs. Hard Forks

In the consensus process, protocol changes can happen in two ways: through soft forks or hard forks. These variations affect not only the protocol update but also the implications for network users:

- Soft Forks: These are changes that are backward compatible. Only nodes that adopt the new update will follow the new rules, but old nodes will still recognize the blocks produced with these rules as valid. This compatibility makes soft forks a safer option for updates, as it minimizes the risk of network division.

- Hard Forks: These are updates that are not backward compatible, requiring all nodes to update to the new version or risk being separated from the main chain. Hard forks can result in the creation of a new coin, as occurred with the split between Bitcoin and Bitcoin Cash in 2017. While hard forks allow for deeper changes, they also bring significant risks of network fragmentation.

These technical components form the base of Bitcoin's security and resilience, allowing the system to remain functional and immutable without losing the necessary flexibility to evolve over time.
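The difference between the two fork types can be illustrated with a single toy rule. The sketch assumes the only consensus rule is a block size limit, and all three limits are hypothetical numbers picked for the example: a soft fork tightens the rule, so its blocks still pass the old check, while a hard fork loosens it, so some of its blocks fail the old check.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Block:
    size: int  # block size in bytes


OLD_LIMIT = 1_000_000        # hypothetical rule enforced by non-upgraded nodes
SOFT_FORK_LIMIT = 500_000    # hypothetical tighter rule (soft fork)
HARD_FORK_LIMIT = 8_000_000  # hypothetical looser rule (hard fork)


def valid_old(block: Block) -> bool:
    return block.size <= OLD_LIMIT


def valid_soft(block: Block) -> bool:
    return block.size <= SOFT_FORK_LIMIT


def valid_hard(block: Block) -> bool:
    return block.size <= HARD_FORK_LIMIT


soft_block = Block(size=400_000)
hard_block = Block(size=2_000_000)
print(valid_soft(soft_block), valid_old(soft_block))  # old nodes accept soft-fork blocks
print(valid_hard(hard_block), valid_old(hard_block))  # old nodes reject this hard-fork block
```

Every block valid under the tighter rule is also valid under the old one, which is what backward compatibility means here; the reverse does not hold for the looser rule, and that is where a split can begin.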

3. Stakeholders in Bitcoin's Consensus

Consensus in Bitcoin is not decided centrally. On the contrary, it depends on the interaction between different groups of stakeholders, each with their motivations, interests, and levels of influence. These groups play fundamental roles in how changes are implemented or rejected on the network. Below, we explore the six main stakeholders in Bitcoin's consensus.

1. Economic Nodes

Economic nodes, usually operated by exchanges, custody providers, and large companies that accept Bitcoin, exert significant influence over consensus. Because they handle large volumes of transactions and act as a connection point between the Bitcoin ecosystem and the traditional financial system, these nodes have the power to validate or reject blocks and to define which version of the software to follow in case of a fork.

Their influence is proportional to the volume of transactions they handle, and they can directly affect which chain will be seen as the main one. Their incentive is to maintain the network's stability and security to preserve its functionality and meet regulatory requirements.

2. Investors

Investors, including large institutional funds and individual Bitcoin holders, influence consensus indirectly through their impact on the asset's price. Their buying and selling actions can affect Bitcoin's value, which in turn influences the motivation of miners and other stakeholders to continue investing in the network's security and development.

Some institutional investors have agreements with custodians that may limit their ability to act in network split situations. Thus, the impact of each investor on consensus can vary based on their ownership structure and how quickly they can react to a network change.

3. Media Influencers

Media influencers, including journalists, analysts, and popular personalities on social media, have a powerful role in shaping public opinion about Bitcoin and possible updates. These influencers can help educate the public, promote debates, and bring transparency to the consensus process.

On the other hand, the impact of influencers can be double-edged: while they can clarify complex topics, they can also distort perceptions by amplifying or minimizing change proposals. This makes them a force both of support and resistance to consensus.

4. Miners

Miners are responsible for validating transactions and including blocks in the blockchain. Through computational power (hashrate), they also exert significant influence over consensus decisions. In update processes, miners often signal their support for a proposal, indicating that the new version is safe to use. However, this signaling is not always definitive, and miners can change their position if they deem it necessary.

Their incentive is to maximize returns from block rewards and transaction fees, as well as to protect the value of their investment in specialized equipment, which is only profitable if the network remains stable.

5. Protocol Developers

Protocol developers, often called "Core Developers," are responsible for writing and maintaining Bitcoin's code. Although they do not have direct power over consensus, they possess an informal veto power since they decide which changes are included in the main client (Bitcoin Core). This group also serves as an important source of technical knowledge, helping guide decisions and inform other stakeholders.

Their incentive lies in the continuous improvement of the network, ensuring security and decentralization. Many developers are funded by grants and sponsorships, but their motivations generally include a strong ideological commitment to Bitcoin's principles.

6. Users and Application Developers

This group includes people who use Bitcoin in their daily transactions and developers who build solutions based on the network, such as wallets, exchanges, and payment platforms. Although their power in consensus is less than that of miners or economic nodes, they play an important role because they are responsible for popularizing Bitcoin's use and expanding the ecosystem.

If application developers decide not to adopt an update, this can affect compatibility and widespread acceptance. Thus, they indirectly influence consensus by deciding which version of the protocol to follow in their applications.

These stakeholders are vital to the consensus process, and each group exerts influence according to their involvement, incentives, and ability to act in situations of change. Understanding the role of each makes it clearer how consensus is formed and why it is so difficult to make significant changes to Bitcoin.

4. Mechanisms for Activating Updates in Bitcoin

For Bitcoin to evolve without compromising security and consensus, different mechanisms for activating updates have been developed over the years. These mechanisms help coordinate changes among network nodes to minimize the risk of fragmentation and ensure that updates are implemented in an orderly manner. Here, we explore some of the main methods used in Bitcoin, their advantages and disadvantages, as well as historical examples of significant updates.

Flag Day

The Flag Day mechanism is one of the simplest forms of activating changes. In it, a specific date or block is determined as the activation moment, and all nodes must be updated by that point. This method does not involve prior signaling; participants simply need to update to the new software version by the established day or block.

- Advantages: Simplicity and predictability are the main benefits of Flag Day, as everyone knows the exact activation date.

- Disadvantages: Inflexibility can be a problem because there is no way to adjust the schedule if a significant part of the network has not updated. This can result in network splits if a significant number of nodes are not ready for the update.

An example of Flag Day was the Pay to Script Hash (P2SH) update in 2012, which required all nodes to adopt the change to avoid compatibility issues.
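As a sketch of how little logic a flag day needs, the function below switches a new rule on at a fixed block height. The names and the height value are hypothetical, and the activation point could just as well be a timestamp.

```python
def rule_active(height: int, flag_height: int) -> bool:
    """The new rule applies to every block at or after the flag height."""
    return height >= flag_height


def block_valid(height: int, follows_new_rule: bool, flag_height: int) -> bool:
    """Before the flag height anything goes; afterwards the new rule is mandatory."""
    return follows_new_rule or not rule_active(height, flag_height)


FLAG_HEIGHT = 200_000  # hypothetical activation height
print(block_valid(199_999, follows_new_rule=False, flag_height=FLAG_HEIGHT))
print(block_valid(200_000, follows_new_rule=False, flag_height=FLAG_HEIGHT))
```

There is no signaling input at all, which is the source of both the predictability and the inflexibility described above.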

BIP34 and BIP9

BIP34 introduced a more dynamic process, in which miners increase the version number in block headers to signal the update. When a predetermined percentage of the last blocks is mined with this new version, the update is automatically activated. This model later evolved with BIP9, which allowed multiple updates to be signaled simultaneously through "version bits," each corresponding to a specific change.

- Advantages: Allows the network to activate updates gradually, giving more time for participants to adapt.

- Disadvantages: These methods rely heavily on miner support, which means that if a sufficient number of miners do not signal the update, it can be delayed or not implemented.

BIP9 was used in the activation of SegWit (BIP141) but faced challenges because some miners did not signal their intent to activate, leading to the development of new mechanisms.
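The version-bits idea can be sketched as a simple count over one retarget period. Under BIP9, a block signals only if the top three bits of its version are `001` and the bit assigned to the proposal is set; on mainnet, lock-in required 1,916 of 2,016 blocks (95%). The helper names and the sample data below are assumptions for illustration, and the full BIP9 state machine (start time, timeout, activation delay) is omitted.

```python
TOP_MASK = 0xE0000000  # selects the top three bits of the version field
TOP_BITS = 0x20000000  # BIP9 requires those bits to be 001


def signals(version: int, bit: int) -> bool:
    """True if this block version signals readiness for the deployment on `bit`."""
    return (version & TOP_MASK) == TOP_BITS and (version >> bit) & 1 == 1


def locked_in(versions, bit: int, threshold: int = 1916) -> bool:
    """True if enough blocks in the period signal for the deployment."""
    return sum(signals(v, bit) for v in versions) >= threshold


# Hypothetical period: 1,916 signaling blocks and 100 non-signaling blocks.
period = [0x20000002] * 1916 + [0x20000000] * 100
print(locked_in(period, bit=1))
```

Because each proposal has its own bit, several deployments can be signaled in the same block version without interfering with one another.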

User Activated Soft Forks (UASF) and User Resisted Soft Forks (URSF)

To increase the decision-making power of ordinary users, the concept of User Activated Soft Fork (UASF) was introduced, allowing node operators, not just miners, to determine consensus for a change. In this model, nodes set a date to start rejecting blocks that are not in compliance with the new update, forcing miners to adapt or risk having their blocks rejected by the network.

URSF, in turn, is a model where nodes reject blocks that attempt to adopt a specific update, functioning as resistance against proposed changes.

- Advantages: UASF returns decision-making power to node operators, ensuring that changes do not depend solely on miners.

- Disadvantages: Both UASF and URSF can generate network splits, especially in cases of strong opposition among different stakeholders.

An example of UASF was the activation of SegWit in 2017, where users supported activation independently of miner signaling, which ended up forcing its adoption.
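A rough sketch of the node-side rule behind a UASF: after a chosen enforcement time, the node rejects any block that does not signal for the update. The function name `uasf_accepts`, the timestamps, and the use of a plain block time (rather than whatever clock a real proposal specifies) are simplifying assumptions made for this example.

```python
TOP_MASK = 0xE0000000  # selects the top three bits of the version field
TOP_BITS = 0x20000000  # version-bits signaling requires those bits to be 001


def signals(version: int, bit: int) -> bool:
    """True if this block version signals for the deployment on `bit`."""
    return (version & TOP_MASK) == TOP_BITS and (version >> bit) & 1 == 1


def uasf_accepts(version: int, block_time: int, enforce_from: int, bit: int) -> bool:
    """Before enforcement every block passes; afterwards only signaling blocks do."""
    if block_time < enforce_from:
        return True
    return signals(version, bit)


ENFORCE_FROM = 1_700_000_000  # hypothetical Unix timestamp
print(uasf_accepts(0x20000000, ENFORCE_FROM - 1, ENFORCE_FROM, bit=1))
print(uasf_accepts(0x20000000, ENFORCE_FROM, ENFORCE_FROM, bit=1))
print(uasf_accepts(0x20000002, ENFORCE_FROM, ENFORCE_FROM, bit=1))
```

A URSF node would apply the mirror image of this rule, rejecting blocks that do signal for the update it opposes.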

BIP8 (LOT=True)

BIP8 is an evolution of BIP9, designed to prevent miners from indefinitely blocking a change desired by the majority of users and developers. BIP8 allows setting a parameter called "lockinontimeout" (LOT) as true, which means that if the update has not been fully signaled by a certain point, it is automatically activated.

- Advantages: Ensures that changes with broad support among users are not blocked by miners who wish to maintain the status quo.

- Disadvantages: Can lead to network splits if miners or other important stakeholders do not support the update.

Although BIP8 with LOT=True has not yet been used in Bitcoin, it is a proposal that can be applied in future updates if necessary.
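The effect of the LOT parameter can be shown with a heavily simplified state transition, evaluated once per signaling period. This sketch keeps only three states and leaves out parts of the actual BIP8 proposal, such as the mandatory signaling phase before the timeout; the function name, threshold, and heights are hypothetical.

```python
from enum import Enum


class State(Enum):
    STARTED = "started"
    LOCKED_IN = "locked_in"
    FAILED = "failed"


def next_state(state: State, signal_count: int, height: int,
               timeout_height: int, lot: bool, threshold: int) -> State:
    """Advance the simplified deployment state at the end of a signaling period."""
    if state is not State.STARTED:
        return state                      # LOCKED_IN and FAILED are final here
    if signal_count >= threshold:
        return State.LOCKED_IN            # miners signaled in sufficient numbers
    if height >= timeout_height:
        # At timeout, LOT=True forces lock-in; LOT=False lets the proposal fail.
        return State.LOCKED_IN if lot else State.FAILED
    return State.STARTED


# Same weak signaling at timeout, different LOT settings.
print(next_state(State.STARTED, 500, 10_000, 10_000, lot=True, threshold=1815))
print(next_state(State.STARTED, 500, 10_000, 10_000, lot=False, threshold=1815))
```

With identical miner behavior the outcome differs only through `lot`, which is exactly why LOT=True removes the miners' ability to stall a change and also why it raises the risk of a split.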

These activation mechanisms have been essential for Bitcoin's development, allowing updates that keep the network secure and functional. Each method brings its own advantages and challenges, but all share the goal of preserving consensus and network cohesion.

5. Risks and Considerations in Consensus Updates

Consensus updates in Bitcoin are complex processes that involve not only technical aspects but also political, economic, and social considerations. Due to the network's decentralized nature, each change brings with it a set of risks that need to be carefully assessed. Below, we explore some of the main challenges and future scenarios, as well as the possible impacts on stakeholders.

Network Fragility with Alternative Implementations

One of the main risks associated with consensus updates is the possibility of network fragmentation when there are alternative software implementations. If an update is implemented by a significant group of nodes but rejected by others, a network split (fork) can occur. This creates two competing chains, each with a different version of the transaction history, leading to unpredictable consequences for users and investors.

Such fragmentation weakens Bitcoin because, by dividing hashing power (computing) and coin value, it reduces network security and investor confidence. A notable example of this risk was the fork that gave rise to Bitcoin Cash in 2017 when disagreements over block size resulted in a new chain and a new asset.

Chain Splits and Impact on Stakeholders

Chain splits are a significant risk in update processes, especially in hard forks. During a hard fork, the network is split into two separate chains, each with its own set of rules. This results in the creation of a new coin and leaves users with duplicated assets on both chains. While this may seem advantageous, in the long run, these splits weaken the network and create uncertainties for investors.

Each group of stakeholders reacts differently to a chain split:

- Institutional Investors and ETFs: Face regulatory and compliance challenges because many of these assets are managed under strict regulations. The creation of a new coin requires decisions to be made quickly to avoid potential losses, which may be hampered by regulatory constraints.

- Miners: May be incentivized to shift their computing power to the chain that offers higher profitability, which can weaken one of the networks.

- Economic Nodes: Such as major exchanges and custody providers, have to quickly choose which chain to support, influencing the perceived value of each network.

Such divisions can generate uncertainties and loss of value, especially for institutional investors and those who use Bitcoin as a store of value.

Regulatory Impacts and Institutional Investors

With the growing presence of institutional investors in Bitcoin, consensus changes face new compliance challenges. Bitcoin ETFs, for example, are required to follow strict rules about which assets they can include and how chain split events should be handled. The creation of a new asset or migration to a new chain can complicate these processes, creating pressure for large financial players to quickly choose a chain, affecting the stability of consensus.

Moreover, decisions regarding forks can influence the Bitcoin futures and derivatives market, affecting perception and adoption by new investors. Therefore, the need to avoid splits and maintain cohesion is crucial to attract and preserve the confidence of these investors.

Security Considerations in Soft Forks and Hard Forks

While soft forks are generally preferred in Bitcoin for their backward compatibility, they are not without risks. Soft forks can create different classes of nodes on the network (updated and non-updated), which increases operational complexity and can ultimately weaken consensus cohesion. In a network scenario with fragmentation of node classes, Bitcoin's security can be affected, as some nodes may lose part of the visibility over updated transactions or rules.

In hard forks, the security risk is even more evident because all nodes need to adopt the new update to avoid network division. Experience shows that abrupt changes can create temporary vulnerabilities, in which malicious agents try to exploit the transition to attack the network.

Bounty Claim Risks and Attack Scenarios

Another risk in consensus updates is the so-called "bounty claim": accumulated rewards that an attacker can obtain by splitting or deceiving part of the network. In a conflict scenario, a group of miners or nodes could be incentivized to support a new update or create an alternative version of the software to benefit from these rewards.

These risks require stakeholders to carefully assess each update and the potential vulnerabilities it may introduce. The possibility of "bounty claims" adds a layer of complexity to consensus because each interest group may see a financial opportunity in a change that, in the long term, may harm network stability.

The risks discussed above show the complexity of consensus in Bitcoin and the importance of approaching it gradually and deliberately. Updates need to consider not only technical aspects but also economic and social implications, in order to preserve Bitcoin's integrity and maintain trust among stakeholders.

6. Recommendations for the Consensus Process in Bitcoin

To ensure that protocol changes in Bitcoin are implemented safely and with broad support, it is essential that all stakeholders adopt a careful and coordinated approach. Here are strategic recommendations for evaluating, supporting, or rejecting consensus updates, considering the risks and challenges discussed earlier, along with best practices for successful implementation.

1. Careful Evaluation of Proposal Maturity

Stakeholders should rigorously assess the maturity level of a proposal before supporting its implementation. Updates that are still experimental or lack a robust technical foundation can expose the network to unnecessary risks. Ideally, change proposals should go through an extensive testing phase, have security audits, and receive review and feedback from various developers and experts.

2. Extensive Testing in Secure and Compatible Networks

Before an update is activated on the mainnet, it is essential to test it on networks like testnet and signet, and whenever possible, on other compatible networks that offer a safe and controlled environment to identify potential issues. Testing on networks like Litecoin was fundamental for the safe launch of innovations like SegWit and the Lightning Network, allowing functionalities to be validated on a lower-impact network before being implemented on Bitcoin.

The Liquid Network, developed by Blockstream, also plays an important role as an experimental network for new proposals, such as OP_CAT. By adopting these testing environments, stakeholders can mitigate risks and ensure that the update is reliable and secure before being adopted by the main network.

3. Importance of Stakeholder Engagement

The success of a consensus update strongly depends on the active participation of all stakeholders. This includes economic nodes, miners, protocol developers, investors, and end users. Lack of participation can lead to inadequate decisions or even future network splits, which would compromise Bitcoin's security and stability.

4. Key Questions for Evaluating Consensus Proposals

To assist in decision-making, each group of stakeholders should consider some key questions before supporting a consensus change:

- Does the proposal offer tangible benefits for Bitcoin's security, scalability, or usability?

- Does it maintain backward compatibility or introduce the risk of network split?

- Are the implementation requirements clear and feasible for each group involved?

- Are there clear and aligned incentives for all stakeholder groups to accept the change?

5. Coordination and Timing in Implementations

Timing is crucial. Updates with short activation windows can force a split because not all nodes and miners can update simultaneously. Changes should be planned with ample deadlines to allow all stakeholders to adjust their systems, avoiding surprises that could lead to fragmentation.

Mechanisms like soft forks are generally preferable to hard forks because they allow a smoother transition. Opting for backward-compatible updates when possible facilitates the process and ensures that nodes and miners can adapt without pressure.

6. Continuous Monitoring and Re-evaluation

After an update, it's essential to monitor the network to identify problems or side effects. This continuous process helps ensure cohesion and trust among all participants, keeping Bitcoin as a secure and robust network.

These recommendations, including the use of secure networks for extensive testing, promote a collaborative and secure environment for Bitcoin's consensus process. By adopting a deliberate and strategic approach, stakeholders can preserve Bitcoin's value as a decentralized and censorship-resistant network.

7. Conclusion

Consensus in Bitcoin is more than a set of rules; it's the foundation that sustains the network as a decentralized, secure, and reliable system. Unlike centralized systems, where decisions can be made quickly, Bitcoin requires a much more deliberate and cooperative approach, where the interests of miners, economic nodes, developers, investors, and users must be considered and harmonized. This governance model may seem slow, but it is fundamental to preserving the resilience and trust that make Bitcoin a global, censorship-resistant store of value.

Consensus updates in Bitcoin must balance the need for innovation with the preservation of the network's core principles. The development process of a proposal needs to be detailed and rigorous, going through several testing stages, such as in testnet, signet, and compatible networks like Litecoin and Liquid Network. These networks offer safe environments for proposals to be analyzed and improved before being launched on the main network.

Each proposed change must be carefully evaluated regarding its maturity, impact, backward compatibility, and support among stakeholders. The recommended key questions and appropriate timing are critical to ensure that an update is adopted without compromising network cohesion. It's also essential that the implementation process is continuously monitored and re-evaluated, allowing adjustments as necessary and minimizing the risk of instability.

By following these guidelines, Bitcoin's stakeholders can ensure that the network continues to evolve safely and robustly, maintaining user trust and further solidifying its role as one of the most resilient and innovative digital assets in the world. Ultimately, consensus in Bitcoin is not just a technical issue but a reflection of its community and the values it represents: security, decentralization, and resilience.

8. Links

Whitepaper: https://github.com/bitcoin-cap/bcap

Youtube (pt-br): https://www.youtube.com/watch?v=rARycAibl9o&list=PL-qnhF0qlSPkfhorqsREuIu4UTbF0h4zb

-

@ 42342239:1d80db24

2024-12-19 15:26:01

In the spring, European Commission President Ursula von der Leyen announced that she wanted to create a "European Democracy Shield" to protect the EU from foreign interference. Von der Leyen's Democracy Shield is currently in the planning phase. The stated intention is to create a "dedicated structure for countering foreign information manipulation and interference". Although it is being touted as an instrument for protecting democracy, some suspect that it is in fact a disguised attempt to suppress dissenting opinions. The EU's Digital Services Act (DSA), adopted last year, is closely linked to this shield. Under the DSA, large social media platforms such as Elon Musk's X risk substantial fines if they fail to comply with EU bureaucrats' demands for censorship and moderation.

Note: This text is also available in English at substack.com. Many thanks to stroger1@iris.to for the German translation.

In stark contrast, incoming US President Donald Trump has emerged as a clear advocate of free speech and a staunch opponent of censorship. He has already been banned from YouTube, yet has declared that he wants to "shatter the left-wing censorship regime and reclaim the right to free speech for all Americans". He has also asserted: "If we don't have free speech, then we just don't have a free country."

His incoming vice president, J.D. Vance, has even suggested that he would be willing to make US military aid conditional on respect for free speech in the European NATO countries. Vance's statement came after EU Internal Market Commissioner Thierry Breton sent a controversial letter to Musk ahead of his planned conversation with Trump. Today this looks like an unwise move, not least because it can be read as an attempt to influence the US election, something that paradoxically contradicts the stated purpose of von der Leyen's Democracy Shield (that is, combating foreign manipulation).

If NATO wants us to keep supporting it, and NATO wants us to keep being a good member of this military alliance, then why don't you respect American values and free speech?

- J.D. Vance

In the EU, advocates of free speech are less common in public life. In Germany, Vice Chancellor Robert Habeck recently stated that he is "not at all happy about what is happening there [on X] ... since Elon Musk took over", and wants stricter regulation of social media. The home of a German pensioner was recently searched by police after he published an image of Habeck with a derogatory comment. German police are also pursuing another account holder who called a minister "overweight". This not-at-all-overweight minister recently banned a newspaper aligned with the Alternative for Germany (AfD), Germany's second-largest party according to opinion polls. It is a party that 113 German parliamentarians now want to ban officially.

The US election result leaves many questions unanswered. Will the White House turn its attention to the EU's more restrictive stance, which can be seen as undermining free speech? Or do Musk's X and China's TikTok instead face an EU ban? Can EU countries still count on military support from the US? And if large American platforms are banned, where should EU citizens turn instead? Apart from Russian alternatives, there are no large European platforms. And if the question of free speech is reconsidered, what does that mean for the future of parties such as Germany's AfD?

-

@ eac63075:b4988b48

2024-10-26 22:14:19

The future of physical money is at stake, and the discussion about DREX, the new digital currency planned by the Central Bank of Brazil, is gaining momentum. In a candid and intense conversation, Federal Deputy Julia Zanatta (PL/SC) discussed the challenges and risks of this digital transition, also addressing her Bill No. 3,341/2024, which aims to prevent the extinction of physical currency. This bill emerges as a direct response to legislative initiatives seeking to replace physical money with digital alternatives, limiting citizens' options and potentially compromising individual freedom. Let's delve into the main points of this conversation.

https://www.fountain.fm/episode/i5YGJ9Ors3PkqAIMvNQ0

What is a CBDC?

Before discussing the specifics of DREX, it’s important to understand what a CBDC (Central Bank Digital Currency) is. CBDCs are digital currencies issued by central banks, similar to a digital version of physical money. Unlike cryptocurrencies such as Bitcoin, which operate in a decentralized manner, CBDCs are centralized and regulated by the government. In other words, they are digital currencies created and controlled by the Central Bank, intended to replace physical currency.

A prominent feature of CBDCs is their programmability. This means that the government can theoretically set rules about how, where, and for what this currency can be used. This aspect enables a level of control over citizens' finances that is impossible with physical money. By programming the currency, the government could limit transactions by setting geographical or usage restrictions. In practice, money within a CBDC could be restricted to specific spending or authorized for use in a defined geographical area.

In countries like China, where citizens' actions and attitudes are also monitored, a person considered to have a "low score" due to a moral or ideological violation may have their transactions limited to essential purchases, blocking their use of digital currency for non-essential activities. This financial control is strengthened because, unlike physical money, digital currency cannot be exchanged anonymously.

Practical Example: The Case of DREX During the Pandemic

To illustrate how DREX could be used, Eric Altafim, a director at Banco Itaú, offered an example. He suggested that, if DREX had existed during the COVID-19 pandemic, the government could have restricted the currency's use to a 5-kilometer radius around a person's residence, limiting their economic mobility. Another use proposed by the executive related to the Bolsa Família welfare program: the government could program the benefit so that it can be spent only on food. Although these examples are presented as control measures for safety or organization, they demonstrate how much a CBDC could restrict citizens' freedom of choice.
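To make the idea of "programmable" money concrete, here is a minimal Python sketch of the two restrictions mentioned above: a spending radius around a home address and a limit to food purchases. It is purely illustrative. The names `WalletPolicy`, `Payment`, and `authorize` are hypothetical and do not describe how DREX or any real CBDC is implemented.

```python
import math
from dataclasses import dataclass
from typing import FrozenSet, Optional, Tuple

EARTH_RADIUS_KM = 6371.0


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two coordinates, in kilometers."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


@dataclass
class Payment:
    amount: float
    merchant_category: str
    merchant_lat: float
    merchant_lon: float


@dataclass
class WalletPolicy:
    """Hypothetical rules an issuer could attach to a wallet."""
    home_lat: float
    home_lon: float
    max_radius_km: Optional[float] = None                # None means no geographic limit
    allowed_categories: Optional[FrozenSet[str]] = None  # None means any category


def authorize(policy: WalletPolicy, payment: Payment) -> Tuple[bool, str]:
    """Return (approved, reason) for a payment under the given policy."""
    if policy.allowed_categories is not None:
        if payment.merchant_category not in policy.allowed_categories:
            return False, "category not allowed"
    if policy.max_radius_km is not None:
        distance = haversine_km(policy.home_lat, policy.home_lon,
                                payment.merchant_lat, payment.merchant_lon)
        if distance > policy.max_radius_km:
            return False, "outside permitted radius"
    return True, "ok"


# Example: a 5 km radius combined with a food-only rule.
policy = WalletPolicy(-23.55, -46.63, max_radius_km=5.0,
                      allowed_categories=frozenset({"food"}))
print(authorize(policy, Payment(50.0, "food", -23.56, -46.63)))
print(authorize(policy, Payment(50.0, "food", -22.55, -46.63)))
```

The point of the sketch is that the check runs inside the payment system itself, so the holder cannot opt out of it the way they can with cash.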

To illustrate the potential for state control through a Central Bank Digital Currency (CBDC), such as DREX, it is helpful to look at the example of China. In China, the implementation of a CBDC coincides with the country’s Social Credit System, a governmental surveillance tool that assesses citizens' and companies' behavior. Together, these technologies allow the Chinese government to monitor, reward, and, above all, punish behavior deemed inappropriate or threatening to the government.

How Does China's Social Credit System Work?

Implemented in 2014, China's Social Credit System assigns every citizen and company a "score" based on various factors, including financial behavior, criminal record, social interactions, and even online activities. This score determines the benefits or penalties each individual receives and can affect everything from access to public transport to obtaining loans and enrolling their children in elite schools. Citizens with low scores may face various sanctions, including travel restrictions, fines, and difficulty in securing loans.

With the adoption of the CBDC — or “digital yuan” — the Chinese government now has a new tool to closely monitor citizens' financial transactions, facilitating the application of Social Credit System penalties. China’s CBDC is a programmable digital currency, which means that the government can restrict how, when, and where the money can be spent. Through this level of control, digital currency becomes a powerful mechanism for influencing citizens' behavior.

Imagine, for instance, a citizen who repeatedly posts critical remarks about the government on social media or participates in protests. If the Social Credit System assigns this citizen a low score, the Chinese government could, through the CBDC, restrict their money usage in certain areas or sectors. For example, they could be prevented from buying tickets to travel to other regions, prohibited from purchasing certain consumer goods, or even restricted to making transactions only at stores near their home.

Another example of how the government can use the CBDC to enforce the Social Credit System is by monitoring purchases of products such as alcohol or luxury items. If a citizen uses the CBDC to spend more than the government deems reasonable on such products, this could negatively impact their social score, resulting in additional penalties such as future purchase restrictions or a lowered rating that impacts their personal and professional lives.

In China, this kind of control has already been demonstrated in several cases. Citizens added to Social Credit System “blacklists” have seen their spending and investment capacity severely limited. The combination of digital currency and social scores thus creates a sophisticated and invasive surveillance system, through which the Chinese government controls important aspects of citizens’ financial lives and individual freedoms.

Deputy Julia Zanatta views these examples with great concern. She argues that if the state has full control over digital money, citizens will be exposed to a level of economic control and surveillance never seen before. In a democracy, this control poses a risk, but in an authoritarian regime, it could be used as a powerful tool of repression.

DREX and Bill No. 3,341/2024

Julia Zanatta became aware of a bill by a Workers' Party (PT) deputy (Bill 4068/2020 by Deputy Reginaldo Lopes - PT/MG) that proposes the extinction of physical money within five years, aiming for a complete transition to DREX, the digital currency developed by the Central Bank of Brazil. Concerned about the impact of this measure, Julia drafted her bill, PL No. 3,341/2024, which prohibits the elimination of physical money, ensuring citizens the right to choose physical currency.

“The more I read about DREX, the less I want its implementation,” says the deputy. DREX is a Central Bank Digital Currency (CBDC), similar to other state digital currencies worldwide, but which, according to Julia, carries extreme control risks. She points out that with DREX, the State could closely monitor each citizen’s transactions, eliminating anonymity and potentially restricting freedom of choice. This control would lie in the hands of the Central Bank, which could, in a crisis or government change, “freeze balances or even delete funds directly from user accounts.”

Risks and Individual Freedom

Julia raises concerns about potential abuses of power that complete digitalization could allow. In a democracy, state control over personal finances raises serious questions, and EddieOz warns of an even more problematic future. “Today we are in a democracy, but tomorrow, with a government transition, we don't know if this kind of power will be used properly or abused,” he states. In other words, DREX gives the State the ability to restrict or condition the use of money, opening the door to unprecedented financial surveillance.

EddieOz cites Nigeria as an example, where a CBDC was implemented, and the government imposed severe restrictions on the use of physical money to encourage the use of digital currency, leading to protests and clashes in the country. In practice, the poorest and unbanked — those without regular access to banking services — were harshly affected, as without physical money, many cannot conduct basic transactions. Julia highlights that in Brazil, this situation would be even more severe, given the large number of unbanked individuals and the extent of rural areas where access to technology is limited.

The Relationship Between DREX and Pix

The digital transition has already begun with Pix, which revolutionized instant transfers and payments in Brazil. However, Julia points out that Pix, though popular, is a citizen’s choice, while DREX tends to eliminate that choice. The deputy expresses concern about new rules suggested for Pix, such as daily transaction limits of a thousand reais, justified as anti-fraud measures but which, in her view, represent additional control and a profit opportunity for banks. “How many more rules will banks create to profit from us?” asks Julia, noting that DREX could further enhance control over personal finances.

International Precedents and Resistance to CBDC

The deputy also cites examples from other countries resisting the idea of a centralized digital currency. In the United States, states like New Hampshire have passed laws to prevent the advance of CBDCs, and leaders such as Donald Trump have opposed creating a national digital currency. Trump, addressing the topic, uses a justification similar to Julia’s: in a digitalized system, “with one click, your money could disappear.” She agrees with the warning, emphasizing the control risk that a CBDC represents, especially for countries with disadvantaged populations.

Besides the United States, Canada, Colombia, and Australia have also suspended studies on digital currencies, citing the need for further discussions on population impacts. However, in Brazil, the debate on DREX is still limited, with few parliamentarians and political leaders openly discussing the topic. According to Julia, only she and one or two deputies are truly trying to bring this discussion to the Chamber, making DREX’s advance even more concerning.

Bill No. 3,341/2024 and Popular Pressure

For Julia, her bill is a first step. Although she acknowledges that the ideal would be to prevent DREX's implementation entirely, PL 3341/2024 is a measure to ensure citizens' choice to use physical money, preserving a form of individual freedom. “If the future means control, I prefer to live in the past,” Julia asserts, reinforcing that the fight for freedom is at the heart of her bill.

However, the deputy emphasizes that none of this will be possible without popular mobilization. According to her, popular pressure is crucial for other deputies to take notice and support PL 3341. “I am only one deputy, and we need the public’s support to raise the project’s visibility,” she explains, encouraging the public to press other parliamentarians and ask them to “pay attention to PL 3341 and the project that prohibits the end of physical money.” The deputy believes that with a strong awareness and pressure movement, it is possible to advance the debate and ensure Brazilians’ financial freedom.

What’s at Stake?

Julia Zanatta leaves no doubt: DREX represents a profound shift in how money will be used and controlled in Brazil. More than a simple modernization of the financial system, the Central Bank's CBDC opens the door to an unprecedented level of citizen surveillance and control in the country. For the deputy, this transition needs to be debated broadly and transparently, and it's up to the Brazilian people to defend their rights and demand that the National Congress discuss these changes responsibly.

The deputy also emphasizes that, regardless of political or partisan views, this issue affects all Brazilians. “This agenda is something that will affect everyone. We need to be united to ensure people understand the gravity of what could happen.” Julia believes that by sharing information and generating open debate, it is possible to prevent Brazil from following the path of countries that have already implemented a digital currency in an authoritarian way.

A Call to Action

The future of physical money in Brazil is at risk. For those who share Deputy Julia Zanatta’s concerns, the time to act is now. Mobilize, get informed, and press your representatives. PL 3341/2024 is an opportunity to ensure that Brazilian citizens have a choice in how to use their money, without excessive state interference or surveillance.

In the end, as the deputy puts it, the central issue is freedom. “My fear is that this project will pass, and people won’t even understand what is happening.” Therefore, may every citizen at least have the chance to understand what’s at stake and make their voice heard in defense of a Brazil where individual freedom and privacy are respected values.

-

@ 42342239:1d80db24

2024-12-19 09:00:14

Germany, the EU's largest economy, is once again forced to bear the label "Europe's sick man". The economic news makes for dismal reading. Industrial production has been trending downward for a long time. Energy-intensive production has decreased by as much as 20% in just a few years. Volkswagen is closing factories. Thyssenkrupp is laying off employees, and more than three million pensioners are at risk of poverty according to a study.

Germany is facing a number of major challenges, including high energy costs and increased competition from China. In 2000, Germany accounted for 8% of global industrial production, but by 2030, its share is expected to have fallen to 3%. In comparison, China accounted for 2% of global industrial production in 2000, but is expected to account for nearly half (45%) of industrial production in a few years. This is according to a report from the UN's Industrial Development Organization.

Germany's electricity prices are five times higher than China's, a situation that poses a significant obstacle to maintaining a competitive position. The three main reasons are the phase-out of nuclear power, the sabotage of Nord Stream, and the ongoing energy transition, also known as Energiewende. Upon closer inspection, it is clear that industrial production has been trending downward since the transition to a greener economy began to be prioritized.

Germany's former defense minister, EU Commission President von der Leyen, called the European Green Deal Europe's "man on the moon" moment in 2019. This year, she has announced an increased focus on these green goals.

However, the EU as a whole has fallen behind the US year after year. European companies have significantly higher energy costs than their American competitors, with electricity prices 2-3 times higher and natural gas prices 4-5 times higher.

The Environmental Kuznets Curve is a model that examines the relationship between economic growth and environmental impact. The idea is that increased material prosperity initially leads to more environmental degradation, but after a certain threshold is passed, there is a gradual decrease. Decreased material prosperity can thus, according to the relationship, lead to increased environmental degradation, for example due to changed consumption habits (fewer organic products in the shopping basket).

This year's elections have brought a historic change: all incumbent government parties in the Western world have seen their popularity decline. The pattern looks set to repeat itself in Germany next year, where Chancellor Olaf Scholz is struggling with low confidence figures ahead of the election in February, which doesn't seem surprising. Adjusted for inflation, German wages are several percent lower than a few years ago, especially in the manufacturing industry.

Germany is still a very rich country, but the current trend towards deindustrialization and falling wages can have consequences for environmental priorities in the long run. Economic difficulties can lead to environmental issues being downgraded. Perhaps the declining support for incumbent government parties is a first sign? Somewhere along the road to poverty, people will rise up in revolt.

-

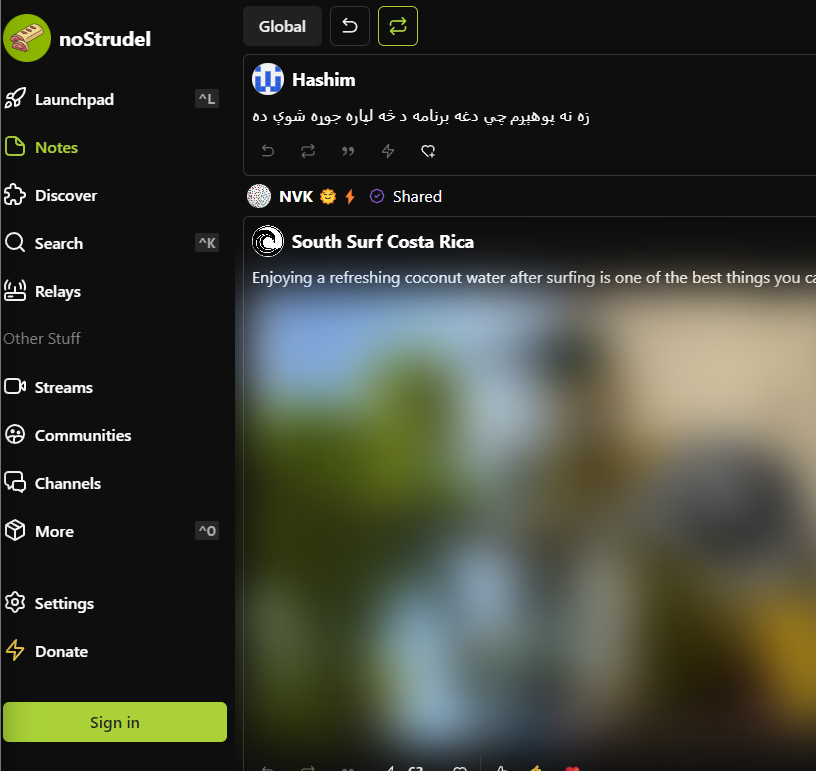

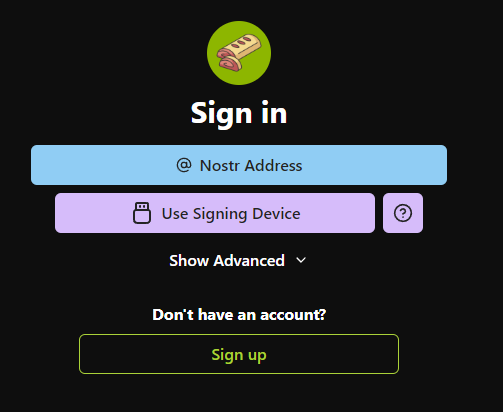

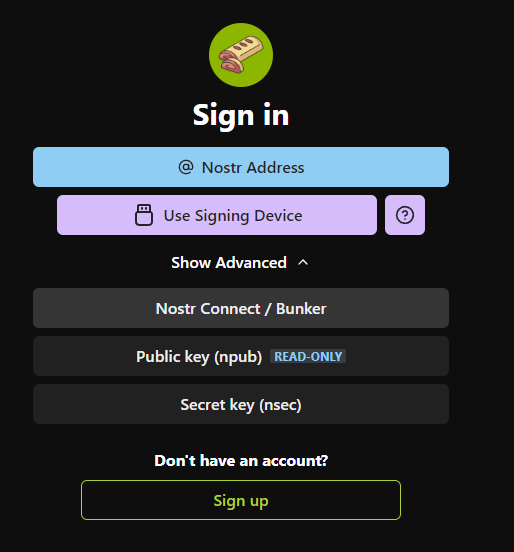

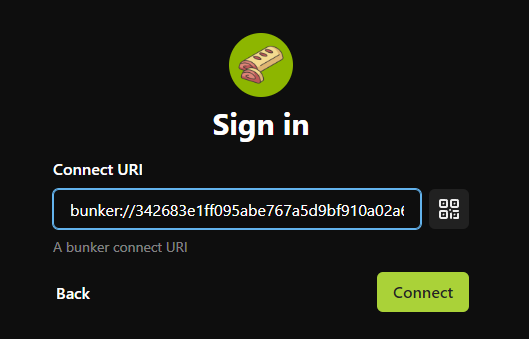

@ ee11a5df:b76c4e49

2024-12-16 05:29:30

Nostr 2?

Breaking Changes in Nostr

Nostr was a huge leap forward. But it isn't perfect.

When developers notice a problem with nostr, they confer with each other to work out a solution to the problem. This is usually in the form of a NIP PR on the nips repo.

Some problems are easy. Just add something new and optional. No biggie. Zaps, git stuff, bunkers... just dream it up and add it.

Other problems can only be fixed by breaking changes. With a breaking change, the overall path forward is like this: Add the new way of doing it while preserving the old way. Push the major software to switch to the new way. Then deprecate the old way. This is a simplification, but it is the basic idea. It is how we solved markers/quotes/root and how we are upgrading encryption, among other things.

This process of pushing through a breaking change becomes more difficult as we have more and more existing nostr software out there that will break. Most of the time what happens is that the major software is driven to make the change (usually by nostr:npub180cvv07tjdrrgpa0j7j7tmnyl2yr6yr7l8j4s3evf6u64th6gkwsyjh6w6), and the smaller software is left to fend for itself. A while back I introduced the BREAKING.md file to help people developing smaller lesser-known software keep up with these changes.

Big Ideas

But some ideas just can't be applied to nostr. The idea is too big. The change is too breaking. It changes something fundamental. And nobody has come up with a smooth path to move from the old way to the new way.

And so we debate a bunch of things, and never settle on anything, and eventually nostr:npub180cvv07tjdrrgpa0j7j7tmnyl2yr6yr7l8j4s3evf6u64th6gkwsyjh6w6 makes a post saying that we don't really want it anyways 😉.

As we encounter good ideas that are hard to apply to nostr, I've been filing them away in a repository I call "nostr-next", so we don't forget about them, in case we ever wanted to start over.

It seems to me that starting over every time we encountered such a thing would be unwise. However, once we collect enough changes that we couldn't reasonably phase into nostr, then a tipping point is crossed where it becomes worthwhile to start over. In terms of the "bang for the buck" metaphor, the bang becomes bigger and bigger but the buck (the pain and cost of starting over) doesn't grow as rapidly.

WHAT? Start over?

IMHO starting over could be very bad if done in a cavalier way. The community could fracture. The new protocol could fail to take off due to lacking the network effect. The odds that a new protocol catches on are low, irrespective of how technically superior it could be.

So the big question is: can we preserve the nostr community and its network effect while making a major step-change to the protocol and software?

I don't know the answer to that one, but I have an idea about it.

I think the new-protocol clients can be dual-stack, creating events in both systems and linking those events together via tags. The nostr key identity would still be used, and the new system identity too. This is better than things like the mostr bridge because each user would remain in custody of their own keys.
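To picture what dual-stack posting might look like, here is a rough Python sketch. The nostr id follows the NIP-01 recipe (SHA-256 over the compact JSON array of the event fields); everything about the new system is made up for illustration, including `new_system_id`, the record layout, and the `x-linked` tag name. Signing is left out because it needs a library outside the standard library.

```python
import hashlib
import json


def nostr_event_id(pubkey: str, created_at: int, kind: int, tags: list, content: str) -> str:
    """NIP-01 style id: SHA-256 over the compact JSON array of the event fields."""
    payload = json.dumps(
        [0, pubkey, created_at, kind, tags, content],
        separators=(",", ":"),
        ensure_ascii=False,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def new_system_id(author_key: str, created_at: int, content: str) -> str:
    """Hypothetical id for the new protocol; a real design would define its own."""
    data = f"{author_key}|{created_at}|{content}".encode("utf-8")
    return hashlib.sha256(data).hexdigest()


def dual_stack_post(nostr_pubkey: str, new_key: str, created_at: int, content: str):
    """Create one record per system and link the nostr event to the new record."""
    # The new-system record is created first, because the nostr id covers the
    # tags: the tag holding the link must exist before the nostr id is computed.
    new_id = new_system_id(new_key, created_at, content)
    new_record = {
        "id": new_id,
        "author": new_key,
        "nostr_pubkey": nostr_pubkey,  # points back at the nostr identity
        "created_at": created_at,
        "content": content,
    }
    tags = [["x-linked", new_id]]  # hypothetical tag name
    nostr_event = {
        "id": nostr_event_id(nostr_pubkey, created_at, 1, tags, content),
        "pubkey": nostr_pubkey,
        "created_at": created_at,
        "kind": 1,
        "tags": tags,
        "content": content,
    }
    return nostr_event, new_record


event, record = dual_stack_post("a" * 64, "b" * 64, 1700000000, "hello from both stacks")
print(event["tags"], record["id"])
```

One thing the sketch surfaces: since each id is a hash over the record's contents, two records cannot both embed each other's id. The link has to be one-directional by id, with the reverse direction carried by the key rather than the event id.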

The nitty gritty

Here are some of the things I think would make nostr better, but which nostr can't easily fix. A lot of these ideas have been mentioned before by multiple people and I didn't give credit to all of you (sorry) because my brain can't track it all. But I've been collecting these over time at https://github.com/mikedilger/nostr-next

- Events as CBOR or BEVE or MsgPack or a fixed-schema binary layout... anything but JSON text with its parsing, its encoding ambiguity, its requirement to copy fields before hashing them, its lack of size constraints. (me, nostr:npub1xtscya34g58tk0z605fvr788k263gsu6cy9x0mhnm87echrgufzsevkk5s)

- EdDSA ed25519 keys instead of secp256k1, to enable interoperability with a bunch of other stuff like ssh, pgp, TLS, Mainline DHT, and many more, plus just being better cryptography (me, Nuh, Orlovsky, except Orlovsky wanted Ristretto25519 for speed)

- Bootstrapping relay lists (and relay endpoints) from Mainline DHT (nostr:npub1jvxvaufrwtwj79s90n79fuxmm9pntk94rd8zwderdvqv4dcclnvs9s7yqz)

- Master keys and revocable subkeys / device keys (including having your nostr key as a subkey)

- Encryption to use different encryption-specific subkeys and ephemeral ones from the sender.

- Relay keypairs, TLS without certificates, relays known by keypair instead of URL

- Layered protocol (separate core from applications)

- Software remembering when they first saw an event, for 2 reasons, the main one being revocation (don't trust the date in the event, trust when you first saw it), the second being more precise time range queries.

- Upgrade/feature negotiation (HTTP headers prior to starting websockets)

- IDs starting with a timestamp so they are temporally adjacent (significantly better database performance) (Vitor's idea)

- Filters that allow boolean expressions on tag values, and also ID exclusions. Removing IDs from filters and moving to a GET command.

- Changing the transport (I'm against this but I know others want to)
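As a small illustration of one item on this list, here is a Python sketch of IDs that start with a timestamp. The layout (an 8-byte big-endian timestamp followed by 24 bytes of SHA-256) is an assumption made for the example, not the format of any existing spec. It only shows why such IDs end up temporally adjacent when sorted as raw bytes, which is what helps an index keyed by ID.

```python
import hashlib
import struct


def timestamped_id(created_at: int, body: bytes) -> bytes:
    """32-byte id: 8-byte big-endian timestamp, then 24 bytes of SHA-256(body)."""
    return struct.pack(">Q", created_at) + hashlib.sha256(body).digest()[:24]


def id_timestamp(event_id: bytes) -> int:
    """Recover the timestamp prefix from an id."""
    return struct.unpack(">Q", event_id[:8])[0]


# Events arriving out of order...
events = [(300, b"third"), (100, b"first"), (200, b"second")]
ids = [timestamped_id(ts, body) for ts, body in events]

# ...sort into chronological order by plain byte comparison of their ids.
for event_id in sorted(ids):
    print(id_timestamp(event_id), event_id.hex())
```

With purely hash-based IDs, consecutive events land at effectively random positions in the key space. With a timestamp prefix, a time-range query becomes a contiguous key-range scan.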

What would it look like?

Someone (Steve Farroll) has taken my nostr-next repo and turned it into a proposed protocol he calls Mosaic. I think it is quite representative of that repo, as it already includes most of those suggestions, so I've been contributing to it. Mosaic spec is rendered here.

Of course, as Mosaic stands right now, it is mostly my ideas (and Steve's); it doesn't have feedback or input from other nostr developers yet. That is what this blog post is about. I think it is time for other nostr devs to be made aware of this thing.

It is currently in the massive breaking changes phase. It might not look that way because of the detail and refinement of the documentation, but indeed everything is changing rapidly. It probably has some bad ideas and is probably missing some great ideas that you have.

Which is why this is a good time for other devs to start taking a look at it.

It is also the time to debate meta issues like "are you crazy Mike?" or "no we have to just break nostr but keep it nostr, we can't dual-stack" or whatever.

Personally I think mosaic-spec should develop and grow for quite a while before the "tipping point" happens. That is, I'm not sure we should jump in feet first yet, but rather we build up and refine this new protocol and spend a lot of time thinking about how to migrate smoothly, and break it a lot while nobody is using it.

So you can just reply to this, or DM me, or open issues or PRs at Mosaic, or just whisper to each other while giving me the evil eye.

-

@ eac63075:b4988b48

2024-10-21 08:11:11

Imagine sending a private message to a friend, only to learn that authorities could be scanning its contents without your knowledge. This isn't a scene from a dystopian novel but a potential reality under the European Union's proposed "Chat Control" measures. Aimed at combating serious crimes like child exploitation and terrorism, these proposals could significantly impact the privacy of everyday internet users. As encrypted messaging services become the norm for personal and professional communication, understanding Chat Control is essential. This article delves into what Chat Control entails, why it's being considered, and how it could affect your right to private communication.

https://www.fountain.fm/episode/coOFsst7r7mO1EP1kSzV

https://open.spotify.com/episode/0IZ6kMExfxFm4FHg5DAWT8?si=e139033865e045de

Sections:

- Introduction

- What Is Chat Control?

- Why Is the EU Pushing for Chat Control?

- The Privacy Concerns and Risks

- The Technical Debate: Encryption and Backdoors

- Global Reactions and the Debate in Europe

- Possible Consequences for Messaging Services

- What Happens Next? The Future of Chat Control

- Conclusion

What Is Chat Control?

"Chat Control" refers to a set of proposed measures by the European Union aimed at monitoring and scanning private communications on messaging platforms. The primary goal is to detect and prevent the spread of illegal content, such as child sexual abuse material (CSAM) and to combat terrorism. While the intention is to enhance security and protect vulnerable populations, these proposals have raised significant privacy concerns.

At its core, Chat Control would require messaging services to implement automated scanning technologies that can analyze the content of messages—even those that are end-to-end encrypted. This means that the private messages you send to friends, family, or colleagues could be subject to inspection by algorithms designed to detect prohibited content.

Origins of the Proposal

The initiative for Chat Control emerged from the EU's desire to strengthen its digital security infrastructure. High-profile cases of online abuse and the use of encrypted platforms by criminal organizations have prompted lawmakers to consider more invasive surveillance tactics. The European Commission has been exploring legislation that would make it mandatory for service providers to monitor communications on their platforms.

How Messaging Services Work

Many privacy-focused communication services, such as Signal, Session, SimpleX, Veilid, Proton Mail and Tutanota (among others), use end-to-end encryption (E2EE). This encryption ensures that only the sender and the recipient can read the messages being exchanged. Not even the service providers can access the content. This level of security is crucial for maintaining privacy in digital communications, protecting users from hackers, identity thieves, and other malicious actors.

Key Elements of Chat Control

- Automated Content Scanning: Service providers would use algorithms to scan messages for illegal content.

- Circumvention of Encryption: To scan encrypted messages, providers might need to alter their encryption methods, potentially weakening security.

- Mandatory Reporting: If illegal content is detected, providers would be required to report it to authorities.

- Broad Applicability: The measures could apply to all messaging services operating within the EU, affecting both European companies and international platforms.
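The tension between automated scanning and encryption can be shown with a short Python sketch. It assumes the simplest possible scanner, an exact SHA-256 match against a blocklist, and uses a toy XOR cipher as a stand-in for real end-to-end encryption (it is not secure and is there only to produce ciphertext). The blocklist entry and function names are hypothetical, and actual proposals discuss more elaborate matching techniques.

```python
import hashlib


def digest(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


# Hypothetical blocklist of digests of known prohibited files.
BLOCKLIST = {digest(b"known-prohibited-sample")}


def scan(payload: bytes) -> bool:
    """Return True if the payload's digest appears on the blocklist."""
    return digest(payload) in BLOCKLIST


def toy_encrypt(payload: bytes, key: bytes) -> bytes:
    """XOR placeholder for encryption. NOT secure; applying it twice decrypts."""
    return bytes(b ^ key[i % len(key)] for i, b in enumerate(payload))


message = b"known-prohibited-sample"
key = b"secret"  # known only to sender and recipient
ciphertext = toy_encrypt(message, key)

print("scan on sender's device, before encryption:", scan(message))
print("scan on provider's server, after encryption:", scan(ciphertext))
```

A provider that only ever sees ciphertext has nothing meaningful to match against. That is why scanning end-to-end encrypted messages implies either running the scanner on the user's device before encryption or changing the encryption so the provider can read the content.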

Why It Matters

Understanding Chat Control is essential because it represents a significant shift in how digital privacy is handled. While combating illegal activities online is crucial, the methods proposed could set a precedent for mass surveillance and the erosion of privacy rights. Everyday users who rely on encrypted messaging for personal and professional communication might find their conversations are no longer as private as they once thought.

Why Is the EU Pushing for Chat Control?

The European Union's push for Chat Control stems from a pressing concern to protect its citizens, particularly children, from online exploitation and criminal activities. With the digital landscape becoming increasingly integral to daily life, the EU aims to strengthen its ability to combat serious crimes facilitated through online platforms.

Protecting Children and Preventing Crime

One of the primary motivations behind Chat Control is the prevention of child sexual abuse material (CSAM) circulating on the internet. Law enforcement agencies have reported a significant increase in the sharing of illegal content through private messaging services. By implementing Chat Control, the EU believes it can more effectively identify and stop perpetrators, rescue victims, and deter future crimes.

Terrorism is another critical concern. Encrypted messaging apps can be used by terrorist groups to plan and coordinate attacks without detection. The EU argues that accessing these communications could be vital in preventing such threats and ensuring public safety.

Legal Context and Legislative Drivers

The push for Chat Control is rooted in several legislative initiatives:

- ePrivacy Directive: This directive regulates the processing of personal data and the protection of privacy in electronic communications. The EU is considering amendments that would allow for the scanning of private messages under specific circumstances.

- Temporary Derogation: In 2021, the EU adopted a temporary regulation permitting voluntary detection of CSAM by communication services. The current proposals aim to make such measures mandatory and more comprehensive.

- Regulation Proposals: The European Commission has proposed regulations that would require service providers to detect, report, and remove illegal content proactively. This would include the use of technologies to scan private communications.

Balancing Security and Privacy

EU officials argue that the proposed measures are a necessary response to evolving digital threats. They emphasize the importance of staying ahead of criminals who exploit technology to harm others. By implementing Chat Control, they believe law enforcement can be more effective without entirely dismantling privacy protections.

However, the EU also acknowledges the need to balance security with fundamental rights. The proposals include provisions intended to limit the scope of surveillance, such as:

- Targeted Scanning: Focusing on specific threats rather than broad, indiscriminate monitoring.

- Judicial Oversight: Requiring court orders or oversight for accessing private communications.

- Data Protection Safeguards: Implementing measures to ensure that data collected is handled securely and deleted when no longer needed.

The Urgency Behind the Push

High-profile cases of online abuse and terrorism have heightened the sense of urgency among EU policymakers. Reports of increasing online grooming and the widespread distribution of illegal content have prompted calls for immediate action. The EU posits that without measures like Chat Control, these problems will continue to escalate unchecked.

Criticism and Controversy

Despite the stated intentions, the push for Chat Control has been met with significant criticism. Opponents argue that the measures could be ineffective against savvy criminals who can find alternative ways to communicate. There is also concern that such surveillance could be misused or extended beyond its original purpose.

The Privacy Concerns and Risks

While the intentions behind Chat Control focus on enhancing security and protecting vulnerable groups, the proposed measures raise significant privacy concerns. Critics argue that implementing such surveillance could infringe on fundamental rights and set a dangerous precedent for mass monitoring of private communications.

Infringement on Privacy Rights

At the heart of the debate is the right to privacy. Scanning private messages, even with automated tools, compromises the confidentiality of personal communications. Users may no longer feel secure sharing sensitive information, fearing that their messages could be intercepted or misinterpreted by algorithms.

Erosion of End-to-End Encryption

End-to-end encryption (E2EE) is a cornerstone of digital security, ensuring that only the sender and recipient can read the messages exchanged. Chat Control could necessitate introducing "backdoors" or weakening encryption protocols, making it easier for unauthorized parties to access private data. This not only affects individual privacy but also exposes communications to potential cyber threats.

Concerns from Privacy Advocates

Providers of encrypted communication services, such as the messenger Signal and the email service Tutanota, have voiced strong opposition to Chat Control. They warn that undermining encryption could have far-reaching consequences:

- Security Risks: Weakening encryption makes systems more vulnerable to hacking, espionage, and cybercrime.

- Global Implications: Changes in EU regulations could influence policies worldwide, leading to a broader erosion of digital privacy.

- Ineffectiveness Against Crime: Determined criminals might resort to other, less detectable means of communication, rendering the measures ineffective while still compromising the privacy of law-abiding citizens.

Potential for Government Overreach

There is a fear that Chat Control could lead to increased surveillance beyond its original scope. Once the infrastructure for scanning private messages is in place, it could be repurposed or expanded to monitor other types of content, stifling free expression and dissent.

Real-World Implications for Users

- False Positives: Automated scanning technologies are not infallible and could mistakenly flag innocent content, leading to unwarranted scrutiny or legal consequences for users.

- Chilling Effect: Knowing that messages could be monitored might discourage people from expressing themselves freely, impacting personal relationships and societal discourse.

- Data Misuse: Collected data could be vulnerable to leaks or misuse, compromising personal and sensitive information.
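The false-positive concern above is largely a matter of base rates, which a short calculation can make concrete. The sketch below is illustrative only: the function name `scan_outcomes` and every number passed to it are hypothetical assumptions, not measurements of any real scanning system.

```python
def scan_outcomes(illegal, legal, sensitivity, false_positive_rate):
    """Return (true_positives, false_positives, precision) for one scanning pass."""
    true_positives = illegal * sensitivity
    false_positives = legal * false_positive_rate
    flagged = true_positives + false_positives
    # Precision: the share of flagged messages that were flagged correctly.
    precision = true_positives / flagged if flagged else 0.0
    return true_positives, false_positives, precision


# Hypothetical inputs, chosen only to show the base-rate effect.
tp, fp, precision = scan_outcomes(
    illegal=1_000,              # messages that really contain illegal material
    legal=999_999_000,          # everything else sent in the same period
    sensitivity=0.90,           # share of illegal messages the scanner catches
    false_positive_rate=0.001,  # share of legal messages flagged by mistake
)
print(f"correct flags: {tp:,.0f}")
print(f"wrong flags:   {fp:,.0f}")
print(f"share of flags that are correct: {precision:.2%}")
```

Under these assumed numbers, about 900 correct flags stand against roughly one million wrong ones, so fewer than one flag in a thousand would point at actual illegal content. Different assumptions change the figures, but not the underlying effect: when the targeted content is rare, even a small error rate produces mostly false alarms.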

Legal and Ethical Concerns

Privacy advocates also highlight potential conflicts with existing laws and ethical standards:

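- Violation of Fundamental Rights: The European Convention on Human Rights (Articles 8 and 10) and other international agreements protect the right to privacy and freedom of expression, and critics argue that blanket scanning of private messages conflicts with these guarantees.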

- Questionable Effectiveness: The ethical justification for such invasive measures is challenged if they do not significantly improve safety or if they disproportionately impact innocent users.

Opposition from Member States and Organizations

Countries like Germany and organizations such as European Digital Rights (EDRi) have expressed opposition to Chat Control. They emphasize the need to protect digital privacy and caution against hasty legislation that could have unintended consequences.

The Technical Debate: Encryption and Backdoors

The discussion around Chat Control inevitably leads to a complex technical debate centered on encryption and the potential introduction of backdoors into secure communication systems. Understanding these concepts is crucial to grasping the full implications of the proposed measures.

What Is End-to-End Encryption (E2EE)?

End-to-end encryption is a method of secure communication that prevents third parties from accessing data while it's transferred from one end system to another. In simpler terms, only the sender and the recipient can read the messages. Even the service providers operating the messaging platforms cannot decrypt the content.

- Security Assurance: E2EE ensures that sensitive information—be it personal messages, financial details, or confidential business communications—remains private.

- Widespread Use: Messaging apps such as Signal, Session, and SimpleX, the Veilid application framework, and email services such as Protonmail and Tutanota (among others) rely on E2EE to protect user data.
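The idea that only the endpoints can read a message can be sketched with a toy key exchange. This is a minimal illustration and not how Signal or any other service named here actually implements E2EE: the parameters `P` and `G`, the helper names, and the hash-based XOR cipher are all assumptions made for the example, and none of it is secure enough for real use.

```python
import hashlib
import secrets

# Toy parameters for illustration only: real messengers use vetted
# protocols and libraries, not a hand-rolled scheme like this one.
P = 2**127 - 1  # a Mersenne prime, far too small for real-world use
G = 3


def keypair():
    """Return a (private, public) pair for the toy Diffie-Hellman exchange."""
    private = secrets.randbelow(P - 3) + 2
    return private, pow(G, private, P)


def shared_key(own_private, peer_public):
    """Derive a 32-byte key that both endpoints can compute independently."""
    secret = pow(peer_public, own_private, P)
    return hashlib.sha256(secret.to_bytes(16, "big")).digest()


def xor_stream(key, data):
    """Encrypt or decrypt by XOR with a SHA-256 counter keystream (toy cipher)."""
    out = bytearray()
    for offset in range(0, len(data), 32):
        block = hashlib.sha256(key + (offset // 32).to_bytes(8, "big")).digest()
        chunk = data[offset:offset + 32]
        out.extend(a ^ b for a, b in zip(chunk, block))
    return bytes(out)


alice_private, alice_public = keypair()
bob_private, bob_public = keypair()

# Only the public keys and the ciphertext pass through the provider's server.
alice_key = shared_key(alice_private, bob_public)
bob_key = shared_key(bob_private, alice_public)

message = b"meet at noon"
relayed = xor_stream(alice_key, message)  # what the provider relays
received = xor_stream(bob_key, relayed)   # what Bob recovers
```

The provider in this sketch handles `alice_public`, `bob_public`, and `relayed`, but never a private key, so it cannot derive the key needed to turn `relayed` back into `message`.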

How Chat Control Affects Encryption

Implementing Chat Control as proposed would require messaging services to scan the content of messages for illegal material. To do this on encrypted platforms, providers might have to:

- Introduce Backdoors: Create a means for third parties (including the service provider or authorities) to access encrypted messages.

- Client-Side Scanning: Install software on users' devices that scans messages before they are encrypted and sent, effectively bypassing E2EE.
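Why client-side scanning is said to bypass E2EE becomes clearer when the order of operations is written out. The sketch below is a simplified assumption, not a description of any proposed or deployed system: `BLOCKLIST`, `toy_encrypt`, and `send` are hypothetical names, exact SHA-256 matching stands in for the perceptual hashing or classifiers usually discussed in this context, and the XOR step is only a placeholder for the messenger's real encryption.

```python
import hashlib

# Hypothetical blocklist of SHA-256 digests distributed to the client.
BLOCKLIST = {hashlib.sha256(b"known-flagged-sample").hexdigest()}


def toy_encrypt(key, plaintext):
    """Placeholder for the real E2EE step (repeating-key XOR, not secure)."""
    return bytes(b ^ key[i % len(key)] for i, b in enumerate(plaintext))


def send(plaintext, key, reports):
    """Scan the plaintext on the device, then encrypt it for transport."""
    digest = hashlib.sha256(plaintext).hexdigest()
    if digest in BLOCKLIST:
        # The match is recorded before encryption ever happens.
        reports.append(digest)
    return toy_encrypt(key, plaintext)


reports = []
ciphertext = send(b"hello", b"k3y", reports)
flagged = send(b"known-flagged-sample", b"k3y", reports)
```

The encryption itself is untouched in this sketch, yet the content has already been inspected, and possibly reported, in plaintext on the sender's device. That is the sense in which critics say the approach sidesteps E2EE rather than breaking it.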

The Risks of Weakening Encryption

1. Compromised Security for All Users

Introducing backdoors or client-side scanning tools can create vulnerabilities:

- Exploitable Gaps: If a backdoor exists, malicious actors might find and exploit it, leading to data breaches.

- Universal Impact: Weakening encryption doesn't just affect targeted individuals; it potentially exposes all users to increased risk.

2. Undermining Trust in Digital Services

- User Confidence: Knowing that private communications could be accessed might deter people from using digital services or push them toward unregulated platforms.

- Business Implications: Companies relying on secure communications might face increased risks, affecting economic activities.

3. Ineffectiveness Against Skilled Adversaries

- Alternative Methods: Criminals might shift to other encrypted channels or develop new ways to avoid detection.

- False Sense of Security: Weakening encryption could give the impression of increased safety while adversaries adapt and continue their activities undetected.

Signal’s Response and Stance

Signal, a leading encrypted messaging service, has been vocal in its opposition to the EU's proposals:

- Refusal to Weaken Encryption: Signal's president, Meredith Whittaker, has stated that Signal would rather cease operations in the EU than compromise its encryption standards.

- Advocacy for Privacy: Signal emphasizes that strong encryption is essential for protecting human rights and freedoms in the digital age.

Understanding Backdoors

A "backdoor" in encryption is an intentional weakness inserted into a system to allow authorized access to encrypted data. While intended for legitimate use by authorities, backdoors pose several problems:

- Security Vulnerabilities: They can be discovered and exploited by unauthorized parties, including hackers and foreign governments.

- Ethical Concerns: The existence of backdoors raises questions about consent and the extent to which governments should be able to access private communications.

The Slippery Slope Argument

Privacy advocates warn that introducing backdoors or mandatory scanning sets a precedent:

- Expanded Surveillance: Once in place, these measures could be extended to monitor other types of content beyond the original scope.

- Erosion of Rights: Gradual acceptance of surveillance can lead to a significant reduction in personal freedoms over time.

Potential Technological Alternatives

Some suggest that it's possible to fight illegal content without undermining encryption:

- Metadata Analysis: Focusing on patterns of communication rather than content.

- Enhanced Reporting Mechanisms: Encouraging users to report illegal content voluntarily.

- Investing in Law Enforcement Capabilities: Strengthening traditional investigative methods without compromising digital security.
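As a rough illustration of the metadata approach listed above, the following sketch looks only at who contacted whom and never at message content. The record layout `(sender, recipient, timestamp)`, the function names, and the threshold are hypothetical choices for the example, not a method taken from any proposal.

```python
from collections import defaultdict


def distinct_contacts(records):
    """Map each sender to the set of recipients it has messaged."""
    contacts = defaultdict(set)
    for sender, recipient, _timestamp in records:
        contacts[sender].add(recipient)
    return contacts


def unusual_senders(records, threshold):
    """Return senders whose number of distinct recipients exceeds threshold."""
    return sorted(
        sender
        for sender, recipients in distinct_contacts(records).items()
        if len(recipients) > threshold
    )


# Hypothetical records: (sender, recipient, timestamp); no message content.
records = [
    ("a", "b", 1),
    ("a", "c", 2),
    ("a", "d", 3),
    ("x", "y", 4),
]
suspicious = unusual_senders(records, threshold=2)
```

Here only account `a` exceeds the threshold. Pattern analysis of this kind leaves encryption intact, although metadata is itself sensitive and raises privacy questions of its own.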

The technical community largely agrees that weakening encryption is not the solution:

- Consensus on Security: Strong encryption is essential for the safety and privacy of all internet users.

- Call for Dialogue: Technologists and privacy experts advocate for collaborative approaches that address security concerns without sacrificing fundamental rights.

Global Reactions and the Debate in Europe

The proposal for Chat Control has ignited a heated debate across Europe and beyond, with various stakeholders weighing in on the potential implications for privacy, security, and fundamental rights. The reactions are mixed, reflecting differing national perspectives, political priorities, and societal values.

Support for Chat Control

Some EU member states and officials support the initiative, emphasizing the need for robust measures to combat online crime and protect citizens, especially children. They argue that:

- Enhanced Security: Mandatory scanning can help law enforcement agencies detect and prevent serious crimes.

- Responsibility of Service Providers: Companies offering communication services should play an active role in preventing their platforms from being used for illegal activities.

- Public Safety Priorities: The protection of vulnerable populations justifies the implementation of such measures, even if it means compromising some aspects of privacy.

Opposition within the EU

Several countries and organizations have voiced strong opposition to Chat Control, citing concerns over privacy rights and the potential for government overreach.

Germany

- Stance: Germany has been one of the most vocal opponents of the proposed measures.

- Reasons:

  - Constitutional Concerns: The German government argues that Chat Control could violate constitutional protections of privacy and confidentiality of communications.

  - Security Risks: Weakening encryption is seen as a threat to cybersecurity.

  - Legal Challenges: Potential conflicts with national laws protecting personal data and communication secrecy.

Netherlands

- Recent Developments: The Dutch government decided against supporting Chat Control, emphasizing the importance of encryption for security and privacy.

- Arguments:

  - Effectiveness Doubts: Skepticism about the actual effectiveness of the measures in combating crime.

  - Negative Impact on Privacy: Concerns about mass surveillance and the infringement of citizens' rights.

Table reference: Patrick Breyer - Chat Control in 23 September 2024

Privacy Advocacy Groups

European Digital Rights (EDRi)

- Role: A network of civil and human rights organizations working to defend rights and freedoms in the digital environment.

- Position:

  - Strong Opposition: EDRi argues that Chat Control is incompatible with fundamental rights.

  - Awareness Campaigns: Engaging in public campaigns to inform citizens about the potential risks.

  - Policy Engagement: Lobbying policymakers to consider alternative approaches that respect privacy.

Politicians and Activists

Patrick Breyer

- Background: A former Member of the European Parliament (MEP) from Germany who represented the Pirate Party from 2019 to 2024.

- Actions:

  - Advocacy: Actively campaigning against Chat Control through speeches, articles, and legislative efforts.

  - Public Outreach: Using social media and public events to raise awareness.

  - Legal Expertise: Highlighting the legal inconsistencies and potential violations of EU law.

Global Reactions

International Organizations

- Human Rights Watch and Amnesty International: These organizations have expressed concerns about the implications for human rights, urging the EU to reconsider.

Technology Companies

- Global Tech Firms: Companies like Apple and Microsoft are monitoring the situation, as EU regulations could affect their operations and user trust.

- Industry Associations: Groups representing tech companies have issued statements highlighting the risks to innovation and competitiveness.

The Broader Debate

The controversy over Chat Control reflects a broader struggle between security interests and privacy rights in the digital age. Key points in the debate include:

- Legal Precedents: How the EU's decision might influence laws and regulations in other countries.

- Digital Sovereignty: The desire of nations to control digital spaces within their borders.

- Civil Liberties: The importance of protecting freedoms in the face of technological advancements.

Public Opinion

- Diverse Views: Surveys and public forums show a range of opinions, with some citizens prioritizing security and others valuing privacy above all.

- Awareness Levels: Many people are still unaware of the potential changes, highlighting the need for public education on the issue.

The EU is at a crossroads, facing the challenge of addressing legitimate security concerns without undermining the fundamental rights that are central to its values. The outcome of this debate will have significant implications for the future of digital privacy and the balance between security and freedom in society.

Possible Consequences for Messaging Services

The implementation of Chat Control could have significant implications for messaging services operating within the European Union. Both large platforms and smaller providers might need to adapt their technologies and policies to comply with the new regulations, potentially altering the landscape of digital communication.

Impact on Encrypted Messaging Services

Signal and Similar Platforms

-