-

The following script try, using [nak](https://github.com/fiatjaf/nak), to find out the last ten people who have followed a `target_pubkey`, sorted by the most recent. It's possibile to shorten `search_timerange` to speed up the search.

```

#!/usr/bin/env fish

# Target pubkey we're looking for in the tags

set target_pubkey "6e468422dfb74a5738702a8823b9b28168abab8655faacb6853cd0ee15deee93"

set current_time (date +%s)

set search_timerange (math $current_time - 600) # 24 hours = 86400 seconds

set pubkeys (nak req --kind 3 -s $search_timerange wss://relay.damus.io/ wss://nos.lol/ 2>/dev/null | \

jq -r --arg target "$target_pubkey" '

select(. != null and type == "object" and has("tags")) |

select(.tags[] | select(.[0] == "p" and .[1] == $target)) |

.pubkey

' | sort -u)

if test -z "$pubkeys"

exit 1

end

set all_events ""

set extended_search_timerange (math $current_time - 31536000) # One year

for pubkey in $pubkeys

echo "Checking $pubkey"

set events (nak req --author $pubkey -l 5 -k 3 -s $extended_search_timerange wss://relay.damus.io wss://nos.lol 2>/dev/null | \

jq -c --arg target "$target_pubkey" '

select(. != null and type == "object" and has("tags")) |

select(.tags[][] == $target)

' 2>/dev/null)

set count (echo "$events" | jq -s 'length')

if test "$count" -eq 1

set all_events $all_events $events

end

end

if test -n "$all_events"

echo -e "Last people following $target_pubkey:"

echo -e ""

set sorted_events (printf "%s\n" $all_events | jq -r -s '

unique_by(.id) |

sort_by(-.created_at) |

.[] | @json

')

for event in $sorted_events

set npub (echo $event | jq -r '.pubkey' | nak encode npub)

set created_at (echo $event | jq -r '.created_at')

if test (uname) = "Darwin"

set follow_date (date -r "$created_at" "+%Y-%m-%d %H:%M")

else

set follow_date (date -d @"$created_at" "+%Y-%m-%d %H:%M")

end

echo "$follow_date - $npub"

end

end

```

-

This article hopes to complement the article by Lyn Alden on YouTube: https://www.youtube.com/watch?v=jk_HWmmwiAs

## The reason why we have broken money

Before the invention of key technologies such as the printing press and electronic communications, even such as those as early as morse code transmitters, gold had won the competition for best medium of money around the world.

In fact, it was not just gold by itself that became money, rulers and world leaders developed coins in order to help the economy grow. Gold nuggets were not as easy to transact with as coins with specific imprints and denominated sizes.

However, these modern technologies created massive efficiencies that allowed us to communicate and perform services more efficiently and much faster, yet the medium of money could not benefit from these advancements. Gold was heavy, slow and expensive to move globally, even though requesting and performing services globally did not have this limitation anymore.

Banks took initiative and created derivatives of gold: paper and electronic money; these new currencies allowed the economy to continue to grow and evolve, but it was not without its dark side. Today, no currency is denominated in gold at all, money is backed by nothing and its inherent value, the paper it is printed on, is worthless too.

Banks and governments eventually transitioned from a money derivative to a system of debt that could be co-opted and controlled for political and personal reasons. Our money today is broken and is the cause of more expensive, poorer quality goods in the economy, a larger and ever growing wealth gap, and many of the follow-on problems that have come with it.

## Bitcoin overcomes the "transfer of hard money" problem

Just like gold coins were created by man, Bitcoin too is a technology created by man. Bitcoin, however is a much more profound invention, possibly more of a discovery than an invention in fact. Bitcoin has proven to be unbreakable, incorruptible and has upheld its ability to keep its units scarce, inalienable and counterfeit proof through the nature of its own design.

Since Bitcoin is a digital technology, it can be transferred across international borders almost as quickly as information itself. It therefore severely reduces the need for a derivative to be used to represent money to facilitate digital trade. This means that as the currency we use today continues to fare poorly for many people, bitcoin will continue to stand out as hard money, that just so happens to work as well, functionally, along side it.

Bitcoin will also always be available to anyone who wishes to earn it directly; even China is unable to restrict its citizens from accessing it. The dollar has traditionally become the currency for people who discover that their local currency is unsustainable. Even when the dollar has become illegal to use, it is simply used privately and unofficially. However, because bitcoin does not require you to trade it at a bank in order to use it across borders and across the web, Bitcoin will continue to be a viable escape hatch until we one day hit some critical mass where the world has simply adopted Bitcoin globally and everyone else must adopt it to survive.

Bitcoin has not yet proven that it can support the world at scale. However it can only be tested through real adoption, and just as gold coins were developed to help gold scale, tools will be developed to help overcome problems as they arise; ideally without the need for another derivative, but if necessary, hopefully with one that is more neutral and less corruptible than the derivatives used to represent gold.

## Bitcoin blurs the line between commodity and technology

Bitcoin is a technology, it is a tool that requires human involvement to function, however it surprisingly does not allow for any concentration of power. Anyone can help to facilitate Bitcoin's operations, but no one can take control of its behaviour, its reach, or its prioritisation, as it operates autonomously based on a pre-determined, neutral set of rules.

At the same time, its built-in incentive mechanism ensures that people do not have to operate bitcoin out of the good of their heart. Even though the system cannot be co-opted holistically, It will not stop operating while there are people motivated to trade their time and resources to keep it running and earn from others' transaction fees. Although it requires humans to operate it, it remains both neutral and sustainable.

Never before have we developed or discovered a technology that could not be co-opted and used by one person or faction against another. Due to this nature, Bitcoin's units are often described as a commodity; they cannot be usurped or virtually cloned, and they cannot be affected by political biases.

## The dangers of derivatives

A derivative is something created, designed or developed to represent another thing in order to solve a particular complication or problem. For example, paper and electronic money was once a derivative of gold.

In the case of Bitcoin, if you cannot link your units of bitcoin to an "address" that you personally hold a cryptographically secure key to, then you very likely have a derivative of bitcoin, not bitcoin itself. If you buy bitcoin on an online exchange and do not withdraw the bitcoin to a wallet that you control, then you legally own an electronic derivative of bitcoin.

Bitcoin is a new technology. It will have a learning curve and it will take time for humanity to learn how to comprehend, authenticate and take control of bitcoin collectively. Having said that, many people all over the world are already using and relying on Bitcoin natively. For many, it will require for people to find the need or a desire for a neutral money like bitcoin, and to have been burned by derivatives of it, before they start to understand the difference between the two. Eventually, it will become an essential part of what we regard as common sense.

## Learn for yourself

If you wish to learn more about how to handle bitcoin and avoid derivatives, you can start by searching online for tutorials about "Bitcoin self custody".

There are many options available, some more practical for you, and some more practical for others. Don't spend too much time trying to find the perfect solution; practice and learn. You may make mistakes along the way, so be careful not to experiment with large amounts of your bitcoin as you explore new ideas and technologies along the way. This is similar to learning anything, like riding a bicycle; you are sure to fall a few times, scuff the frame, so don't buy a high performance racing bike while you're still learning to balance.

-

# Instructions

1. Place 2 medium-sized, boiled potatoes and a handful of sliced leeks in a pot.

2. Fill the pot with water or vegetable broth, to cover the potatoes twice over.

3. Add a splash of white wine, if you like, and some bouillon powder, if you went with water instead of broth.

4. Bring the soup to a boil and then simmer for 15 minutes.

5. Puree the soup, in the pot, with a hand mixer. It shouldn't be completely smooth, when you're done, but rather have small bits and pieces of the veggies floating around.

6. Bring the soup to a boil, again, and stir in one container (200-250 mL) of heavy cream.

7. Thicken the soup, as needed, and then simmer for 5 more minutes.

8. Garnish with croutons and veggies (here I used sliced green onions and radishes) and serve.

Guten Appetit!

-

New Year’s resolutions often feel boring and repetitive. Most revolve around getting in shape, eating healthier, or giving up alcohol. While the idea is interesting—using the start of a new calendar year as a catalyst for change—it also seems unnecessary. Why wait for a specific date to make a change? If you want to improve something in your life, you can just do it. You don’t need an excuse.

That’s why I’ve never been drawn to the idea of making a list of resolutions. If I wanted a change, I’d make it happen, without worrying about the calendar. At least, that’s how I felt until now—when, for once, the timing actually gave me a real reason to embrace the idea of New Year’s resolutions.

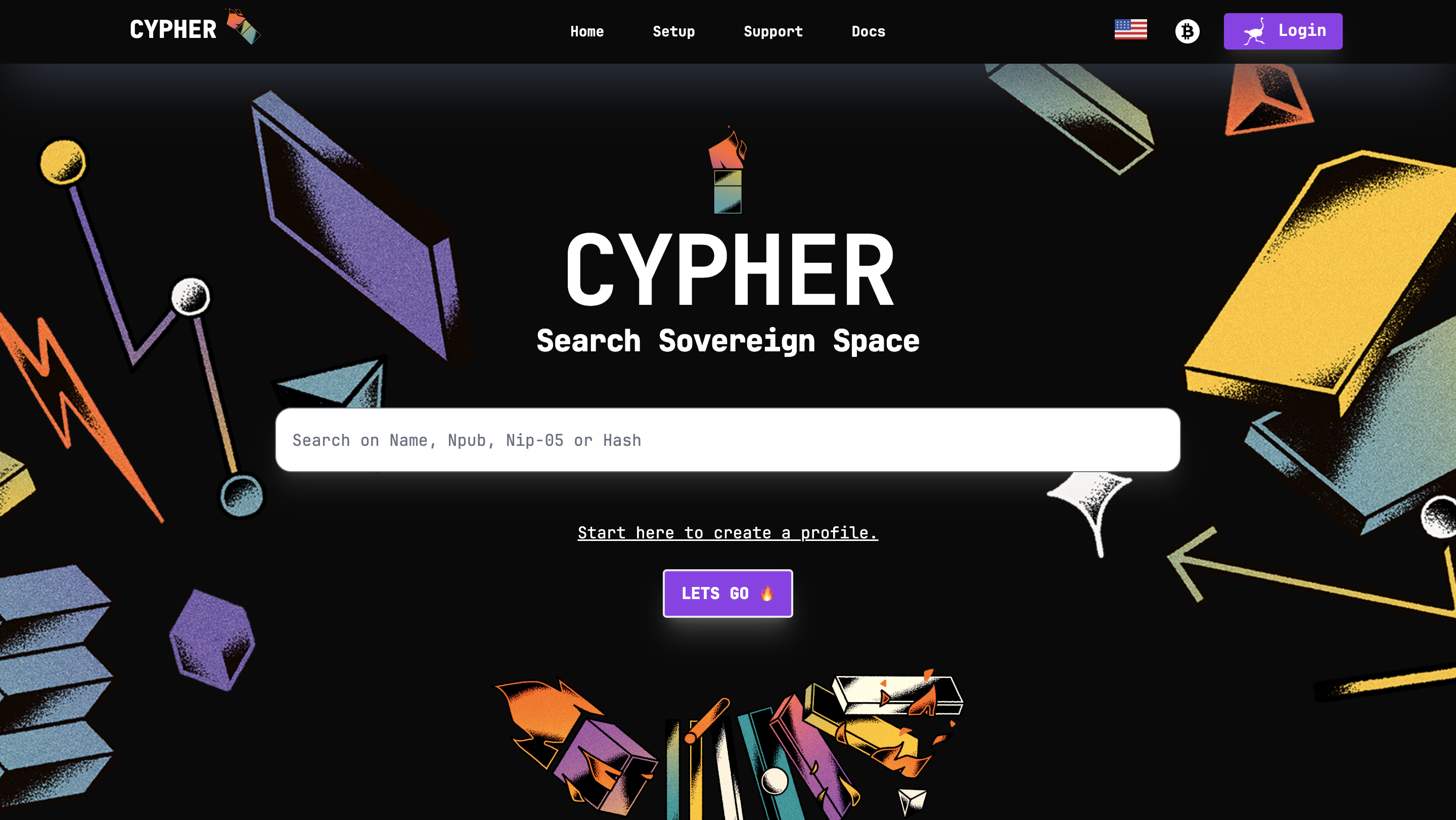

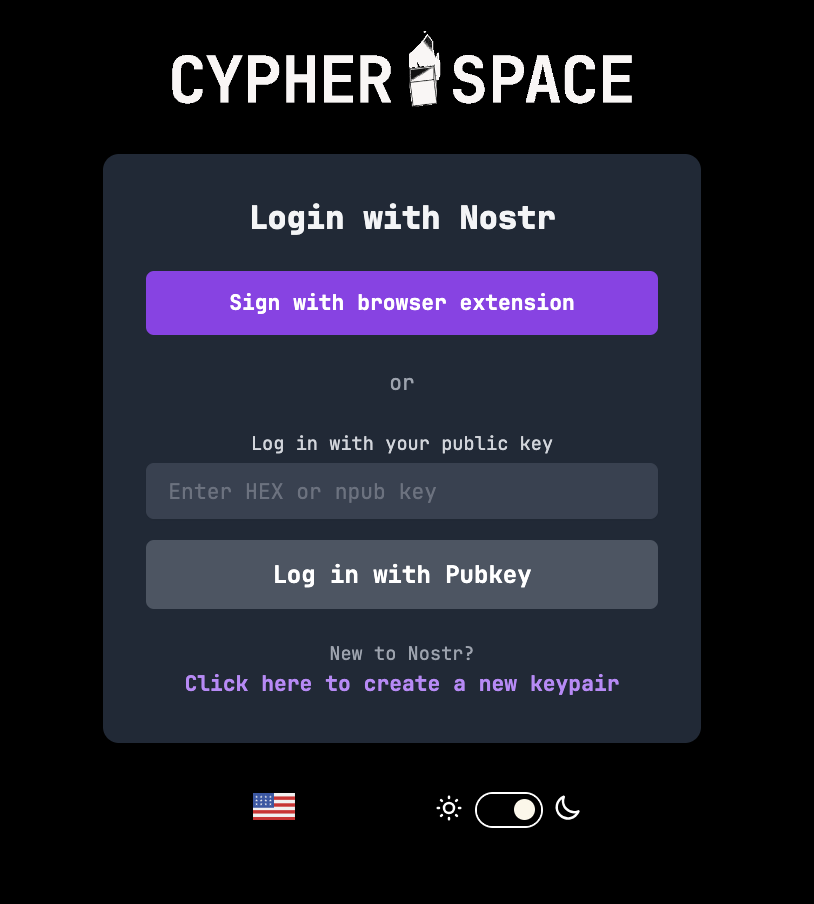

Enter [Olas](https://olas.app).

If you're a visual creator, you've likely experienced the relentless grind of building a following on platforms like Instagram—endless doomscrolling, ever-changing algorithms, and the constant pressure to stay relevant. But what if there was a better way? Olas is a Nostr-powered alternative to Instagram that prioritizes community, creativity, and value-for-value exchanges. It's a game changer.

Instagram’s failings are well-known. Its algorithm often dictates whose content gets seen, leaving creators frustrated and powerless. Monetization hurdles further alienate creators who are forced to meet arbitrary follower thresholds before earning anything. Additionally, the platform’s design fosters endless comparisons and exposure to negativity, which can take a significant toll on mental health.

Instagram’s algorithms are notorious for keeping users hooked, often at the cost of their mental health. I've spoken about this extensively, most recently at Nostr Valley, explaining how legacy social media is bad for you. You might find yourself scrolling through content that leaves you feeling anxious or drained. Olas takes a fresh approach, replacing "doomscrolling" with "bloomscrolling." This is a common theme across the Nostr ecosystem. The lack of addictive rage algorithms allows the focus to shift to uplifting, positive content that inspires rather than exhausts.

Monetization is another area where Olas will set itself apart. On Instagram, creators face arbitrary barriers to earning—needing thousands of followers and adhering to restrictive platform rules. Olas eliminates these hurdles by leveraging the Nostr protocol, enabling creators to earn directly through value-for-value exchanges. Fans can support their favorite artists instantly, with no delays or approvals required. The plan is to enable a brand new Olas account that can get paid instantly, with zero followers - that's wild.

Olas addresses these issues head-on. Operating on the open Nostr protocol, it removes centralized control over one's content’s reach or one's ability to monetize. With transparent, configurable algorithms, and a community that thrives on mutual support, Olas creates an environment where creators can grow and succeed without unnecessary barriers.

Join me on my New Year's resolution. Join me on Olas and take part in the [#Olas365](https://olas.app/search/olas365) challenge! It’s a simple yet exciting way to share your content. The challenge is straightforward: post at least one photo per day on Olas (though you’re welcome to share more!).

[Download on iOS](https://testflight.apple.com/join/2FMVX2yM).

[Download on Android](https://github.com/pablof7z/olas/releases/) or download via Zapstore.

Let's make waves together.

-

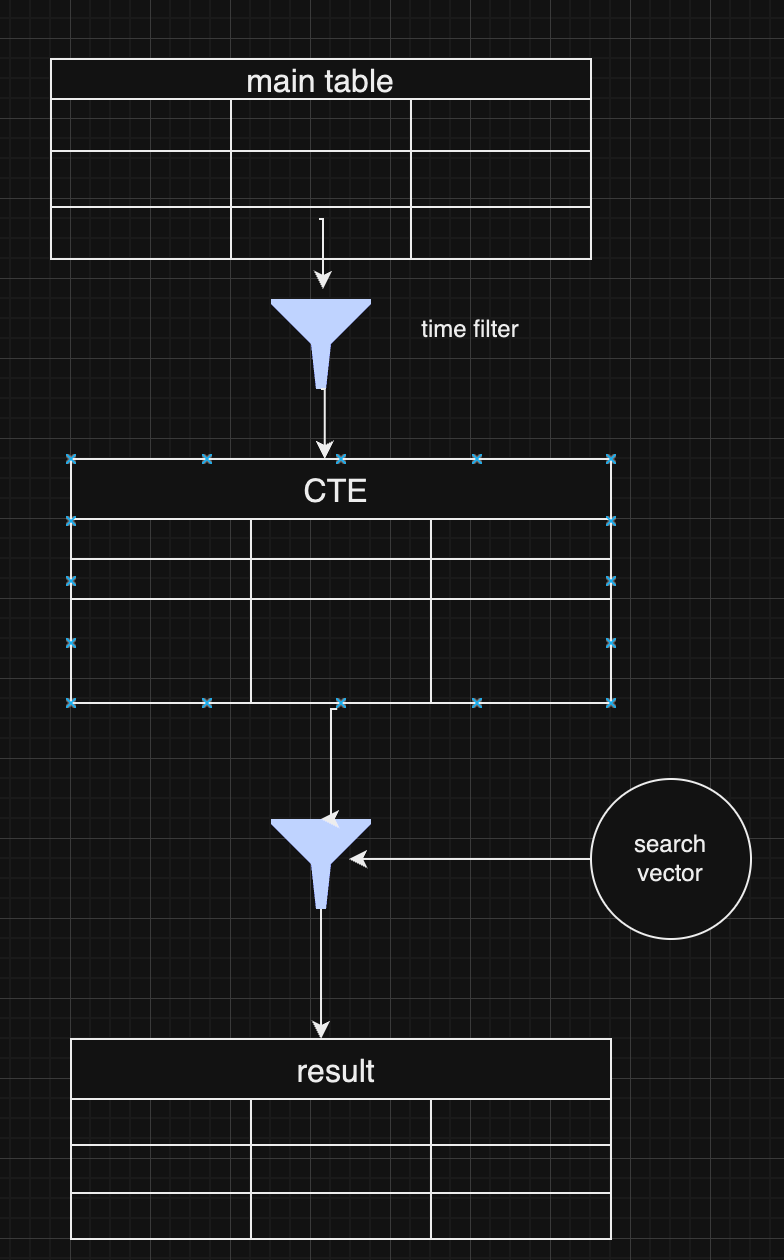

## The Rise of Graph RAGs and the Quest for Data Quality

As we enter a new year, it’s impossible to ignore the boom of retrieval-augmented generation (RAG) systems, particularly those leveraging graph-based approaches. The previous year saw a surge in advancements and discussions about Graph RAGs, driven by their potential to enhance large language models (LLMs), reduce hallucinations, and deliver more reliable outputs. Let’s dive into the trends, challenges, and strategies for making the most of Graph RAGs in artificial intelligence.

## Booming Interest in Graph RAGs

Graph RAGs have dominated the conversation in AI circles. With new research papers and innovations emerging weekly, it’s clear that this approach is reshaping the landscape. These systems, especially those developed by tech giants like Microsoft, demonstrate how graphs can:

* **Enhance LLM Outputs:** By grounding responses in structured knowledge, graphs significantly reduce hallucinations.

* **Support Complex Queries:** Graphs excel at managing linked and connected data, making them ideal for intricate problem-solving.

Conferences on linked and connected data have increasingly focused on Graph RAGs, underscoring their central role in modern AI systems. However, the excitement around this technology has brought critical questions to the forefront: How do we ensure the quality of the graphs we’re building, and are they genuinely aligned with our needs?

## Data Quality: The Foundation of Effective Graphs

A high-quality graph is the backbone of any successful RAG system. Constructing these graphs from unstructured data requires attention to detail and rigorous processes. Here’s why:

* **Richness of Entities:** Effective retrieval depends on graphs populated with rich, detailed entities.

* **Freedom from Hallucinations:** Poorly constructed graphs amplify inaccuracies rather than mitigating them.

Without robust data quality, even the most sophisticated Graph RAGs become ineffective. As a result, the focus must shift to refining the graph construction process. Improving data strategy and ensuring meticulous data preparation is essential to unlock the full potential of Graph RAGs.

## Hybrid Graph RAGs and Variations

While standard Graph RAGs are already transformative, hybrid models offer additional flexibility and power. Hybrid RAGs combine structured graph data with other retrieval mechanisms, creating systems that:

* Handle diverse data sources with ease.

* Offer improved adaptability to complex queries.

Exploring these variations can open new avenues for AI systems, particularly in domains requiring structured and unstructured data processing.

## Ontology: The Key to Graph Construction Quality

Ontology — defining how concepts relate within a knowledge domain — is critical for building effective graphs. While this might sound abstract, it’s a well-established field blending philosophy, engineering, and art. Ontology engineering provides the framework for:

* **Defining Relationships:** Clarifying how concepts connect within a domain.

* **Validating Graph Structures:** Ensuring constructed graphs are logically sound and align with domain-specific realities.

Traditionally, ontologists — experts in this discipline — have been integral to large enterprises and research teams. However, not every team has access to dedicated ontologists, leading to a significant challenge: How can teams without such expertise ensure the quality of their graphs?

## How to Build Ontology Expertise in a Startup Team

For startups and smaller teams, developing ontology expertise may seem daunting, but it is achievable with the right approach:

1. **Assign a Knowledge Champion:** Identify a team member with a strong analytical mindset and give them time and resources to learn ontology engineering.

2. **Provide Training:** Invest in courses, workshops, or certifications in knowledge graph and ontology creation.

3. **Leverage Partnerships:** Collaborate with academic institutions, domain experts, or consultants to build initial frameworks.

4. **Utilize Tools:** Introduce ontology development tools like Protégé, OWL, or SHACL to simplify the creation and validation process.

5. **Iterate with Feedback:** Continuously refine ontologies through collaboration with domain experts and iterative testing.

So, it is not always affordable for a startup to have a dedicated oncologist or knowledge engineer in a team, but you could involve consulters or build barefoot experts.

You could read about barefoot experts in my article :

Even startups can achieve robust and domain-specific ontology frameworks by fostering in-house expertise.

## How to Find or Create Ontologies

For teams venturing into Graph RAGs, several strategies can help address the ontology gap:

1. **Leverage Existing Ontologies:** Many industries and domains already have open ontologies. For instance:

* **Public Knowledge Graphs:** Resources like Wikipedia’s graph offer a wealth of structured knowledge.

* **Industry Standards:** Enterprises such as Siemens have invested in creating and sharing ontologies specific to their fields.

* **Business Framework Ontology (BFO):** A valuable resource for enterprises looking to define business processes and structures.

1. **Build In-House Expertise:** If budgets allow, consider hiring knowledge engineers or providing team members with the resources and time to develop expertise in ontology creation.

2. **Utilize LLMs for Ontology Construction:** Interestingly, LLMs themselves can act as a starting point for ontology development:

* **Prompt-Based Extraction:** LLMs can generate draft ontologies by leveraging their extensive training on graph data.

* **Domain Expert Refinement:** Combine LLM-generated structures with insights from domain experts to create tailored ontologies.

## Parallel Ontology and Graph Extraction

An emerging approach involves extracting ontologies and graphs in parallel. While this can streamline the process, it presents challenges such as:

* **Detecting Hallucinations:** Differentiating between genuine insights and AI-generated inaccuracies.

* **Ensuring Completeness:** Ensuring no critical concepts are overlooked during extraction.

Teams must carefully validate outputs to ensure reliability and accuracy when employing this parallel method.

## LLMs as Ontologists

While traditionally dependent on human expertise, ontology creation is increasingly supported by LLMs. These models, trained on vast amounts of data, possess inherent knowledge of many open ontologies and taxonomies. Teams can use LLMs to:

* **Generate Skeleton Ontologies:** Prompt LLMs with domain-specific information to draft initial ontology structures.

* **Validate and Refine Ontologies:** Collaborate with domain experts to refine these drafts, ensuring accuracy and relevance.

However, for validation and graph construction, formal tools such as OWL, SHACL, and RDF should be prioritized over LLMs to minimize hallucinations and ensure robust outcomes.

## Final Thoughts: Unlocking the Power of Graph RAGs

The rise of Graph RAGs underscores a simple but crucial correlation: improving graph construction and data quality directly enhances retrieval systems. To truly harness this power, teams must invest in understanding ontologies, building quality graphs, and leveraging both human expertise and advanced AI tools.

As we move forward, the interplay between Graph RAGs and ontology engineering will continue to shape the future of AI. Whether through adopting existing frameworks or exploring innovative uses of LLMs, the path to success lies in a deep commitment to data quality and domain understanding.

Have you explored these technologies in your work? Share your experiences and insights — and stay tuned for more discussions on ontology extraction and its role in AI advancements. Cheers to a year of innovation!

-

## The Four-Layer Framework

### Layer 1: Zoom Out

Start by looking at the big picture. What’s the subject about, and why does it matter? Focus on the overarching ideas and how they fit together. Think of this as the 30,000-foot view—it’s about understanding the "why" and "how" before diving into the "what."

**Example**: If you’re learning programming, start by understanding that it’s about giving logical instructions to computers to solve problems.

- **Tip**: Keep it simple. Summarize the subject in one or two sentences and avoid getting bogged down in specifics at this stage.

_Once you have the big picture in mind, it’s time to start breaking it down._

---

### Layer 2: Categorize and Connect

Now it’s time to break the subject into categories—like creating branches on a tree. This helps your brain organize information logically and see connections between ideas.

**Example**: Studying biology? Group concepts into categories like cells, genetics, and ecosystems.

- **Tip**: Use headings or labels to group similar ideas. Jot these down in a list or simple diagram to keep track.

_With your categories in place, you’re ready to dive into the details that bring them to life._

---

### Layer 3: Master the Details

Once you’ve mapped out the main categories, you’re ready to dive deeper. This is where you learn the nuts and bolts—like formulas, specific techniques, or key terminology. These details make the subject practical and actionable.

**Example**: In programming, this might mean learning the syntax for loops, conditionals, or functions in your chosen language.

- **Tip**: Focus on details that clarify the categories from Layer 2. Skip anything that doesn’t add to your understanding.

_Now that you’ve mastered the essentials, you can expand your knowledge to include extra material._

---

### Layer 4: Expand Your Horizons

Finally, move on to the extra material—less critical facts, trivia, or edge cases. While these aren’t essential to mastering the subject, they can be useful in specialized discussions or exams.

**Example**: Learn about rare programming quirks or historical trivia about a language’s development.

- **Tip**: Spend minimal time here unless it’s necessary for your goals. It’s okay to skim if you’re short on time.

---

## Pro Tips for Better Learning

### 1. Use Active Recall and Spaced Repetition

Test yourself without looking at notes. Review what you’ve learned at increasing intervals—like after a day, a week, and a month. This strengthens memory by forcing your brain to actively retrieve information.

### 2. Map It Out

Create visual aids like [diagrams or concept maps](https://excalidraw.com/) to clarify relationships between ideas. These are particularly helpful for organizing categories in Layer 2.

### 3. Teach What You Learn

Explain the subject to someone else as if they’re hearing it for the first time. Teaching **exposes any gaps** in your understanding and **helps reinforce** the material.

### 4. Engage with LLMs and Discuss Concepts

Take advantage of tools like ChatGPT or similar large language models to **explore your topic** in greater depth. Use these tools to:

- Ask specific questions to clarify confusing points.

- Engage in discussions to simulate real-world applications of the subject.

- Generate examples or analogies that deepen your understanding.

**Tip**: Use LLMs as a study partner, but don’t rely solely on them. Combine these insights with your own critical thinking to develop a well-rounded perspective.

---

## Get Started

Ready to try the Four-Layer Method? Take 15 minutes today to map out the big picture of a topic you’re curious about—what’s it all about, and why does it matter? By building your understanding step by step, you’ll master the subject with less stress and more confidence.

-

Here are my predictions for Nostr in 2025:

**Decentralization:** The outbox and inbox communication models, sometimes referred to as the Gossip model, will become the standard across the ecosystem. By the end of 2025, all major clients will support these models, providing seamless communication and enhanced decentralization. Clients that do not adopt outbox/inbox by then will be regarded as outdated or legacy systems.

**Privacy Standards:** Major clients such as Damus and Primal will move away from NIP-04 DMs, adopting more secure protocol possibilities like NIP-17 or NIP-104. These upgrades will ensure enhanced encryption and metadata protection. Additionally, NIP-104 MLS tools will drive the development of new clients and features, providing users with unprecedented control over the privacy of their communications.

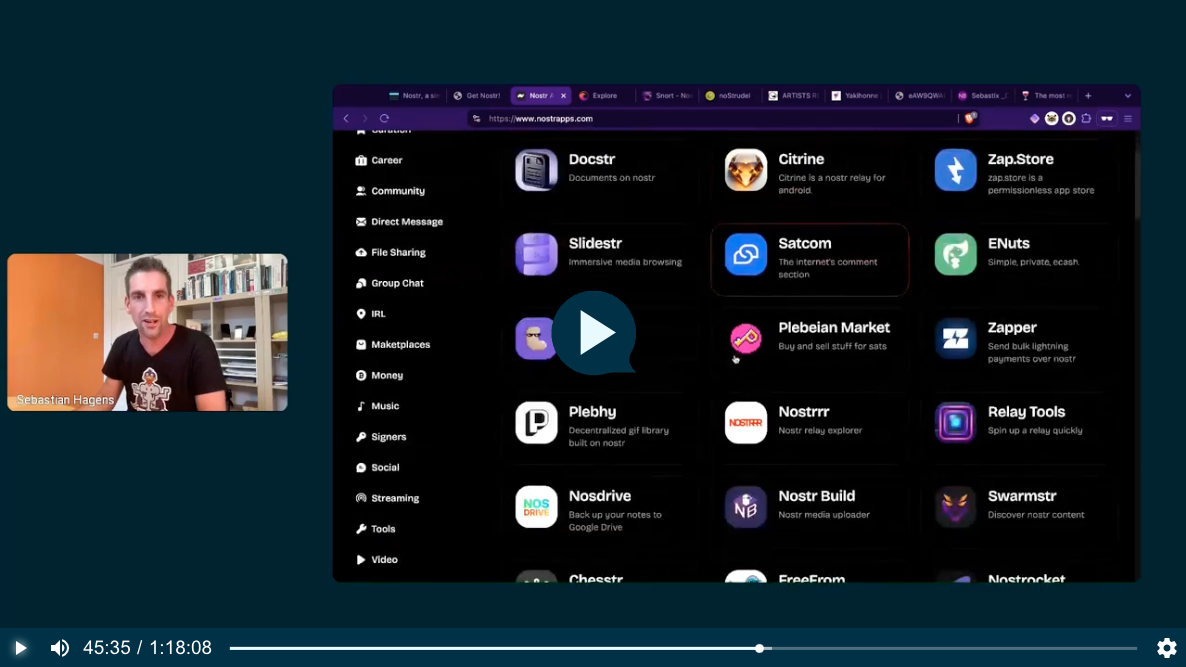

**Interoperability:** Nostr's ecosystem will become even more interconnected. Platforms like the Olas image-sharing service will expand into prominent clients such as Primal, Damus, Coracle, and Snort, alongside existing integrations with Amethyst, Nostur, and Nostrudel. Similarly, audio and video tools like Nostr Nests and Zap.stream will gain seamless integration into major clients, enabling easy participation in live events across the ecosystem.

**Adoption and Migration:** Inspired by early pioneers like Fountain and Orange Pill App, more platforms will adopt Nostr for authentication, login, and social systems. In 2025, a significant migration from a high-profile application platform with hundreds of thousands of users will transpire, doubling Nostr’s daily activity and establishing it as a cornerstone of decentralized technologies.

-

We didn't hear them land on earth, nor did we see them. The spores were not visible to the naked eye. Like dust particles, they softly fell, unhindered, through our atmosphere, covering the earth. It took us a while to realize that something extraordinary was happening on our planet. In most places, the mushrooms didn't grow at all. The conditions weren't right. In some places—mostly rocky places—they grew large enough to be noticeable. People all over the world posted pictures online. "White eggs," they called them. It took a bit until botanists and mycologists took note. Most didn't realize that we were dealing with a species unknown to us.

We aren't sure who sent them. We aren't even sure if there is a "who" behind the spores. But once the first portals opened up, we learned that these mushrooms aren't just a quirk of biology. The portals were small at first—minuscule, even. Like a pinhole camera, we were able to glimpse through, but we couldn't make out much. We were only able to see colors and textures if the conditions were right. We weren't sure what we were looking at.

We still don't understand why some mushrooms open up, and some don't. Most don't. What we do know is that they like colder climates and high elevations. What we also know is that the portals don't stay open for long. Like all mushrooms, the flush only lasts for a week or two. When a portal opens, it looks like the mushroom is eating a hole into itself at first. But the hole grows, and what starts as a shimmer behind a grey film turns into a clear picture as the egg ripens. When conditions are right, portals will remain stable for up to three days. Once the fruit withers, the portal closes, and the mushroom decays.

The eggs grew bigger year over year. And with it, the portals. Soon enough, the portals were big enough to stick your finger through. And that's when things started to get weird...

-

### **English**

#### Introducing YakiHonne: Write Without Limits

YakiHonne is the ultimate text editor designed to help you express yourself creatively, no matter the language.

**Features you'll love:**

- 🌟 **Rich Formatting**: Add headings, bold, italics, and more.

- 🌏 **Multilingual Support**: Seamlessly write in English, Chinese, Arabic, and Japanese.

- 🔗 **Interactive Links**: [Learn more about YakiHonne](https://yakihonne.com).

**Benefits:**

1. Easy to use.

2. Enhance readability with customizable styles.

3. Supports various complex formats including LateX.

> "YakiHonne is a game-changer for content creators."

-

Today I learned how to install [NVapi](https://github.com/sammcj/NVApi) to monitor my GPUs in Home Assistant.

**NVApi** is a lightweight API designed for monitoring NVIDIA GPU utilization and enabling automated power management. It provides real-time GPU metrics, supports integration with tools like Home Assistant, and offers flexible power management and PCIe link speed management based on workload and thermal conditions.

- **GPU Utilization Monitoring**: Utilization, memory usage, temperature, fan speed, and power consumption.

- **Automated Power Limiting**: Adjusts power limits dynamically based on temperature thresholds and total power caps, configurable per GPU or globally.

- **Cross-GPU Coordination**: Total power budget applies across multiple GPUs in the same system.

- **PCIe Link Speed Management**: Controls minimum and maximum PCIe link speeds with idle thresholds for power optimization.

- **Home Assistant Integration**: Uses the built-in RESTful platform and template sensors.

## Getting the Data

```

sudo apt install golang-go

git clone https://github.com/sammcj/NVApi.git

cd NVapi

go run main.go -port 9999 -rate 1

curl http://localhost:9999/gpu

```

Response for a single GPU:

```

[

{

"index": 0,

"name": "NVIDIA GeForce RTX 4090",

"gpu_utilisation": 0,

"memory_utilisation": 0,

"power_watts": 16,

"power_limit_watts": 450,

"memory_total_gb": 23.99,

"memory_used_gb": 0.46,

"memory_free_gb": 23.52,

"memory_usage_percent": 2,

"temperature": 38,

"processes": [],

"pcie_link_state": "not managed"

}

]

```

Response for multiple GPUs:

```

[

{

"index": 0,

"name": "NVIDIA GeForce RTX 3090",

"gpu_utilisation": 0,

"memory_utilisation": 0,

"power_watts": 14,

"power_limit_watts": 350,

"memory_total_gb": 24,

"memory_used_gb": 0.43,

"memory_free_gb": 23.57,

"memory_usage_percent": 2,

"temperature": 36,

"processes": [],

"pcie_link_state": "not managed"

},

{

"index": 1,

"name": "NVIDIA RTX A4000",

"gpu_utilisation": 0,

"memory_utilisation": 0,

"power_watts": 10,

"power_limit_watts": 140,

"memory_total_gb": 15.99,

"memory_used_gb": 0.56,

"memory_free_gb": 15.43,

"memory_usage_percent": 3,

"temperature": 41,

"processes": [],

"pcie_link_state": "not managed"

}

]

```

# Start at Boot

Create `/etc/systemd/system/nvapi.service`:

```

[Unit]

Description=Run NVapi

After=network.target

[Service]

Type=simple

Environment="GOPATH=/home/ansible/go"

WorkingDirectory=/home/ansible/NVapi

ExecStart=/usr/bin/go run main.go -port 9999 -rate 1

Restart=always

User=ansible

# Environment="GPU_TEMP_CHECK_INTERVAL=5"

# Environment="GPU_TOTAL_POWER_CAP=400"

# Environment="GPU_0_LOW_TEMP=40"

# Environment="GPU_0_MEDIUM_TEMP=70"

# Environment="GPU_0_LOW_TEMP_LIMIT=135"

# Environment="GPU_0_MEDIUM_TEMP_LIMIT=120"

# Environment="GPU_0_HIGH_TEMP_LIMIT=100"

# Environment="GPU_1_LOW_TEMP=45"

# Environment="GPU_1_MEDIUM_TEMP=75"

# Environment="GPU_1_LOW_TEMP_LIMIT=140"

# Environment="GPU_1_MEDIUM_TEMP_LIMIT=125"

# Environment="GPU_1_HIGH_TEMP_LIMIT=110"

[Install]

WantedBy=multi-user.target

```

## Home Assistant

Add to Home Assistant `configuration.yaml` and restart HA (completely).

For a single GPU, this works:

```

sensor:

- platform: rest

name: MYPC GPU Information

resource: http://mypc:9999

method: GET

headers:

Content-Type: application/json

value_template: "{{ value_json[0].index }}"

json_attributes:

- name

- gpu_utilisation

- memory_utilisation

- power_watts

- power_limit_watts

- memory_total_gb

- memory_used_gb

- memory_free_gb

- memory_usage_percent

- temperature

scan_interval: 1 # seconds

- platform: template

sensors:

mypc_gpu_0_gpu:

friendly_name: "MYPC {{ state_attr('sensor.mypc_gpu_information', 'name') }} GPU"

value_template: "{{ state_attr('sensor.mypc_gpu_information', 'gpu_utilisation') }}"

unit_of_measurement: "%"

mypc_gpu_0_memory:

friendly_name: "MYPC {{ state_attr('sensor.mypc_gpu_information', 'name') }} Memory"

value_template: "{{ state_attr('sensor.mypc_gpu_information', 'memory_utilisation') }}"

unit_of_measurement: "%"

mypc_gpu_0_power:

friendly_name: "MYPC {{ state_attr('sensor.mypc_gpu_information', 'name') }} Power"

value_template: "{{ state_attr('sensor.mypc_gpu_information', 'power_watts') }}"

unit_of_measurement: "W"

mypc_gpu_0_power_limit:

friendly_name: "MYPC {{ state_attr('sensor.mypc_gpu_information', 'name') }} Power Limit"

value_template: "{{ state_attr('sensor.mypc_gpu_information', 'power_limit_watts') }}"

unit_of_measurement: "W"

mypc_gpu_0_temperature:

friendly_name: "MYPC {{ state_attr('sensor.mypc_gpu_information', 'name') }} Temperature"

value_template: "{{ state_attr('sensor.mypc_gpu_information', 'temperature') }}"

unit_of_measurement: "°C"

```

For multiple GPUs:

```

rest:

scan_interval: 1

resource: http://mypc:9999

sensor:

- name: "MYPC GPU0 Information"

value_template: "{{ value_json[0].index }}"

json_attributes_path: "$.0"

json_attributes:

- name

- gpu_utilisation

- memory_utilisation

- power_watts

- power_limit_watts

- memory_total_gb

- memory_used_gb

- memory_free_gb

- memory_usage_percent

- temperature

- name: "MYPC GPU1 Information"

value_template: "{{ value_json[1].index }}"

json_attributes_path: "$.1"

json_attributes:

- name

- gpu_utilisation

- memory_utilisation

- power_watts

- power_limit_watts

- memory_total_gb

- memory_used_gb

- memory_free_gb

- memory_usage_percent

- temperature

- platform: template

sensors:

mypc_gpu_0_gpu:

friendly_name: "MYPC GPU0 GPU"

value_template: "{{ state_attr('sensor.mypc_gpu0_information', 'gpu_utilisation') }}"

unit_of_measurement: "%"

mypc_gpu_0_memory:

friendly_name: "MYPC GPU0 Memory"

value_template: "{{ state_attr('sensor.mypc_gpu0_information', 'memory_utilisation') }}"

unit_of_measurement: "%"

mypc_gpu_0_power:

friendly_name: "MYPC GPU0 Power"

value_template: "{{ state_attr('sensor.mypc_gpu0_information', 'power_watts') }}"

unit_of_measurement: "W"

mypc_gpu_0_power_limit:

friendly_name: "MYPC GPU0 Power Limit"

value_template: "{{ state_attr('sensor.mypc_gpu0_information', 'power_limit_watts') }}"

unit_of_measurement: "W"

mypc_gpu_0_temperature:

friendly_name: "MYPC GPU0 Temperature"

value_template: "{{ state_attr('sensor.mypc_gpu0_information', 'temperature') }}"

unit_of_measurement: "C"

- platform: template

sensors:

mypc_gpu_1_gpu:

friendly_name: "MYPC GPU1 GPU"

value_template: "{{ state_attr('sensor.mypc_gpu1_information', 'gpu_utilisation') }}"

unit_of_measurement: "%"

mypc_gpu_1_memory:

friendly_name: "MYPC GPU1 Memory"

value_template: "{{ state_attr('sensor.mypc_gpu1_information', 'memory_utilisation') }}"

unit_of_measurement: "%"

mypc_gpu_1_power:

friendly_name: "MYPC GPU1 Power"

value_template: "{{ state_attr('sensor.mypc_gpu1_information', 'power_watts') }}"

unit_of_measurement: "W"

mypc_gpu_1_power_limit:

friendly_name: "MYPC GPU1 Power Limit"

value_template: "{{ state_attr('sensor.mypc_gpu1_information', 'power_limit_watts') }}"

unit_of_measurement: "W"

mypc_gpu_1_temperature:

friendly_name: "MYPC GPU1 Temperature"

value_template: "{{ state_attr('sensor.mypc_gpu1_information', 'temperature') }}"

unit_of_measurement: "C"

```

Basic entity card:

```

type: entities

entities:

- entity: sensor.mypc_gpu_0_gpu

secondary_info: last-updated

- entity: sensor.mypc_gpu_0_memory

secondary_info: last-updated

- entity: sensor.mypc_gpu_0_power

secondary_info: last-updated

- entity: sensor.mypc_gpu_0_power_limit

secondary_info: last-updated

- entity: sensor.mypc_gpu_0_temperature

secondary_info: last-updated

```

# Ansible Role

```

---

- name: install go

become: true

package:

name: golang-go

state: present

- name: git clone

git:

repo: "https://github.com/sammcj/NVApi.git"

dest: "/home/ansible/NVapi"

update: yes

force: true

# go run main.go -port 9999 -rate 1

- name: install systemd service

become: true

copy:

src: nvapi.service

dest: /etc/systemd/system/nvapi.service

- name: Reload systemd daemons, enable, and restart nvapi

become: true

systemd:

name: nvapi

daemon_reload: yes

enabled: yes

state: restarted

```

-

Che cosa significherebbe trattare l'IA come uno strumento invece che come una persona?

Dall’avvio di ChatGPT, le esplorazioni in due direzioni hanno preso velocità.

La prima direzione riguarda le capacità tecniche. Quanto grande possiamo addestrare un modello? Quanto bene può rispondere alle domande del SAT? Con quanta efficienza possiamo distribuirlo?

La seconda direzione riguarda il design dell’interazione. Come comunichiamo con un modello? Come possiamo usarlo per un lavoro utile? Quale metafora usiamo per ragionare su di esso?

La prima direzione è ampiamente seguita e enormemente finanziata, e per una buona ragione: i progressi nelle capacità tecniche sono alla base di ogni possibile applicazione. Ma la seconda è altrettanto cruciale per il campo e ha enormi incognite. Siamo solo a pochi anni dall’inizio dell’era dei grandi modelli. Quali sono le probabilità che abbiamo già capito i modi migliori per usarli?

Propongo una nuova modalità di interazione, in cui i modelli svolgano il ruolo di applicazioni informatiche (ad esempio app per telefoni): fornendo un’interfaccia grafica, interpretando gli input degli utenti e aggiornando il loro stato. In questa modalità, invece di essere un “agente” che utilizza un computer per conto dell’essere umano, l’IA può fornire un ambiente informatico più ricco e potente che possiamo utilizzare.

### Metafore per l’interazione

Al centro di un’interazione c’è una metafora che guida le aspettative di un utente su un sistema. I primi giorni dell’informatica hanno preso metafore come “scrivanie”, “macchine da scrivere”, “fogli di calcolo” e “lettere” e le hanno trasformate in equivalenti digitali, permettendo all’utente di ragionare sul loro comportamento. Puoi lasciare qualcosa sulla tua scrivania e tornare a prenderlo; hai bisogno di un indirizzo per inviare una lettera. Man mano che abbiamo sviluppato una conoscenza culturale di questi dispositivi, la necessità di queste particolari metafore è scomparsa, e con esse i design di interfaccia skeumorfici che le rafforzavano. Come un cestino o una matita, un computer è ora una metafora di se stesso.

La metafora dominante per i grandi modelli oggi è modello-come-persona. Questa è una metafora efficace perché le persone hanno capacità estese che conosciamo intuitivamente. Implica che possiamo avere una conversazione con un modello e porgli domande; che il modello possa collaborare con noi su un documento o un pezzo di codice; che possiamo assegnargli un compito da svolgere da solo e che tornerà quando sarà finito.

Tuttavia, trattare un modello come una persona limita profondamente il nostro modo di pensare all’interazione con esso. Le interazioni umane sono intrinsecamente lente e lineari, limitate dalla larghezza di banda e dalla natura a turni della comunicazione verbale. Come abbiamo tutti sperimentato, comunicare idee complesse in una conversazione è difficile e dispersivo. Quando vogliamo precisione, ci rivolgiamo invece a strumenti, utilizzando manipolazioni dirette e interfacce visive ad alta larghezza di banda per creare diagrammi, scrivere codice e progettare modelli CAD. Poiché concepiamo i modelli come persone, li utilizziamo attraverso conversazioni lente, anche se sono perfettamente in grado di accettare input diretti e rapidi e di produrre risultati visivi. Le metafore che utilizziamo limitano le esperienze che costruiamo, e la metafora modello-come-persona ci impedisce di esplorare il pieno potenziale dei grandi modelli.

Per molti casi d’uso, e specialmente per il lavoro produttivo, credo che il futuro risieda in un’altra metafora: modello-come-computer.

### Usare un’IA come un computer

Sotto la metafora modello-come-computer, interagiremo con i grandi modelli seguendo le intuizioni che abbiamo sulle applicazioni informatiche (sia su desktop, tablet o telefono). Nota che ciò non significa che il modello sarà un’app tradizionale più di quanto il desktop di Windows fosse una scrivania letterale. “Applicazione informatica” sarà un modo per un modello di rappresentarsi a noi. Invece di agire come una persona, il modello agirà come un computer.

Agire come un computer significa produrre un’interfaccia grafica. Al posto del flusso lineare di testo in stile telescrivente fornito da ChatGPT, un sistema modello-come-computer genererà qualcosa che somiglia all’interfaccia di un’applicazione moderna: pulsanti, cursori, schede, immagini, grafici e tutto il resto. Questo affronta limitazioni chiave dell’interfaccia di chat standard modello-come-persona:

- **Scoperta.** Un buon strumento suggerisce i suoi usi. Quando l’unica interfaccia è una casella di testo vuota, spetta all’utente capire cosa fare e comprendere i limiti del sistema. La barra laterale Modifica in Lightroom è un ottimo modo per imparare l’editing fotografico perché non si limita a dirti cosa può fare questa applicazione con una foto, ma cosa potresti voler fare. Allo stesso modo, un’interfaccia modello-come-computer per DALL-E potrebbe mostrare nuove possibilità per le tue generazioni di immagini.

- **Efficienza.** La manipolazione diretta è più rapida che scrivere una richiesta a parole. Per continuare l’esempio di Lightroom, sarebbe impensabile modificare una foto dicendo a una persona quali cursori spostare e di quanto. Ci vorrebbe un giorno intero per chiedere un’esposizione leggermente più bassa e una vibranza leggermente più alta, solo per vedere come apparirebbe. Nella metafora modello-come-computer, il modello può creare strumenti che ti permettono di comunicare ciò che vuoi più efficientemente e quindi di fare le cose più rapidamente.

A differenza di un’app tradizionale, questa interfaccia grafica è generata dal modello su richiesta. Questo significa che ogni parte dell’interfaccia che vedi è rilevante per ciò che stai facendo in quel momento, inclusi i contenuti specifici del tuo lavoro. Significa anche che, se desideri un’interfaccia più ampia o diversa, puoi semplicemente richiederla. Potresti chiedere a DALL-E di produrre alcuni preset modificabili per le sue impostazioni ispirati da famosi artisti di schizzi. Quando clicchi sul preset Leonardo da Vinci, imposta i cursori per disegni prospettici altamente dettagliati in inchiostro nero. Se clicchi su Charles Schulz, seleziona fumetti tecnicolor 2D a basso dettaglio.

### Una bicicletta della mente proteiforme

La metafora modello-come-persona ha una curiosa tendenza a creare distanza tra l’utente e il modello, rispecchiando il divario di comunicazione tra due persone che può essere ridotto ma mai completamente colmato. A causa della difficoltà e del costo di comunicare a parole, le persone tendono a suddividere i compiti tra loro in blocchi grandi e il più indipendenti possibile. Le interfacce modello-come-persona seguono questo schema: non vale la pena dire a un modello di aggiungere un return statement alla tua funzione quando è più veloce scriverlo da solo. Con il sovraccarico della comunicazione, i sistemi modello-come-persona sono più utili quando possono fare un intero blocco di lavoro da soli. Fanno le cose per te.

Questo contrasta con il modo in cui interagiamo con i computer o altri strumenti. Gli strumenti producono feedback visivi in tempo reale e sono controllati attraverso manipolazioni dirette. Hanno un overhead comunicativo così basso che non è necessario specificare un blocco di lavoro indipendente. Ha più senso mantenere l’umano nel loop e dirigere lo strumento momento per momento. Come stivali delle sette leghe, gli strumenti ti permettono di andare più lontano a ogni passo, ma sei ancora tu a fare il lavoro. Ti permettono di fare le cose più velocemente.

Considera il compito di costruire un sito web usando un grande modello. Con le interfacce di oggi, potresti trattare il modello come un appaltatore o un collaboratore. Cercheresti di scrivere a parole il più possibile su come vuoi che il sito appaia, cosa vuoi che dica e quali funzionalità vuoi che abbia. Il modello genererebbe una prima bozza, tu la eseguirai e poi fornirai un feedback. “Fai il logo un po’ più grande”, diresti, e “centra quella prima immagine principale”, e “deve esserci un pulsante di login nell’intestazione”. Per ottenere esattamente ciò che vuoi, invierai una lista molto lunga di richieste sempre più minuziose.

Un’interazione alternativa modello-come-computer sarebbe diversa: invece di costruire il sito web, il modello genererebbe un’interfaccia per te per costruirlo, dove ogni input dell’utente a quell’interfaccia interroga il grande modello sotto il cofano. Forse quando descrivi le tue necessità creerebbe un’interfaccia con una barra laterale e una finestra di anteprima. All’inizio la barra laterale contiene solo alcuni schizzi di layout che puoi scegliere come punto di partenza. Puoi cliccare su ciascuno di essi, e il modello scrive l’HTML per una pagina web usando quel layout e lo visualizza nella finestra di anteprima. Ora che hai una pagina su cui lavorare, la barra laterale guadagna opzioni aggiuntive che influenzano la pagina globalmente, come accoppiamenti di font e schemi di colore. L’anteprima funge da editor WYSIWYG, permettendoti di afferrare elementi e spostarli, modificarne i contenuti, ecc. A supportare tutto ciò è il modello, che vede queste azioni dell’utente e riscrive la pagina per corrispondere ai cambiamenti effettuati. Poiché il modello può generare un’interfaccia per aiutare te e lui a comunicare più efficientemente, puoi esercitare più controllo sul prodotto finale in meno tempo.

La metafora modello-come-computer ci incoraggia a pensare al modello come a uno strumento con cui interagire in tempo reale piuttosto che a un collaboratore a cui assegnare compiti. Invece di sostituire un tirocinante o un tutor, può essere una sorta di bicicletta proteiforme per la mente, una che è sempre costruita su misura esattamente per te e il terreno che intendi attraversare.

### Un nuovo paradigma per l’informatica?

I modelli che possono generare interfacce su richiesta sono una frontiera completamente nuova nell’informatica. Potrebbero essere un paradigma del tutto nuovo, con il modo in cui cortocircuitano il modello di applicazione esistente. Dare agli utenti finali il potere di creare e modificare app al volo cambia fondamentalmente il modo in cui interagiamo con i computer. Al posto di una singola applicazione statica costruita da uno sviluppatore, un modello genererà un’applicazione su misura per l’utente e le sue esigenze immediate. Al posto della logica aziendale implementata nel codice, il modello interpreterà gli input dell’utente e aggiornerà l’interfaccia utente. È persino possibile che questo tipo di interfaccia generativa sostituisca completamente il sistema operativo, generando e gestendo interfacce e finestre al volo secondo necessità.

All’inizio, l’interfaccia generativa sarà un giocattolo, utile solo per l’esplorazione creativa e poche altre applicazioni di nicchia. Dopotutto, nessuno vorrebbe un’app di posta elettronica che occasionalmente invia email al tuo ex e mente sulla tua casella di posta. Ma gradualmente i modelli miglioreranno. Anche mentre si spingeranno ulteriormente nello spazio di esperienze completamente nuove, diventeranno lentamente abbastanza affidabili da essere utilizzati per un lavoro reale.

Piccoli pezzi di questo futuro esistono già. Anni fa Jonas Degrave ha dimostrato che ChatGPT poteva fare una buona simulazione di una riga di comando Linux. Allo stesso modo, websim.ai utilizza un LLM per generare siti web su richiesta mentre li navighi. Oasis, GameNGen e DIAMOND addestrano modelli video condizionati sull’azione su singoli videogiochi, permettendoti di giocare ad esempio a Doom dentro un grande modello. E Genie 2 genera videogiochi giocabili da prompt testuali. L’interfaccia generativa potrebbe ancora sembrare un’idea folle, ma non è così folle.

Ci sono enormi domande aperte su come apparirà tutto questo. Dove sarà inizialmente utile l’interfaccia generativa? Come condivideremo e distribuiremo le esperienze che creiamo collaborando con il modello, se esistono solo come contesto di un grande modello? Vorremmo davvero farlo? Quali nuovi tipi di esperienze saranno possibili? Come funzionerà tutto questo in pratica? I modelli genereranno interfacce come codice o produrranno direttamente pixel grezzi?

Non conosco ancora queste risposte. Dovremo sperimentare e scoprirlo!Che cosa significherebbe trattare l'IA come uno strumento invece che come una persona?

Dall’avvio di ChatGPT, le esplorazioni in due direzioni hanno preso velocità.

La prima direzione riguarda le capacità tecniche. Quanto grande possiamo addestrare un modello? Quanto bene può rispondere alle domande del SAT? Con quanta efficienza possiamo distribuirlo?

La seconda direzione riguarda il design dell’interazione. Come comunichiamo con un modello? Come possiamo usarlo per un lavoro utile? Quale metafora usiamo per ragionare su di esso?

La prima direzione è ampiamente seguita e enormemente finanziata, e per una buona ragione: i progressi nelle capacità tecniche sono alla base di ogni possibile applicazione. Ma la seconda è altrettanto cruciale per il campo e ha enormi incognite. Siamo solo a pochi anni dall’inizio dell’era dei grandi modelli. Quali sono le probabilità che abbiamo già capito i modi migliori per usarli?

Propongo una nuova modalità di interazione, in cui i modelli svolgano il ruolo di applicazioni informatiche (ad esempio app per telefoni): fornendo un’interfaccia grafica, interpretando gli input degli utenti e aggiornando il loro stato. In questa modalità, invece di essere un “agente” che utilizza un computer per conto dell’essere umano, l’IA può fornire un ambiente informatico più ricco e potente che possiamo utilizzare.

### Metafore per l’interazione

Al centro di un’interazione c’è una metafora che guida le aspettative di un utente su un sistema. I primi giorni dell’informatica hanno preso metafore come “scrivanie”, “macchine da scrivere”, “fogli di calcolo” e “lettere” e le hanno trasformate in equivalenti digitali, permettendo all’utente di ragionare sul loro comportamento. Puoi lasciare qualcosa sulla tua scrivania e tornare a prenderlo; hai bisogno di un indirizzo per inviare una lettera. Man mano che abbiamo sviluppato una conoscenza culturale di questi dispositivi, la necessità di queste particolari metafore è scomparsa, e con esse i design di interfaccia skeumorfici che le rafforzavano. Come un cestino o una matita, un computer è ora una metafora di se stesso.

La metafora dominante per i grandi modelli oggi è modello-come-persona. Questa è una metafora efficace perché le persone hanno capacità estese che conosciamo intuitivamente. Implica che possiamo avere una conversazione con un modello e porgli domande; che il modello possa collaborare con noi su un documento o un pezzo di codice; che possiamo assegnargli un compito da svolgere da solo e che tornerà quando sarà finito.

Tuttavia, trattare un modello come una persona limita profondamente il nostro modo di pensare all’interazione con esso. Le interazioni umane sono intrinsecamente lente e lineari, limitate dalla larghezza di banda e dalla natura a turni della comunicazione verbale. Come abbiamo tutti sperimentato, comunicare idee complesse in una conversazione è difficile e dispersivo. Quando vogliamo precisione, ci rivolgiamo invece a strumenti, utilizzando manipolazioni dirette e interfacce visive ad alta larghezza di banda per creare diagrammi, scrivere codice e progettare modelli CAD. Poiché concepiamo i modelli come persone, li utilizziamo attraverso conversazioni lente, anche se sono perfettamente in grado di accettare input diretti e rapidi e di produrre risultati visivi. Le metafore che utilizziamo limitano le esperienze che costruiamo, e la metafora modello-come-persona ci impedisce di esplorare il pieno potenziale dei grandi modelli.

Per molti casi d’uso, e specialmente per il lavoro produttivo, credo che il futuro risieda in un’altra metafora: modello-come-computer.

### Usare un’IA come un computer

Sotto la metafora modello-come-computer, interagiremo con i grandi modelli seguendo le intuizioni che abbiamo sulle applicazioni informatiche (sia su desktop, tablet o telefono). Nota che ciò non significa che il modello sarà un’app tradizionale più di quanto il desktop di Windows fosse una scrivania letterale. “Applicazione informatica” sarà un modo per un modello di rappresentarsi a noi. Invece di agire come una persona, il modello agirà come un computer.

Agire come un computer significa produrre un’interfaccia grafica. Al posto del flusso lineare di testo in stile telescrivente fornito da ChatGPT, un sistema modello-come-computer genererà qualcosa che somiglia all’interfaccia di un’applicazione moderna: pulsanti, cursori, schede, immagini, grafici e tutto il resto. Questo affronta limitazioni chiave dell’interfaccia di chat standard modello-come-persona:

Scoperta. Un buon strumento suggerisce i suoi usi. Quando l’unica interfaccia è una casella di testo vuota, spetta all’utente capire cosa fare e comprendere i limiti del sistema. La barra laterale Modifica in Lightroom è un ottimo modo per imparare l’editing fotografico perché non si limita a dirti cosa può fare questa applicazione con una foto, ma cosa potresti voler fare. Allo stesso modo, un’interfaccia modello-come-computer per DALL-E potrebbe mostrare nuove possibilità per le tue generazioni di immagini.

Efficienza. La manipolazione diretta è più rapida che scrivere una richiesta a parole. Per continuare l’esempio di Lightroom, sarebbe impensabile modificare una foto dicendo a una persona quali cursori spostare e di quanto. Ci vorrebbe un giorno intero per chiedere un’esposizione leggermente più bassa e una vibranza leggermente più alta, solo per vedere come apparirebbe. Nella metafora modello-come-computer, il modello può creare strumenti che ti permettono di comunicare ciò che vuoi più efficientemente e quindi di fare le cose più rapidamente.

A differenza di un’app tradizionale, questa interfaccia grafica è generata dal modello su richiesta. Questo significa che ogni parte dell’interfaccia che vedi è rilevante per ciò che stai facendo in quel momento, inclusi i contenuti specifici del tuo lavoro. Significa anche che, se desideri un’interfaccia più ampia o diversa, puoi semplicemente richiederla. Potresti chiedere a DALL-E di produrre alcuni preset modificabili per le sue impostazioni ispirati da famosi artisti di schizzi. Quando clicchi sul preset Leonardo da Vinci, imposta i cursori per disegni prospettici altamente dettagliati in inchiostro nero. Se clicchi su Charles Schulz, seleziona fumetti tecnicolor 2D a basso dettaglio.

### Una bicicletta della mente proteiforme

La metafora modello-come-persona ha una curiosa tendenza a creare distanza tra l’utente e il modello, rispecchiando il divario di comunicazione tra due persone che può essere ridotto ma mai completamente colmato. A causa della difficoltà e del costo di comunicare a parole, le persone tendono a suddividere i compiti tra loro in blocchi grandi e il più indipendenti possibile. Le interfacce modello-come-persona seguono questo schema: non vale la pena dire a un modello di aggiungere un return statement alla tua funzione quando è più veloce scriverlo da solo. Con il sovraccarico della comunicazione, i sistemi modello-come-persona sono più utili quando possono fare un intero blocco di lavoro da soli. Fanno le cose per te.

Questo contrasta con il modo in cui interagiamo con i computer o altri strumenti. Gli strumenti producono feedback visivi in tempo reale e sono controllati attraverso manipolazioni dirette. Hanno un overhead comunicativo così basso che non è necessario specificare un blocco di lavoro indipendente. Ha più senso mantenere l’umano nel loop e dirigere lo strumento momento per momento. Come stivali delle sette leghe, gli strumenti ti permettono di andare più lontano a ogni passo, ma sei ancora tu a fare il lavoro. Ti permettono di fare le cose più velocemente.

Considera il compito di costruire un sito web usando un grande modello. Con le interfacce di oggi, potresti trattare il modello come un appaltatore o un collaboratore. Cercheresti di scrivere a parole il più possibile su come vuoi che il sito appaia, cosa vuoi che dica e quali funzionalità vuoi che abbia. Il modello genererebbe una prima bozza, tu la eseguirai e poi fornirai un feedback. “Fai il logo un po’ più grande”, diresti, e “centra quella prima immagine principale”, e “deve esserci un pulsante di login nell’intestazione”. Per ottenere esattamente ciò che vuoi, invierai una lista molto lunga di richieste sempre più minuziose.

Un’interazione alternativa modello-come-computer sarebbe diversa: invece di costruire il sito web, il modello genererebbe un’interfaccia per te per costruirlo, dove ogni input dell’utente a quell’interfaccia interroga il grande modello sotto il cofano. Forse quando descrivi le tue necessità creerebbe un’interfaccia con una barra laterale e una finestra di anteprima. All’inizio la barra laterale contiene solo alcuni schizzi di layout che puoi scegliere come punto di partenza. Puoi cliccare su ciascuno di essi, e il modello scrive l’HTML per una pagina web usando quel layout e lo visualizza nella finestra di anteprima. Ora che hai una pagina su cui lavorare, la barra laterale guadagna opzioni aggiuntive che influenzano la pagina globalmente, come accoppiamenti di font e schemi di colore. L’anteprima funge da editor WYSIWYG, permettendoti di afferrare elementi e spostarli, modificarne i contenuti, ecc. A supportare tutto ciò è il modello, che vede queste azioni dell’utente e riscrive la pagina per corrispondere ai cambiamenti effettuati. Poiché il modello può generare un’interfaccia per aiutare te e lui a comunicare più efficientemente, puoi esercitare più controllo sul prodotto finale in meno tempo.

La metafora modello-come-computer ci incoraggia a pensare al modello come a uno strumento con cui interagire in tempo reale piuttosto che a un collaboratore a cui assegnare compiti. Invece di sostituire un tirocinante o un tutor, può essere una sorta di bicicletta proteiforme per la mente, una che è sempre costruita su misura esattamente per te e il terreno che intendi attraversare.

### Un nuovo paradigma per l’informatica?

I modelli che possono generare interfacce su richiesta sono una frontiera completamente nuova nell’informatica. Potrebbero essere un paradigma del tutto nuovo, con il modo in cui cortocircuitano il modello di applicazione esistente. Dare agli utenti finali il potere di creare e modificare app al volo cambia fondamentalmente il modo in cui interagiamo con i computer. Al posto di una singola applicazione statica costruita da uno sviluppatore, un modello genererà un’applicazione su misura per l’utente e le sue esigenze immediate. Al posto della logica aziendale implementata nel codice, il modello interpreterà gli input dell’utente e aggiornerà l’interfaccia utente. È persino possibile che questo tipo di interfaccia generativa sostituisca completamente il sistema operativo, generando e gestendo interfacce e finestre al volo secondo necessità.

All’inizio, l’interfaccia generativa sarà un giocattolo, utile solo per l’esplorazione creativa e poche altre applicazioni di nicchia. Dopotutto, nessuno vorrebbe un’app di posta elettronica che occasionalmente invia email al tuo ex e mente sulla tua casella di posta. Ma gradualmente i modelli miglioreranno. Anche mentre si spingeranno ulteriormente nello spazio di esperienze completamente nuove, diventeranno lentamente abbastanza affidabili da essere utilizzati per un lavoro reale.

Piccoli pezzi di questo futuro esistono già. Anni fa Jonas Degrave ha dimostrato che ChatGPT poteva fare una buona simulazione di una riga di comando Linux. Allo stesso modo, websim.ai utilizza un LLM per generare siti web su richiesta mentre li navighi. Oasis, GameNGen e DIAMOND addestrano modelli video condizionati sull’azione su singoli videogiochi, permettendoti di giocare ad esempio a Doom dentro un grande modello. E Genie 2 genera videogiochi giocabili da prompt testuali. L’interfaccia generativa potrebbe ancora sembrare un’idea folle, ma non è così folle.

Ci sono enormi domande aperte su come apparirà tutto questo. Dove sarà inizialmente utile l’interfaccia generativa? Come condivideremo e distribuiremo le esperienze che creiamo collaborando con il modello, se esistono solo come contesto di un grande modello? Vorremmo davvero farlo? Quali nuovi tipi di esperienze saranno possibili? Come funzionerà tutto questo in pratica? I modelli genereranno interfacce come codice o produrranno direttamente pixel grezzi?

Non conosco ancora queste risposte. Dovremo sperimentare e scoprirlo!

Tradotto da:\

https://willwhitney.com/computing-inside-ai.htmlhttps://willwhitney.com/computing-inside-ai.html

-

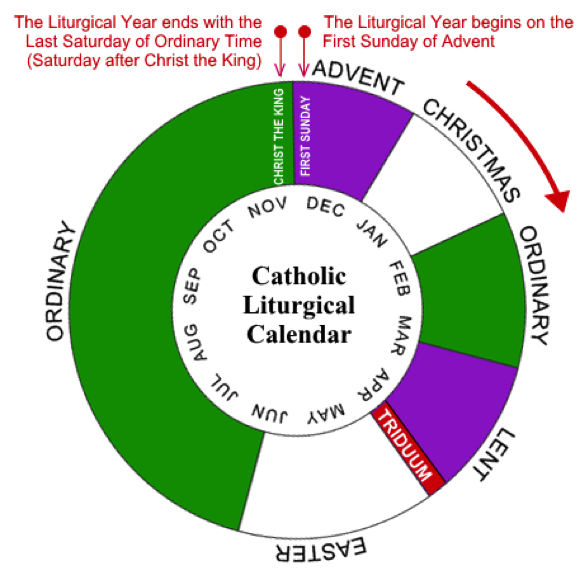

Christmas season hasn't actually started, yet, in Roman #Catholic Germany. We're in Advent until the evening of the 24th of December, at which point Christmas begins (with the Nativity, at Vespers), and continues on for 40 days until Mariä Lichtmess (Presentation of Christ in the temple) on February 2nd.

It's 40 days because that's how long the post-partum isolation is, before women were allowed back into the temple (after a ritual cleansing).

That is the day when we put away all of the Christmas decorations and bless the candles, for the next year. (Hence, the British name "Candlemas".) It used to also be when household staff would get paid their cash wages and could change employer. And it is the day precisely in the middle of winter.

Between Christmas Eve and Candlemas are many celebrations, concluding with the Twelfth Night called Epiphany or Theophany. This is the day some Orthodox celebrate Christ's baptism, so traditions rotate around blessing of waters.

The Monday after Epiphany was the start of the farming season, in England, so that Sunday all of the ploughs were blessed, but the practice has largely died out.

Our local tradition is for the altar servers to dress as the wise men and go door-to-door, carrying their star and looking for the Baby Jesus, who is rumored to be lying in a manger.

They collect cash gifts and chocolates, along the way, and leave the generous their powerful blessing, written over the door. The famous 20 * C + M + B * 25 blessing means "Christus mansionem benedicat" (Christ, bless this house), or "Caspar, Melchior, Balthasar" (the names of the three kings), depending upon who you ask.

They offer the cash to the Baby Jesus (once they find him in the church's Nativity scene), but eat the sweets, themselves. It is one of the biggest donation-collections in the world, called the "Sternsinger" (star singers). The money goes from the German children, to help children elsewhere, and they collect around €45 million in cash and coins, every year.

As an interesting aside:

The American "groundhog day", derives from one of the old farmers' sayings about Candlemas, brought over by the Pennsylvania Dutch. It says, that if the badger comes out of his hole and sees his shadow, then it'll remain cold for 4 more weeks. When they moved to the USA, they didn't have any badgers around, so they switched to groundhogs, as they also hibernate in winter.

-

Depuis sa création en 2009, Bitcoin suscite débats passionnés. Pour certains, il s’agit d’une tentative utopique d’émancipation du pouvoir étatique, inspirée par une philosophie libertarienne radicale. Pour d’autres, c'est une réponse pragmatique à un système monétaire mondial jugé défaillant. Ces deux perspectives, souvent opposées, méritent d’être confrontées pour mieux comprendre les enjeux et les limites de cette révolution monétaire.

### La critique économique de Bitcoin

David Cayla, dans ses dernières contributions, perçoit Bitcoin comme une tentative de réaliser une « utopie libertarienne ». Selon lui, les inventeurs des cryptomonnaies cherchent à créer une monnaie détachée de l’État et des rapports sociaux traditionnels, en s’inspirant de l’école autrichienne d’économie. Cette école, incarnée par des penseurs comme Friedrich Hayek, prône une monnaie privée et indépendante de l’intervention politique.

Cayla reproche à Bitcoin de nier la dimension intrinsèquement sociale et politique de la monnaie. Pour lui, toute monnaie repose sur la confiance collective et la régulation étatique. En rendant la monnaie exogène et dénuée de dette, Bitcoin chercherait à éviter les interactions sociales au profit d’une logique purement individuelle. Selon cette analyse, Bitcoin serait une illusion, incapable de remplacer les monnaies traditionnelles qui sont des « biens publics » soutenus par des institutions.

Cependant, cette critique semble minimiser la légitimité des motivations derrière Bitcoin. La crise financière de 2008, marquée par des abus systémiques et des sauvetages bancaires controversés, a largement érodé la confiance dans les systèmes monétaires centralisés. Bitcoin n’est pas qu’une réaction idéologique : c’est une tentative de créer un système monétaire transparent, immuable et résistant aux manipulations.

### La perspective maximaliste

Pour les bitcoiners, Bitcoin n’est pas une utopie mais une réponse pragmatique à un problème réel : la centralisation excessive et les défaillances des monnaies fiat. Contrairement à ce que Cayla affirme, Bitcoin ne cherche pas à nier la société, mais à protéger les individus de la coercition étatique. En déplaçant la confiance des institutions vers un protocole neutre et transparent, Bitcoin redéfinit les bases des échanges monétaires.

L’accusation selon laquelle Bitcoin serait « asocial » ignore son écosystème communautaire mondial. Les bitcoiners collaborent activement à l’amélioration du réseau, organisent des événements éducatifs et développent des outils inclusifs. Bitcoin n’est pas un rejet des rapports sociaux, mais une tentative de les reconstruire sur des bases plus justes et transparentes.

Un maximaliste insisterait également sur l’émergence historique de la monnaie. Contrairement à l’idée que l’État aurait toujours créé la monnaie, de nombreux exemples montrent que celle-ci émergeait souvent du marché, avant d’être capturée par les gouvernements pour financer des guerres ou imposer des monopoles. Bitcoin réintroduit une monnaie à l’abri des manipulations politiques, similaire aux systèmes basés sur l’or, mais en mieux : numérique, immuable et accessible.

### Utopie contre réalité

Si Cayla critique l’absence de dette dans Bitcoin, Nous y voyons une vertu. La « monnaie-dette » actuelle repose sur un système de création monétaire inflationniste qui favorise les inégalités et l’érosion du pouvoir d’achat. En limitant son offre à 21 millions d’unités, Bitcoin offre une alternative saine et prévisible, loin des politiques monétaires arbitraires.

Cependant, les maximalistes ne nient pas que Bitcoin ait encore des défis à relever. La volatilité de sa valeur, sa complexité technique pour les nouveaux utilisateurs, et les tensions entre réglementation et liberté individuelle sont des questions ouvertes. Mais pour autant, ces épreuves ne remettent pas en cause sa pertinence. Au contraire, elles illustrent l’importance de réfléchir à des modèles alternatifs.

### Conclusion : un débat essentiel

Bitcoin n’est pas une utopie libertarienne, mais une révolution monétaire sans précédent. Les critiques de Cayla, bien qu’intellectuellement stimulantes, manquent de saisir l’essence de Bitcoin : une monnaie qui libère les individus des abus systémiques des États et des institutions centralisées. Loin de simplement avoir un « potentiel », Bitcoin est déjà en train de redéfinir les rapports entre la monnaie, la société et la liberté. Bitcoin offre une expérience unique : celle d’une monnaie mondiale, neutre et décentralisée, qui redonne le pouvoir aux individus. Que l’on soit sceptique ou enthousiaste, il est clair que Bitcoin oblige à repenser les rapports entre monnaie, société et État.

En fin de compte, le débat sur Bitcoin n’est pas seulement une querelle sur sa légitimité, mais une interrogation sur la manière dont il redessine les rapports sociaux et sociétaux autour de la monnaie. En rendant le pouvoir monétaire à chaque individu, Bitcoin propose un modèle où les interactions économiques peuvent être réalisées sans coercition, renforçant ainsi la confiance mutuelle et les communautés globales. Cette discussion, loin d’être close, ne fait que commencer.

-

I started a long series of articles about how to model different types of knowledge graphs in the relational model, which makes on-device memory models for AI agents possible.

We model-directed graphs

Also, graphs of entities

We even model hypergraphs

Last time, we discussed why classical triple and simple knowledge graphs are insufficient for AI agents and complex memory, especially in the domain of time-aware or multi-model knowledge.

So why do we need metagraphs, and what kind of challenge could they help us to solve?

- complex and nested event and temporal context and temporal relations as edges

- multi-mode and multilingual knowledge

- human-like memory for AI agents that has multiple contexts and relations between knowledge in neuron-like networks

## MetaGraphs

A meta graph is a concept that extends the idea of a graph by allowing edges to become graphs. Meta Edges connect a set of nodes, which could also be subgraphs. So, at some level, node and edge are pretty similar in properties but act in different roles in a different context.

Also, in some cases, edges could be referenced as nodes.

This approach enables the representation of more complex relationships and hierarchies than a traditional graph structure allows. Let’s break down each term to understand better metagraphs and how they differ from hypergraphs and graphs.

## Graph Basics

- A standard **graph** has a set of **nodes** (or vertices) and **edges** (connections between nodes).

- Edges are generally simple and typically represent a binary relationship between two nodes.

- For instance, an edge in a social network graph might indicate a “friend” relationship between two people (nodes).

## Hypergraph

- A **hypergraph** extends the concept of an edge by allowing it to connect any number of nodes, not just two.

- Each connection, called a **hyperedge**, can link multiple nodes.

- This feature allows hypergraphs to model more complex relationships involving multiple entities simultaneously. For example, a hyperedge in a hypergraph could represent a project team, connecting all team members in a single relation.

- Despite its flexibility, a hypergraph doesn’t capture hierarchical or nested structures; it only generalizes the number of connections in an edge.

## Metagraph

- A **metagraph** allows the edges to be graphs themselves. This means each edge can contain its own nodes and edges, creating nested, hierarchical structures.

- In a meta graph, an edge could represent a relationship defined by a graph. For instance, a meta graph could represent a network of organizations where each organization’s structure (departments and connections) is represented by its own internal graph and treated as an edge in the larger meta graph.

- This recursive structure allows metagraphs to model complex data with multiple layers of abstraction. They can capture multi-node relationships (as in hypergraphs) and detailed, structured information about each relationship.

## Named Graphs and Graph of Graphs

As you can notice, the structure of a metagraph is quite complex and could be complex to model in relational and classical RDF setups. It could create a challenge of luck of tools and software solutions for your problem.

If you need to model nested graphs, you could use a much simpler model of Named graphs, which could take you quite far.

The concept of the named graph came from the RDF community, which needed to group some sets of triples. In this way, you form subgraphs inside an existing graph. You could refer to the subgraph as a regular node. This setup simplifies complex graphs, introduces hierarchies, and even adds features and properties of hypergraphs while keeping a directed nature.

It looks complex, but it is not so hard to model it with a slight modification of a directed graph.

So, the node could host graphs inside. Let's reflect this fact with a location for a node. If a node belongs to a main graph, we could set the location to null or introduce a main node . it is up to you

Nodes could have edges to nodes in different subgraphs. This structure allows any kind of nesting graphs. Edges stay location-free

## Meta Graphs in Relational Model

Let’s try to make several attempts to model different meta-graphs with some constraints.

## Directed Metagraph where edges are not used as nodes and could not contain subgraphs

In this case, the edge always points to two sets of nodes. This introduces an overhead of creating a node set for a single node. In this model, we can model empty node sets that could require application-level constraints to prevent such cases.

## Directed Metagraph where edges are not used as nodes and could contain subgraphs

Adding a node set that could model a subgraph located in an edge is easy but could be separate from in-vertex or out-vert.

I also do not see a direct need to include subgraphs to a node, as we could just use a node set interchangeably, but it still could be a case.

## Directed Metagraph where edges are used as nodes and could contain subgraphs

As you can notice, we operate all the time with node sets. We could simply allow the extension node set to elements set that include node and edge IDs, but in this case, we need to use uuid or any other strategy to differentiate node IDs from edge IDs. In this case, we have a collision of ephemeral edges or ephemeral nodes when we want to change the role and purpose of the node as an edge or vice versa.

A full-scale metagraph model is way too complex for a relational database.

So we need a better model.

Now, we have more flexibility but loose structural constraints. We cannot show that the element should have one vertex, one vertex, or both. This type of constraint has been moved to the application level. Also, the crucial question is about query and retrieval needs.

Any meta-graph model should be more focused on domain and needs and should be used in raw form. We did it for a pure theoretical purpose.

-

Hey folks! Today, let’s dive into the intriguing world of neurosymbolic approaches, retrieval-augmented generation (RAG), and personal knowledge graphs (PKGs). Together, these concepts hold much potential for bringing true reasoning capabilities to large language models (LLMs). So, let’s break down how symbolic logic, knowledge graphs, and modern AI can come together to empower future AI systems to reason like humans.

## The Neurosymbolic Approach: What It Means ?

Neurosymbolic AI combines two historically separate streams of artificial intelligence: symbolic reasoning and neural networks. Symbolic AI uses formal logic to process knowledge, similar to how we might solve problems or deduce information. On the other hand, neural networks, like those underlying GPT-4, focus on learning patterns from vast amounts of data — they are probabilistic statistical models that excel in generating human-like language and recognizing patterns but often lack deep, explicit reasoning.

While GPT-4 can produce impressive text, it’s still not very effective at reasoning in a truly logical way. Its foundation, transformers, allows it to excel in pattern recognition, but the models struggle with reasoning because, at their core, they rely on statistical probabilities rather than true symbolic logic. This is where neurosymbolic methods and knowledge graphs come in.

## Symbolic Calculations and the Early Vision of AI