-

@ 04c915da:3dfbecc9

2025-03-26 20:54:33

Capitalism is the most effective system for scaling innovation. The pursuit of profit is an incredibly powerful human incentive. Most major improvements to human society and quality of life have resulted from this base incentive. Market competition often results in the best outcomes for all.

That said, some projects can never be monetized. They are open in nature and a business model would centralize control. Open protocols like bitcoin and nostr are not owned by anyone and if they were it would destroy the key value propositions they provide. No single entity can or should control their use. Anyone can build on them without permission.

As a result, open protocols must depend on donation based grant funding from the people and organizations that rely on them. This model works but it is slow and uncertain, a grind where sustainability is never fully reached but rather constantly sought. As someone who has been incredibly active in the open source grant funding space, I do not think people truly appreciate how difficult it is to raise charitable money and deploy it efficiently.

Projects that can be monetized should be. Profitability is a super power. When a business can generate revenue, it taps into a self sustaining cycle. Profit fuels growth and development while providing projects independence and agency. This flywheel effect is why companies like Google, Amazon, and Apple have scaled to global dominance. The profit incentive aligns human effort with efficiency. Businesses must innovate, cut waste, and deliver value to survive.

Contrast this with non monetized projects. Without profit, they lean on external support, which can dry up or shift with donor priorities. A profit driven model, on the other hand, is inherently leaner and more adaptable. It is not charity but survival. When survival is tied to delivering what people want, scale follows naturally.

The real magic happens when profitable, sustainable businesses are built on top of open protocols and software. Consider the many startups building on open source software stacks, such as Start9, Mempool, and Primal, offering premium services on top of the open source software they build out and maintain. Think of companies like Block or Strike, which leverage bitcoin’s open protocol to offer their services on top. These businesses amplify the open software and protocols they build on, driving adoption and improvement at a pace donations alone could never match.

When you combine open software and protocols with profit driven business, the result is lean, sustainable companies that grow faster and serve more people than either could alone. Bitcoin’s network, for instance, benefits from businesses that profit off its existence, while nostr will expand as developers monetize apps built on the protocol.

Capitalism scales best because competition results in efficiency. Donation funded protocols and software lay the groundwork, while market driven businesses build on top. The profit incentive acts as a filter, ensuring resources flow to what works, while open systems keep the playing field accessible, empowering users and builders. Together, they create a flywheel of innovation, growth, and global benefit.

-

@ 04c915da:3dfbecc9

2025-03-25 17:43:44

One of the most common criticisms leveled against nostr is the perceived lack of assurance when it comes to data storage. Critics argue that without a centralized authority guaranteeing that all data is preserved, important information will be lost. They also claim that running a relay will become prohibitively expensive. While there is truth to these concerns, they miss the mark. The genius of nostr lies in its flexibility, resilience, and the way it harnesses human incentives to ensure data availability in practice.

A nostr relay is simply a server that holds cryptographically verifiable signed data and makes it available to others. Relays are simple, flexible, open, and require no permission to run. Critics are right that operating a relay attempting to store all nostr data will be costly. What they miss is that most will not run all encompassing archive relays. Nostr does not rely on massive archive relays. Instead, anyone can run a relay and choose to store whatever subset of data they want. This keeps costs low and operations flexible, making relay operation accessible to all sorts of individuals and entities with varying use cases.

Critics are correct that there is no ironclad guarantee that every piece of data will always be available. Unlike bitcoin where data permanence is baked into the system at a steep cost, nostr does not promise that every random note or meme will be preserved forever. That said, in practice, any data perceived as valuable by someone will likely be stored and distributed by multiple entities. If something matters to someone, they will keep a signed copy.

Nostr is the Streisand Effect in protocol form. The Streisand effect is when an attempt to suppress information backfires, causing it to spread even further. With nostr, anyone can broadcast signed data, anyone can store it, and anyone can distribute it. Try to censor something important? Good luck. The moment it catches attention, it will be stored on relays across the globe, copied, and shared by those who find it worth keeping. Data deemed important will be replicated across servers by individuals acting in their own interest.

Nostr’s distributed nature ensures that the system does not rely on a single point of failure or a corporate overlord. Instead, it leans on the collective will of its users. The result is a network where costs stay manageable, participation is open to all, and valuable verifiable data is stored and distributed forever.

-

@ 39cc53c9:27168656

2025-03-30 05:54:48

> [Read the original blog post](https://blog.kycnot.me/p/monero-history)

Bitcoin enthusiasts frequently and correctly remark how much value it adds to Bitcoin not to have a face, a leader, or a central authority behind it. This particularity means there isn't a single person to exert control over, or a single human point of failure who could become corrupt or harmful to the project.

Because of this, it is said that no other coin can be equally valuable as Bitcoin in terms of decentralization and trustworthiness. Bitcoin is unique not just for being first, but also because of how the events behind its inception developed. This implies that, from Bitcoin onwards, any coin created would have been created by someone, consequently having an authority behind it. For this and some other reasons, some people refer to Bitcoin as "[The Immaculate Conception](https://yewtu.be/watch?v=FXvQcuIb5rU)".

While other coins may have their own unique features and advantages, they may not be able to replicate Bitcoin's community-driven nature. However, one other cryptocurrency shares a similar story of mystery behind its creation: **Monero**.

## History of Monero

### Bytecoin and CryptoNote

In March 2014, a Bitcointalk thread titled "*Bytecoin. Secure, private, untraceable since 2012*" was initiated by a user under the nickname "**DStrange**"[^1^]. DStrange presented Bytecoin (BCN) as a unique cryptocurrency, in operation since July 2012. Unlike Bitcoin, it employed a new algorithm known as CryptoNote.

DStrange apparently stumbled upon the Bytecoin website by chance while mining a dying bitcoin fork, and decided to create a thread on Bitcointalk[^1^]. This sparked curiosity among some users, who wondered how Bytecoin could have remained unnoticed from its alleged launch in 2012 until then[^2^] [^3^].

Some time after, a user brought up the "CryptoNote v2.0" whitepaper for the first time, underlining its innovative features[^4^]. Authored by the pseudonymous **Nicolas van Saberhagen** in October 2013, the CryptoNote v2 whitepaper[^5^] highlighted the traceability and privacy problems in Bitcoin. Saberhagen argued that these flaws could not be quickly fixed, suggesting it would be more efficient to start a new project rather than trying to patch the original[^5^], a statement similar to one made by Satoshi Nakamoto[^6^].

Checking with Saberhagen's digital signature, the release date of the whitepaper seemed correct, which would mean that CryptoNote (v1) was created in 2012[^7^] [^8^], although there's an important detail: *"Signing time is from the clock on the signer's computer"* [^9^].

Moreover, the whitepaper v1 contains a footnote link to a Bitcointalk post dated May 5, 2013[^10^], making it impossible for the whitepaper to have been signed and released on December 12, 2012.

As the narrative developed, users discovered that a significant **80% portion of Bytecoin had been pre-mined**[^11^] and blockchain dates seemed to be faked to make it look like it had been operating since 2012, leading to controversy surrounding the project.

The origins of CryptoNote and Bytecoin remain mysterious, leaving suspicions of a possible scam attempt, although a good amount of work and thought clearly went into the whitepaper.

### The fork

In April 2014, the Bitcointalk user **`thankful_for_today`**, who had also participated in the Bytecoin thread[^12^], announced plans to launch a Bytecoin fork named **Bitmonero**[^13^] [^14^].

He described his primary motivation for the fork as follows: *"Because there is a number of technical and marketing issues I wanted to do differently. And also because I like ideas and technology and I want it to succeed"*[^14^]. This time, Bitmonero did things differently from Bytecoin: there was no premine or instamine, and no portion of the block reward went to development.

However, thankful_for_today proposed controversial changes that the community disagreed with. **Johnny Mnemonic** relates the events surrounding Bitmonero and thankful_for_today in a Bitcointalk comment[^15^]:

> When thankful_for_today launched BitMonero [...] he ignored everything that was discussed and just did what he wanted. The block reward was considerably steeper than what everyone was expecting. He also moved forward with 1-minute block times despite everyone's concerns about the increase of orphan blocks. He also didn't address the tail emission concern that should've (in my opinion) been in the code at launch time. Basically, he messed everything up. *Then, he disappeared*.

After disappearing for a while, thankful_for_today returned to find that the community had taken over the project. Johnny Mnemonic continues:

> I, and others, started working on new forks that were closer to what everyone else was hoping for. [...] it was decided that the BitMonero project should just be taken over. There were like 9 or 10 interested parties at the time if my memory is correct. We voted on IRC to drop the "bit" from BitMonero and move forward with the project. Thankful_for_today suddenly resurfaced, and wasn't happy to learn the community had assumed control of the coin. He attempted to maintain his own fork (still calling it "BitMonero") for a while, but that quickly fell into obscurity.

The unfolding of these events shows us the roots of Monero. Much like Satoshi Nakamoto, the creators behind CryptoNote/Bytecoin and thankful_for_today remain a mystery[^17^] [^18^], having disappeared without a trace. This enigma only adds to Monero's value.

Since the community took over development, believing in the project's potential and its ability to be guided in a better direction, Monero was given one of Bitcoin's most important qualities: **a leaderless nature**. With no single face or entity directing its path, Monero is safe from potential corruption or harm from a "central authority".

The community has continued developing Monero to this day. Since then, Monero has undergone many technological improvements, migrations, and achievements, such as [RingCT](https://www.getmonero.org/resources/moneropedia/ringCT.html) and [RandomX](https://github.com/tevador/randomx). It has also developed its own [Community Crowdfunding System](https://ccs.getmonero.org/), conferences such as [MoneroKon](https://monerokon.org/) and [Monerotopia](https://monerotopia.com/) take place every year, and it has a very active [community](https://www.getmonero.org/community/hangouts/) around it.

> Monero continues to develop with goals of privacy and security first, ease of use and efficiency second. [^16^]

This stands as a testament to the power of a dedicated community operating without a central figure of authority. This decentralized approach aligns with the original ethos of cryptocurrency, making Monero a prime example of community-driven innovation. For this, I thank all the people involved in Monero who led it to where it is today.

*If you find any information that seems incorrect, unclear or any missing important events, please [contact me](https://kycnot.me/about#contact) and I will make the necessary changes.*

### Sources of interest

* https://forum.getmonero.org/20/general-discussion/211/history-of-monero

* https://monero.stackexchange.com/questions/852/what-is-the-origin-of-monero-and-its-relationship-to-bytecoin

* https://en.wikipedia.org/wiki/Monero

* https://bitcointalk.org/index.php?topic=583449.0

* https://bitcointalk.org/index.php?topic=563821.0

* https://bitcointalk.org/index.php?action=profile;u=233561

* https://bitcointalk.org/index.php?topic=512747.0

* https://bitcointalk.org/index.php?topic=740112.0

* https://monero.stackexchange.com/a/1024

* https://inspec2t-project.eu/cryptocurrency-with-a-focus-on-anonymity-these-facts-are-known-about-monero/

* https://medium.com/coin-story/coin-perspective-13-riccardo-spagni-69ef82907bd1

* https://www.getmonero.org/resources/about/

* https://www.wired.com/2017/01/monero-drug-dealers-cryptocurrency-choice-fire/

* https://www.monero.how/why-monero-vs-bitcoin

* https://old.reddit.com/r/Monero/comments/u8e5yr/satoshi_nakamoto_talked_about_privacy_features/

[^1^]: https://bitcointalk.org/index.php?topic=512747.0

[^2^]: https://bitcointalk.org/index.php?topic=512747.msg5901770#msg5901770

[^3^]: https://bitcointalk.org/index.php?topic=512747.msg5950051#msg5950051

[^4^]: https://bitcointalk.org/index.php?topic=512747.msg5953783#msg5953783

[^5^]: https://bytecoin.org/old/whitepaper.pdf

[^6^]: https://bitcointalk.org/index.php?topic=770.msg8637#msg8637

[^7^]: https://bitcointalk.org/index.php?topic=512747.msg7039536#msg7039536

[^8^]: https://bitcointalk.org/index.php?topic=512747.msg7039689#msg7039689

[^9^]: https://i.stack.imgur.com/qtJ43.png

[^10^]: https://bitcointalk.org/index.php?topic=740112

[^11^]: https://bitcointalk.org/index.php?topic=512747.msg6265128#msg6265128

[^12^]: https://bitcointalk.org/index.php?topic=512747.msg5711328#msg5711328

[^13^]: https://bitcointalk.org/index.php?topic=512747.msg6146717#msg6146717

[^14^]: https://bitcointalk.org/index.php?topic=563821.0

[^15^]: https://bitcointalk.org/index.php?topic=583449.msg10731078#msg10731078

[^16^]: https://www.getmonero.org/resources/about/

[^17^]: https://old.reddit.com/r/Monero/comments/lz2e5v/going_deep_in_the_cryptonote_rabbit_hole_who_was/

[^18^]: https://old.reddit.com/r/Monero/comments/oxpimb/is_there_any_evidence_that_thankful_for_today/

-

@ b2d670de:907f9d4a

2025-03-25 20:17:57

This guide will walk you through setting up your own Strfry Nostr relay on a Debian/Ubuntu server and making it accessible exclusively as a TOR hidden service. By the end, you'll have a privacy-focused relay that operates entirely within the TOR network, enhancing both your privacy and that of your users.

## Table of Contents

1. Prerequisites

2. Initial Server Setup

3. Installing Strfry Nostr Relay

4. Configuring Your Relay

5. Setting Up TOR

6. Making Your Relay Available on TOR

7. Testing Your Setup

8. Maintenance and Security

9. Troubleshooting

## Prerequisites

- A Debian or Ubuntu server

- Basic familiarity with command line operations (most steps are explained in detail)

- Root or sudo access to your server

## Initial Server Setup

First, let's make sure your server is properly set up and secured.

### Update Your System

Connect to your server via SSH and update your system:

```bash

sudo apt update

sudo apt upgrade -y

```

### Set Up a Basic Firewall

Install and configure a basic firewall:

```bash

sudo apt install ufw -y

sudo ufw allow ssh

sudo ufw enable

```

This allows SSH connections while blocking other ports for security.

## Installing Strfry Nostr Relay

This guide includes the full range of steps needed to build and set up Strfry. It's simply based on the current version of the `DEPLOYMENT.md` document in the Strfry GitHub repository. If the build/setup process is changed in the repo, this document could get outdated. If so, please report to me that something is outdated and check for updated steps [here](https://github.com/hoytech/strfry/blob/master/docs/DEPLOYMENT.md).

### Install Dependencies

First, let's install the necessary dependencies. Each package serves a specific purpose in building and running Strfry:

```bash

sudo apt install -y git build-essential libyaml-perl libtemplate-perl libregexp-grammars-perl libssl-dev zlib1g-dev liblmdb-dev libflatbuffers-dev libsecp256k1-dev libzstd-dev

```

Here's why each dependency is needed:

**Basic Development Tools:**

- `git`: Version control system used to clone the Strfry repository and manage code updates

- `build-essential`: Meta-package that includes compilers (gcc, g++), make, and other essential build tools

**Perl Dependencies** (used for Strfry's build scripts):

- `libyaml-perl`: Perl interface to parse YAML configuration files

- `libtemplate-perl`: Template processing system used during the build process

- `libregexp-grammars-perl`: Advanced regular expression handling for Perl scripts

**Core Libraries for Strfry:**

- `libssl-dev`: Development files for OpenSSL, used for secure connections and cryptographic operations

- `zlib1g-dev`: Compression library that Strfry uses to reduce data size

- `liblmdb-dev`: Lightning Memory-Mapped Database library, which Strfry uses for its high-performance database backend

- `libflatbuffers-dev`: Memory-efficient serialization library for structured data

- `libsecp256k1-dev`: Optimized C library for EC operations on curve secp256k1, essential for Nostr's cryptographic signatures

- `libzstd-dev`: Fast real-time compression algorithm for efficient data storage and transmission

### Clone and Build Strfry

Clone the Strfry repository:

```bash

git clone https://github.com/hoytech/strfry.git

cd strfry

```

Build Strfry:

```bash

git submodule update --init

make setup-golpe

make -j2 # This uses 2 CPU cores. Adjust based on your server (e.g., -j4 for 4 cores)

```

This build process will take several minutes, especially on servers with limited CPU resources, so go get a coffee and post some great memes on nostr in the meantime.
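If you are unsure how many cores your server has, `nproc` (part of GNU coreutils on Debian/Ubuntu) reports it. The following is a minimal sketch; `build_jobs` is a hypothetical helper name used only for illustration, not something Strfry provides:

```bash
# Hypothetical helper: print the number of CPU cores available for the build,
# falling back to 1 if nproc is not available.
build_jobs() {
  nproc 2>/dev/null || echo 1
}

echo "make would run with -j$(build_jobs)"

# From inside the strfry directory, the build step then becomes:
#   make -j"$(build_jobs)"
```

Using every core speeds up the build but also raises memory use while compiling, so on a small server a lower value such as `-j2` may still be the safer choice.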

### Install Strfry

Install the Strfry binary to your system path:

```bash

sudo cp strfry /usr/local/bin

```

This makes the `strfry` command available system-wide, allowing it to be executed from any directory and by any user with the appropriate permissions.

## Configuring Your Relay

### Create Strfry User

Create a dedicated user for running Strfry. This enhances security by isolating the relay process:

```bash

sudo useradd -M -s /usr/sbin/nologin strfry

```

The `-M` flag prevents creating a home directory, and `-s /usr/sbin/nologin` prevents anyone from logging in as this user. This is a security best practice for service accounts.

### Create Data Directory

Create a directory for Strfry's data:

```bash

sudo mkdir /var/lib/strfry

sudo chown strfry:strfry /var/lib/strfry

sudo chmod 755 /var/lib/strfry

```

This creates a dedicated directory for Strfry's database and sets the appropriate permissions so that only the strfry user can write to it.

### Configure Strfry

Copy the sample configuration file:

```bash

sudo cp strfry.conf /etc/strfry.conf

```

Edit the configuration file:

```bash

sudo nano /etc/strfry.conf

```

Modify the database path:

```

# Find this line:

db = "./strfry-db/"

# Change it to:

db = "/var/lib/strfry/"

```
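If you would rather not edit the file by hand, the same change can be sketched with `sed`. This assumes GNU `sed` (as shipped on Debian/Ubuntu) and a config that contains a single top-level `db = ...` line; `set_db_path` is a hypothetical helper name:

```bash
# Hypothetical helper: point the `db` setting of a strfry config file
# at a new directory.
# Usage: set_db_path <config-file> <db-directory>
set_db_path() {
  local conf="$1" dir="$2"
  # Replace the whole `db = ...` line, keeping any leading indentation.
  # `|` is the sed delimiter because the path itself contains slashes.
  sed -i -E "s|^([[:space:]]*)db[[:space:]]*=.*|\1db = \"${dir}\"|" "$conf"
}

# Equivalent one-off command for the real config (root-owned, so it needs sudo):
#   sudo sed -i -E 's|^([[:space:]]*)db[[:space:]]*=.*|\1db = "/var/lib/strfry/"|' /etc/strfry.conf
```

Afterwards, `grep -n 'db =' /etc/strfry.conf` is a quick way to confirm that the line now points at `/var/lib/strfry/`.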

Check your system's hard limit for file descriptors:

```bash

ulimit -Hn

```

Update the `nofiles` setting in your configuration to match this value (or set to 0):

```

# Add or modify this line in the config (example if your limit is 524288):

nofiles = 524288

```

The `nofiles` setting determines how many open files Strfry can have simultaneously. Setting it to your system's hard limit (or 0 to use the system default) helps prevent "too many open files" errors if your relay becomes popular.
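As a small illustration of the rule above, the sketch below turns the output of `ulimit -Hn` into the line you would put in the config. `nofiles_line` is a hypothetical helper name, and falling back to 0 when the limit is reported as `unlimited` is an assumption based on the "0 means system default" behaviour described here:

```bash
# Hypothetical helper: turn a hard file-descriptor limit into a `nofiles` line.
# A non-numeric value such as "unlimited" falls back to 0 (system default).
nofiles_line() {
  local limit="$1"
  case "$limit" in
    ''|*[!0-9]*) echo "nofiles = 0" ;;
    *)           echo "nofiles = ${limit}" ;;
  esac
}

# Print the suggested line for the current shell's hard limit
nofiles_line "$(ulimit -Hn)"
```

Note that the limit seen by your login shell may differ from the one systemd applies to the service, so treat the printed value as a starting point.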

You might also want to customize your relay's information in the config file. Look for the `info` section and update it with your relay's name, description, and other details.

Set ownership of the configuration file:

```bash

sudo chown strfry:strfry /etc/strfry.conf

```

### Create Systemd Service

Create a systemd service file for managing Strfry:

```bash

sudo nano /etc/systemd/system/strfry.service

```

Add the following content:

```ini

[Unit]

Description=strfry relay service

[Service]

User=strfry

ExecStart=/usr/local/bin/strfry relay

Restart=on-failure

RestartSec=5

ProtectHome=yes

NoNewPrivileges=yes

ProtectSystem=full

LimitCORE=1000000000

[Install]

WantedBy=multi-user.target

```

This systemd service configuration:

- Runs Strfry as the dedicated strfry user

- Automatically restarts the service if it fails

- Implements security measures like `ProtectHome` and `NoNewPrivileges`

- Sets a limit on the size of core dump files via `LimitCORE`

Enable and start the service:

```bash

sudo systemctl enable strfry.service

sudo systemctl start strfry

```

Check the service status:

```bash

sudo systemctl status strfry

```

### Verify Relay is Running

Test that your relay is running locally:

```bash

curl localhost:7777

```

You should see a message indicating that the Strfry relay is running. This confirms that Strfry is properly installed and configured before we proceed to set up TOR.

## Setting Up TOR

Now let's make your relay accessible as a TOR hidden service.

### Install TOR

Install TOR from the package repositories:

```bash

sudo apt install -y tor

```

This installs the TOR daemon that will create and manage your hidden service.

### Configure TOR

Edit the TOR configuration file:

```bash

sudo nano /etc/tor/torrc

```

Scroll down to wherever you see a commented out part like this:

```

#HiddenServiceDir /var/lib/tor/hidden_service/

#HiddenServicePort 80 127.0.0.1:80

```

Under those lines, add the following lines to set up a hidden service for your relay:

```

HiddenServiceDir /var/lib/tor/strfry-relay/

HiddenServicePort 80 127.0.0.1:7777

```

This configuration:

- Creates a hidden service directory at `/var/lib/tor/strfry-relay/`

- Maps port 80 on your .onion address to port 7777 on your local machine

- Keeps all traffic encrypted within the TOR network
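A typo in `torrc` is an easy mistake to make, so it can help to check that both lines are present and uncommented before continuing. The sketch below is one way to do that with `grep`; `check_torrc` is a hypothetical helper name, and the directory and port are the values used in this guide:

```bash
# Hypothetical helper: confirm a torrc file contains an active (uncommented)
# HiddenServiceDir entry and a port-80 mapping to the local relay port.
# Usage: check_torrc <torrc-file> <hidden-service-dir> <local-port>
check_torrc() {
  local torrc="$1" dir="$2" port="$3"
  grep -Eq "^[[:space:]]*HiddenServiceDir[[:space:]]+${dir}[[:space:]]*$" "$torrc" || {
    echo "missing: HiddenServiceDir ${dir}" >&2
    return 1
  }
  grep -Eq "^[[:space:]]*HiddenServicePort[[:space:]]+80[[:space:]]+127\.0\.0\.1:${port}[[:space:]]*$" "$torrc" || {
    echo "missing: HiddenServicePort 80 127.0.0.1:${port}" >&2
    return 1
  }
  echo "torrc hidden service entries look OK"
}

# Example with the values from this guide:
#   check_torrc /etc/tor/torrc /var/lib/tor/strfry-relay/ 7777
```

This only checks the text of the file. TOR itself can validate the whole configuration with its `--verify-config` option.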

Create the directory for your hidden service:

```bash

sudo mkdir -p /var/lib/tor/strfry-relay/

sudo chown debian-tor:debian-tor /var/lib/tor/strfry-relay/

sudo chmod 700 /var/lib/tor/strfry-relay/

```

The strict permissions (700) are crucial for security as they ensure only the debian-tor user can access the directory containing your hidden service private keys.

Restart TOR to apply changes:

```bash

sudo systemctl restart tor

```

## Making Your Relay Available on TOR

### Get Your Onion Address

After restarting TOR, you can find your onion address:

```bash

sudo cat /var/lib/tor/strfry-relay/hostname

```

This will output your relay's unique .onion address. For current (v3) onion services, this is 56 base32 characters (`a`-`z`, `2`-`7`) followed by `.onion`. This is what you'll share with others to access your relay.

### Understanding Onion Addresses

The .onion address is a special-format hostname that is automatically generated based on your hidden service's private key.

Your users will need to use this address with the WebSocket protocol prefix to connect: `ws://youronionaddress.onion`
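The sketch below shows how the hostname could be turned into the relay URL, with a basic format check first. Both function names are hypothetical. The second helper assumes TOR's default SOCKS port (9050) on the local machine and asks for the relay information document by sending the `Accept: application/nostr+json` header defined in NIP-11:

```bash
# Hypothetical helper: validate a v3 onion hostname and print the relay URL.
# v3 onion addresses are 56 base32 characters (a-z, 2-7) followed by ".onion".
relay_url() {
  local host="$1"
  if printf '%s' "$host" | grep -Eq '^[a-z2-7]{56}\.onion$'; then
    echo "ws://${host}"
  else
    echo "not a v3 onion address: ${host}" >&2
    return 1
  fi
}

# Hypothetical helper: fetch the relay information document through the
# local TOR SOCKS proxy (assumed to listen on 127.0.0.1:9050).
relay_info_via_tor() {
  curl --silent --socks5-hostname 127.0.0.1:9050 \
    -H 'Accept: application/nostr+json' "http://$1/"
}

# On the server:
#   host="$(sudo cat /var/lib/tor/strfry-relay/hostname)"
#   relay_url "$host" && relay_info_via_tor "$host"
```

If the second call returns a JSON document with the name and description from your config, the relay is reachable through the hidden service and not just on localhost.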

## Testing Your Setup

### Test with a Nostr Client

The best way to test your relay is with an actual Nostr client that supports TOR:

1. Open your TOR browser

2. Go to your favorite client, either on clearnet or an onion service.

- Check out [this list](https://github.com/0xtrr/onion-service-nostr-clients?tab=readme-ov-file#onion-service-nostr-clients) of nostr clients available over TOR.

3. Add your relay URL: `ws://youronionaddress.onion` to your relay list

4. Try posting a note and see if it appears on your relay

- In some nostr clients, you can also click on a relay to get information about it, like the relay name and description you set earlier in the strfry config. If you can see the correct values for the name and the description, you were able to connect to the relay.

- Some nostr clients also give you a status showing which relays a note was posted to. This can also indicate that your relay works as expected.

Note that not all Nostr clients support TOR connections natively. Some may require additional configuration or the use of TOR Browser. For example, most mobile apps will likely need a TOR proxy app running in the background, although some have TOR support built in.

## Maintenance and Security

### Regular Updates

Keep your system, TOR, and relay updated:

```bash

# Update system

sudo apt update

sudo apt upgrade -y

# Update Strfry

cd ~/strfry

git pull

git submodule update

make -j2

sudo cp strfry /usr/local/bin

sudo systemctl restart strfry

# Verify TOR is still running properly

sudo systemctl status tor

```

Regular updates are crucial for security, especially for TOR which may have security-critical updates.

### Database Management

Strfry has built-in database management tools. Check the Strfry documentation for specific commands related to database maintenance, such as managing event retention and performing backups.

### Monitoring Logs

To monitor your Strfry logs:

```bash

sudo journalctl -u strfry -f

```

To check TOR logs:

```bash

sudo journalctl -u tor -f

```

Monitoring logs helps you identify potential issues and understand how your relay is being used.

### Backup

This is not a best practices guide on how to do backups. Preferably, backups should be stored either offline or on a different machine than your relay server. What follows is just a simple way to make a copy on the same server.

```bash

# Stop the relay temporarily

sudo systemctl stop strfry

# Backup the database

sudo cp -r /var/lib/strfry /path/to/backup/location

# Restart the relay

sudo systemctl start strfry

```
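To avoid overwriting an earlier copy, each backup can go into its own timestamped folder. This is a minimal sketch; `backup_dir` is a hypothetical helper name and the `strfry-<timestamp>` naming scheme is an assumption, not a Strfry convention:

```bash
# Hypothetical helper: copy a data directory into a timestamped folder
# below a backup root and print the resulting path.
# Usage: backup_dir <source-dir> <backup-root>
backup_dir() {
  local src="$1" root="$2"
  local target
  target="${root}/strfry-$(date +%Y%m%d-%H%M%S)"
  mkdir -p "$root" && cp -r "$src" "$target" && echo "$target"
}

# From a root shell, with the relay stopped as shown above:
#   backup_dir /var/lib/strfry /path/to/backup/location
```

The function prints the folder it created, which makes it easy to log or to copy that folder to another machine afterwards.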

Back up your TOR hidden service private key. The private key is particularly sensitive as it defines your .onion address: losing it means losing your address permanently. If you back it up, ensure that it is stored in a safe place where no one else has access to it.

```bash

sudo cp /var/lib/tor/strfry-relay/hs_ed25519_secret_key /path/to/secure/backup/location

```

## Troubleshooting

### Relay Not Starting

If your relay doesn't start:

```bash

# Check logs

sudo journalctl -u strfry -e

# Verify configuration

cat /etc/strfry.conf

# Check permissions

ls -la /var/lib/strfry

```

Common issues include:

- Incorrect configuration format

- Permission problems with the data directory

- Port already in use (another service using port 7777)

- Issues with setting the nofiles limit (setting it too big)
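For the permission case specifically, a quick ownership check can save some guessing. The sketch below assumes GNU `stat` (as shipped on Debian/Ubuntu); `check_owner` is a hypothetical helper name:

```bash
# Hypothetical helper: report whether a path is owned by the expected user.
# Uses GNU stat's -c format option.
check_owner() {
  local path="$1" expected="$2"
  local owner
  owner="$(stat -c '%U' "$path")" || return 1
  if [ "$owner" = "$expected" ]; then
    echo "OK: ${path} is owned by ${expected}"
  else
    echo "MISMATCH: ${path} is owned by ${owner}, expected ${expected}" >&2
    return 1
  fi
}

# With the paths and user from this guide:
#   check_owner /var/lib/strfry strfry
#   check_owner /etc/strfry.conf strfry
```

A mismatch on either path can be fixed with the `chown` commands from the configuration section.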

### TOR Hidden Service Not Working

If your TOR hidden service is not accessible:

```bash

# Check TOR logs

sudo journalctl -u tor -e

# Verify TOR is running

sudo systemctl status tor

# Check onion address

sudo cat /var/lib/tor/strfry-relay/hostname

# Verify TOR configuration

sudo cat /etc/tor/torrc

```

Common TOR issues include:

- Incorrect directory permissions

- TOR service not running

- Incorrect port mapping in torrc

### Testing Connectivity

If you're having trouble connecting to your service:

```bash

# Verify Strfry is listening locally

sudo ss -tulpn | grep 7777

# Check that TOR is properly running

sudo systemctl status tor

# Test the local connection directly

curl --include --no-buffer localhost:7777

```

---

## Privacy and Security Considerations

Running a Nostr relay as a TOR hidden service provides several important privacy benefits:

1. **Network Privacy**: Traffic to your relay is encrypted and routed through the TOR network, making it difficult to determine who is connecting to your relay.

2. **Server Anonymity**: The physical location and IP address of your server are concealed, providing protection against denial-of-service attacks and other targeting.

3. **Censorship Resistance**: TOR hidden services are more resilient against censorship attempts, as they don't rely on the regular DNS system and can't be easily blocked.

4. **User Privacy**: Users connecting to your relay through TOR enjoy enhanced privacy, as their connections are also encrypted and anonymized.

However, there are some important considerations:

- TOR connections are typically slower than regular internet connections

- Not all Nostr clients support TOR connections natively

- Running a hidden service increases the importance of keeping your server secure

---

Congratulations! You now have a Strfry Nostr relay running as a TOR hidden service. This setup provides a resilient, privacy-focused, and censorship-resistant communication channel that helps strengthen the Nostr network.

For further customization and advanced configuration options, refer to the [Strfry documentation](https://github.com/hoytech/strfry).

Consider sharing your relay's .onion address with the Nostr community to help grow the privacy-focused segment of the network!

If you plan on providing a relay service that the public can use (either for free or paid for), consider adding it to [this list](https://github.com/0xtrr/onion-service-nostr-relays). Only add it if you plan to run a stable and available relay.

-

@ bc52210b:20bfc6de

2025-03-25 20:17:22

CISA, or Cross-Input Signature Aggregation, is a technique in Bitcoin that allows multiple signatures from different inputs in a transaction to be combined into a single, aggregated signature. This is a big deal because Bitcoin transactions often involve multiple inputs (e.g., spending from different wallet outputs), each requiring its own signature. Normally, these signatures take up space individually, but CISA compresses them into one, making transactions more efficient.

This magic is possible thanks to the linearity property of Schnorr signatures, a type of digital signature introduced to Bitcoin with the Taproot upgrade. Unlike the older ECDSA signatures, Schnorr signatures have mathematical properties that allow multiple signatures to be added together into a single valid signature. Think of it like combining multiple handwritten signatures into one super-signature that still proves everyone signed off!

Fun Fact: CISA was considered for inclusion in Taproot but was left out to keep the upgrade simple and manageable. Adding CISA would’ve made Taproot more complex, so the developers hit pause on it—for now.

---

**CISA vs. Key Aggregation (MuSig, FROST): Don’t Get Confused!**

Before we go deeper, let’s clear up a common mix-up: CISA is not the same as protocols like MuSig or FROST. Here’s why:

* Signature Aggregation (CISA): Combines multiple signatures into one, each potentially tied to different public keys and messages (e.g., different transaction inputs).

* Key Aggregation (MuSig, FROST): Combines multiple public keys into a single aggregated public key, then generates one signature for that key.

**Key Differences:**

1. What’s Aggregated?

* CISA: Aggregates signatures.

* Key Aggregation: Aggregates public keys.

2. What the Verifier Needs

* CISA: The verifier needs all individual public keys and their corresponding messages to check the aggregated signature.

* Key Aggregation: The verifier only needs the single aggregated public key and one message.

3. When It Happens

* CISA: Used during transaction signing, when inputs are being combined into a transaction.

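* Key Aggregation (MuSig, FROST): Keys are combined during address creation, setting up a multi-signature (multisig) address that multiple parties control; the signers then cooperate to produce one signature when spending.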

So, CISA is about shrinking signature data in a transaction, while MuSig/FROST are about simplifying multisig setups. Different tools, different jobs!

---

**Two Flavors of CISA: Half-Agg and Full-Agg**

CISA comes in two modes:

* Full Aggregation (Full-Agg): Interactive, meaning signers need to collaborate during the signing process. (We’ll skip the details here and focus on Half-Agg.)

* Half Aggregation (Half-Agg): Non-interactive, meaning signers can work independently, and someone else can combine the signatures later.

Let’s zoom in on Half-Agg.

---

**Half Signature Aggregation (Half-Agg) Explained**

**How It Works**

Half-Agg is a non-interactive way to aggregate Schnorr signatures. Here’s the process:

1. Independent Signing: Each signer creates their own Schnorr signature for their input, without needing to talk to the other signers.

2. Aggregation Step: An aggregator (could be anyone, like a wallet or node) takes all these signatures and combines them into one aggregated signature.

A Schnorr signature has two parts:

* R: A public nonce point, stored as a 32-byte x-coordinate.

* s: A scalar value (32 bytes).

In Half-Agg:

* The R values from each signature are kept separate (one per input).

* The s values from all signatures are combined into a single s value.
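In symbols, the split between the R values and the single s value can be sketched as follows. This follows the general shape of the published half-aggregation proposal for BIP 340 signatures; the challenge notation $e_i$ and the hash-derived coefficients $z_i$ are notation introduced here for illustration, not something defined in the text above.

```latex
\begin{aligned}
\text{individual check:}\quad & s_i \cdot G = R_i + e_i \cdot P_i,
    \qquad e_i = H(R_i \,\|\, P_i \,\|\, m_i) \\
\text{aggregation:}\quad & s = \sum_{i=1}^{n} z_i \, s_i \\
\text{aggregate check:}\quad & s \cdot G = \sum_{i=1}^{n} z_i \,\bigl(R_i + e_i \cdot P_i\bigr)
\end{aligned}
```

The aggregate check is just the individual checks multiplied by $z_i$ and added together, which is where the linearity of Schnorr signatures comes in. The verifier still needs every $R_i$ to recompute each $e_i$, which is why only the s half can be merged.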

**Why It Saves Space (~50%)**

Let’s break down the size savings with some math:

Before Aggregation:

* Each Schnorr signature = 64 bytes (32 for R + 32 for s).

* For n inputs: n × 64 bytes.

After Half-Agg:

* Keep n R values (32 bytes each) = 32 × n bytes.

* Combine all s values into one = 32 bytes.

* Total size: 32 × n + 32 bytes.

Comparison:

* Original: 64n bytes.

* Half-Agg: 32n + 32 bytes.

* For large n, the “+32” becomes small compared to 32n, so it’s roughly 32n, which is half of 64n. Hence, ~50% savings!
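The arithmetic above is easy to check for concrete input counts. The following is a minimal sketch; the function names are made up for illustration, and it only counts signature bytes, not the rest of the transaction.

```python
SIG_BYTES = 64   # one Schnorr signature: 32-byte R + 32-byte s
HALF_BYTES = 32  # size of a single R value or a single s value


def plain_size(n: int) -> int:
    """Signature bytes for n inputs without aggregation."""
    return SIG_BYTES * n


def half_agg_size(n: int) -> int:
    """Signature bytes for n inputs with Half-Agg: n R values plus one s."""
    return HALF_BYTES * n + HALF_BYTES


def savings(n: int) -> float:
    """Fraction of signature bytes saved by Half-Agg."""
    return 1 - half_agg_size(n) / plain_size(n)


for n in (1, 2, 10, 100):
    print(n, plain_size(n), half_agg_size(n), f"{savings(n):.1%}")
```

For n = 10 this gives 640 bytes versus 352 bytes, a 45% saving, and the figure approaches 50% as n grows. With a single input there is nothing to save.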

**Real-World Impact:**

Based on recent Bitcoin usage, Half-Agg could save:

* ~19.3% in space (reducing transaction size).

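* ~6.9% in fees. The fee saving is smaller than the space saving because fees are charged on transaction weight, and signatures sit in the witness, which is discounted relative to the rest of the transaction. This assumes no major changes in how people use Bitcoin post-CISA.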

---

**Applications of Half-Agg**

Half-Agg isn’t just a cool idea—it has practical uses:

1. Transaction-wide Aggregation

* Combine all signatures within a single transaction.

* Result: Smaller transactions, lower fees.

2. Block-wide Aggregation

* Combine signatures across all transactions in a Bitcoin block.

* Result: Even bigger space savings at the blockchain level.

3. Off-chain Protocols / P2P

* Use Half-Agg in systems like Lightning Network gossip messages.

* Benefit: Efficiency without needing miners or a Bitcoin soft fork.

---

**Challenges with Half-Agg**

While Half-Agg sounds awesome, it’s not without hurdles, especially at the block level:

1. Breaking Adaptor Signatures

* Adaptor signatures are special signatures used in protocols like Discreet Log Contracts (DLCs) or atomic swaps. They tie a signature to revealing a secret, ensuring fair exchanges.

* Aggregating signatures across a block might mess up these protocols, as the individual signatures get blended together, potentially losing the properties adaptor signatures rely on.

2. Impact on Reorg Recovery

* In Bitcoin, a reorganization (reorg) happens when the blockchain switches to a different chain of blocks. Transactions from the old chain need to be rebroadcast or reprocessed.

* If signatures are aggregated at the block level, it could complicate extracting individual transactions and their signatures during a reorg, slowing down recovery.

These challenges mean Half-Agg needs careful design, especially for block-wide use.

---

**Wrapping Up**

CISA is a clever way to make Bitcoin transactions more efficient by aggregating multiple Schnorr signatures into one, thanks to their linearity property. Half-Agg, the non-interactive mode, lets signers work independently, cutting signature size by about 50% (to 32n + 32 bytes from 64n bytes). It could save ~19.3% in space and ~6.9% in fees, with uses ranging from single transactions to entire blocks or off-chain systems like Lightning.

But watch out—block-wide Half-Agg could trip up adaptor signatures and reorg recovery, so it’s not a slam dunk yet. Still, it’s a promising tool for a leaner, cheaper Bitcoin future!

-

@ b17fccdf:b7211155

2025-03-25 11:23:36

If you live in Spain, you may have noticed that you cannot access certain websites on weekends or on some weekdays, among them the [MiniBolt guide](https://minbolt.info/).

There is a **reason** for this, of course a **solution**, and also a **conclusion**. Without going into too much detail:

## The reason

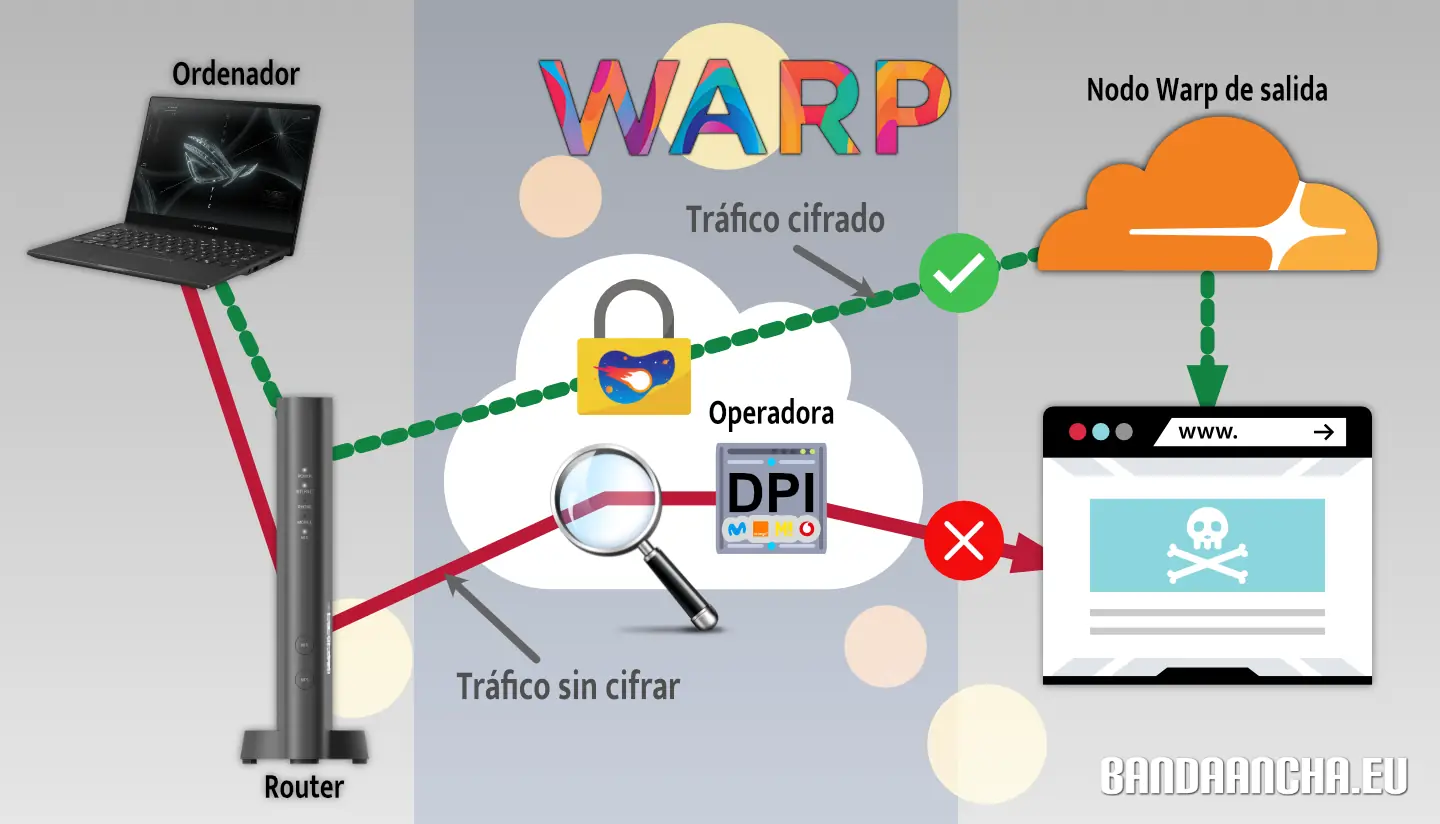

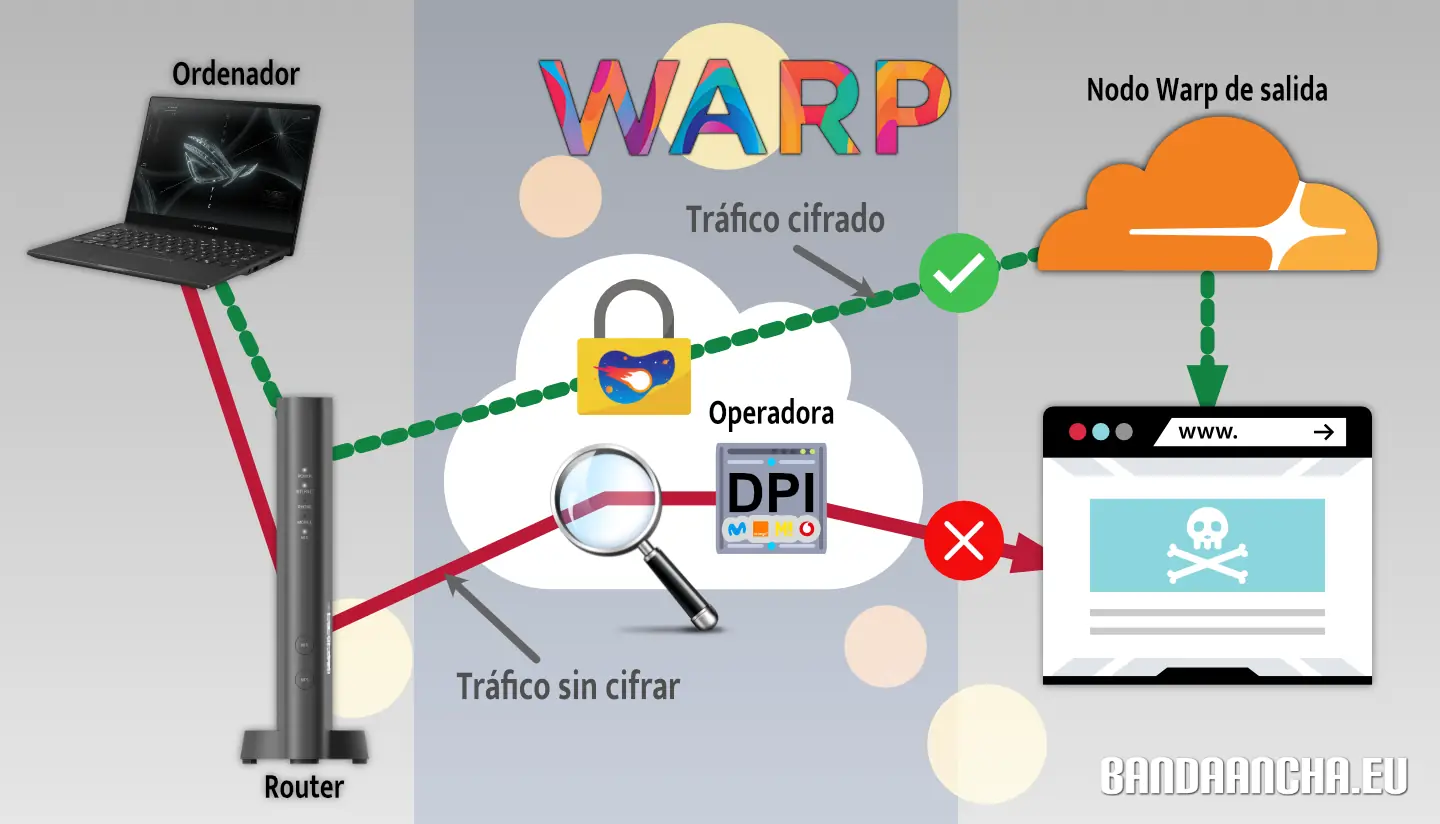

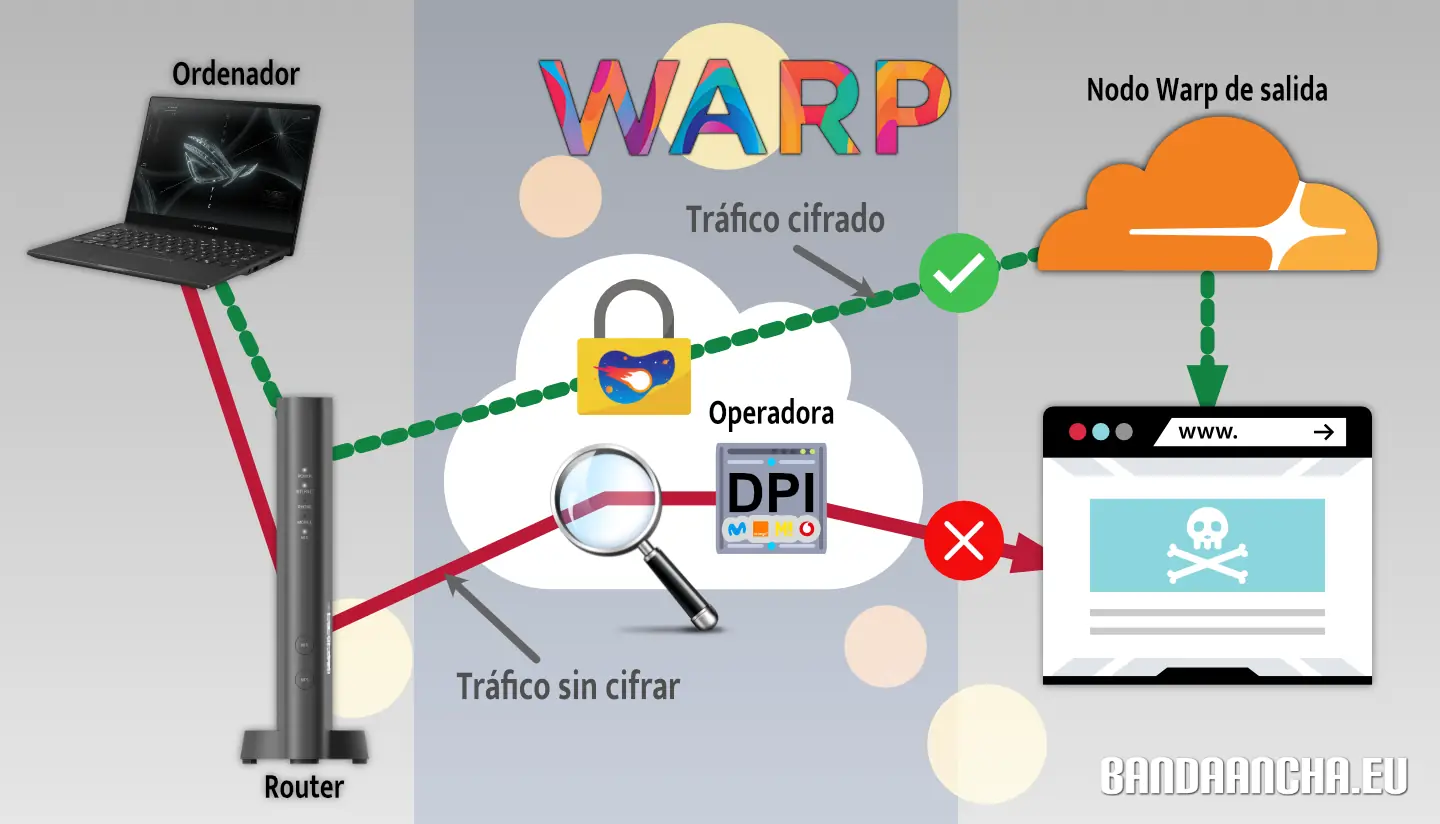

The **Cloudflare block**, enforced for almost two months by Internet service providers (ISPs) in Spain (such as Movistar, O2, DIGI, and Pepephone, among others), is based on a [court order](https://www.poderjudicial.es/search/AN/openDocument/3c85bed480cbb1daa0a8778d75e36f0d/20221004) issued after a lawsuit by LALIGA (football). The measure aims to combat piracy in Spain, a problem that directly affects that organization.

Although the original intent was to restrict access to specific domains distributing such content, Cloudflare uses the [ECH](https://developers.cloudflare.com/ssl/edge-certificates/ech) (Encrypted Client Hello) protocol, which hides the domain name that was previously transmitted in plain text while establishing a TLS connection. This makes it difficult for operators to inspect traffic in order to apply **domain-based blocks**, forcing them to fall back on **broader blocks by IP or IP range** to comply with the court order.

This practice has **serious consequences** that have been completely ignored by those carrying it out. It is well known that a single IP infrastructure can host many domains, both legitimate and illegitimate. The lack of "fine tuning" in the blocks causes **harm to third parties**, **restricting access to many legitimate domains** that have nothing to do with illicit activity but share the same Cloudflare IPs with questionable domains. This is the case for the [MiniBolt website](https://minibolt.minibolt.info) and its domain `minibolt.info`, which **use Cloudflare as a proxy** to take advantage of the additional **security, privacy, optimization, and services** that the platform offers for free.

Although this block appears to be temporary (at least for the 24/25 football season, until the end of May), it may be reactivated when the new season starts.

## The solution

Obviously, **MiniBolt will not stop using Cloudflare** as a proxy for this reason. Below are some measures that you, as a user, can take to **get around this restriction** and regain access:

**~>** Use **a VPN**:

There are several VPN providers to choose from, listed in order of their privacy reputation:

- [IVPN](https://www.ivpn.net/es/)

- [Mullvad VPN](https://mullvad.net/es/vpn)

- [Proton VPN](https://protonvpn.com/es-es) (**free**)

- [Obscura VPN](https://obscura.net/) (**macOS only**)

- [Cloudflare WARP](https://developers.cloudflare.com/cloudflare-one/connections/connect-devices/warp/download-warp/) (**free**), which also lets you use local proxy mode to route only your browsing. To do so, use the "WARP via local proxy" option by following these steps:

1. Start Cloudflare WARP and, in its small interface, click the gear icon at the bottom right > "Preferences" > "Advanced" > "Configure proxy mode"

2. Tick the "Enable proxy mode on this device" checkbox

3. Choose a "Proxy listening port" between 0-65535, e.g. 1080, click "OK", and close the preferences window

4. Open Cloudflare WARP again and toggle the switch to enable the service.

5. Now point your browser's proxy at Cloudflare WARP. The browser configuration is similar to [this one](https://minibolt.minibolt.info/system/system/privacy#example-from-firefox) for Firefox-based browsers. Once done, you should be able to access the [MiniBolt guide](https://minibolt.minibolt.info/) without problems. If you have questions, leave them in the comments and I will try to answer them. More info [HERE](https://bandaancha.eu/articulos/como-saltarse-bloqueo-webs-warp-vpn-9958).

**~>** [**Proxy your browser through the Tor network**](https://minibolt.minibolt.info/system/system/privacy#ssh-remote-access-through-tor), or use the [**official Tor Browser**](https://www.torproject.org/es/download/) (recommended).

## The conclusion

These events call into question the fundamental principles of net neutrality, essential pillars of the [Declaration of the Independence of Cyberspace](https://es.wikisource.org/wiki/Declaraci%C3%B3n_de_independencia_del_ciberespacio), which defends a free internet without restrictions or censorship. Those principles have been violated in an unprecedented way in this country, confirming that the dystopian future many denied is already a reality.

It is time to act and be prepared: we must **push the development and spread** of the **anti-censorship tools** within our reach, thereby protecting **digital freedom** and ensuring equitable access to information for everyone.

This commitment is one of the **fundamental pillars of MiniBolt,** which turns this challenge into an opportunity to put the **anti-censorship solutions** [already available](https://minibolt.minibolt.info/bonus-guides/system/tor-services) to the test, as well as **those on the way**.

Censor me if you can, legislator! The fight for privacy and freedom on the Internet is already under way!

---

Sources:

* https://bandaancha.eu/articulos/movistar-o2-deja-clientes-sin-acceso-11239

* https://bandaancha.eu/articulos/esta-nueva-sentencia-autoriza-bloqueos-11257

* https://bandaancha.eu/articulos/como-saltarse-bloqueo-webs-warp-vpn-9958

* https://bandaancha.eu/articulos/como-activar-ech-chrome-acceder-webs-10689

* https://comunidad.movistar.es/t5/Soporte-Fibra-y-ADSL/Problema-con-web-que-usan-Cloudflare/td-p/5218007

-

@ 3b7fc823:e194354f

2025-03-23 03:54:16

A quick guide for the less technically savvy to set up their very own free, private, Tor-enabled email using OnionMail. Privacy is for everyone, not just the super cyber nerds.

OnionMail is an anonymous POP3/SMTP email server program hosted by various people on the internet. You can visit this site and read the details: https://en.onionmail.info/

1. Download Tor Browser

First, if you don't already have it, go download Tor Browser. You are going to need it. https://www.torproject.org/

2. Sign Up

Using Tor Browser, go to the directory page (https://onionmail.info/directory.html), choose one of the servers, and sign up for an account. I say sign up, but it is just choosing the user name you want to go before the @xyz.onion email address and solving a captcha.

3. Account information

Once you are done signing up an Account information page will pop up. **MAKE SURE YOU SAVE THIS!!!** It has your address and passwords (for sending and receiving email) that you will need. If you lose them then you are shit out of luck.

4. Install an Email Client

You can use Claws Mail, Neomutt, or whatever, but for this example, we will be using Thunderbird.

a. Download Thunderbird email client

b. The easy setup popup page that wants your name, email, and password isn't going to like your user@xyz.onion address. Just enter something that looks like a regular email address, such as name@example.com, and the **Configure Manually** option will appear below. Click that.

5. Configure Incoming (POP3) Server

Under Incoming Server:

Protocol: POP3

Server or Hostname: xyz.onion (whatever your account info says)

Port: 110

Security: STARTTLS

Authentication: Normal password

Username: (your username)

Password: (POP3 password).

6. Configure Outgoing (SMTP) Server

Under Outgoing Server:

Server or Hostname: xyz.onion (whatever your account info says)

Port: 25

Security: STARTTLS

Authentication: Normal password

Username: (your username)

Password: (SMTP password).

7. Click on the email address at the top and change it to your real address if you had to use a placeholder one to get the **Configure Manually** option to appear.

8. Configure Proxy

a. Click the **gear icon** on the bottom left for settings. Scroll all the way down to **Network & Disk Space**. Click the **settings button** next to **Connection. Configure how Thunderbird connects to the internet**.

b. Select **Manual Proxy Configuration**. For **SOCKS Host** enter **127.0.0.1** and enter port **9050**. (if you are running this through a VM the port may be different)

c. Now check the box for **SOCKS5** and then **Proxy DNS when using SOCKS5** down at the bottom. Click OK
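Before pointing Thunderbird at the proxy, it can help to confirm that something is actually listening on the SOCKS port. The following is a minimal sketch using only the Python standard library; the function name `socks_port_open` is made up for illustration, and it only checks that the port accepts TCP connections, not that the listener is really Tor.

```python
import socket


def socks_port_open(host: str = "127.0.0.1", port: int = 9050, timeout: float = 2.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable.
        return False


if __name__ == "__main__":
    # 9050 is the port used in this guide; 9150 is an assumption worth trying
    # if you rely on Tor Browser running in the background.
    for port in (9050, 9150):
        state = "open" if socks_port_open(port=port) else "closed"
        print(f"127.0.0.1:{port} is {state}")
```

If 9050 is closed but 9150 is open, you are most likely going through Tor Browser's bundled Tor, and the port in the Thunderbird proxy settings should be changed to match.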

9. Check Email

For Thunderbird to reach the OnionMail server, it has to be connected to Tor. Depending on your local setup, it might be fine as is, or you might have to have Tor Browser open in the background. Click on **inbox** and then the **little cloud icon** with the down arrow to check mail.

10. Security Exception

Thunderbird is not going to like that the OnionMail server's security certificate is self-signed. A popup **Add Security Exception** will appear. Click **Confirm Security Exception**.

You are done. Enjoy your new private email service.

**REMEMBER: The server can read your emails unless they are encrypted. Go into account settings. Look down and click End-to-End Encryption. Then add your OpenPGP key or open your OpenPGP Key Manager (you might have to download one if you don't already have one) and generate a new key for this account.**

-

@ 39cc53c9:27168656

2025-03-30 05:54:47

> [Read the original blog post](https://blog.kycnot.me/p/diy-seed-backup)

I've been thinking about how to improve my seed backup in a cheap and cool way, mostly for fun. Until now, I had the seed written on a piece of paper in a desk drawer, and I wanted something more durable and fire-proof.

[Show me the final result!](#the-final-result)

After searching online, I found two options I liked the most: the [Cryptosteel](https://cryptosteel.com/) Capsule and the [Trezor Keep](https://trezor.io/trezor-keep-metal). These products are nice but quite expensive, and I didn't want to spend that much on my seed backup. **Privacy** is also important, and sharing details like a shipping address makes me uncomfortable. This concern has grown since the Ledger incident[^1]. A $5 wrench attack[^2] seems too cheap, even if you only hold a few sats.

Upon seeing the design of Cryptosteel, I considered creating something similar at home. Although it may not be as cool as their device, it could offer almost the same in terms of robustness and durability.

## Step 1: Get the materials and tools

When choosing the materials, you will want to go with **stainless steel**. It is durable, resistant to fire, water, and corrosion, very robust, and does not rust. Also, its price point is just right; it's not the cheapest, but it's cheap for the value you get.

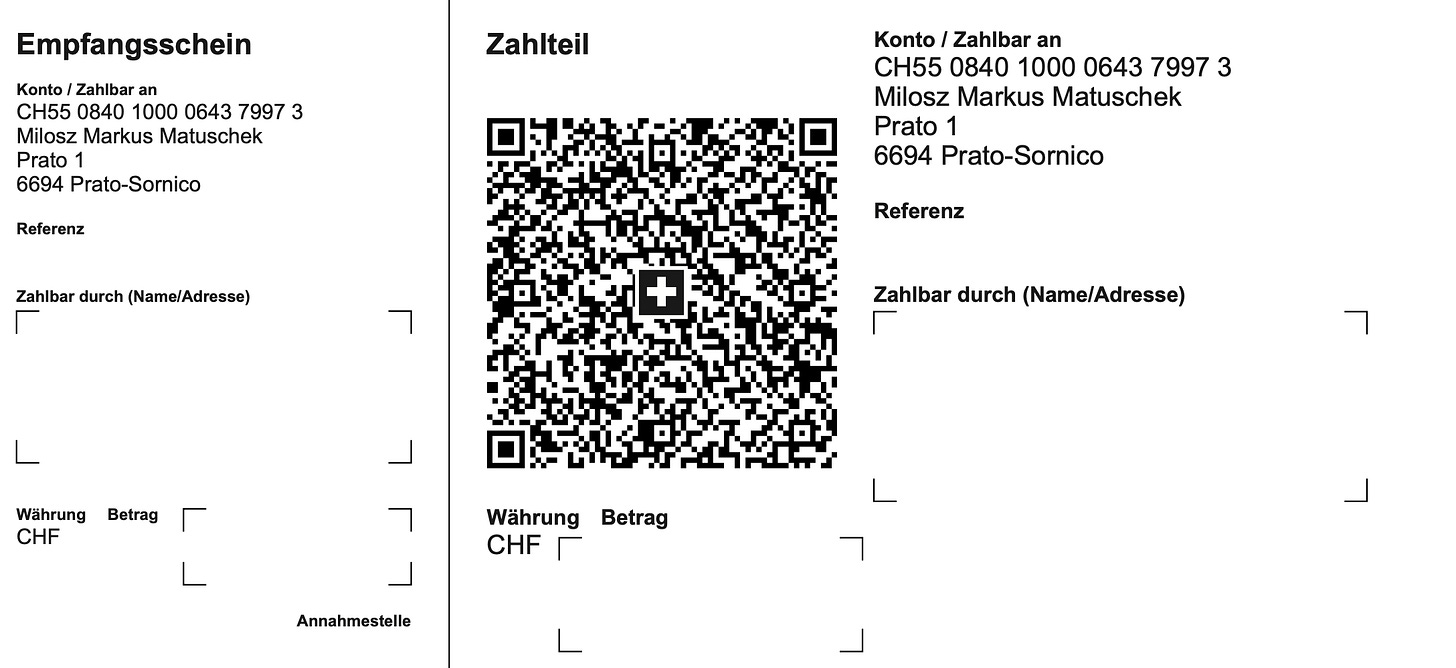

I went to a material store and bought:

- Two bolts

- Two hex nuts and head nuts for the bolts

- A bag of 30 washers

All items were made of stainless steel. The total price was around **€6**. This is enough for making two seed backups.

You will also need:

- A set of metal letter stamps (I bought a 2mm-size letter kit since my washers were small, 6mm in diameter)

- You can find these in local stores or online marketplaces. The set I bought cost me €13.

- A good hammer

- A solid surface to stamp on

Total spent: **€19** for two backups

## Step 2: Stamp and store

Once you have all the materials, you can start stamping your words. There are many videos on the internet that use fancy 3D-printed tools to get the letters nicely aligned, but I went with the free-hand option. The results were pretty decent.

I only stamped the first 4 letters of each word, since the first four letters uniquely identify every word in the BIP-39 wordlist. Because my stamping kit did not include numbers, I used alphabet letters to define the order. This way, if all the washers were to fall off, I could still reassemble the seed correctly.
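The four-letter shortcut can be checked mechanically. The following is a minimal sketch; the helper name `prefix_index` is made up for illustration, and the sample list holds only a handful of entries from the English BIP-39 wordlist rather than all 2048. Only ever run something like this on the public wordlist, never on your own seed words.

```python
def prefix_index(words: list[str], length: int = 4) -> dict[str, str]:
    """Map each word's first `length` letters to the full word.

    Raises ValueError if two words share the same prefix, which would make
    the abbreviated stamping ambiguous.
    """
    index: dict[str, str] = {}
    for word in words:
        key = word[:length]
        if key in index:
            raise ValueError(f"prefix {key!r} is shared by {index[key]!r} and {word!r}")
        index[key] = word
    return index


# Placeholder sample: a few entries from the English BIP-39 wordlist.
sample = ["abandon", "ability", "able", "about", "zone", "zoo"]
index = prefix_index(sample)
print(index["aban"])  # abandon
print(index["zoo"])   # zoo (words shorter than four letters are stamped in full)
```

To check the whole list, load the official English wordlist file into `words` instead of the sample; the function returns without raising only if every prefix is unique.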

## The final result

So this is the final result. I added two smaller washers as protection and also put the top washer reversed so the letters are not visible:

Compared to the Cryptosteel or the Trezor Keep, its size is much more compact. This makes for an easier-to-hide backup, in case you ever need to hide it inside your human body.

## Some ideas

### Tamper-evident seal

To enhance the security of this backup, you can consider using a **tamper-evident seal**. This can be easily achieved by printing a **unique** image or using a specific day's newspaper page (just note somewhere what day it was).

Apply a thin layer of glue to the washer's surface and place the seal over it. If someone attempts to access the seed, they will be forced to destroy the seal, which will serve as an evident sign of tampering.

This simple measure will provide an additional layer of protection and allow you to quickly identify any unauthorized access attempts.

Note that this method is not resistant to outright theft. The tamper-evident seal won't stop a determined thief but it will prevent them from accessing your seed without leaving any trace.

### Redundancy

Make sure to add redundancy. Make several copies of this cheap backup, and store them in separate locations.

### Unique wordset

Another layer of security could be to implement your own custom mnemonic dictionary. However, this approach has the risk of permanently losing access to your funds if not implemented correctly.

If done properly, you could potentially end up with a highly secure backup, as no one else would be able to derive the seed phrase from it. To create your custom dictionary, assign a unique number from 1 to 2048 to a word of your choice. Maybe you could use a book, and index the first 2048 unique words that appear. Make sure to store this book and even get a couple of copies of it (digitally and physically).

This self-curated set of words will serve as your personal BIP-39 dictionary. When you need to translate between your custom dictionary and the official [BIP-39 wordlist](https://github.com/bitcoin/bips/blob/master/bip-0039/english.txt), simply use the index number to find the corresponding word in either list.

> Never write the index or words on your computer (Do not use `Ctrl+F`)

[^1]: https://web.archive.org/web/20240326084135/https://www.ledger.com/message-ledgers-ceo-data-leak

[^2]: https://xkcd.com/538/

-

@ 6b3780ef:221416c8

2025-03-26 18:42:00

This workshop will guide you through exploring the concepts behind MCP servers and how to deploy them as DVMs in Nostr using DVMCP. By the end, you'll understand how these systems work together and be able to create your own deployments.

## Understanding MCP Systems

MCP (Model Context Protocol) systems consist of two main components that work together:

1. **MCP Server**: The heart of the system that exposes tools, which you can access via the `.listTools()` method.

2. **MCP Client**: The interface that connects to the MCP server and lets you use the tools it offers.

These servers and clients can communicate using different transport methods:

- **Standard I/O (stdio)**: A simple local connection method when your server and client are on the same machine.

- **Server-Sent Events (SSE)**: Uses HTTP to create a communication channel.

For this workshop, we'll use stdio to deploy our server. DVMCP will act as a bridge, connecting to your MCP server as an MCP client, and exposing its tools as a DVM that anyone can call from Nostr.

## Creating (or Finding) an MCP Server

Building an MCP server is simpler than you might think:

1. Create software in any programming language you're comfortable with.

2. Add an MCP library to expose your server's MCP interface.

3. Create an API that wraps around your software's functionality.

Once your server is ready, an MCP client can connect, for example, with `bun index.js`, and then call `.listTools()` to discover what your server can do. This pattern, known as reflection, makes Nostr DVMs and MCP a perfect match since both use JSON, and DVMs can announce and call tools, effectively becoming an MCP proxy.
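Under the hood, `.listTools()` is a JSON-RPC 2.0 exchange using the `tools/list` method. The following is a minimal sketch of what travels over the wire, using only the Python standard library; the helper names and the `echo` tool in the example response are hypothetical, not taken from any real server.

```python
import json


def tools_list_request(request_id: int = 1) -> str:
    """Build the JSON-RPC 2.0 message an MCP client sends to list a server's tools."""
    return json.dumps({"jsonrpc": "2.0", "id": request_id, "method": "tools/list"})


def tool_names(response_line: str) -> list[str]:
    """Extract the tool names from a tools/list response."""
    response = json.loads(response_line)
    return [tool["name"] for tool in response["result"]["tools"]]


# Hypothetical response from a made-up server exposing a single "echo" tool.
example_response = json.dumps({
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "tools": [{
            "name": "echo",
            "description": "Echo the input back",
            "inputSchema": {"type": "object", "properties": {"text": {"type": "string"}}},
        }],
    },
})

print(tools_list_request())
print(tool_names(example_response))  # ['echo']
```

A real client first completes the `initialize` handshake before sending `tools/list`; an MCP SDK handles that for you, so this sketch is only meant to show why the JSON-based tool listing maps so directly onto DVM announcements.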

Alternatively, you can use one of the many existing MCP servers available in various repositories.

For more information about MCP and how to build MCP servers, you can visit https://modelcontextprotocol.io/

## Setting Up the Workshop

Let's get hands-on:

First, to follow this workshop you will need Bun. Install it from https://bun.sh/. For Linux and macOS, you can use the installation script:

```

curl -fsSL https://bun.sh/install | bash

```

1. **Choose your MCP server**: You can either create one or use an existing one.

2. **Inspect your server** using the MCP inspector tool:

```bash

npx @modelcontextprotocol/inspector build/index.js arg1 arg2

```

This will:

- Launch a client UI (default: http://localhost:5173)

- Start an MCP proxy server (default: port 3000)

- Pass any additional arguments directly to your server

3. **Use the inspector**: Open the client UI in your browser to connect with your server, list available tools, and test its functionality.

## Deploying with DVMCP

Now for the exciting part – making your MCP server available to everyone on Nostr:

1. Navigate to your MCP server directory.

2. Run without installing (quickest way):

```

npx @dvmcp/bridge

```

3. Or install globally for regular use:

```

npm install -g @dvmcp/bridge

# or

bun install -g @dvmcp/bridge

```

Then run using:

```bash

dvmcp-bridge

```

This will guide you through creating the necessary configuration.

Watch the console logs to confirm successful setup – you'll see your public key and process information, or any issues that need addressing.

For the configuration, you can set the relay to `wss://relay.dvmcp.fun`, or use any other relay you prefer.

## Testing and Integration

1. **Visit [dvmcp.fun](https://dvmcp.fun)** to see your DVM announcement.

2. Call your tools and watch the responses come back.

For production use, consider running dvmcp-bridge as a system service or creating a container for greater reliability and uptime.

## Integrating with LLM Clients

You can also integrate your DVMCP deployment with LLM clients using the discovery package:

1. Install and use the `@dvmcp/discovery` package:

```bash

npx @dvmcp/discovery

```

2. This package acts as an MCP server for your LLM system by:

- Connecting to configured Nostr relays

- Discovering tools from DVMCP servers

- Making them available to your LLM applications

3. Connect to specific servers or providers using these flags:

```bash

# Connect to all DVMCP servers from a provider

npx @dvmcp/discovery --provider npub1...

# Connect to a specific DVMCP server

npx @dvmcp/discovery --server naddr1...

```

Using these flags, you wouldn't need a configuration file. You can find these commands and Claude desktop configuration already prepared for copy and paste at [dvmcp.fun](https://dvmcp.fun).

This feature lets you connect to any DVMCP server using Nostr and integrate it into your client, either as a DVM or in LLM-powered applications.

## Final thoughts

If you've followed this workshop, you now have an MCP server deployed as a Nostr DVM. This means that local resources from the system where the MCP server is running can be accessed through Nostr in a decentralized manner. This capability is powerful and opens up numerous possibilities and opportunities for fun.

You can use this setup for various use cases, including in a controlled/local environment. For instance, you can deploy a relay in your local network that's only accessible within it, exposing all your local MCP servers to anyone connected to the network. This setup can act as a hub for communication between different systems, which could be particularly interesting for applications in home automation or other fields. The potential applications are limitless.

However, it's important to keep in mind that there are security concerns when exposing local resources publicly. You should be mindful of these risks and prioritize security when creating and deploying your MCP servers on Nostr.

Finally, these are new ideas, and the software is still under development. If you have any feedback, please refer to the GitHub repository to report issues or collaborate. DVMCP also has a Signal group you can join. Additionally, you can engage with the community on Nostr using the #dvmcp hashtag.

## Useful Resources

- **Official Documentation**:

- Model Context Protocol: [modelcontextprotocol.org](https://modelcontextprotocol.org)

- DVMCP.fun: [dvmcp.fun](https://dvmcp.fun)

- **Source Code and Development**:

- DVMCP: [github.com/gzuuus/dvmcp](https://github.com/gzuuus/dvmcp)

- DVMCP.fun: [github.com/gzuuus/dvmcpfun](https://github.com/gzuuus/dvmcpfun)

- **MCP Servers and Clients**:

- Smithery AI: [smithery.ai](https://smithery.ai)

- MCP.so: [mcp.so](https://mcp.so)

- Glama AI MCP Servers: [glama.ai/mcp/servers](https://glama.ai/mcp/servers)

- [Signal group](https://signal.group/#CjQKIOgvfFJf8ZFZ1SsMx7teFqNF73sZ9Elaj_v5i6RSjDHmEhA5v69L4_l2dhQfwAm2SFGD)

Happy building!

-

@ a012dc82:6458a70d

2025-03-19 06:28:40

In recent years, the global economy has faced unprecedented challenges, with inflation rates soaring to levels not seen in decades. This economic turmoil has led investors and consumers alike to seek alternative stores of value and investment strategies. Among the various options, Bitcoin has emerged as a particularly appealing choice. This article explores the reasons behind Bitcoin's growing appeal in an inflation-stricken economy, delving into its characteristics, historical performance, and the broader implications for the financial landscape.

**Table of Contents**

- Understanding Inflation and Its Impacts

- Bitcoin: A New Safe Haven?

- Decentralization and Limited Supply

- Portability and Liquidity

- Bitcoin's Performance in Inflationary Times

- Challenges and Considerations

- The Future of Bitcoin in an Inflationary Economy

- Conclusion

- FAQs

**Understanding Inflation and Its Impacts**

Inflation is the rate at which the general level of prices for goods and services is rising, eroding purchasing power. It can be caused by various factors, including increased production costs, higher energy prices, and expansive government policies. Inflation affects everyone in the economy, from consumers and businesses to investors and retirees, as it diminishes the value of money. When inflation rates rise, the purchasing power of currency falls, leading to higher costs for everyday goods and services. This can result in decreased consumer spending, reduced savings, and overall economic slowdown.

For investors, inflation is a significant concern because it can erode the real returns on their investments. Traditional investments like bonds and savings accounts may not keep pace with inflation, leading to a loss in purchasing power over time. This has prompted a search for alternative investments that can provide a hedge against inflation and preserve, if not increase, the value of their capital.

**Bitcoin: A New Safe Haven?**

Traditionally, assets like gold, real estate, and Treasury Inflation-Protected Securities (TIPS) have been considered safe havens during times of inflation. However, the digital age has introduced a new player: Bitcoin. Bitcoin is a decentralized digital currency that operates without the oversight of a central authority. Its supply is capped at 21 million coins, a feature that many believe gives it anti-inflationary properties. This inherent scarcity is akin to natural resources like gold, which have historically been used as hedges against inflation.

The decentralization of Bitcoin means that it is not subject to the whims of central banking policies or government interference, which are often seen as contributing factors to inflation. This aspect of Bitcoin is particularly appealing to those who have lost faith in traditional financial systems and are looking for alternatives that offer more autonomy and security.

**Decentralization and Limited Supply**

One of the key features that make Bitcoin appealing as a hedge against inflation is its decentralized nature. Unlike fiat currencies, which central banks can print in unlimited quantities, Bitcoin's supply is finite. This scarcity mimics the properties of gold and is seen as a buffer against inflation. The decentralized nature of Bitcoin also means that it is not subject to the same regulatory pressures and monetary policies that can lead to currency devaluation.

Furthermore, the process of "mining" Bitcoin, which involves validating transactions and adding them to the blockchain, follows a fixed issuance schedule: the reward of new coins per block is cut in half every 210,000 blocks (roughly every four years), while the mining difficulty adjusts to keep blocks arriving about every ten minutes. This not only ensures the security of the network but also means the rate at which new coins are created slows down over time, which is what caps the supply at 21 million coins.
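The slowing issuance comes from the block subsidy halving every 210,000 blocks, and the 21 million cap falls out of that schedule. The following is a minimal sketch of the arithmetic; the function names are made up for illustration, and amounts are in satoshis (1 BTC = 100,000,000 satoshis).

```python
HALVING_INTERVAL = 210_000          # blocks between subsidy halvings
INITIAL_SUBSIDY = 50 * 100_000_000  # 50 BTC, expressed in satoshis


def block_subsidy(height: int) -> int:
    """New coins (in satoshis) created by the block at the given height."""
    halvings = height // HALVING_INTERVAL
    return INITIAL_SUBSIDY >> halvings  # integer halving, rounds down


def total_supply() -> int:
    """Sum the subsidy over every halving era until it rounds down to zero."""
    total = 0
    era = 0
    while (subsidy := INITIAL_SUBSIDY >> era) > 0:
        total += subsidy * HALVING_INTERVAL
        era += 1
    return total


print(block_subsidy(0))        # 5000000000 (50 BTC)
print(block_subsidy(840_000))  # 312500000 (3.125 BTC)
print(total_supply() / 100_000_000)
```

Because each halving rounds down to a whole number of satoshis, the total comes out slightly under 21 million BTC rather than exactly on it.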

**Portability and Liquidity**

Bitcoin's digital nature makes it highly portable and divisible, allowing for easy transfer and exchange worldwide. This liquidity and global accessibility make it an attractive option for investors looking to diversify their portfolios beyond traditional assets. Unlike physical assets like gold or real estate, Bitcoin can be transferred across borders without the need for intermediaries, making it a truly global asset.

The ease of transferring and dividing Bitcoin means that it can be used for a wide range of transactions, from large-scale investments to small, everyday purchases. This versatility, combined with its growing acceptance as a form of payment, enhances its utility and appeal as an investment.

**Bitcoin's Performance in Inflationary Times**

Historically, Bitcoin has shown significant growth during periods of high inflation. While it is known for its price volatility, many investors have turned to Bitcoin as a speculative hedge against depreciating fiat currencies. The digital currency's performance during inflationary periods has bolstered its reputation as a potential safe haven. However, it's important to note that Bitcoin's market is still relatively young and can be influenced by a wide range of factors beyond inflation, such as market sentiment, technological developments, and regulatory changes.

Despite its volatility, Bitcoin has provided substantial returns for some investors, particularly those who entered the market early. Its performance, especially during times of financial instability, has led to increased interest and investment from both individual and institutional investors. As more people look to Bitcoin as a potential hedge against inflation, its role in investment portfolios is likely to evolve.

**Challenges and Considerations**

Despite its growing appeal, Bitcoin is not without its challenges. The cryptocurrency's price volatility can lead to significant losses, and regulatory uncertainties remain a concern. Additionally, the environmental impact of Bitcoin mining has sparked debate. The energy-intensive process required to mine new coins and validate transactions has raised concerns about its sustainability and environmental footprint.

Investors considering Bitcoin as a hedge against inflation should weigh these factors and consider their risk tolerance and investment horizon. While Bitcoin offers potential benefits as an inflation hedge, it also comes with risks that are different from traditional investments. Understanding these risks, and how they align with individual investment strategies, is crucial for anyone considering adding Bitcoin to their portfolio.

**The Future of Bitcoin in an Inflationary Economy**

As the global economy continues to navigate through turbulent waters, the appeal of Bitcoin is likely to grow. Its properties as a decentralized, finite, and easily transferable asset make it a unique option for those looking to protect their wealth from inflation. However, the future of Bitcoin remains uncertain, and its role in the broader financial landscape is still being defined. As with any investment, due diligence and a balanced approach are crucial.

The increasing institutional interest in Bitcoin and the development of financial products around it, such as ETFs and futures, suggest that Bitcoin is becoming more mainstream. However, its acceptance and integration into the global financial system will depend on a variety of factors, including regulatory developments, technological advancements, and market dynamics.

**Conclusion**

The growing appeal of Bitcoin in an inflation-stricken economy highlights the changing dynamics of investment in the digital age. While it offers a novel approach to wealth preservation, it also comes with its own set of risks and challenges. As the world continues to grapple with inflation, the role of Bitcoin and other cryptocurrencies will undoubtedly be a topic of keen interest and debate among investors and policymakers alike. Whether Bitcoin will become a permanent fixture in investment portfolios as a hedge against inflation remains to be seen, but its impact on the financial landscape is undeniable.

**FAQs**

**What is inflation, and how does it affect the economy?**

Inflation is the rate at which the general level of prices for goods and services is rising, leading to a decrease in purchasing power. It affects the economy by reducing the value of money, increasing costs for consumers and businesses, and potentially leading to economic slowdown.

**Why is Bitcoin considered a hedge against inflation?**

Bitcoin is considered a hedge against inflation due to its decentralized nature, limited supply capped at 21 million coins, and its independence from government monetary policies, which are often seen as contributing factors to inflation.

**What are the risks associated with investing in Bitcoin?**

The risks include high price volatility, regulatory uncertainties, and concerns over the environmental impact of Bitcoin mining. Investors should consider their risk tolerance and investment horizon before investing in Bitcoin.

**How does Bitcoin's limited supply contribute to its value?**

Bitcoin's limited supply mimics the scarcity of resources like gold, which has traditionally been used as a hedge against inflation. This scarcity can help to maintain its value over time, especially in contrast to fiat currencies, which can be printed in unlimited quantities.

**Can Bitcoin be used for everyday transactions?**

Yes, Bitcoin can be used for a wide range of transactions, from large-scale investments to small, everyday purchases. Its digital nature allows for easy transfer and division, making it a versatile form of currency.

**That's all for today**

**If you want more, be sure to follow us on:**

**NOSTR: croxroad@getalby.com**

**X: @croxroadnewsco**

**Instagram: @croxroadnews.co/**

**Youtube: @thebitcoinlibertarian**

**Store: https://croxroad.store**

**Subscribe to CROX ROAD Bitcoin Only Daily Newsletter**

**https://www.croxroad.co/subscribe**

**Get Orange Pill App And Connect With Bitcoiners In Your Area. Stack Friends Who Stack Sats**

**link: https://signup.theorangepillapp.com/opa/croxroad**

**Buy Bitcoin Books At Konsensus Network Store. 10% Discount With Code “21croxroad”**

**link: https://bitcoinbook.shop?ref=21croxroad**

*DISCLAIMER: None of this is financial advice. This newsletter is strictly educational and is not investment advice or a solicitation to buy or sell any assets or to make any financial decisions. Please be careful and do your own research.*

-

@ fd06f542:8d6d54cd

2025-03-30 02:16:24

> __Warning__ `unrecommended`: deprecated in favor of [NIP-17](17.md)

NIP-04
======

Encrypted Direct Message
------------------------

`final` `unrecommended` `optional`

A special event with kind `4`, meaning "encrypted direct message". It is supposed to have the following attributes:

**`content`** MUST be equal to the base64-encoded, aes-256-cbc encrypted string of anything a user wants to write, encrypted using a shared secret generated by combining the recipient's public key with the sender's private key; this is followed by the base64-encoded initialization vector, as if it were a querystring parameter named "iv". The format is the following: `"content": "<encrypted_text>?iv=<initialization_vector>"`.

**`tags`** MUST contain an entry identifying the receiver of the message (such that relays may naturally forward this event to them), in the form `["p", "<pubkey, as a hex string>"]`.

**`tags`** MAY contain an entry identifying the previous message in a conversation or a message we are explicitly replying to (such that contextual, more organized conversations may happen), in the form `["e", "<event_id>"]`.

**Note**: By default in the [libsecp256k1](https://github.com/bitcoin-core/secp256k1) ECDH implementation, the secret is the SHA256 hash of the shared point (both X and Y coordinates). In Nostr, only the X coordinate of the shared point is used as the secret and it is NOT hashed. If using libsecp256k1, a custom function that copies the X coordinate must be passed as the `hashfp` argument in `secp256k1_ecdh`. See [here](https://github.com/bitcoin-core/secp256k1/blob/master/src/modules/ecdh/main_impl.h#L29).

Code sample for generating such an event in JavaScript:

```js
import crypto from 'crypto'
import * as secp from '@noble/secp256k1'

// ourPrivateKey, ourPubKey, theirPublicKey (hex strings) and text are assumed to be defined.
// Prefix '02' so the 32-byte x-only public key is read as a compressed point.
let sharedPoint = secp.getSharedSecret(ourPrivateKey, '02' + theirPublicKey)
// Drop the leading prefix byte and keep only the unhashed X coordinate.
let sharedX = sharedPoint.slice(1, 33)

// Random 16-byte initialization vector for AES-256-CBC.
let iv = crypto.randomFillSync(new Uint8Array(16))
var cipher = crypto.createCipheriv(
  'aes-256-cbc',
  Buffer.from(sharedX),
  iv
)
let encryptedMessage = cipher.update(text, 'utf8', 'base64')
encryptedMessage += cipher.final('base64')
let ivBase64 = Buffer.from(iv.buffer).toString('base64')

let event = {
  pubkey: ourPubKey,
  created_at: Math.floor(Date.now() / 1000),
  kind: 4,
  tags: [['p', theirPublicKey]],
  content: encryptedMessage + '?iv=' + ivBase64
}
```
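The receiving side is not shown above. A minimal sketch of an encrypt/decrypt round trip follows, assuming Node's built-in `crypto` ECDH is used instead of `@noble/secp256k1` (its `computeSecret` returns the raw X coordinate of the shared point). The helper names `newKeyPair`, `sharedSecret`, `encrypt`, and `decrypt` are hypothetical and not part of the NIP.

```javascript
const crypto = require('node:crypto')

// Hypothetical helper: generate a secp256k1 key pair with a 32-byte x-only public key (hex).
function newKeyPair() {
  const ecdh = crypto.createECDH('secp256k1')
  ecdh.generateKeys()
  return {
    priv: ecdh.getPrivateKey('hex').padStart(64, '0'),
    // Compressed form is a 1-byte prefix plus X; drop the prefix to get the x-only key.
    pub: ecdh.getPublicKey('hex', 'compressed').slice(2)
  }
}

// Shared secret per the note above: the unhashed X coordinate of the ECDH point.
function sharedSecret(privHex, pubHex) {
  const ecdh = crypto.createECDH('secp256k1')
  ecdh.setPrivateKey(Buffer.from(privHex, 'hex'))
  // '02' makes the x-only key a compressed point; the shared X is the same for either Y parity.
  return ecdh.computeSecret(Buffer.from('02' + pubHex, 'hex'))
}

// Produce the "<encrypted_text>?iv=<initialization_vector>" content string.
function encrypt(privHex, pubHex, text) {
  const iv = crypto.randomBytes(16)
  const cipher = crypto.createCipheriv('aes-256-cbc', sharedSecret(privHex, pubHex), iv)
  const body = Buffer.concat([cipher.update(text, 'utf8'), cipher.final()])
  return body.toString('base64') + '?iv=' + iv.toString('base64')
}

// Split the content string on "?iv=" and decrypt with the same shared secret.
function decrypt(privHex, pubHex, content) {
  const [body, ivBase64] = content.split('?iv=')
  const key = sharedSecret(privHex, pubHex)
  const decipher = crypto.createDecipheriv('aes-256-cbc', key, Buffer.from(ivBase64, 'base64'))
  const plain = Buffer.concat([decipher.update(Buffer.from(body, 'base64')), decipher.final()])
  return plain.toString('utf8')
}

// Round trip: the sender uses their private key and the recipient's public key;
// the recipient uses their private key and the sender's public key.
const alice = newKeyPair()
const bob = newKeyPair()
const content = encrypt(alice.priv, bob.pub, 'hello')
console.log(decrypt(bob.priv, alice.pub, content)) // prints "hello"
```

Both sides derive the same key because the ECDH shared point is identical regardless of which party computes it.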

## Security Warning

This standard does not go anywhere near what is considered the state-of-the-art in encrypted communication between peers, and it leaks metadata in the events. It must therefore not be used for anything you really need to keep secret, and it should only be used with relays that use `AUTH` to restrict who can fetch your `kind:4` events.

## Client Implementation Warning

Clients *should not* search and replace public key or note references in the `.content`. If it is processed like a regular text note (where `@npub...` is replaced with `#[0]` and a `["p", "..."]` tag is added), the tags are leaked and the mentioned user will receive the message in their inbox.

-

@ 39cc53c9:27168656

2025-03-30 05:54:45

> [Read the original blog post](https://blog.kycnot.me/p/ai-tos-analysis)

**kycnot.me** features a somewhat hidden tool that some users may not be aware of. Every month, an automated job crawls every listed service's Terms of Service (ToS) and FAQ pages and conducts an AI-driven analysis, generating a comprehensive overview that highlights key points related to KYC and user privacy.

Here's an example: [ChangeNOW's ToS Review](https://kycnot.me/service/changenow#tos)

## Why?

ToS pages typically contain a lot of complicated text. Since the first versions of **kycnot.me**, I have tried to provide users a comprehensive overview of what can be found in such documents. This automated method keeps the information up-to-date every month, which was one of the main challenges with manual updates.

A significant part of the time I invest in investigating a service for **kycnot.me** involves reading the ToS and looking for any clauses that might indicate aggressive KYC practices or privacy concerns. For the past four years, I performed this task manually. However, with advancements in language models, this process can now be somewhat automated. I still manually review the ToS for a quick check and regularly verify the AI’s findings. However, over the past three months, this automated method has proven to be quite reliable.

Having a quick ToS overview section allows users to avoid reading the entire ToS page. Instead, you can quickly read the important points that are grouped, summarized, and referenced, making it easier and faster to understand the key information.

## Limitations

This method has a key limitation: JS-generated pages. For this reason, I was using Playwright in my crawler implementation. I plan to make a release addressing this issue in the future. There are also sites that don't have ToS/FAQ pages, but these sites already include a warning in that section.

Another issue is false positives. Although not very common, sometimes the AI might incorrectly interpret something harmless as harmful. Such errors become apparent upon reading; it's clear when something marked as bad should not be categorized as such. I manually review these cases regularly, checking for anything that seems off and then removing any inaccuracies.

Overall, the automation provides great results.

## How?

There have been several iterations of this tool. Initially, I started with GPT-3.5, but the results were not good in any way. It made up many things, and important things were lost on large ToS pages. I then switched to GPT-4 Turbo, but it was expensive. Eventually, I settled on Claude 3 Sonnet, which provides a quality compromise between GPT-3.5 and GPT-4 Turbo at a more reasonable price, while allowing a generous 200K token context window.

I designed a prompt, which is open source[^1], that has been tweaked many times and will surely be adjusted further in the future.

For the ToS scraping part, I initially wrote a scraper API using Playwright[^2], but I replaced it with Jina AI Reader[^3], which works quite well and is designed for this task.
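As a rough illustration of the Reader-based approach, here is a minimal sketch that fetches a page through Jina AI Reader by prefixing the target URL with `https://r.jina.ai/`. It is not the project's actual crawler (which lives in the Go codebase linked in the footnotes); the function names `readerUrl` and `fetchTosText` are hypothetical, and it assumes a runtime with a global `fetch` (Node 18 or later).

```javascript
// Hypothetical helper: build the Jina AI Reader URL for a given page.
function readerUrl(pageUrl) {
  return 'https://r.jina.ai/' + pageUrl
}

// Hypothetical helper: fetch the Reader rendering of a ToS page as plain text.
// Assumes a global fetch is available.
async function fetchTosText(pageUrl) {
  const res = await fetch(readerUrl(pageUrl))
  if (!res.ok) {
    throw new Error('Reader request failed with status ' + res.status)
  }
  return res.text()
}
```

The returned text could then be passed to the language model together with the analysis prompt.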

### Non-conflictive ToS

All services have a dropdown in the ToS section called "Non-conflictive ToS Reviews." These are the reviews that the AI flagged as not needing a user warning. I still provide these because I think they may be interesting to read.

## Feedback and contributing

You can give me feedback on this tool, or share any inaccuracies, by either opening an issue on Codeberg[^4] or by contacting me[^5].

You can contribute with pull requests, which are always welcome, or you can [support](https://kycnot.me/about#support) this project through any of the listed ways.

[^1]: https://codeberg.org/pluja/kycnot.me/src/branch/main/src/utils/ai/prompt.go

[^2]: https://codeberg.org/pluja/kycnot.me/commit/483ba8b415cecf323b3d9f0cfd4e9620919467d2

[^3]: https://github.com/jina-ai/reader

[^4]: https://codeberg.org/pluja/kycnot.me

[^5]: https://kycnot.me/about#contact

-

@ e97aaffa:2ebd765d

2025-03-19 05:55:17

Since it is hard to find information about the digital euro, the European Union's CBDC, I will keep collecting here the most interesting documents I come across:

FAQ:

https://www.ecb.europa.eu/euro/digital_euro/faqs/html/ecb.faq_digital_euro.pt.html

ECB directory:

https://www.ecb.europa.eu/press/pubbydate/html/index.en.html?topic=Digital%20euro

https://www.ecb.europa.eu/euro/digital_euro/timeline/profuse/html/index.en.html

More technical documents:

## 2025