-

@ 21335073:a244b1ad

2025-05-09 13:56:57

@ 21335073:a244b1ad

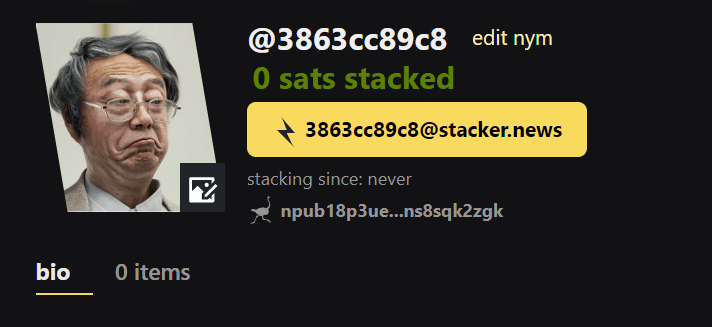

2025-05-09 13:56:57Someone asked for my thoughts, so I’ll share them thoughtfully. I’m not here to dictate how to promote Nostr—I’m still learning about it myself. While I’m not new to Nostr, freedom tech is a newer space for me. I’m skilled at advocating for topics I deeply understand, but freedom tech isn’t my expertise, so take my words with a grain of salt. Nothing I say is set in stone.

Those who need Nostr the most are the ones most vulnerable to censorship on other platforms right now. Reaching them requires real-time awareness of global issues and the dynamic relationships between governments and tech providers, which can shift suddenly. Effective Nostr promoters must grasp this and adapt quickly.

The best messengers are people from or closely tied to these at-risk regions—those who truly understand the local political and cultural dynamics. They can connect with those in need when tensions rise. Ideal promoters are rational, trustworthy, passionate about Nostr, but above all, dedicated to amplifying people’s voices when it matters most.

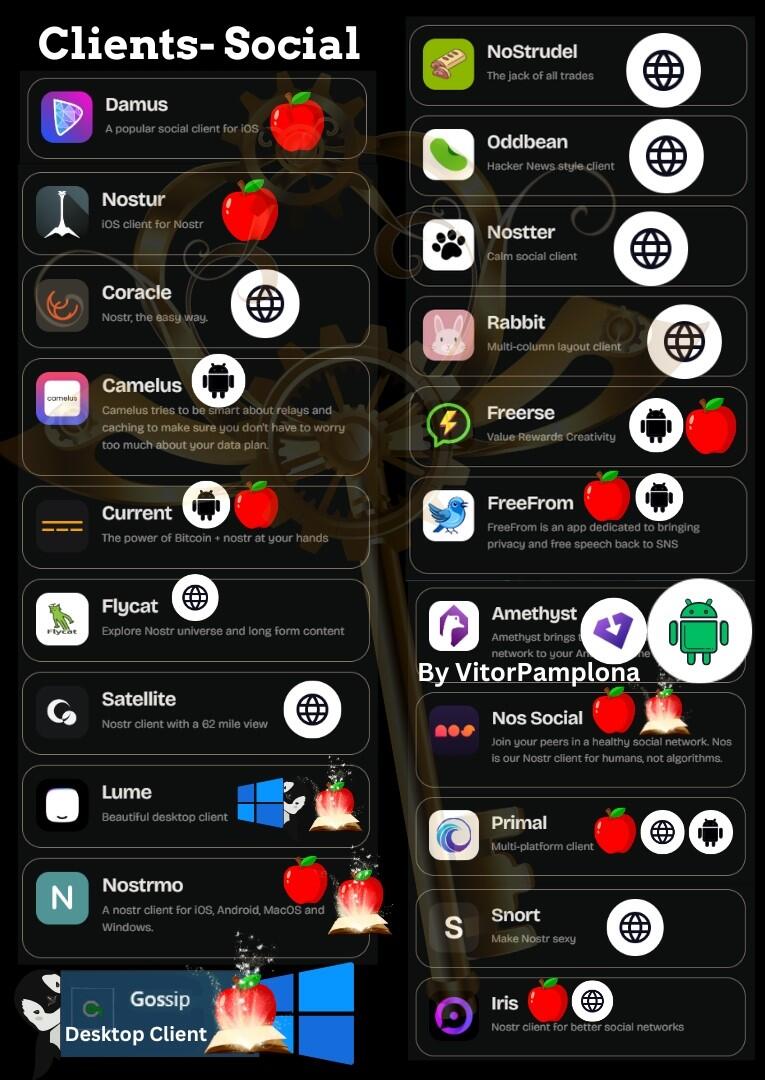

Forget influencers, corporate-backed figures, or traditional online PR—it comes off as inauthentic, corny, desperate and forced. Nostr’s promotion should be grassroots and organic, driven by a few passionate individuals who believe in Nostr and the communities they serve.

The idea that “people won’t join Nostr due to lack of reach” is nonsense. Everyone knows X’s “reach” is mostly with bots. If humans want real conversations, Nostr is the place. X is great for propaganda, but Nostr is for the authentic voices of the people.

Those spreading Nostr must be so passionate they’re willing to onboard others, which is time-consuming but rewarding for the right person. They’ll need to make Nostr and onboarding a core part of who they are. I see no issue with that level of dedication. I’ve been known to get that way myself at times. It’s fun for some folks.

With love, I suggest not adding Bitcoin promotion with Nostr outreach. Zaps already integrate that element naturally. (Still promote within the Bitcoin ecosystem, but this is about reaching vulnerable voices who needed Nostr yesterday.)

To promote Nostr, forget conventional strategies. “Influencers” aren’t the answer. “Influencers” are not the future. A trusted local community member has real influence—reach them. Connect with people seeking Nostr’s benefits but lacking the technical language to express it. This means some in the Nostr community might need to step outside of the Bitcoin bubble, which is uncomfortable but necessary. Thank you in advance to those who are willing to do that.

I don’t know who is paid to promote Nostr, if anyone. This piece isn’t shade. But it’s exhausting to see innocent voices globally silenced on corporate platforms like X while Nostr exists. Last night, I wondered: how many more voices must be censored before the Nostr community gets uncomfortable and thinks creatively to reach the vulnerable?

A warning: the global need for censorship-resistant social media is undeniable. If Nostr doesn’t make itself known, something else will fill that void. Let’s start this conversation.

-

@ 000002de:c05780a7

2025-05-09 18:33:06

@ 000002de:c05780a7

2025-05-09 18:33:06I've been eager to share my thoughts on "Return of the Strong Gods" by R.R. Reno since I finished it a week ago.

I found this book incredibly insightful. @SimpleStacker's excellent review prompted me to pick it up, and I'm glad I did. While I won't be providing a full review, I will share some of the key insights and thoughts that resonated with me.

Reno masterfully dissects the sociological underpinnings of the political shifts in the US and the Western world over the past decade and a half. His analysis of the rise of populism and nationalism is compelling and rings true to my personal observations. Reno's central thesis is that post-WW2, the West embraced "weaker gods"—ideals like democracy, pluralism, and liberalism—fearing a repeat of the strong nationalistic sentiments that led to Hitler's rise. He argues that this shift has left many people feeling culturally homeless and desperate for strong leadership that listens to their grievances.

A significant portion of Reno's argument is built on the ideas of Karl Popper, a philosopher I was unfamiliar with before reading this book. Popper's work "The Open Society and Its Enemies" has significantly influenced post-war consensus, which Reno argues has led to a disconnect between the political elite and the common people. This disconnect, he posits, is a primary driver of populist sentiments.

Reno also delves into economics, discussing Friedrich Hayek and his agreement with some of Popper's positions. This intersection of philosophy and economics provides a unique lens through which to view the political landscape. Reno's mention of the Treaty of Versailles as a catalyst for Hitler's rise is a point often overlooked in discussions about WW2. He argues that the punitive measures imposed on Germany created an environment ripe for a strongman to emerge.

One of the most compelling aspects of Reno's argument is his explanation of how the political elite often lose touch with the values and wishes of the people they represent. He draws a powerful analogy between cultural homelessness and the desperation that drives people to seek strong leadership. This section of the book particularly resonated with me, as it aligns with my own observations of the political climate.

I would have liked Reno to start his analysis with Woodrow Wilson, whose "making the world safe for democracy" slogan embodied a form of Christian nationalism. Wilson's ideals and the post-WW1 environment laid the groundwork for the open society movement, which Reno critiques. Exploring this historical context could have strengthened Reno's argument.

Reno occasionally conflates economic liberalism with libertinism, which I found to be a minor flaw in an otherwise strong argument. He rightly points out the need for moral ethics in society but seems to overlook the distinction between economic freedom and moral laxity. Reno's discussion of Milton Friedman's ideas further highlights this confusion. While Reno argues that free trade has contributed to many of our modern ills, I believe the issue lies more with nation-state trade agreements like NAFTA, which are not true examples of free trade.

Another area where I disagree with Reno is his conflation of the nation with the state. Nations are cultural entities that predate and can exist independently of states. Reno's argument would be stronger if he acknowledged this distinction, as it would clarify his points about national pride and cultural heritage.

Reno's final chapters offer a cautionary tale about the return of strong gods and the danger of making them idols. He warns against authoritarianism and the overreach of the state, advocating for a balance that respects cultural heritage without succumbing to nationalism.

In conclusion, "Return of the Strong Gods" is a thought-provoking exploration of the political and cultural shifts of our time. Reno's insights are valuable, and his arguments, while not without flaws, provide a fresh perspective on the rise of populism and nationalism. I recommend this book to anyone seeking a deeper understanding of the forces shaping our world today.

originally posted at https://stacker.news/items/975849

-

@ d61f3bc5:0da6ef4a

2025-05-06 01:37:28

@ d61f3bc5:0da6ef4a

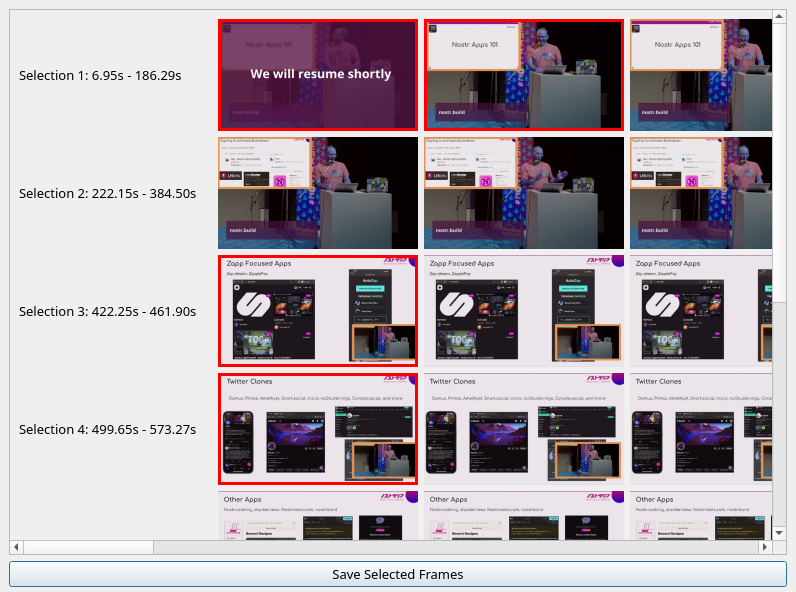

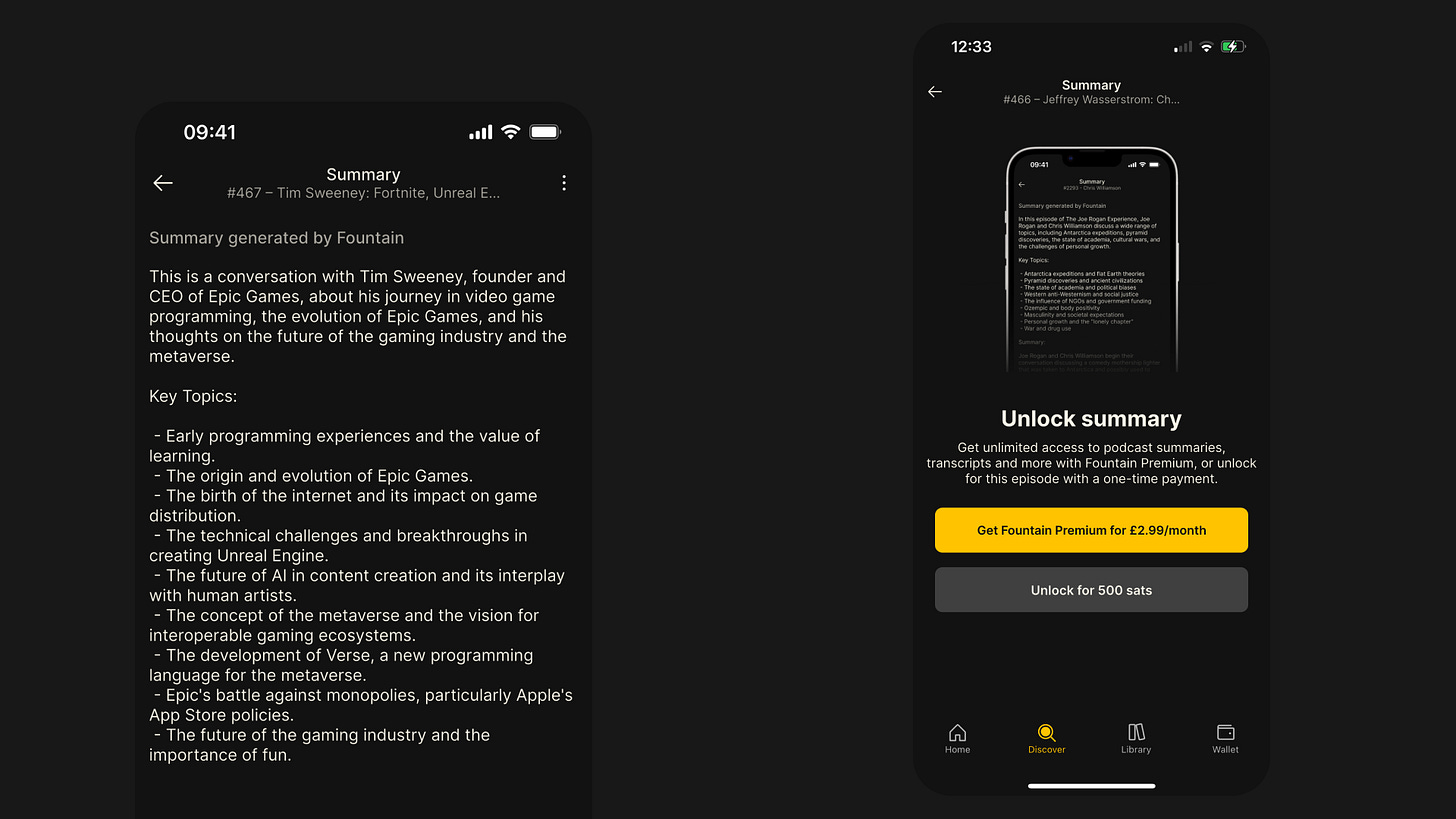

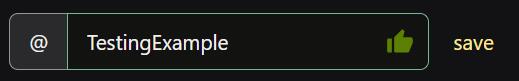

2025-05-06 01:37:28I remember the first gathering of Nostr devs two years ago in Costa Rica. We were all psyched because Nostr appeared to solve the problem of self-sovereign online identity and decentralized publishing. The protocol seemed well-suited for textual content, but it wasn't really designed to handle binary files, like images or video.

The Problem

When I publish a note that contains an image link, the note itself is resilient thanks to Nostr, but if the hosting service disappears or takes my image down, my note will be broken forever. We need a way to publish binary data without relying on a single hosting provider.

We were discussing how there really was no reliable solution to this problem even outside of Nostr. Peer-to-peer attempts like IPFS simply didn't work; they were hopelessly slow and unreliable in practice. Torrents worked for popular files like movies, but couldn't be relied on for general file hosting.

Awesome Blossom

A year later, I attended the Sovereign Engineering demo day in Madeira, organized by Pablo and Gigi. Many projects were presented over a three hour demo session that day, but one really stood out for me.

Introduced by hzrd149 and Stu Bowman, Blossom blew my mind because it showed how we can solve complex problems easily by simply relying on the fact that Nostr exists. Having an open user directory, with the corresponding social graph and web of trust is an incredible building block.

Since we can easily look up any user on Nostr and read their profile metadata, we can just get them to simply tell us where their files are stored. This, combined with hash-based addressing (borrowed from IPFS), is all we need to solve our problem.

How Blossom Works

The Blossom protocol (Blobs Stored Simply on Mediaservers) is formally defined in a series of BUDs (Blossom Upgrade Documents). Yes, Blossom is the most well-branded protocol in the history of protocols. Feel free to refer to the spec for details, but I will provide a high level explanation here.

The main idea behind Blossom can be summarized in three points:

- Users specify which media server(s) they use via their public Blossom settings published on Nostr;

- All files are uniquely addressable via hashes;

- If an app fails to load a file from the original URL, it simply goes to get it from the server(s) specified in the user's Blossom settings.

Just like Nostr itself, the Blossom protocol is dead-simple and it works!

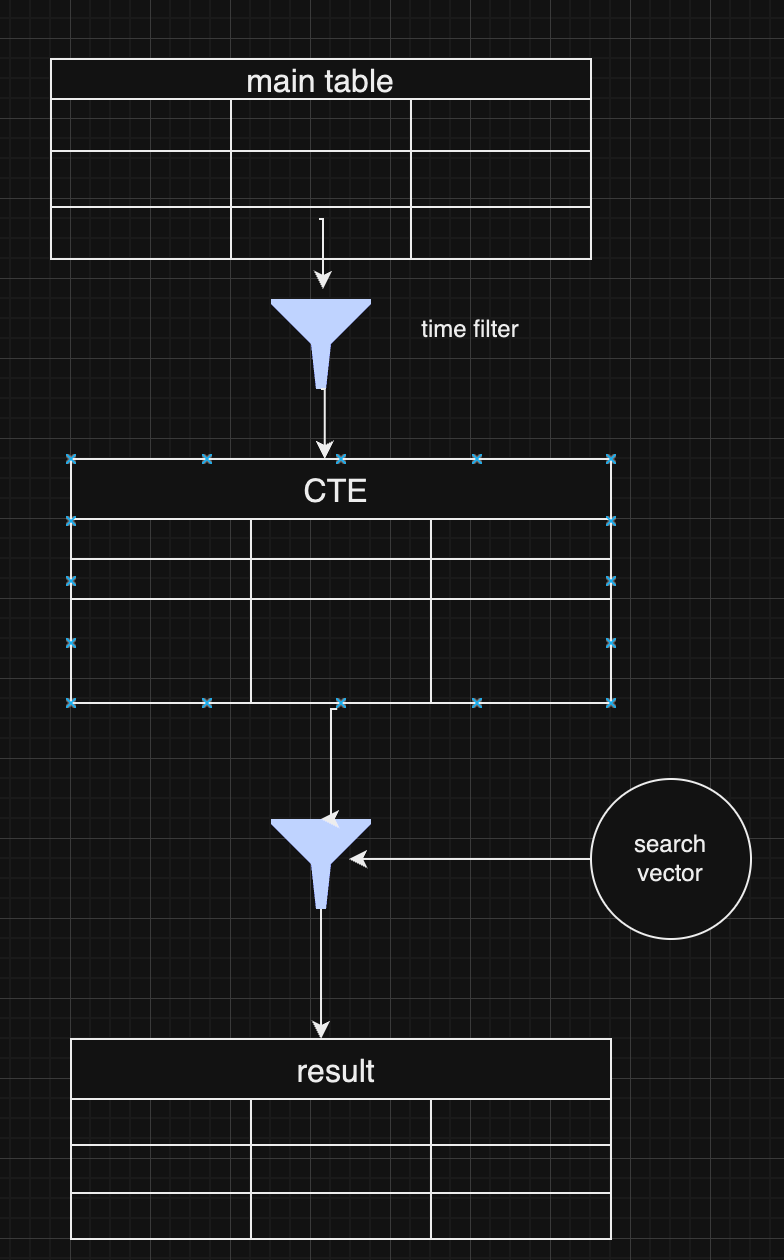

Let's use this image as an example:

If you look at the URL for this image, you will notice that it looks like this:

If you look at the URL for this image, you will notice that it looks like this:blossom.primal.net/c1aa63f983a44185d039092912bfb7f33adcf63ed3cae371ebe6905da5f688d0.jpgAll Blossom URLs follow this format:

[server]/[file-hash].[extension]The file hash is important because it uniquely identifies the file in question. Apps can use it to verify that the file they received is exactly the file they requested. It also gives us the ability to reliably get the same file from a different server.

Nostr users declare which media server(s) they use by publishing their Blossom settings. If I store my files on Server A, and they get removed, I can simply upload them to Server B, update my public Blossom settings, and all Blossom-capable apps will be able to find them at the new location. All my existing notes will continue to display media content without any issues.

Blossom Mirroring

Let's face it, re-uploading files to another server after they got removed from the original server is not the best user experience. Most people wouldn't have the backups of all the files, and/or the desire to do this work.

This is where Blossom's mirroring feature comes handy. In addition to the primary media server, a Blossom user can set one one or more mirror servers. Under this setup, every time a file is uploaded to the primary server the Nostr app issues a mirror request to the primary server, directing it to copy the file to all the specified mirrors. This way there is always a copy of all content on multiple servers and in case the primary becomes unavailable, Blossom-capable apps will automatically start loading from the mirror.

Mirrors are really easy to setup (you can do it in two clicks in Primal) and this arrangement ensures robust media handling without any central points of failure. Note that you can use professional media hosting services side by side with self-hosted backup servers that anyone can run at home.

Using Blossom Within Primal

Blossom is natively integrated into the entire Primal stack and enabled by default. If you are using Primal 2.2 or later, you don't need to do anything to enable Blossom, all your media uploads are blossoming already.

To enhance user privacy, all Primal apps use the "/media" endpoint per BUD-05, which strips all metadata from uploaded files before they are saved and optionally mirrored to other Blossom servers, per user settings. You can use any Blossom server as your primary media server in Primal, as well as setup any number of mirrors:

## Conclusion

## ConclusionFor such a simple protocol, Blossom gives us three major benefits:

- Verifiable authenticity. All Nostr notes are always signed by the note author. With Blossom, the signed note includes a unique hash for each referenced media file, making it impossible to falsify.

- File hosting redundancy. Having multiple live copies of referenced media files (via Blossom mirroring) greatly increases the resiliency of media content published on Nostr.

- Censorship resistance. Blossom enables us to seamlessly switch media hosting providers in case of censorship.

Thanks for reading; and enjoy! 🌸

-

@ 5d4b6c8d:8a1c1ee3

2025-05-09 13:25:40

@ 5d4b6c8d:8a1c1ee3

2025-05-09 13:25:40The second round of the NBA Playoffs is historically weird. We'll try to figure out what's going wrong for all three of the presumed contenders. Which of the other East teams need to be considered contenders now? With how bad the Warriors look without Steph (just how underrated is he?), do the Warriors still have any chance?

In Blok'd Shots, we'll talk about the NHL Star who got traded midseason and then beat his former team in the playoffs.

There was a big trade in the NFL. Plus, @grayruby wants to start a media beef with Colin Cowherd.

The MLB introduced a new stat. I have no idea what it is, but I'm looking forward to trying to understand it live on air.

And, of course, lots of contest and betting updates.

What do you want us to talk about?

originally posted at https://stacker.news/items/975474

-

@ 6e64b83c:94102ee8

2025-05-05 16:50:13

@ 6e64b83c:94102ee8

2025-05-05 16:50:13Nostr-static is a powerful static site generator that transforms long-form Nostr content into beautiful, standalone websites. It makes your content accessible to everyone, even those not using Nostr clients. For more information check out my previous blog post How to Create a Blog Out of Nostr Long-Form Articles

What's New in Version 0.7?

RSS and Atom Feeds

Version 0.7 brings comprehensive feed support with both RSS and Atom formats. The system automatically generates feeds for your main content, individual profiles, and tag-specific pages. These feeds are seamlessly integrated into your site's header, making them easily discoverable by feed readers and content aggregators.

This feature bridges the gap between Nostr and traditional web publishing, allowing your content to reach readers who prefer feed readers or automated content distribution systems.

Smart Content Discovery

The new tag discovery system enhances your readers' experience by automatically finding and recommending relevant articles from the Nostr network. It works by:

- Analyzing the tags in your articles

- Fetching popular articles from Nostr that share these tags

- Using configurable weights to rank these articles based on:

- Engagement metrics (reactions, reposts, replies)

- Zap statistics (amount, unique zappers, average zap size)

- Content quality signals (report penalties)

This creates a dynamic "Recommended Articles" section that helps readers discover more content they might be interested in, all while staying within the Nostr ecosystem.

See the new features yourself by visiting our demo at: https://blog.nostrize.me

-

@ 6e0ea5d6:0327f353

2025-05-04 14:53:42

@ 6e0ea5d6:0327f353

2025-05-04 14:53:42Amico mio, ascolta bene!

Without hesitation, the woman you attract with lies is not truly yours. Davvero, she is the temporary property of the illusion you’ve built to seduce her. And every illusion, sooner or later, crumbles.

Weak men sell inflated versions of themselves. They talk about what they don’t have, promise what they can’t sustain, adorn their empty selves with words that are nothing more than a coat of paint. And they do this thinking that, later, they’ll be able to "show who they really are." Fatal mistake, cazzo!

The truth, amico mio, is not something that appears at the end. It is what holds up the whole beginning.

The woman who approaches a lie may smile at first — but she is smiling at the theater, not at the actor. When the curtains fall, what she will see is not a man. It will be a character tired of performing, begging for love from a self-serving audience in the front row.

That’s why I always point out that lying to win a woman’s heart is the same as sabotaging your own nature. The woman who comes through an invented version of you will be the first to leave when the veil of lies tears apart. Not out of cruelty, but out of consistency with her own interest. Fine... She didn’t leave you, but rather, that version of yourself never truly existed to be left behind.

A worthy man presents himself without deceptive adornments. And those who stay, stay because they know exactly who they are choosing as a man. That’s what differentiates forged seduction from the convenience of love built on honor, loyalty, and respect.

Ah, amico mio, I remember well. It was lunch on an autumn day in Catania. Mediterranean heat, and the Nero D'Avola wine from midday clinging to the lips like dried blood. Sitting in the shade of a lemon tree planted right by my grandfather's vineyard entrance, my uncle — the oldest of my father’s brothers — spoke little, but when he called us to sit by his side, all the nephews would quiet down to listen. And in my youth, he told me something that has never left my mind.

“In Sicily, the woman who endures the silence of a man about his business is more loyal than the one who is enchanted by speeches about what he does or how much he earns. Perchè, figlio mio, the first one has seen the truth. The second one, only a false shine.”

Thank you for reading, my friend!

If this message resonated with you, consider leaving your "🥃" as a token of appreciation.

A toast to our family!

-

@ bbef5093:71228592

2025-05-09 17:01:36

@ bbef5093:71228592

2025-05-09 17:01:36A francia székhelyű Newcleo, az olasz dizájncég Pininfarina és az olasz hajógyártó Fincantieri bemutatták a TL-40 nevű, következő generációs, hajókra szánt nukleáris reaktor teljes méretű modelljét a 19. Velencei Építészeti Biennálén[1][2][5][6][7].

A TL-40 reaktor főbb jellemzői

- IV. generációs, ólom-hűtésű kis moduláris reaktor (SMR), amelyet nagy hajók meghajtására fejlesztettek ki[1][2][5][6].

- Kompakt, biztonságos és fenntartható: A reaktor passzív biztonsági rendszereket alkalmaz, amelyek a fizika törvényein keresztül kizárják a nukleáris balesetek lehetőségét[2][5][6].

- Nukleáris hulladék újrahasznosítása: A TL-40 képes újrafeldolgozott nukleáris hulladékot üzemanyagként használni, ezzel egyszerre járul hozzá az energiatermeléshez és a hulladékcsökkentéshez[1][2][5][6].

- Formatervezés és ipari háttér: A Pininfarina gondoskodott a dizájnról és a reaktor esztétikai megjelenéséről, míg a Fincantieri a hajógyártási tapasztalatát adta hozzá[1][2][5][6][7].

- Nyitott konfiguráció: A reaktor vázája lehetővé teszi a látogatók számára, hogy belülről is megismerjék a technológiát, interaktív módon[1][2][5].

Technológiai és környezeti jelentőség

- Dekarbonizáció: A fejlesztés célja, hogy a nukleáris energiát a hajózás szektorában is a szén-dioxid-kibocsátás csökkentésének eszközeként alkalmazzák[1][2][5][6].

- Európai hulladék hasznosítása: A jelenleg Európában tárolt nukleáris hulladék elegendő lenne a kontinens villamosenergia-igényének fedezésére évszázadokon át, ha ilyen típusú reaktorokban hasznosítanák[2][5][6].

- Kreatív szemlélet: A projekt célja a nukleáris energia társadalmi megítélésének javítása, bemutatva, hogy az lehet egyszerre biztonságos, esztétikus és fenntartható[1][5][6][7].

Együttműködés és jövőbeli tervek

- Folyamatos együttműködés: A Newcleo és a Fincantieri 2023 óta dolgoznak együtt a tengeri alkalmazások fejlesztésén[2][5][6][7].

- Kutatás-fejlesztés: A Pininfarina a Newcleo franciaországi (Chusclan) nukleáris üzemanyag kutató-fejlesztő központjának tervezésében is részt vesz[2][5][6][7].

- Első demonstrációs reaktor: A Newcleo célja, hogy 2031-re bemutassa az első demonstrációs reaktort, 2033-ra pedig kereskedelmi forgalomba hozza azt[8].

- Földi alkalmazás: A vállalat már megkezdte a földi, ólom-hűtésű LFR-AS-30 reaktor telephelyének előkészítését Franciaországban (Chinon régió)[8].

- Európai támogatás: Az LFR dizájn 2024 októberében felkerült az Európai Ipari Szövetség támogatásra javasolt SMR-listájára[8].

Idézetek a projekt vezetőitől

„Ez nem a nagyapáink reaktora. Fenntartható, előremutató technológia, amely a való világ és a klímaváltozás kihívásaira készült.”

(Stefano Buono, a Newcleo vezérigazgatója)[1][5][6][7]„A dizájn stratégiai szerepet játszik a fenntartható energia megoldások fejlesztésében, biztosítva a társadalmi és környezeti szempontok teljes integrációját az innovációs folyamat során.”

(Silvio Angori, a Pininfarina vezérigazgatója)[6][7]Hivatkozások: [1] newcleo, Pininfarina and Fincantieri unveil the future of clean ... https://www.maddyness.com/uk/2025/05/08/newcleo-pininfarina-and-fincantieri-unveil-the-future-of-clean-energy-at-the-venice-biennale/ [2] Newcleo, Pininfarina, and Fincantieri showcase next-generation ... https://www.shippax.com/en/news/newcleo-pininfarina-and-fincantieri-showcase-next-generation-nuclear-power-solution-for-potential-marine-applications.aspx [3] Newcleo and Fincantieri unveil lead-cooled fast nuclear reactor for ... https://www.bairdmaritime.com/shipping/newcleo-and-fincantieri-unveil-lead-cooled-fast-nuclear-reactor-for-maritime-applications [4] Fincantieri Launches Nuclear-Propulsion Study With Newcleo https://maritime-executive.com/article/fincantieri-joints-nuclear-propulsion-study-with-newcleo [5] Industry Partners Showcase Next-Gen Nuclear Reactor For https://www.marinelink.com/news/industry-partners-showcase-nextgen-525512 [6] Fincantieri, newcleo and Pininfarina reveal the look of new nuclear ... https://www.fincantieri.com/en/media/press-releases/2025/fincantieri-newcleo-pininfarina-at-the-venice-biennale/ [7] Fincantieri S p A : , newcleo and Pininfarina reveal the look of new ... https://www.marketscreener.com/quote/stock/FINCANTIERI-S-P-A-16796084/news/Fincantieri-S-p-A-newcleo-and-Pininfarina-reveal-the-look-of-new-nuclear-power-at-the-19th-Inter-49877851/ [8] Pininfarina - NucNet | Independent Nuclear News https://www.nucnet.org/news/tagged/Pininfarina [9] newcleo, Fincantieri and RINA working together on feasibility study ... https://www.rina.org/en/media/press/2023/07/25/newcleo-fincantieri-rina [10] Fincantieri, RINA team up with newcleo to test feasibility of nuclear ... https://www.offshore-energy.biz/fincantieri-rina-team-up-with-newcleo-to-test-feasibility-of-nuclear-naval-propulsion/ [11] newcleo #pininfarina #fincantieri #biennalearchitettura2025 ... https://www.linkedin.com/posts/newcleo_newcleo-pininfarina-fincantieri-activity-7295080775878647810-6i6X [12] A Fourth-Generation Reactor at the Venice Architecture Biennale https://www.theplan.it/eng/whats_on/a-reactor-fourth-generation-pininfarina-newcleo-fincantieri

-

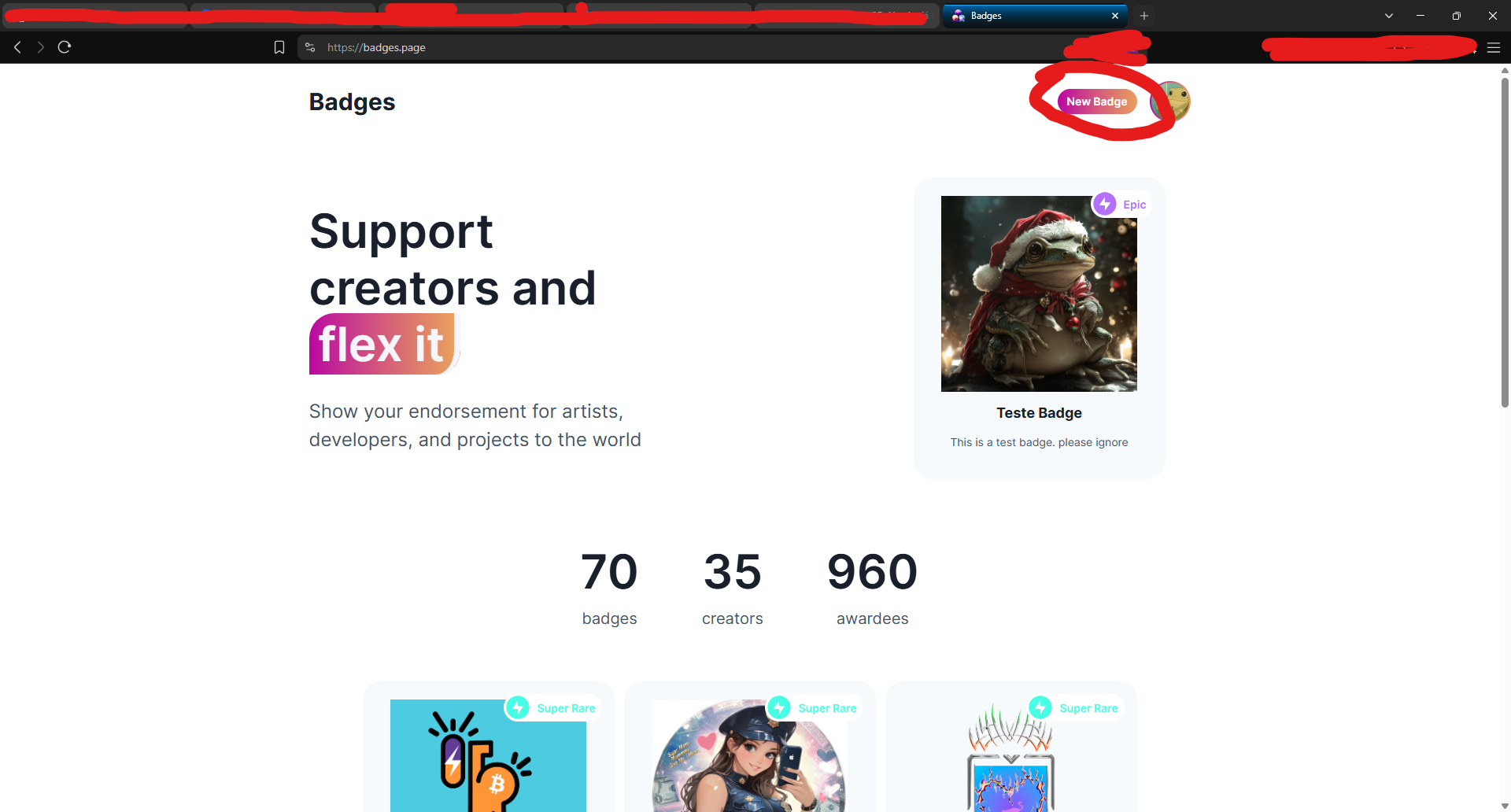

@ 266815e0:6cd408a5

2025-05-02 22:24:59

@ 266815e0:6cd408a5

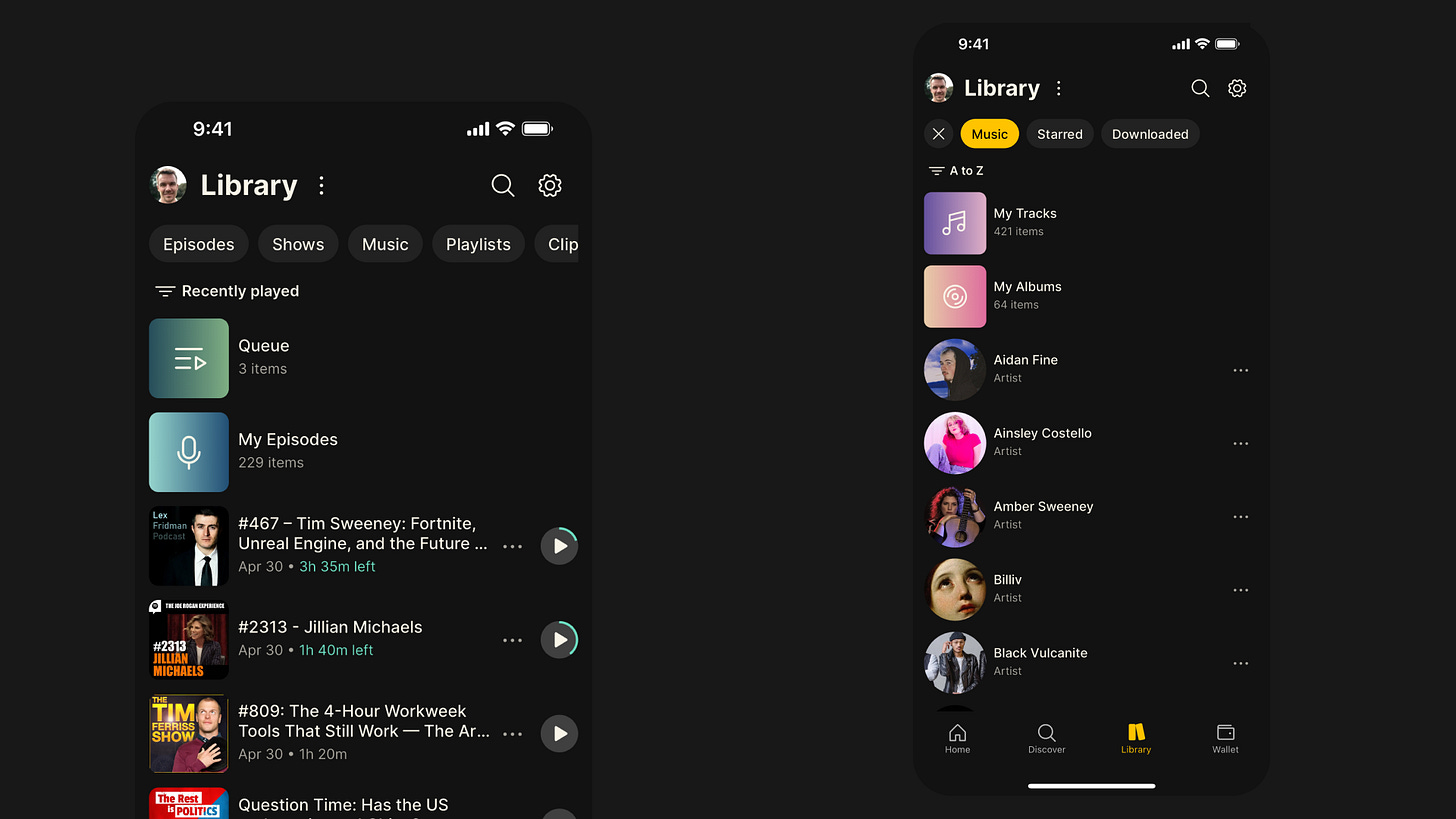

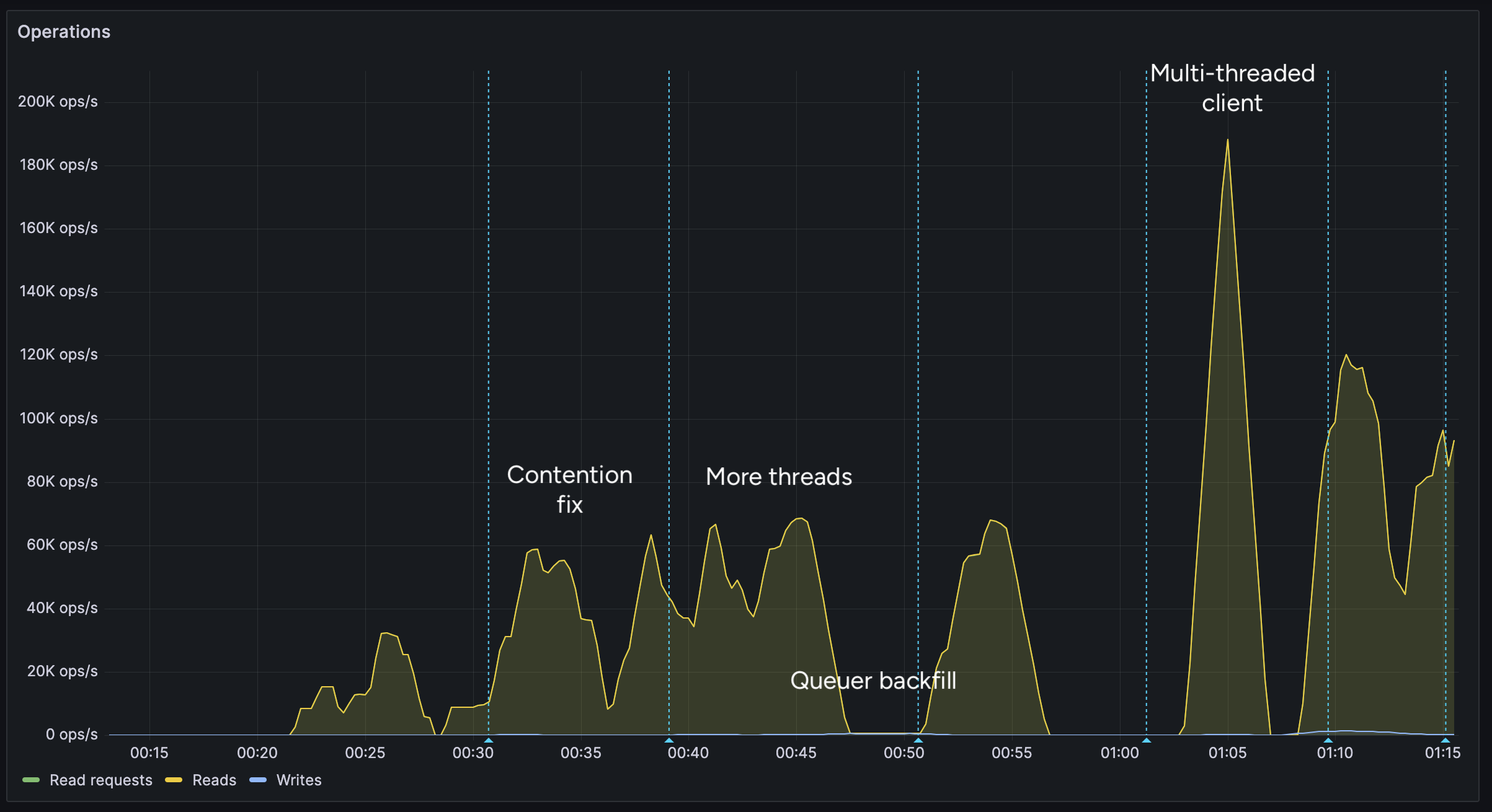

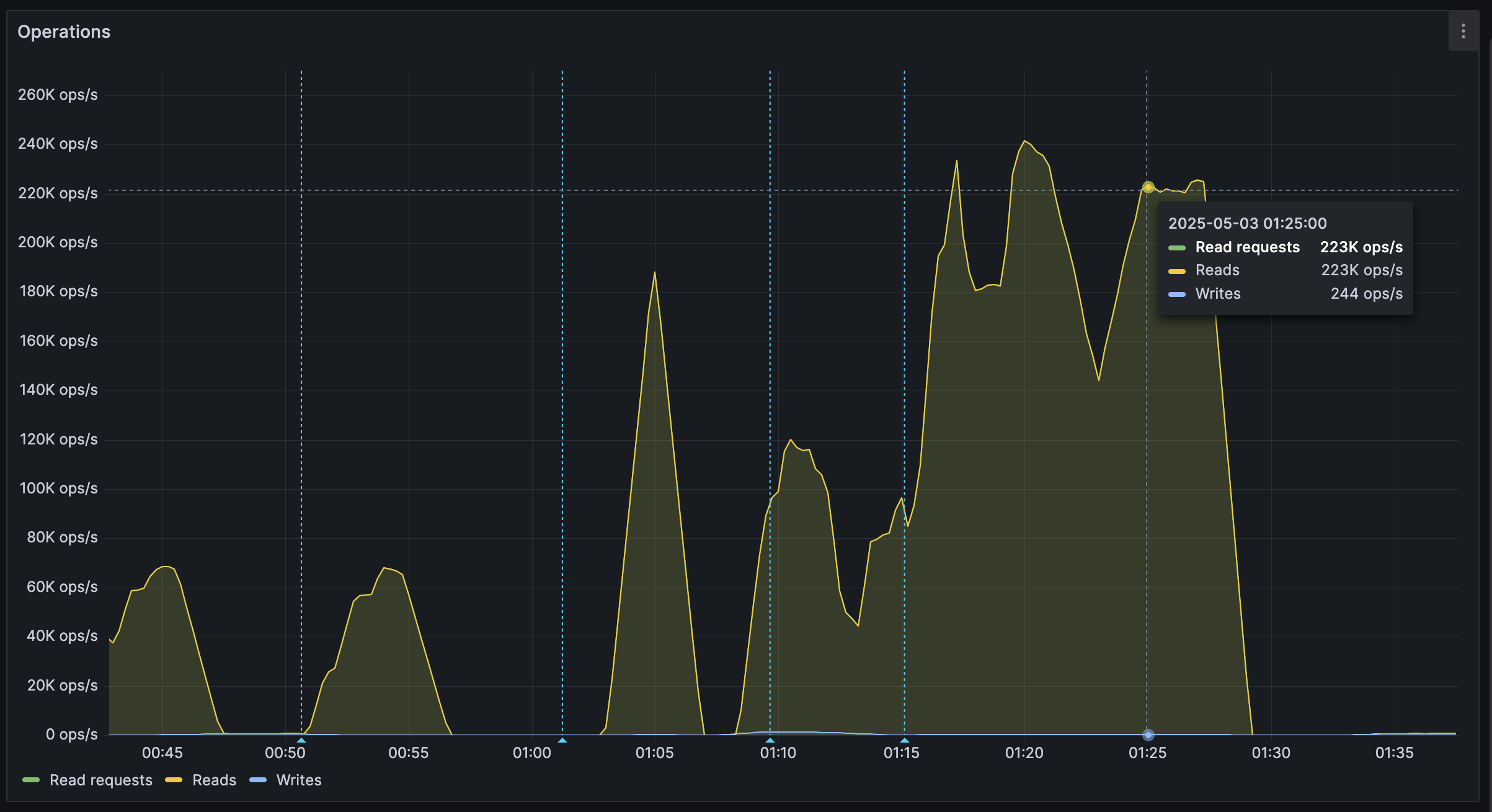

2025-05-02 22:24:59Its been six long months of refactoring code and building out to the applesauce packages but the app is stable enough for another release.

This update is pretty much a full rewrite of the non-visible parts of the app. all the background services were either moved out to the applesauce packages or rewritten, the result is that noStrudel is a little faster and much more consistent with connections and publishing.

New layout

The app has a new layout now, it takes advantage of the full desktop screen and looks a little better than it did before.

Removed NIP-72 communities

The NIP-72 communities are no longer part of the app, if you want to continue using them there are still a few apps that support them ( like satellite.earth ) but noStrudel won't support them going forward.

The communities where interesting but ultimately proved too have some fundamental flaws, most notably that all posts had to be approved by a moderator. There were some good ideas on how to improve it but they would have only been patches and wouldn't have fixed the underlying issues.

I wont promise to build it into noStrudel, but NIP-29 (relay based groups) look a lot more promising and already have better moderation abilities then NIP-72 communities could ever have.

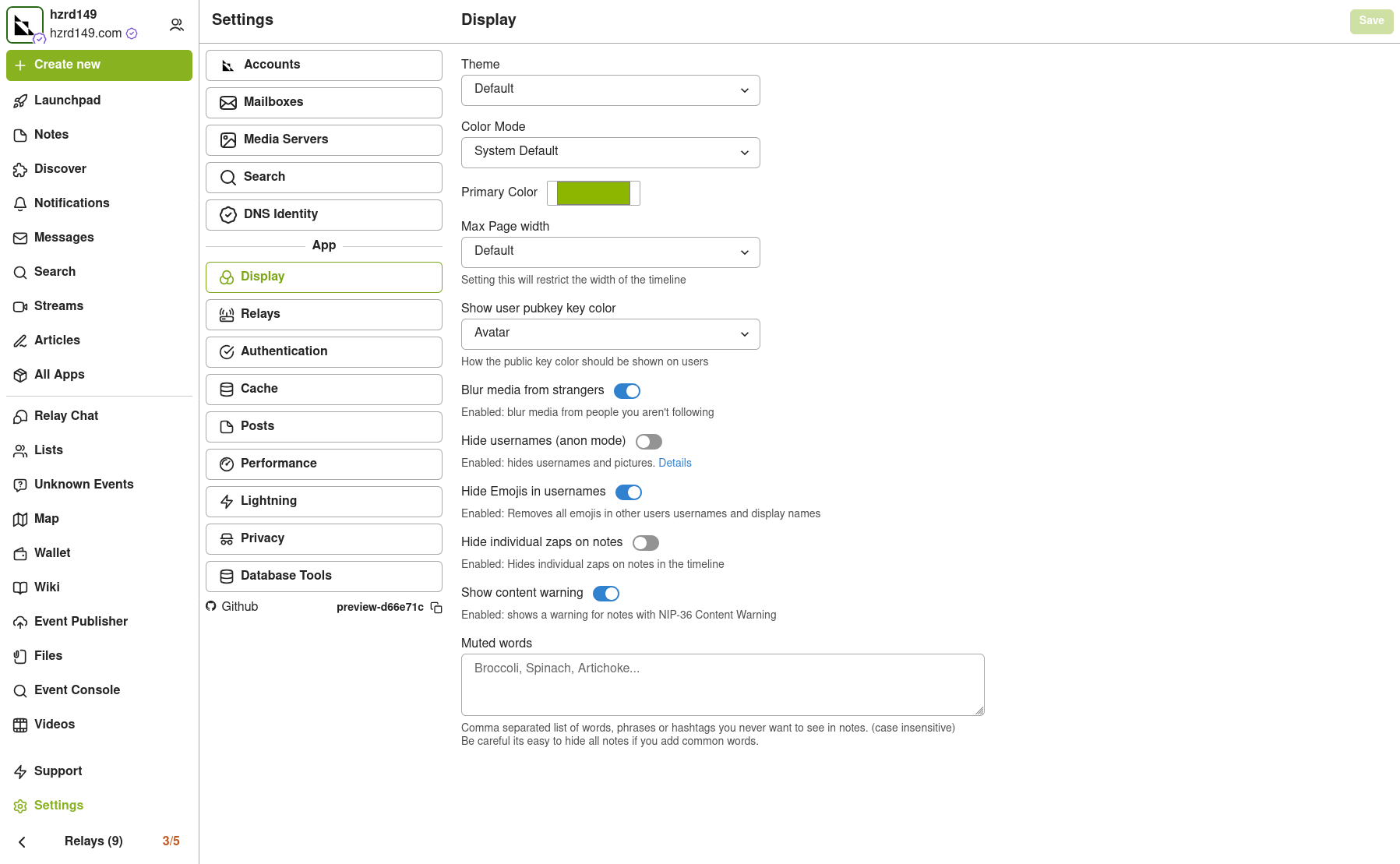

Settings view

There is now a dedicated settings view, so no more hunting around for where the relays are set or trying to find how to add another account. its all in one place now

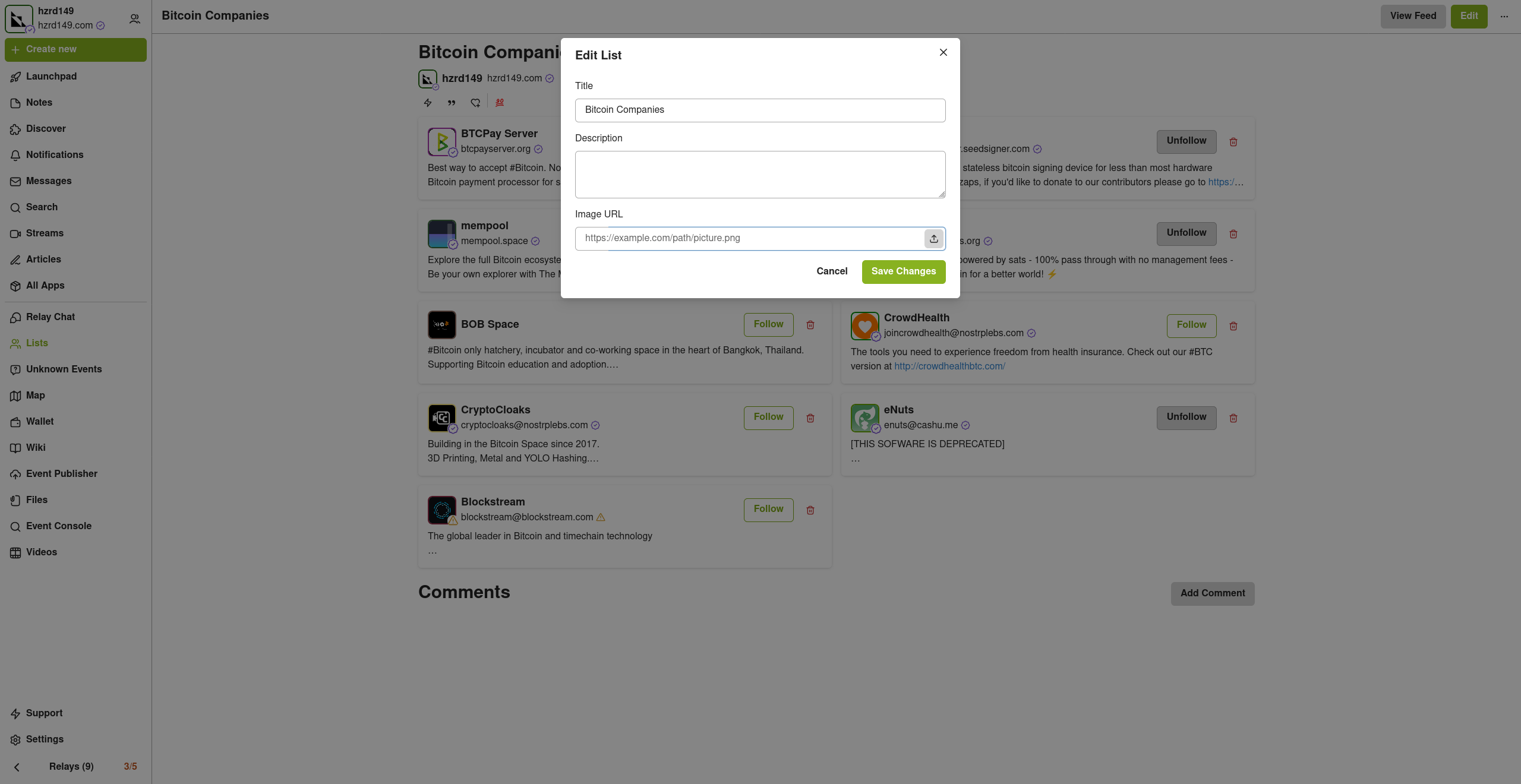

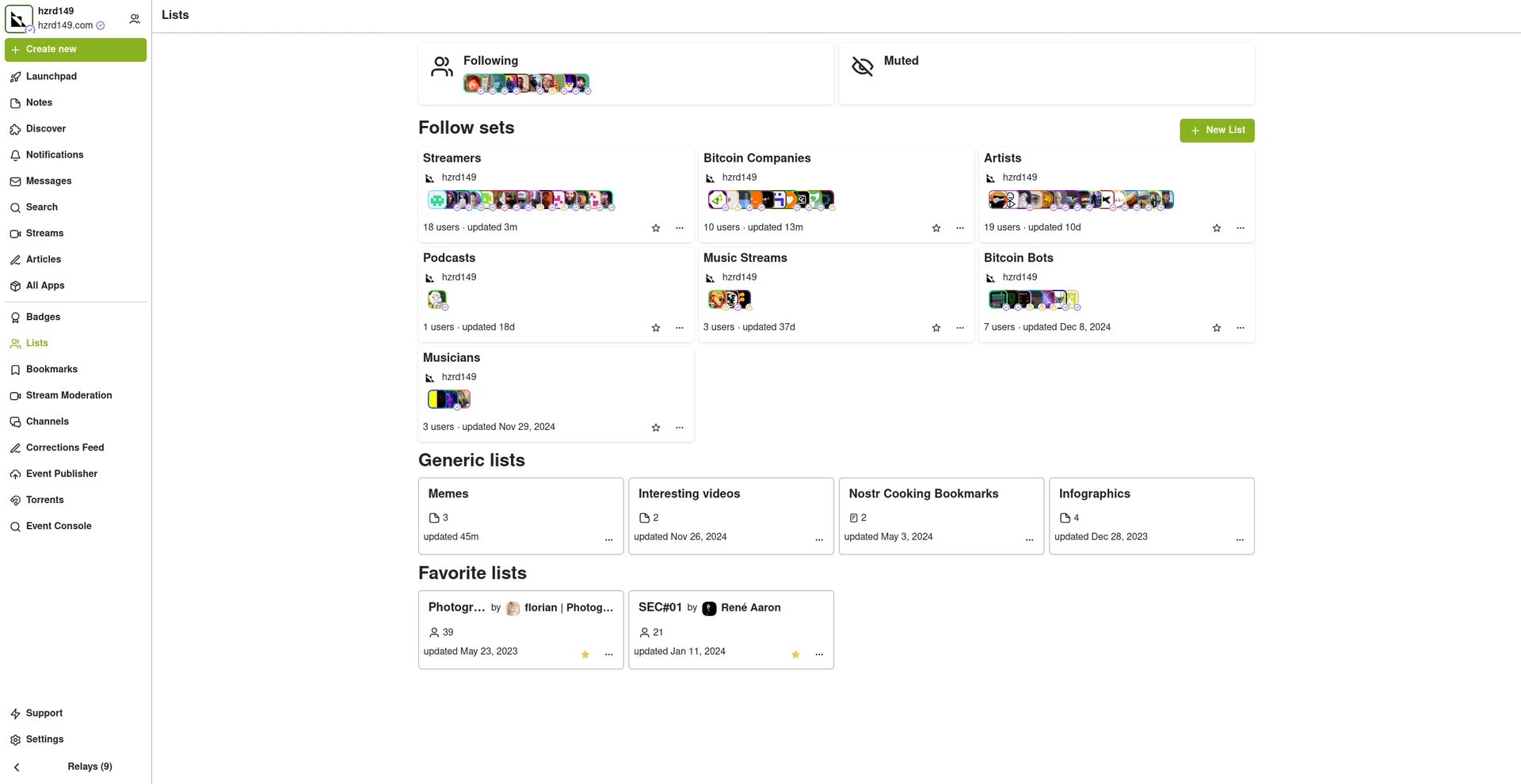

Cleaned up lists

The list views are a little cleaner now, and they have a simple edit modal

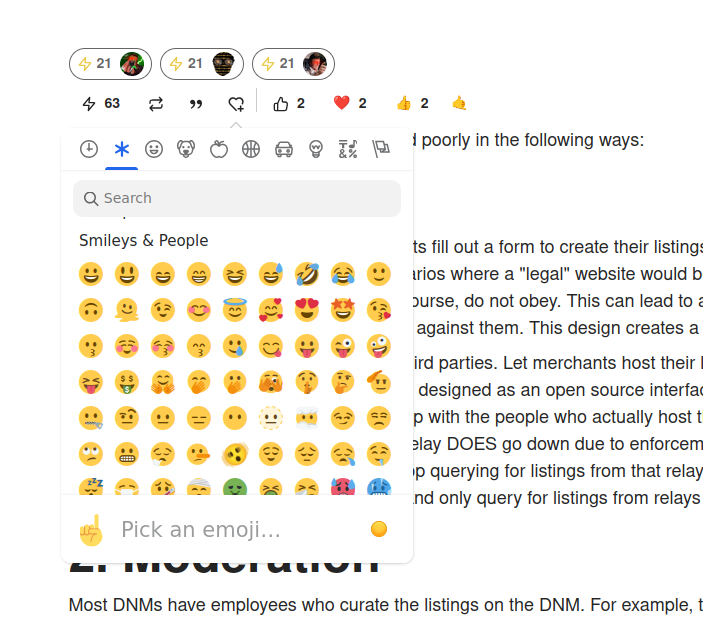

New emoji picker

Just another small improvement that makes the app feel more complete.

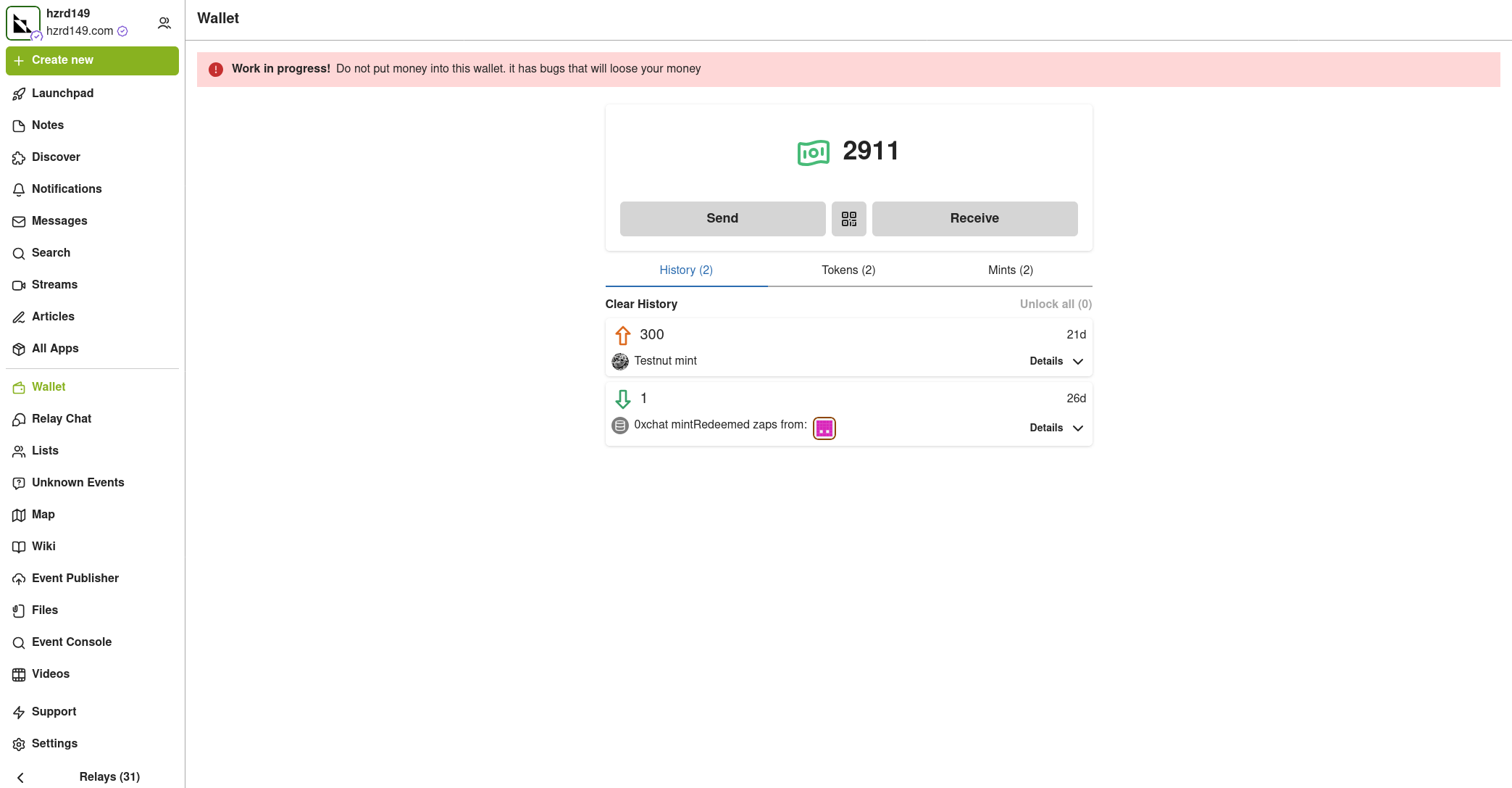

Experimental Wallet

There is a new "wallet" view in the app that lets you manage your NIP-60 cashu wallet. its very experimental and probably won't work for you, but its there and I hope to finish it up so the app can support NIP-61 nutzaps.

WARNING: Don't feed the wallet your hard earned sats, it will eat them!

Smaller improvements

- Added NSFW flag for replies

- Updated NIP-48 bunker login to work with new spec

- Linkfy BIPs

- Added 404 page

- Add NIP-22 comments under badges, files, and articles

- Add max height to timeline notes

- Fix articles view freezing on load

- Add option to mirror blobs when sharing notes

- Remove "open in drawer" for notes

-

@ a39d19ec:3d88f61e

2025-04-22 12:44:42

@ a39d19ec:3d88f61e

2025-04-22 12:44:42Die Debatte um Migration, Grenzsicherung und Abschiebungen wird in Deutschland meist emotional geführt. Wer fordert, dass illegale Einwanderer abgeschoben werden, sieht sich nicht selten dem Vorwurf des Rassismus ausgesetzt. Doch dieser Vorwurf ist nicht nur sachlich unbegründet, sondern verkehrt die Realität ins Gegenteil: Tatsächlich sind es gerade diejenigen, die hinter jeder Forderung nach Rechtssicherheit eine rassistische Motivation vermuten, die selbst in erster Linie nach Hautfarbe, Herkunft oder Nationalität urteilen.

Das Recht steht über Emotionen

Deutschland ist ein Rechtsstaat. Das bedeutet, dass Regeln nicht nach Bauchgefühl oder politischer Stimmungslage ausgelegt werden können, sondern auf klaren gesetzlichen Grundlagen beruhen müssen. Einer dieser Grundsätze ist in Artikel 16a des Grundgesetzes verankert. Dort heißt es:

„Auf Absatz 1 [Asylrecht] kann sich nicht berufen, wer aus einem Mitgliedstaat der Europäischen Gemeinschaften oder aus einem anderen Drittstaat einreist, in dem die Anwendung des Abkommens über die Rechtsstellung der Flüchtlinge und der Europäischen Menschenrechtskonvention sichergestellt ist.“

Das bedeutet, dass jeder, der über sichere Drittstaaten nach Deutschland einreist, keinen Anspruch auf Asyl hat. Wer dennoch bleibt, hält sich illegal im Land auf und unterliegt den geltenden Regelungen zur Rückführung. Die Forderung nach Abschiebungen ist daher nichts anderes als die Forderung nach der Einhaltung von Recht und Gesetz.

Die Umkehrung des Rassismusbegriffs

Wer einerseits behauptet, dass das deutsche Asyl- und Aufenthaltsrecht strikt durchgesetzt werden soll, und andererseits nicht nach Herkunft oder Hautfarbe unterscheidet, handelt wertneutral. Diejenigen jedoch, die in einer solchen Forderung nach Rechtsstaatlichkeit einen rassistischen Unterton sehen, projizieren ihre eigenen Denkmuster auf andere: Sie unterstellen, dass die Debatte ausschließlich entlang ethnischer, rassistischer oder nationaler Kriterien geführt wird – und genau das ist eine rassistische Denkweise.

Jemand, der illegale Einwanderung kritisiert, tut dies nicht, weil ihn die Herkunft der Menschen interessiert, sondern weil er den Rechtsstaat respektiert. Hingegen erkennt jemand, der hinter dieser Kritik Rassismus wittert, offenbar in erster Linie die „Rasse“ oder Herkunft der betreffenden Personen und reduziert sie darauf.

Finanzielle Belastung statt ideologischer Debatte

Neben der rechtlichen gibt es auch eine ökonomische Komponente. Der deutsche Wohlfahrtsstaat basiert auf einem Solidarprinzip: Die Bürger zahlen in das System ein, um sich gegenseitig in schwierigen Zeiten zu unterstützen. Dieser Wohlstand wurde über Generationen hinweg von denjenigen erarbeitet, die hier seit langem leben. Die Priorität liegt daher darauf, die vorhandenen Mittel zuerst unter denjenigen zu verteilen, die durch Steuern, Sozialabgaben und Arbeit zum Erhalt dieses Systems beitragen – nicht unter denen, die sich durch illegale Einreise und fehlende wirtschaftliche Eigenleistung in das System begeben.

Das ist keine ideologische Frage, sondern eine rein wirtschaftliche Abwägung. Ein Sozialsystem kann nur dann nachhaltig funktionieren, wenn es nicht unbegrenzt belastet wird. Würde Deutschland keine klaren Regeln zur Einwanderung und Abschiebung haben, würde dies unweigerlich zur Überlastung des Sozialstaates führen – mit negativen Konsequenzen für alle.

Sozialpatriotismus

Ein weiterer wichtiger Aspekt ist der Schutz der Arbeitsleistung jener Generationen, die Deutschland nach dem Zweiten Weltkrieg mühsam wieder aufgebaut haben. Während oft betont wird, dass die Deutschen moralisch kein Erbe aus der Zeit vor 1945 beanspruchen dürfen – außer der Verantwortung für den Holocaust –, ist es umso bedeutsamer, das neue Erbe nach 1945 zu respektieren, das auf Fleiß, Disziplin und harter Arbeit beruht. Der Wiederaufbau war eine kollektive Leistung deutscher Menschen, deren Früchte nicht bedenkenlos verteilt werden dürfen, sondern vorrangig denjenigen zugutekommen sollten, die dieses Fundament mitgeschaffen oder es über Generationen mitgetragen haben.

Rechtstaatlichkeit ist nicht verhandelbar

Wer sich für eine konsequente Abschiebepraxis ausspricht, tut dies nicht aus rassistischen Motiven, sondern aus Respekt vor der Rechtsstaatlichkeit und den wirtschaftlichen Grundlagen des Landes. Der Vorwurf des Rassismus in diesem Kontext ist daher nicht nur falsch, sondern entlarvt eine selektive Wahrnehmung nach rassistischen Merkmalen bei denjenigen, die ihn erheben.

-

@ 32092ec1:8e9fd13a

2025-05-09 16:13:34

@ 32092ec1:8e9fd13a

2025-05-09 16:13:34Bitcoin controversies are not new, in fact, bitcoin’s past has been riddled with one controversy after another. From the viewpoint of a financial investor, controversies are not good. They drive investor fear by amplifying uncertainty and introducing doubt about the future viability of the project. Although bitcoin is an investable asset, and there are certainly individuals, companies and countries who are investing in bitcoin strictly as a new, diversified financial asset that shows growth characteristics the likes of which humanity has rarely seen, bitcoin is not exclusively a financial instrument. Bitcoin is a network that is only as strong as the people running the miners who create the blocks, the nodes that propagate the blocks, and the users who hold the keys.

In this post I do not care to share my view on any of the latest bitcoin controversies, whether that be about OP_RETURNs, MSTR, Blackrock, MSTY, inscriptions, soft fork proposals, mempools, bitcoin strategic reserves, 21, chain surveillance, on-chain privacy, shitcoins projects, or anything else that anyone cares to opine about on Nostr or X to signal their support or opposition for what they think is critical to the success or failure of bitcoin. What I do care to share here is the ONLY winning strategy to defend bitcoin no matter what your opinion its future: USE THE TOOLS, RUN THE CODE, HOLD YOUR KEYS, TAKE RESPONSIBILITY.

In my humble opinion, the biggest problem with the current batch of bitcoin controversies is that so many people are sharing their opinions and expressing their fears about the future of bitcoin who are not even using the tools; they aren’t running the code, supporting the network or even holding their own keys. Everyone is free to share their opinions about the future of bitcoin and allowing everyone a voice is critical if we are serious about adopting the best ideas. Many visionaries have participated in the growth and development of bitcoin up to this point; we are truly standing on the shoulders of giants.

But before jumping to conclusions and spreading opinions about whether people are good faith or bad faith actors in the bitcoin space, I believe we should all hold ourselves to a certain standard. Set a minimum threshold of education for yourself before you jump on one side or the other of the latest controversy. We have tools that offer a level of sovereignty that is unparalleled in the legacy financial world, are you using them?Don’t like what MSTR is doing? Hold your keys in cold storage, then share your opinion.

Think Blackrock wants to use Bitcoin to launch a dystopian new world order agenda? Tell everyone to run a node, implement on-chain privacy strategies and hold their keys in cold storage.

Are Bitcoin developers making changes you don’t like? Use an alternative implementation, get a Bitaxe and construct your own block templates; for almost everyone this will be a stronger signal than shitposting or spreading FUD on the socials.

If you really want to defend bitcoin, think about how you are using it today. Who are you trusting to put your transactions into a block? Who are you trusting to propagate your transactions across the network? Who are you trusting to hold your keys? Who estimates your transaction fees? If you don’t trust the people who you are currently depending on, move your trust elsewhere, or increase your sovereignty and rely on yourself for some, or all, of these functions.

We make bitcoin what it is today, and we will define what bitcoin will be in the future. If we want to defend bitcoin it is our responsibility to keep it as decentralized as possible and that starts with every individual using the tools, running the code, doing the research and taking responsibility for their money.

895982

-

@ 088436cd:9d2646cc

2025-05-01 21:01:55

@ 088436cd:9d2646cc

2025-05-01 21:01:55The arrival of the coronavirus brought not only illness and death but also fear and panic. In such an environment of uncertainty, people have naturally stocked up on necessities, not knowing when things will return to normal.

Retail shelves have been cleared out, and even online suppliers like Amazon and Walmart are out of stock for some items. Independent sellers on these e-commerce platforms have had to fill the gap. With the huge increase in demand, they have found that their inventory has skyrocketed in value.

Many in need of these items (e.g. toilet paper, hand sanitizer and masks) balk at the new prices. They feel they are being taken advantage of in a time of need and call for intervention by the government to lower prices. The government has heeded that call, labeling the independent sellers as "price gougers" and threatening sanctions if they don't lower their prices. Amazon has suspended seller accounts and law enforcement at all levels have threatened to prosecute. Prices have dropped as a result and at first glance this seems like a victory for fair play. But, we will have to dig deeper to understand the unseen consequences of this intervention.

We must look at the economics of the situation, how supply and demand result in a price and how that price acts as a signal that goes out to everyone, informing them of underlying conditions in the economy and helping coordinate their actions.

It all started with a rise in demand. Given a fixed supply (e.g., the limited stock on shelves and in warehouses), an increase in demand inevitably leads to higher prices. Most people are familiar with this phenomenon, such as paying more for airline tickets during holidays or surge pricing for rides.

Higher prices discourage less critical uses of scarce resources. For example, you might not pay $1,000 for a plane ticket to visit your aunt if you can get one for $100 the following week, but someone else might pay that price to visit a dying relative. They value that plane seat more than you.

*** During the crisis, demand surged and their shelves emptied even though

However, retail outlets have not raised prices. They have kept them low, so the low-value uses of things like toilet paper, masks and hand sanitizer has continued. Often, this "use" just takes the form of hoarding. At everyday low prices, it makes sense to buy hundreds of rolls and bottles. You know you will use them eventually, so why not stock up? And, with all those extra supplies in the closet and basement, you don't need to change your behavior much. You don't have to ration your use.

At the low prices, these scarce resources got bought up faster and faster until there was simply none left. The reality of the situation became painfully clear to those who didn't panic and got to the store late: You have no toilet paper and you're not going to any time soon.

However, if prices had been allowed to rise, a number of effects would have taken place that would have coordinated the behavior of everyone so that valuable resources would not have been wasted or hoarded, and everyone could have had access to what they needed.

On the demand side, if prices had been allowed to rise, people would have begun to self-ration. You might leave those extra plies on the roll next time if you know they will cost ten times as much to replace. Or, you might choose to clean up a spill with a rag rather than disposable tissue. Most importantly, you won't hoard as much. That 50th bottle of hand sanitizer might just not be worth it at the new, high price. You'll leave it on the shelf for someone else who may have none.

On the supply side, higher prices would have incentivized people to offer up more of their stockpiles for sale. If you have a pallet full of toilet paper in your basement and all of the sudden they are worth $15 per roll, you might just list a few online. But, if it is illegal to do so, you probably won't.

Imagine you run a business installing insulation and have a few thousand respirator masks on hand for your employees. During a pandemic, it is much more important that people breathe filtered air than that insulation get installed, and that fact is reflected in higher prices. You will sell your extra masks at the higher price rather than store them for future insulation jobs, and the scarce resource will be put to its most important use.

Producers of hand sanitizer would go into overdrive if prices were allowed to rise. They would pay their employees overtime, hire new ones, and pay a premium for their supplies, making sure their raw materials don't go to less important uses.

These kinds of coordinated actions all across the economy would be impossible without real prices to guide them. How do you know if it makes sense to spend an extra $10k bringing a thousand masks to market unless you know you can get more than $10 per mask? If the price is kept artificially low, you simply can't do it. The money just isn't there.

These are the immediate effects of a price change, but incredibly, price changes also coordinate people's actions across space and time.

Across space, there are different supply and demand conditions in different places, and thus prices are not uniform. We know some places are real "hot spots" for the virus, while others are mostly unaffected. High demand in the hot spots leads to higher prices there, which attracts more of the resource to those areas. Boxes and boxes of essential items would pour in where they are needed most from where they are needed least, but only if prices were allowed to adjust freely.

This would be accomplished by individuals and businesses buying low in the unaffected areas, selling high in the hot spots and subtracting their labor and transportation costs from the difference. Producers of new supply would know exactly where it is most needed and ship to the high-demand, high-price areas first. The effect of these actions is to increase prices in the low demand areas and reduce them in the high demand areas. People in the low demand areas will start to self-ration more, reflecting the reality of their neighbors, and people in the hotspots will get some relief.

However, by artificially suppressing prices in the hot spot, people there will simply buy up the available supply and run out, and it will be cost prohibitive to bring in new supply from low-demand areas.

Prices coordinate economic actions across time as well. Just as entrepreneurs and businesses can profit by transporting scarce necessities from low-demand to high-demand areas, they can also profit by buying in low-demand times and storing their merchandise for when it is needed most.

Just as allowing prices to freely adjust in one area relative to another will send all the right signals for the optimal use of a scarce resource, allowing prices to freely adjust over time will do the same.

When an entrepreneur buys up resources during low-demand times in anticipation of a crisis, she restricts supply ahead of the crisis, which leads to a price increase. She effectively bids up the price. The change in price affects consumers and producers in all the ways mentioned above. Consumers self-ration more, and producers bring more of the resource to market.

Our entrepreneur has done a truly incredible thing. She has predicted the future, and by so doing has caused every individual in the economy to prepare for a shortage they don't even know is coming! And, by discouraging consumption and encouraging production ahead of time, she blunts the impact the crisis will have. There will be more of the resource to go around when it is needed most.

On top of this, our entrepreneur still has her stockpile she saved back when everyone else was blithely using it up. She can now further mitigate the damage of the crisis by selling her stock during the worst of it, when people are most desperate for relief. She will know when this is because the price will tell her, but only if it is allowed to adjust freely. When the price is at its highest is when people need the resource the most, and those willing to pay will not waste it or hoard it. They will put it to its highest valued use.

The economy is like a big bus we are all riding in, going down a road with many twists and turns. Just as it is difficult to see into the future, it is difficult to see out the bus windows at the road ahead.

On the dashboard, we don't have a speedometer or fuel gauge. Instead we have all the prices for everything in the economy. Prices are what tell us the condition of the bus and the road. They tell us everything. Without them, we are blind.

Good times are a smooth road. Consumer prices and interest rates are low, investment returns are steady. We hit the gas and go fast. But, the road is not always straight and smooth. Sometimes there are sharp turns and rough patches. Successful entrepreneurs are the ones who can see what is coming better than everyone else. They are our navigators.

When they buy up scarce resources ahead of a crisis, they are hitting the brakes and slowing us down. When they divert resources from one area to another, they are steering us onto a smoother path. By their actions in the market, they adjust the prices on our dashboard to reflect the conditions of the road ahead, so we can prepare for, navigate and get through the inevitable difficulties we will face.

Interfering with the dashboard by imposing price floors or price caps doesn't change the conditions of the road (the number of toilet paper rolls in existence hasn't changed). All it does is distort our perception of those conditions. We think the road is still smooth--our heavy foot stomping the gas--as we crash onto a rocky dirt road at 80 miles per hour (empty shelves at the store for weeks on end).

Supply, demand and prices are laws of nature. All of this is just how things work. It isn't right or wrong in a moral sense. Price caps lead to waste, shortages and hoarding as surely as water flows downhill. The opposite--allowing prices to adjust freely--leads to conservation of scarce resources and their being put to their highest valued use. And yes, it leads to profits for the entrepreneurs who were able to correctly predict future conditions, and losses for those who weren't.

Is it fair that they should collect these profits? On the one hand, anyone could have stocked up on toilet paper, hand sanitizer and face masks at any time before the crisis, so we all had a fair chance to get the supplies cheaply. On the other hand, it just feels wrong that some should profit so much at a time when there is so much need.

Our instinct in the moment is to see the entrepreneur as a villain, greedy "price gouger". But we don't see the long chain of economic consequences the led to the situation we feel is unfair.

If it weren't for anti-price-gouging laws, the major retailers would have raised their prices long before the crisis became acute. When they saw demand outstrip supply, they would have raised prices, not by 100 fold, but gradually and long before anyone knew how serious things would have become. Late comers would have had to pay more, but at least there would be something left on the shelf.

As an entrepreneur, why take risks trying to anticipate the future if you can't reap the reward when you are right? Instead of letting instead of letting entrepreneurs--our navigators--guide us, we are punishing and vilifying them, trying to force prices to reflect a reality that simply doesn't exist.

In a crisis, more than any other time, prices must be allowed to fluctuate. To do otherwise is to blind ourselves at a time when danger and uncertainty abound. It is economic suicide.

In a crisis, there is great need, and the way to meet that need is not by pretending it's not there, by forcing prices to reflect a world where there isn't need. They way to meet the need is the same it has always been, through charity.

If the people in government want to help, the best way for the to do so is to be charitable and reduce their taxes and fees as much as possible, ideally to zero in a time of crisis. Amazon, for example, could instantly reduce the price of all crisis related necessities by 20% if they waived their fee. This would allow for more uses by more people of these scarce supplies as hoarders release their stockpiles on to the market, knowing they can get 20% more for their stock. Governments could reduce or eliminate their tax burden on high-demand, crisis-related items and all the factors that go into their production, with the same effect: a reduction in prices and expansion of supply. All of us, including the successful entrepreneurs and the wealthy for whom high prices are not a great burden, could donate to relief efforts.

These ideas are not new or untested. This is core micro economics. It has been taught for hundreds of years in universities the world over. The fact that every crisis that comes along stirs up ire against entrepreneurs indicates not that the economics is wrong, but that we have a strong visceral reaction against what we perceive to be unfairness. This is as it should be. Unfairness is wrong and the anger it stirs in us should compel us to right the wrong. Our anger itself isn't wrong, it's just misplaced.

Entrepreneurs didn't cause the prices to rise. Our reaction to a virus did that. We saw a serious threat and an uncertain future and followed our natural impulse to hoard. Because prices at major retail suppliers didn't rise, that impulse ran rampant and we cleared the shelves until there was nothing left. We ran the bus right off the road and them blamed the entrepreneurs for showing us the reality of our situation, for shaking us out of the fantasy of low prices.

All of this is not to say that entrepreneurs are high-minded public servants. They are just doing their job. Staking your money on an uncertain future is a risky business. There are big risks and big rewards. Most entrepreneurs just scrape by or lose their capital in failed ventures.

However, the ones that get it right must be allowed to keep their profits, or else no one will try and we'll all be driving blind. We need our navigators. It doesn't even matter if they know all the positive effects they are having on the rest of us and the economy as a whole. So long as they are buying low and selling high--so long as they are doing their job--they will be guiding the rest of us through the good times and the bad, down the open road and through the rough spots.

-

@ a39d19ec:3d88f61e

2025-03-18 17:16:50

@ a39d19ec:3d88f61e

2025-03-18 17:16:50Nun da das deutsche Bundesregime den Ruin Deutschlands beschlossen hat, der sehr wahrscheinlich mit dem Werkzeug des Geld druckens "finanziert" wird, kamen mir so viele Gedanken zur Geldmengenausweitung, dass ich diese für einmal niedergeschrieben habe.

Die Ausweitung der Geldmenge führt aus klassischer wirtschaftlicher Sicht immer zu Preissteigerungen, weil mehr Geld im Umlauf auf eine begrenzte Menge an Gütern trifft. Dies lässt sich in mehreren Schritten analysieren:

1. Quantitätstheorie des Geldes

Die klassische Gleichung der Quantitätstheorie des Geldes lautet:

M • V = P • Y

wobei:

- M die Geldmenge ist,

- V die Umlaufgeschwindigkeit des Geldes,

- P das Preisniveau,

- Y die reale Wirtschaftsleistung (BIP).Wenn M steigt und V sowie Y konstant bleiben, muss P steigen – also Inflation entstehen.

2. Gütermenge bleibt begrenzt

Die Menge an real produzierten Gütern und Dienstleistungen wächst meist nur langsam im Vergleich zur Ausweitung der Geldmenge. Wenn die Geldmenge schneller steigt als die Produktionsgütermenge, führt dies dazu, dass mehr Geld für die gleiche Menge an Waren zur Verfügung steht – die Preise steigen.

3. Erwartungseffekte und Spekulation

Wenn Unternehmen und Haushalte erwarten, dass mehr Geld im Umlauf ist, da eine zentrale Planung es so wollte, können sie steigende Preise antizipieren. Unternehmen erhöhen ihre Preise vorab, und Arbeitnehmer fordern höhere Löhne. Dies kann eine sich selbst verstärkende Spirale auslösen.

4. Internationale Perspektive

Eine erhöhte Geldmenge kann die Währung abwerten, wenn andere Länder ihre Geldpolitik stabil halten. Eine schwächere Währung macht Importe teurer, was wiederum Preissteigerungen antreibt.

5. Kritik an der reinen Geldmengen-Theorie

Der Vollständigkeit halber muss erwähnt werden, dass die meisten modernen Ökonomen im Staatsauftrag argumentieren, dass Inflation nicht nur von der Geldmenge abhängt, sondern auch von der Nachfrage nach Geld (z. B. in einer Wirtschaftskrise). Dennoch zeigt die historische Erfahrung, dass eine unkontrollierte Geldmengenausweitung langfristig immer zu Preissteigerungen führt, wie etwa in der Hyperinflation der Weimarer Republik oder in Simbabwe.

-

@ 21335073:a244b1ad

2025-05-01 01:51:10

@ 21335073:a244b1ad

2025-05-01 01:51:10Please respect Virginia Giuffre’s memory by refraining from asking about the circumstances or theories surrounding her passing.

Since Virginia Giuffre’s death, I’ve reflected on what she would want me to say or do. This piece is my attempt to honor her legacy.

When I first spoke with Virginia, I was struck by her unshakable hope. I had grown cynical after years in the anti-human trafficking movement, worn down by a broken system and a government that often seemed complicit. But Virginia’s passion, creativity, and belief that survivors could be heard reignited something in me. She reminded me of my younger, more hopeful self. Instead of warning her about the challenges ahead, I let her dream big, unburdened by my own disillusionment. That conversation changed me for the better, and following her lead led to meaningful progress.

Virginia was one of the bravest people I’ve ever known. As a survivor of Epstein, Maxwell, and their co-conspirators, she risked everything to speak out, taking on some of the world’s most powerful figures.

She loved when I said, “Epstein isn’t the only Epstein.” This wasn’t just about one man—it was a call to hold all abusers accountable and to ensure survivors find hope and healing.

The Epstein case often gets reduced to sensational details about the elite, but that misses the bigger picture. Yes, we should be holding all of the co-conspirators accountable, we must listen to the survivors’ stories. Their experiences reveal how predators exploit vulnerabilities, offering lessons to prevent future victims.

You’re not powerless in this fight. Educate yourself about trafficking and abuse—online and offline—and take steps to protect those around you. Supporting survivors starts with small, meaningful actions. Free online resources can guide you in being a safe, supportive presence.

When high-profile accusations arise, resist snap judgments. Instead of dismissing survivors as “crazy,” pause to consider the trauma they may be navigating. Speaking out or coping with abuse is never easy. You don’t have to believe every claim, but you can refrain from attacking accusers online.

Society also fails at providing aftercare for survivors. The government, often part of the problem, won’t solve this. It’s up to us. Prevention is critical, but when abuse occurs, step up for your loved ones and community. Protect the vulnerable. it’s a challenging but a rewarding journey.

If you’re contributing to Nostr, you’re helping build a censorship resistant platform where survivors can share their stories freely, no matter how powerful their abusers are. Their voices can endure here, offering strength and hope to others. This gives me great hope for the future.

Virginia Giuffre’s courage was a gift to the world. It was an honor to know and serve her. She will be deeply missed. My hope is that her story inspires others to take on the powerful.

-

@ 5d4b6c8d:8a1c1ee3

2025-05-08 22:57:55

@ 5d4b6c8d:8a1c1ee3

2025-05-08 22:57:55Using a discussion style post to get better formatting on the nostr cross-post.

The Fed Leaves Fed Funds Rate at 4.5% as Economic Storm Clouds Gather

by Ryan McMaken

The Fed is now hemmed in by a rising risk of stagflation. It doesn’t know where the economy is headed, or is unwilling to take a position. At this point, “hope for the best” is Fed policy.

originally posted at https://stacker.news/items/975059

-

@ c9badfea:610f861a

2025-05-09 15:32:59

@ c9badfea:610f861a

2025-05-09 15:32:59- Install Feeder (it's free and open source)

- Discover RSS feeds from various sources (see links below)

- Copy the Feed URL

- Open Feeder, tap the ⁞ icon, and choose Add Feed

- Paste the Feed URL and tap Search

- Select the found RSS feed item

- Scroll down and tap OK

Some Sources

ℹ️ You can also use YouTube channel URLs as feeds

-

@ 0d97beae:c5274a14

2025-01-11 16:52:08

@ 0d97beae:c5274a14

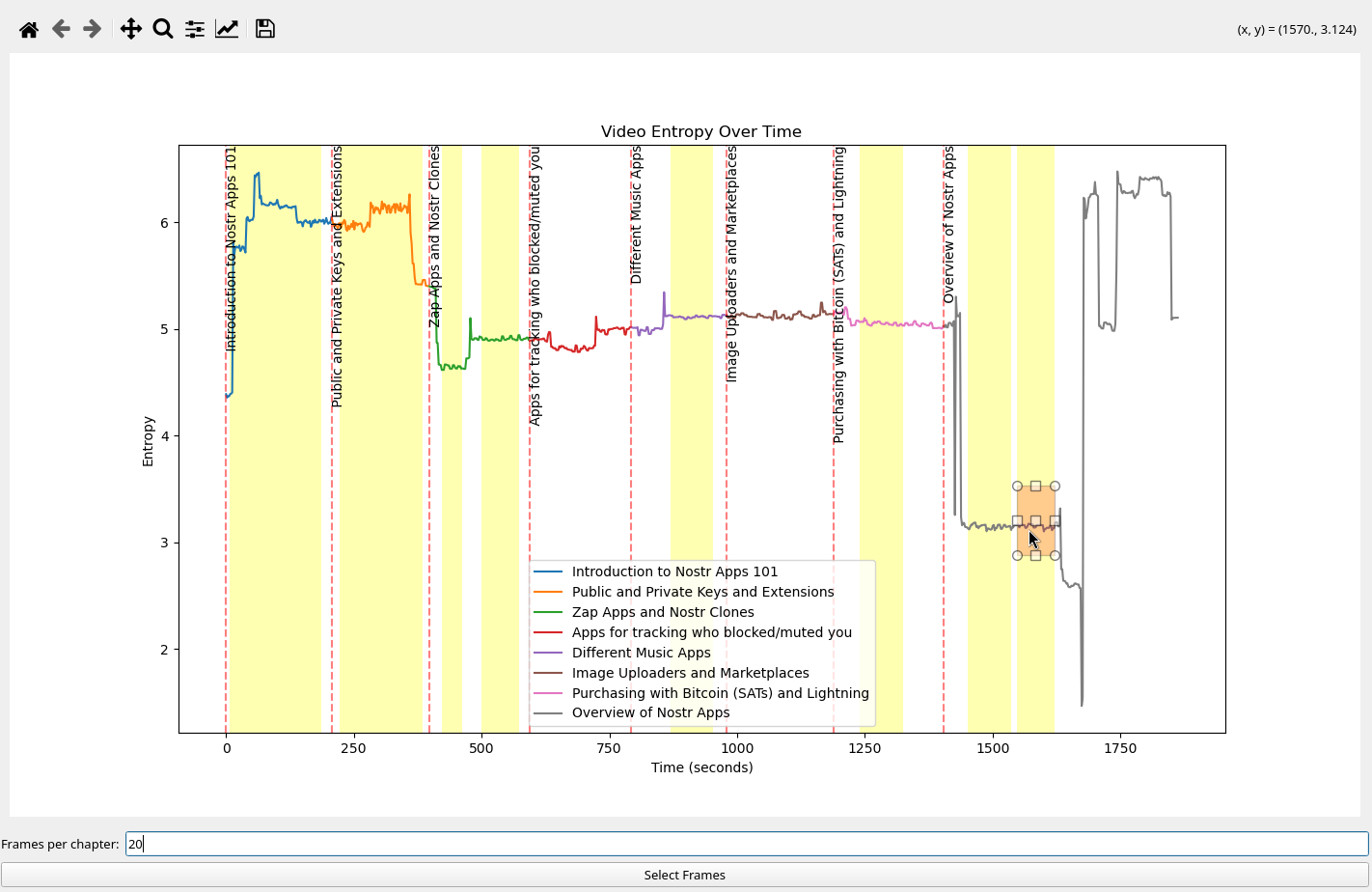

2025-01-11 16:52:08This article hopes to complement the article by Lyn Alden on YouTube: https://www.youtube.com/watch?v=jk_HWmmwiAs

The reason why we have broken money

Before the invention of key technologies such as the printing press and electronic communications, even such as those as early as morse code transmitters, gold had won the competition for best medium of money around the world.

In fact, it was not just gold by itself that became money, rulers and world leaders developed coins in order to help the economy grow. Gold nuggets were not as easy to transact with as coins with specific imprints and denominated sizes.

However, these modern technologies created massive efficiencies that allowed us to communicate and perform services more efficiently and much faster, yet the medium of money could not benefit from these advancements. Gold was heavy, slow and expensive to move globally, even though requesting and performing services globally did not have this limitation anymore.

Banks took initiative and created derivatives of gold: paper and electronic money; these new currencies allowed the economy to continue to grow and evolve, but it was not without its dark side. Today, no currency is denominated in gold at all, money is backed by nothing and its inherent value, the paper it is printed on, is worthless too.

Banks and governments eventually transitioned from a money derivative to a system of debt that could be co-opted and controlled for political and personal reasons. Our money today is broken and is the cause of more expensive, poorer quality goods in the economy, a larger and ever growing wealth gap, and many of the follow-on problems that have come with it.

Bitcoin overcomes the "transfer of hard money" problem

Just like gold coins were created by man, Bitcoin too is a technology created by man. Bitcoin, however is a much more profound invention, possibly more of a discovery than an invention in fact. Bitcoin has proven to be unbreakable, incorruptible and has upheld its ability to keep its units scarce, inalienable and counterfeit proof through the nature of its own design.

Since Bitcoin is a digital technology, it can be transferred across international borders almost as quickly as information itself. It therefore severely reduces the need for a derivative to be used to represent money to facilitate digital trade. This means that as the currency we use today continues to fare poorly for many people, bitcoin will continue to stand out as hard money, that just so happens to work as well, functionally, along side it.

Bitcoin will also always be available to anyone who wishes to earn it directly; even China is unable to restrict its citizens from accessing it. The dollar has traditionally become the currency for people who discover that their local currency is unsustainable. Even when the dollar has become illegal to use, it is simply used privately and unofficially. However, because bitcoin does not require you to trade it at a bank in order to use it across borders and across the web, Bitcoin will continue to be a viable escape hatch until we one day hit some critical mass where the world has simply adopted Bitcoin globally and everyone else must adopt it to survive.

Bitcoin has not yet proven that it can support the world at scale. However it can only be tested through real adoption, and just as gold coins were developed to help gold scale, tools will be developed to help overcome problems as they arise; ideally without the need for another derivative, but if necessary, hopefully with one that is more neutral and less corruptible than the derivatives used to represent gold.

Bitcoin blurs the line between commodity and technology

Bitcoin is a technology, it is a tool that requires human involvement to function, however it surprisingly does not allow for any concentration of power. Anyone can help to facilitate Bitcoin's operations, but no one can take control of its behaviour, its reach, or its prioritisation, as it operates autonomously based on a pre-determined, neutral set of rules.

At the same time, its built-in incentive mechanism ensures that people do not have to operate bitcoin out of the good of their heart. Even though the system cannot be co-opted holistically, It will not stop operating while there are people motivated to trade their time and resources to keep it running and earn from others' transaction fees. Although it requires humans to operate it, it remains both neutral and sustainable.

Never before have we developed or discovered a technology that could not be co-opted and used by one person or faction against another. Due to this nature, Bitcoin's units are often described as a commodity; they cannot be usurped or virtually cloned, and they cannot be affected by political biases.

The dangers of derivatives

A derivative is something created, designed or developed to represent another thing in order to solve a particular complication or problem. For example, paper and electronic money was once a derivative of gold.

In the case of Bitcoin, if you cannot link your units of bitcoin to an "address" that you personally hold a cryptographically secure key to, then you very likely have a derivative of bitcoin, not bitcoin itself. If you buy bitcoin on an online exchange and do not withdraw the bitcoin to a wallet that you control, then you legally own an electronic derivative of bitcoin.

Bitcoin is a new technology. It will have a learning curve and it will take time for humanity to learn how to comprehend, authenticate and take control of bitcoin collectively. Having said that, many people all over the world are already using and relying on Bitcoin natively. For many, it will require for people to find the need or a desire for a neutral money like bitcoin, and to have been burned by derivatives of it, before they start to understand the difference between the two. Eventually, it will become an essential part of what we regard as common sense.

Learn for yourself

If you wish to learn more about how to handle bitcoin and avoid derivatives, you can start by searching online for tutorials about "Bitcoin self custody".

There are many options available, some more practical for you, and some more practical for others. Don't spend too much time trying to find the perfect solution; practice and learn. You may make mistakes along the way, so be careful not to experiment with large amounts of your bitcoin as you explore new ideas and technologies along the way. This is similar to learning anything, like riding a bicycle; you are sure to fall a few times, scuff the frame, so don't buy a high performance racing bike while you're still learning to balance.

-

@ fe820c8e:d2d76f04

2025-05-09 14:33:55

@ fe820c8e:d2d76f04

2025-05-09 14:33:55Nostr Demo

This is your course initialization stub.

Please see the Docs to find out what is possible in LiaScript.

If you want to use instant help in your Atom IDE, please type lia to see all available shortcuts.

Markdown

You can use common Markdown syntax to create your course, such as:

- Lists

-

ordered or

-

unordered

- ones ...

| Header 1 | Header 2 | | :--------- | :--------- | | Item 1 | Item 2 |

Images:

Extensions

--{{0}}--But you can also include other features such as spoken text.

--{{1}}--Insert any kind of audio file:

{{1}}--{{2}}--Even videos or change the language completely.

{{2-3}}!?video

--{{3 Russian Female}}--Первоначально создан в 2004 году Джоном Грубером (англ. John Gruber) и Аароном Шварцем. Многие идеи языка были позаимствованы из существующих соглашений по разметке текста в электронных письмах...

{{3}}Type "voice" to see a list of all available languages.

Styling

The whole text-block should appear in purple color and with a wobbling effect. Which is a bad example, please use it with caution ... ~~ only this is red ;-) ~~

Charts

Use ASCII-Art to draw diagrams:

Multiline 1.9 | DOTS | *** y | * * - | r r r r r r r*r r r r*r r r r r r r a | * * x | * * i | B B B B B * B B B B B B * B B B B B s | * * | * * * * * * -1 +------------------------------------ 0 x-axis 1Quizzes

A Textquiz

What did the fish say when he hit a concrete wall?

[[dam]]Multiple Choice

Just add as many points as you wish:

[[X]] Only the **X** marks the correct point. [[ ]] Empty ones are wrong. [[X]] ...Single Choice

Just add as many points as you wish:

[( )] ... [(X)] <-- Only the **X** is allowed. [( )] ...Executable Code

A drawing example, for demonstrating that any JavaScript library can be used, also for drawing.

```javascript // Initialize a Line chart in the container with the ID chart1 new Chartist.Line('#chart1', { labels: [1, 2, 3, 4], series: [[100, 120, 180, 200]] });

// Initialize a Line chart in the container with the ID chart2 new Chartist.Bar('#chart2', { labels: [1, 2, 3, 4], series: [[5, 2, 8, 3]] }); ```

Projects

You can make your code executable and define projects:

``` js -EvalScript.js let who = data.first_name + " " + data.last_name;

if(data.online) { who + " is online"; } else { who + " is NOT online"; }

json +Data.json { "first_name" : "Sammy", "last_name" : "Shark", "online" : true } ```More

Find out what you can even do more with quizzes:

https://liascript.github.io/course/?https://raw.githubusercontent.com/liaScript/docs/master/README.md

-

@ 37fe9853:bcd1b039

2025-01-11 15:04:40

@ 37fe9853:bcd1b039

2025-01-11 15:04:40yoyoaa

-

@ 62033ff8:e4471203

2025-01-11 15:00:24

@ 62033ff8:e4471203

2025-01-11 15:00:24收录的内容中 kind=1的部分,实话说 质量不高。 所以我增加了kind=30023 长文的article,但是更新的太少,多个relays 的服务器也没有多少长文。

所有搜索nostr如果需要产生价值,需要有高质量的文章和新闻。 而且现在有很多机器人的文章充满着浪费空间的作用,其他作用都用不上。

https://www.duozhutuan.com 目前放的是给搜索引擎提供搜索的原材料。没有做UI给人类浏览。所以看上去是粗糙的。 我并没有打算去做一个发microblog的 web客户端,那类的客户端太多了。

我觉得nostr社区需要解决的还是应用。如果仅仅是microblog 感觉有点够呛

幸运的是npub.pro 建站这样的,我觉得有点意思。

yakihonne 智能widget 也有意思

我做的TaskQ5 我自己在用了。分布式的任务系统,也挺好的。

-

@ a008def1:57a3564d

2025-04-30 17:52:11

@ a008def1:57a3564d

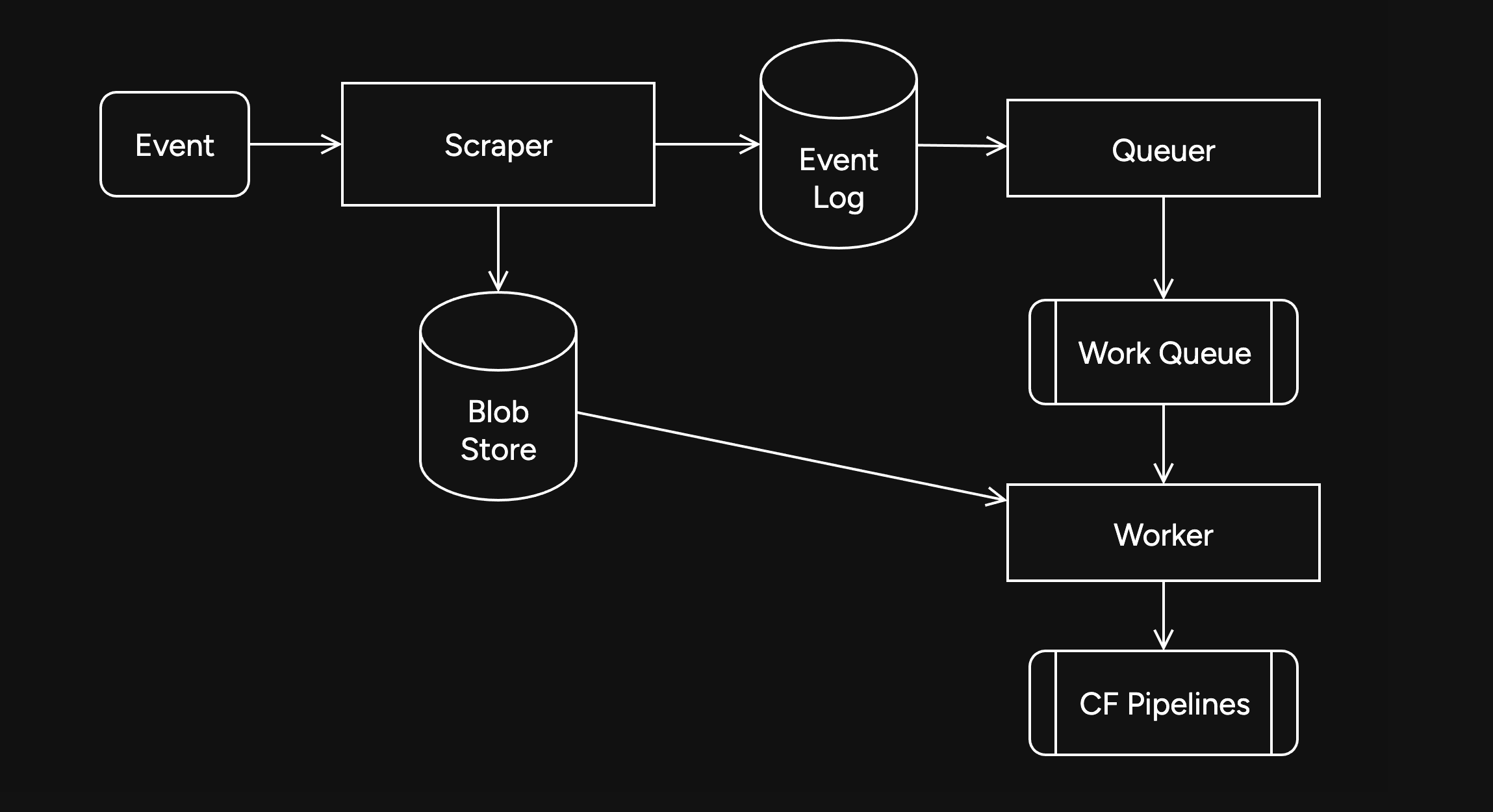

2025-04-30 17:52:11A Vision for #GitViaNostr

Git has long been the standard for version control in software development, but over time, we has lost its distributed nature. Originally, Git used open, permissionless email for collaboration, which worked well at scale. However, the rise of GitHub and its centralized pull request (PR) model has shifted the landscape.

Now, we have the opportunity to revive Git's permissionless and distributed nature through Nostr!

We’ve developed tools to facilitate Git collaboration via Nostr, but there are still significant friction that prevents widespread adoption. This article outlines a vision for how we can reduce those barriers and encourage more repositories to embrace this approach.

First, we’ll review our progress so far. Then, we’ll propose a guiding philosophy for our next steps. Finally, we’ll discuss a vision to tackle specific challenges, mainly relating to the role of the Git server and CI/CD.

I am the lead maintainer of ngit and gitworkshop.dev, and I’ve been fortunate to work full-time on this initiative for the past two years, thanks to an OpenSats grant.

How Far We’ve Come

The aim of #GitViaNostr is to liberate discussions around code collaboration from permissioned walled gardens. At the core of this collaboration is the process of proposing and applying changes. That's what we focused on first.

Since Nostr shares characteristics with email, and with NIP34, we’ve adopted similar primitives to those used in the patches-over-email workflow. This is because of their simplicity and that they don’t require contributors to host anything, which adds reliability and makes participation more accessible.

However, the fork-branch-PR-merge workflow is the only model many developers have known, and changing established workflows can be challenging. To address this, we developed a new workflow that balances familiarity, user experience, and alignment with the Nostr protocol: the branch-PR-merge model.

This model is implemented in ngit, which includes a Git plugin that allows users to engage without needing to learn new commands. Additionally, gitworkshop.dev offers a GitHub-like interface for interacting with PRs and issues. We encourage you to try them out using the quick start guide and share your feedback. You can also explore PRs and issues with gitplaza.

For those who prefer the patches-over-email workflow, you can still use that approach with Nostr through gitstr or the

ngit sendandngit listcommands, and explore patches with patch34.The tools are now available to support the core collaboration challenge, but we are still at the beginning of the adoption curve.

Before we dive into the challenges—such as why the Git server setup can be jarring and the possibilities surrounding CI/CD—let’s take a moment to reflect on how we should approach the challenges ahead of us.

Philosophy

Here are some foundational principles I shared a few years ago:

- Let Git be Git

- Let Nostr be Nostr

- Learn from the successes of others

I’d like to add one more:

- Embrace anarchy and resist monolithic development.

Micro Clients FTW

Nostr celebrates simplicity, and we should strive to maintain that. Monolithic developments often lead to unnecessary complexity. Projects like gitworkshop.dev, which aim to cover various aspects of the code collaboration experience, should not stifle innovation.

Just yesterday, the launch of following.space demonstrated how vibe-coded micro clients can make a significant impact. They can be valuable on their own, shape the ecosystem, and help push large and widely used clients to implement features and ideas.

The primitives in NIP34 are straightforward, and if there are any barriers preventing the vibe-coding of a #GitViaNostr app in an afternoon, we should work to eliminate them.

Micro clients should lead the way and explore new workflows, experiences, and models of thinking.

Take kanbanstr.com. It provides excellent project management and organization features that work seamlessly with NIP34 primitives.

From kanban to code snippets, from CI/CD runners to SatShoot—may a thousand flowers bloom, and a thousand more after them.

Friction and Challenges

The Git Server

In #GitViaNostr, maintainers' branches (e.g.,

master) are hosted on a Git server. Here’s why this approach is beneficial:- Follows the original Git vision and the "let Git be Git" philosophy.

- Super efficient, battle-tested, and compatible with all the ways people use Git (e.g., LFS, shallow cloning).

- Maintains compatibility with related systems without the need for plugins (e.g., for build and deployment).

- Only repository maintainers need write access.

In the original Git model, all users would need to add the Git server as a 'git remote.' However, with ngit, the Git server is hidden behind a Nostr remote, which enables:

- Hiding complexity from contributors and users, so that only maintainers need to know about the Git server component to start using #GitViaNostr.

- Maintainers can easily swap Git servers by updating their announcement event, allowing contributors/users using ngit to automatically switch to the new one.

Challenges with the Git Server

While the Git server model has its advantages, it also presents several challenges:

- Initial Setup: When creating a new repository, maintainers must select a Git server, which can be a jarring experience. Most options come with bloated social collaboration features tied to a centralized PR model, often difficult or impossible to disable.

-

Manual Configuration: New repositories require manual configuration, including adding new maintainers through a browser UI, which can be cumbersome and time-consuming.

-

User Onboarding: Many Git servers require email sign-up or KYC (Know Your Customer) processes, which can be a significant turn-off for new users exploring a decentralized and permissionless alternative to GitHub.

Once the initial setup is complete, the system works well if a reliable Git server is chosen. However, this is a significant "if," as we have become accustomed to the excellent uptime and reliability of GitHub. Even professionally run alternatives like Codeberg can experience downtime, which is frustrating when CI/CD and deployment processes are affected. This problem is exacerbated when self-hosting.

Currently, most repositories on Nostr rely on GitHub as the Git server. While maintainers can change servers without disrupting their contributors, this reliance on a centralized service is not the decentralized dream we aspire to achieve.

Vision for the Git Server

The goal is to transform the Git server from a single point of truth and failure into a component similar to a Nostr relay.

Functionality Already in ngit to Support This

-

State on Nostr: Store the state of branches and tags in a Nostr event, removing reliance on a single server. This validates that the data received has been signed by the maintainer, significantly reducing the trust requirement.

-

Proxy to Multiple Git Servers: Proxy requests to all servers listed in the announcement event, adding redundancy and eliminating the need for any one server to match GitHub's reliability.

Implementation Requirements

To achieve this vision, the Nostr Git server implementation should:

-

Implement the Git Smart HTTP Protocol without authentication (no SSH) and only accept pushes if the reference tip matches the latest state event.

-

Avoid Bloat: There should be no user authentication, no database, no web UI, and no unnecessary features.

-

Automatic Repository Management: Accept or reject new repositories automatically upon the first push based on the content of the repository announcement event referenced in the URL path and its author.

Just as there are many free, paid, and self-hosted relays, there will be a variety of free, zero-step signup options, as well as self-hosted and paid solutions.

Some servers may use a Web of Trust (WoT) to filter out spam, while others might impose bandwidth or repository size limits for free tiers or whitelist specific npubs.

Additionally, some implementations could bundle relay and blossom server functionalities to unify the provision of repository data into a single service. These would likely only accept content related to the stored repositories rather than general social nostr content.

The potential role of CI / CD via nostr DVMs could create the incentives for a market of highly reliable free at the point of use git servers.

This could make onboarding #GitViaNostr repositories as easy as entering a name and selecting from a multi-select list of Git server providers that announce via NIP89.

!(image)[https://image.nostr.build/badedc822995eb18b6d3c4bff0743b12b2e5ac018845ba498ce4aab0727caf6c.jpg]

Git Client in the Browser

Currently, many tasks are performed on a Git server web UI, such as:

- Browsing code, commits, branches, tags, etc.

- Creating and displaying permalinks to specific lines in commits.

- Merging PRs.

- Making small commits and PRs on-the-fly.

Just as nobody goes to the web UI of a relay (e.g., nos.lol) to interact with notes, nobody should need to go to a Git server to interact with repositories. We use the Nostr protocol to interact with Nostr relays, and we should use the Git protocol to interact with Git servers. This situation has evolved due to the centralization of Git servers. Instead of being restricted to the view and experience designed by the server operator, users should be able to choose the user experience that works best for them from a range of clients. To facilitate this, we need a library that lowers the barrier to entry for creating these experiences. This library should not require a full clone of every repository and should not depend on proprietary APIs. As a starting point, I propose wrapping the WASM-compiled gitlib2 library for the web and creating useful functions, such as showing a file, which utilizes clever flags to minimize bandwidth usage (e.g., shallow clone, noblob, etc.).

This approach would not only enhance clients like gitworkshop.dev but also bring forth a vision where Git servers simply run the Git protocol, making vibe coding Git experiences even better.

song

nostr:npub180cvv07tjdrrgpa0j7j7tmnyl2yr6yr7l8j4s3evf6u64th6gkwsyjh6w6 created song with a complementary vision that has shaped how I see the role of the git server. Its a self-hosted, nostr-permissioned git server with a relay baked in. Its currently a WIP and there are some compatability with ngit that we need to work out.

We collaborated on the nostr-permissioning approach now reflected in nip34.

I'm really excited to see how this space evolves.

CI/CD

Most projects require CI/CD, and while this is often bundled with Git hosting solutions, it is currently not smoothly integrated into #GitViaNostr yet. There are many loosely coupled options, such as Jenkins, Travis, CircleCI, etc., that could be integrated with Nostr.

However, the more exciting prospect is to use DVMs (Data Vending Machines).

DVMs for CI/CD

Nostr Data Vending Machines (DVMs) can provide a marketplace of CI/CD task runners with Cashu for micro payments.

There are various trust levels in CI/CD tasks:

- Tasks with no secrets eg. tests.

- Tasks using updatable secrets eg. API keys.

- Unverifiable builds and steps that sign with Android, Nostr, or PGP keys.

DVMs allow tasks to be kicked off with specific providers using a Cashu token as payment.

It might be suitable for some high-compute and easily verifiable tasks to be run by the cheapest available providers. Medium trust tasks could be run by providers with a good reputation, while high trust tasks could be run on self-hosted runners.

Job requests, status, and results all get published to Nostr for display in Git-focused Nostr clients.

Jobs could be triggered manually, or self-hosted runners could be configured to watch a Nostr repository and kick off jobs using their own runners without payment.

But I'm most excited about the prospect of Watcher Agents.

CI/CD Watcher Agents